By: Brij B. Gupta, Department of Computer Science and Information Engineering, Asia University, Taichung 413, Taiwan.

Edge AI and TinyML represent transformative paradigms in the field of artificial intelligence, emphasizing the processing of data directly on resource-constrained devices rather than relying on centralized cloud computing. Edge AI refers to the deployment of AI algorithms on devices such as smartphones, IoT devices, and embedded systems, allowing for real-time data processing that minimizes latency and bandwidth usage. TinyML, a subset of Edge AI, focuses specifically on lightweight machine learning models that can operate efficiently on low-power hardware, including microcontrollers and sensors.[1][2]

These technologies are notable for their wide-ranging applications across various sectors, including healthcare, agriculture, home automation, and retail. In healthcare, for instance, Edge AI facilitates rapid diagnosis and patient monitoring through wearable devices, significantly enhancing patient care and safety. Similarly, TinyML empowers farmers to monitor crops and livestock in real time, promoting data-driven decision-making for improved sustainability.[3][4][5][6] As the demand for efficient and responsive systems grows, the integration of Edge AI and TinyML is poised to redefine operational standards across industries.[7][8]

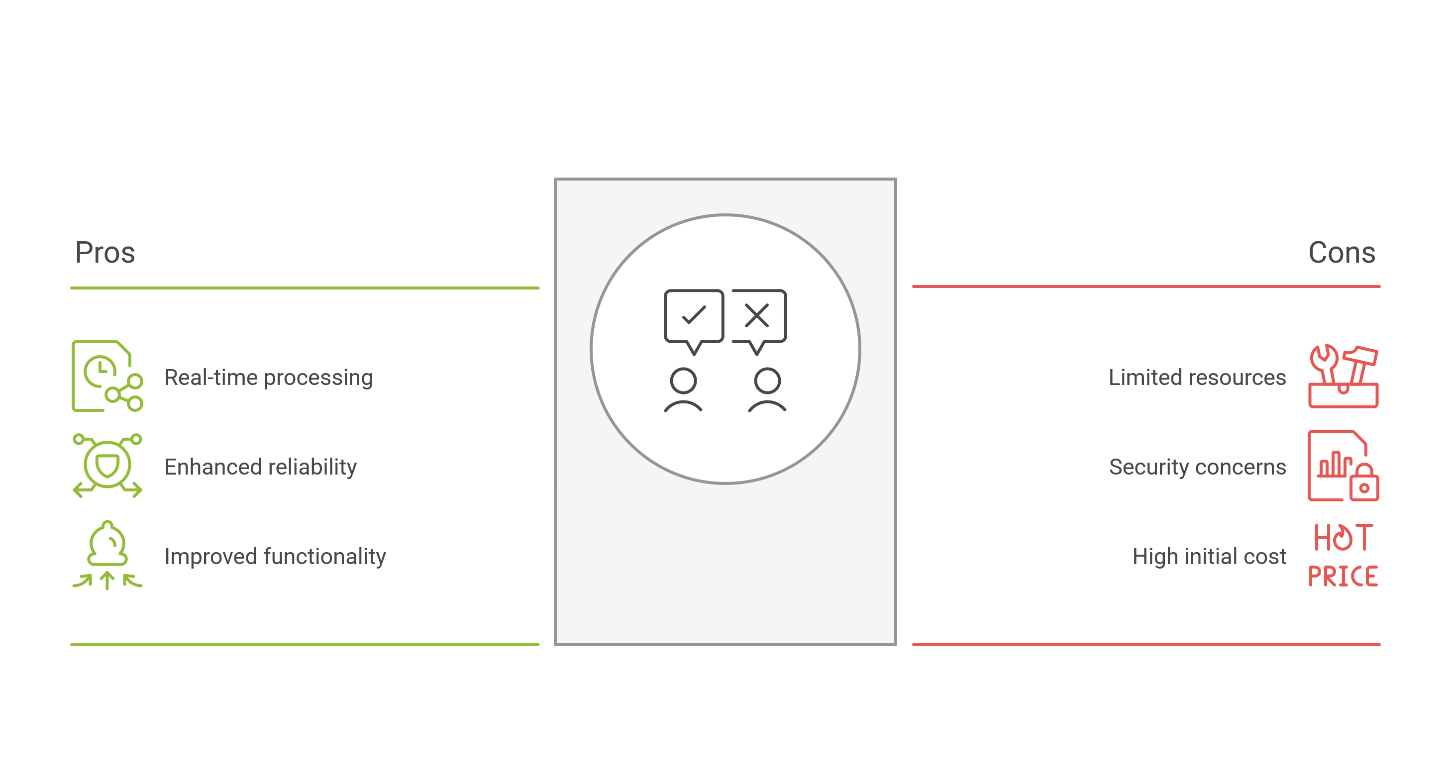

Despite their potential, the deployment of Edge AI and TinyML is not without challenges. Issues related to computational resource constraints, power efficiency, data privacy, and security persist, necessitating innovative solutions to ensure robust and secure implementations. Additionally, the complexity involved in developing models suitable for edge environments highlights the need for specialized expertise in both machine learning and embedded systems, further complicating widespread adoption.[9][10][11]

Looking ahead, the TinyML market is projected to expand significantly, with estimates suggesting a rise from 15.2 million deployments in 2020 to 2.5 billion by 2030, driven by advances in hardware and growing developer engagement.[8] Concurrently, trends indicate an increasing focus on Generative Edge AI, with expectations that it will enhance human-machine interaction and unlock new use cases. As these technologies continue to evolve, ethical considerations and inclusivity in AI governance will be essential to ensure equitable access and avoid exacerbating existing inequalities in technology utilization.[12][13][14]

Historical Background

The origins of artificial intelligence (AI) can be traced back to a 1955 proposal by pioneers John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon for a summer research conference, held at Dartmouth College in 1956, to explore the potential of programming computers to emulate higher cognitive functions of the human brain. McCarthy later defined AI as “the science and engineering of making intelligent machines, especially intelligent computer programs,” a definition that emphasizes the broad nature of a field that seeks to enable machines to achieve goals through computational decision-making[1][2].

Throughout the decades, AI has undergone significant transformations, evolving from theoretical concepts to practical applications. In 1959, AI researcher Arthur Samuel coined the term “machine learning” to describe programs capable of learning from experience. This marked a pivotal moment in the field, laying the groundwork for the development of algorithms that would enable machines to improve their performance over time through data[2].

As technology progressed, traditional machine learning relied heavily on centralized servers to process vast amounts of data sourced from the internet. However, the advent of edge computing shifted this paradigm, allowing for data processing closer to the source—namely, on devices like smartphones and embedded systems. This approach not only reduced latency and bandwidth usage but also enhanced the speed and efficiency of data processing, which became particularly beneficial in time-sensitive sectors such as healthcare[3][4].

In recent years, the rise of Tiny Machine Learning (TinyML) has further revolutionized the field by enabling the deployment of lightweight machine learning models on low-power devices, such as microcontrollers and sensors. This trend signifies a move towards more efficient and accessible AI solutions that can operate in real time without the need for continuous internet connectivity, thus addressing some of the limitations associated with traditional AI implementations[7][8].

Core Concepts

Overview of Edge AI and TinyML

Edge AI refers to the deployment of artificial intelligence (AI) algorithms directly on edge devices, such as smartphones, IoT devices, and embedded systems, allowing for data processing closer to the source of generation. This approach minimizes the reliance on centralized cloud computing, thereby reducing latency and bandwidth usage while enhancing the efficiency and speed of data processing[3][2]. TinyML, a subset of Edge AI, specifically focuses on machine learning (ML) algorithms that can run on resource-constrained devices, such as microcontrollers[9].

Key Benefits of Edge AI

One of the primary advantages of Edge AI is the ability to conduct real-time data processing without the delays associated with cloud computing. This is especially crucial in sectors like healthcare, where rapid analysis of patient data can significantly impact decision-making and patient outcomes[3]. Edge AI enables devices to respond instantly to local data, enhancing the overall functionality and reliability of systems, particularly in critical applications[2].

Generative Edge AI

Generative Edge AI represents a growing field that utilizes generative models to create new data or insights from existing information, often tailored for specific tasks or environments. The Generative Edge AI Working Group plays a pivotal role in this area by fostering a community of collaboration among academia, industry, and individual contributors. This group focuses on knowledge sharing, networking, and engaging activities aimed at promoting innovation and practical deployment of generative AI solutions at the edge[9].

Educational Initiatives and Community Engagement

To support the development of Edge AI and TinyML, various educational resources and community activities are being established. These include tutorials, webinars, roundtable discussions, and whitepapers that cover foundational and advanced topics in the field. Such initiatives are designed to facilitate knowledge exchange and collaboration, creating a vibrant ecosystem that bridges the gap between theoretical research and practical applications[9][15].

Integration with Existing Infrastructure

The integration of Edge AI solutions into existing infrastructure is essential for organizations looking to leverage its benefits. Platforms like Red Hat’s OpenShift AI enable the use of multiple AI/ML frameworks, allowing companies to adapt their edge AI strategies according to evolving needs while maintaining a robust and reliable deployment environment[16]. This flexibility supports the seamless incorporation of Edge AI capabilities into various sectors, including healthcare, manufacturing, and energy management[4][17].

Applications

Edge AI and TinyML have opened new avenues for a range of applications across various sectors, leveraging real-time data processing capabilities to enhance efficiency and responsiveness.

Healthcare

In healthcare, edge AI plays a crucial role in facilitating rapid diagnosis and improving patient care. Wearable devices powered by edge AI continuously monitor vital signs such as heart rate, blood pressure, glucose levels, and respiration. These devices can also detect sudden falls and alert caregivers promptly[5][6]. Furthermore, in emergency medical situations, edge AI allows paramedics to analyze data from health monitoring devices on-site, providing essential insights to doctors before the patient arrives at the hospital[6]. This capability fosters timely decision-making and interventions, thus enhancing overall patient safety and health outcomes[18].
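The sketch below shows how a wearable might flag a sudden fall entirely on-device. It uses a simple and commonly described heuristic: a brief dip toward free fall in total acceleration, followed shortly by an impact spike. The thresholds (0.4 g and 2.5 g), the 25-sample window, and the function names are hypothetical values chosen for illustration. They are not clinically validated parameters or the method used by any particular product.

```python
import math
from typing import Sequence, Tuple

# Hypothetical thresholds for illustration only; real devices tune these
# (or replace the rule with a trained TinyML classifier) on labeled data.
FREE_FALL_G = 0.4      # total acceleration below this suggests free fall
IMPACT_G = 2.5         # total acceleration above this suggests an impact
MAX_GAP_SAMPLES = 25   # impact must follow free fall within this many samples


def magnitude(sample: Tuple[float, float, float]) -> float:
    """Euclidean norm of a 3-axis accelerometer sample, in g."""
    x, y, z = sample
    return math.sqrt(x * x + y * y + z * z)


def detect_fall(samples: Sequence[Tuple[float, float, float]]) -> bool:
    """Return True if a free-fall dip is followed closely by an impact spike."""
    last_free_fall = None
    for i, s in enumerate(samples):
        m = magnitude(s)
        if m < FREE_FALL_G:
            last_free_fall = i
        elif m > IMPACT_G and last_free_fall is not None:
            if i - last_free_fall <= MAX_GAP_SAMPLES:
                return True
    return False


# Usage: resting (about 1 g), a brief free fall, then an impact.
stream = [(0.0, 0.0, 1.0)] * 10 + [(0.0, 0.0, 0.1)] * 5 + [(0.5, 0.3, 3.0)]
print(detect_fall(stream))  # True
```

Because the rule needs only a few comparisons per sample and a single integer of state, it fits comfortably on a microcontroller. Raw motion data never has to leave the device; only the alert does.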

Agriculture

In agriculture, TinyML devices enable real-time monitoring of crops and livestock, facilitating data-driven decisions that enhance productivity and sustainability[19]. This application of edge AI ensures that farmers can respond swiftly to changing conditions, optimizing resource use and improving yields.

Home Automation

Smart homes have significantly benefited from the integration of edge AI, enabling immediate feedback and local processing for applications such as security systems, lighting, and environmental controls. By processing data on-site, smart homes can operate independently without relying on internet connectivity, which enhances privacy and reduces the risk of unauthorized access to personal data[6]. Techniques such as human pose estimation and hand gesture detection empower users to control various systems within the home, providing an intuitive and user-friendly experience[5].

Retail

Retail environments have also embraced edge AI to optimize operations. For instance, retailers utilize edge AI to address challenges like inventory management, shrinkage, and stockouts, leading to improved efficiency[18]. The technology supports the creation of frictionless in-store experiences while prioritizing the health and safety of employees and customers. Notably, companies like D.Phone have deployed in-store Wi-Fi integrated with edge AI to enhance customer engagement and differentiate their offerings in a competitive market[18].

Smart Buildings

The rise of smart buildings illustrates the transformative potential of edge AI when integrated with sustainable architecture. By acting as centralized intelligence hubs, these buildings utilize data from various IoT devices to monitor and optimize functions such as lighting, heating, ventilation, security, and energy consumption[18]. This real-time analysis allows for personalized occupant comfort and streamlined building maintenance, contributing to both energy efficiency and user satisfaction.

Technical Aspects

Embedded Technology and TinyML

Embedded technology, particularly through the use of TinyML applications, revolutionizes traditional manufacturing processes by enhancing efficiency and quality control. Historically, manufacturers depended on labor-intensive, manual quality control, which was prone to human error. TinyML, combined with advanced sensors, enables real-time monitoring and optimization of various processes. This integration allows for proactive maintenance by monitoring parameters like temperature, pressure, and vibration, ultimately preventing equipment failures and improving product quality and reliability[20][8].
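Proactive maintenance based on parameters such as vibration can start from a very small on-device check. The sketch below keeps running statistics of a sensor reading using Welford's online algorithm. It flags any reading more than `k` standard deviations from the mean seen so far. This is a simple statistical baseline swapped in for illustration, not a trained TinyML model. The class name, the 30-sample warm-up, and `k = 4` are hypothetical choices.

```python
import math


class VibrationMonitor:
    """Streaming anomaly check using Welford's online mean/variance.

    Hypothetical example: after a warm-up period, flags a reading whose
    z-score against all readings seen so far exceeds `k`.
    """

    def __init__(self, k: float = 4.0, warmup: int = 30) -> None:
        self.k = k
        self.warmup = warmup
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> bool:
        """Return True if x looks anomalous, then fold x into the statistics."""
        anomalous = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))  # sample standard deviation
            if std > 0 and abs(x - self.mean) / std > self.k:
                anomalous = True
        # Welford update: O(1) memory, suitable for a microcontroller loop.
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        return anomalous


# Usage: a steady baseline, then a sudden spike in vibration amplitude.
monitor = VibrationMonitor()
baseline_flags = [monitor.update(v) for v in [1.0, 1.2] * 20]
print(any(baseline_flags))   # False
print(monitor.update(5.0))   # True
```

The monitor stores only three numbers, regardless of how long it runs. A production design might exclude flagged readings from the statistics so that a developing fault does not gradually become the new normal.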

General-Purpose Technologies and Their Implications

TinyML represents a general-purpose technology (GPT) that is characterized by its pervasiveness across sectors, continuous improvement, and ability to facilitate complementary innovations. Its applications extend to various industries, including healthcare, manufacturing, and finance, thereby impacting a wide range of economic activities. With continuous advancements in algorithms and computing power, TinyML remains adaptable and responsive to the evolving technological landscape[1].

Hardware Considerations

The deployment of TinyML solutions is heavily influenced by hardware constraints. The selection of appropriate hardware is crucial; options include CPUs, GPUs, FPGAs, and ASICs, each presenting unique advantages and limitations. For instance, CPU-based single-board computers like the Raspberry Pi are user-friendly and cost-effective but may not support the parallel processing required for more complex models. In contrast, specialized hardware accelerators can significantly enhance performance for deep learning tasks but may present challenges in compatibility and deployment across heterogeneous devices[21][22].

Data Processing Challenges

Effective implementation of TinyML algorithms also faces challenges related to data processing. Limited power availability, memory constraints, and the need for consistent algorithm performance are critical obstacles. Additionally, deploying machine learning models on a large scale is complicated by the diversity of hardware specifications across devices, necessitating tailored solutions for each platform. The architecture of embedded systems must align with both hardware and software requirements, further complicating the deployment process[23][22].
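Memory constraints can be checked with back-of-the-envelope arithmetic before committing to a target device. The sketch below estimates whether a model's weights fit in a microcontroller's flash at different numeric precisions. The figures are hypothetical: a parameter count of 250,000, 1 MiB of flash, and half of the flash reserved for firmware. A real check would also budget RAM for intermediate activations, which this sketch ignores.

```python
# Bytes needed to store one weight at common numeric precisions.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def weights_bytes(num_params: int, dtype: str) -> int:
    """Storage for the weights alone, ignoring metadata and activations."""
    return num_params * BYTES_PER_PARAM[dtype]


def fits_in_flash(num_params: int, dtype: str, flash_bytes: int,
                  reserved_fraction: float = 0.5) -> bool:
    """True if the weights fit in the share of flash left after firmware."""
    budget = int(flash_bytes * (1.0 - reserved_fraction))
    return weights_bytes(num_params, dtype) <= budget


FLASH = 1024 * 1024  # hypothetical 1 MiB of flash
PARAMS = 250_000     # hypothetical model size

for dtype in ("float32", "float16", "int8"):
    print(dtype, weights_bytes(PARAMS, dtype), fits_in_flash(PARAMS, dtype, FLASH))
# float32 1000000 False
# float16 500000 True
# int8 250000 True
```

The same model that does not fit at 32-bit precision fits with room to spare at 8 bits. Because each platform has a different memory map, this kind of check has to be repeated per target.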

Federated Learning and Privacy

Federated learning emerges as a promising solution to enhance on-device training while addressing privacy concerns. By allowing models to learn from decentralized data without transmitting sensitive information, federated learning supports the training of algorithms directly on edge devices. However, this approach can be computationally intensive and may require significant data transfer, presenting its own set of complexities[24].
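The server-side aggregation step of federated averaging (FedAvg), a widely used federated learning algorithm, shows how a model can learn from decentralized data. Each device trains locally and uploads only its updated weights. The server then averages those weights, weighting each device by its number of local training examples. This is a simplified sketch that assumes each model is a flat list of floats. It omits local training, secure aggregation, and the data-transfer costs noted above, and `fed_avg` is a hypothetical name.

```python
from typing import List, Sequence


def fed_avg(client_weights: Sequence[Sequence[float]],
            client_sizes: Sequence[int]) -> List[float]:
    """Weighted average of client weight vectors (FedAvg aggregation step).

    Each client's contribution is proportional to its number of local
    training examples. Raw data never leaves the clients; only weights do.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need at least one client and one size per client")
    total = sum(client_sizes)
    dim = len(client_weights[0])
    global_weights = [0.0] * dim
    for weights, n in zip(client_weights, client_sizes):
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            global_weights[i] += (n / total) * w
    return global_weights


# Usage: three devices holding different amounts of local data.
clients = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
sizes = [100, 100, 200]
print(fed_avg(clients, sizes))  # [3.5, 4.5]
```

Weighting by example count keeps a device with very little data from pulling the global model as hard as one with a large local dataset. In practice this step is repeated over many rounds, which is where the communication overhead accumulates.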

Challenges and Limitations

Edge AI and TinyML present significant opportunities for deploying machine learning models in resource-constrained environments; however, they also face numerous challenges and limitations that impact their effectiveness and usability.

Resource Constraints

One of the primary challenges in deploying Edge AI and TinyML solutions is the limitation on computational resources. Edge devices typically have restricted computational power, memory, and storage when compared to cloud-based servers, which necessitates careful optimization of machine learning models to operate efficiently within these constraints[10]. This often requires trade-offs between model complexity and resource efficiency, leading to potential compromises in model accuracy[11].

Additionally, achieving robust model performance in dynamic and unpredictable environments remains a considerable challenge for developers[11].
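Post-training quantization is one widely used way to manage the trade-off between model complexity and resource efficiency described above. Storing weights as 8-bit integers instead of 32-bit floats shrinks them fourfold, at the cost of a bounded rounding error. The sketch below implements affine (asymmetric) int8 quantization in plain Python, and the function names are hypothetical. Production toolchains typically add refinements this illustration omits, such as per-channel scales and calibration on representative data.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float, int]:
    """Affine int8 quantization, where real ~= scale * (q - zero_point)."""
    lo = min(min(values), 0.0)  # range must include 0 so it maps exactly
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0 if hi > lo else 1.0
    zero_point = round(-128 - lo / scale)
    # Round to the nearest step, shift by the zero point, clamp to int8.
    q = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize_int8(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Map int8 codes back to approximate real values."""
    return [scale * (x - zero_point) for x in q]


# Usage: quantize a few weights and measure the worst-case rounding error.
weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
q, scale, zp = quantize_int8(weights)
restored = dequantize_int8(q, scale, zp)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q, max_err <= scale / 2 + 1e-9)  # error stays within half a step
```

The worst-case error is half of `scale`, so a wide weight range means coarser steps. This is one reason accuracy can drop after quantization and why models are often re-validated afterwards.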

Power Efficiency

Power consumption is another critical issue, particularly for battery-powered edge devices such as wearables and IoT sensors. These devices must be designed to minimize energy usage to ensure extended operation without frequent recharging[22]. For instance, while ideal conditions may predict a battery life of over ten years, actual implementations frequently reveal much shorter lifespans due to high power consumption rates[22]. Balancing the complexity of models with power efficiency is essential for the viability of TinyML applications, as excessive power demands can lead to rapid depletion of device batteries[25][22].
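A simple duty-cycle model helps explain the gap between predicted and actual battery life. Average current is the time-weighted mix of active and sleep current, and idealized lifetime is battery capacity divided by that average. The sketch below uses hypothetical figures: 10 mA while sensing and running inference, 5 µA while asleep, and a 225 mAh cell. It ignores self-discharge, temperature, and radio transmissions, all of which shorten real-world life further.

```python
def average_current_ma(active_ma: float, active_s: float,
                       sleep_ma: float, period_s: float) -> float:
    """Time-weighted average current over one wake/sleep cycle."""
    if not 0 <= active_s <= period_s:
        raise ValueError("active time must lie within the period")
    sleep_s = period_s - active_s
    return (active_ma * active_s + sleep_ma * sleep_s) / period_s


def battery_life_days(capacity_mah: float, avg_ma: float) -> float:
    """Idealized life: capacity divided by average draw (no self-discharge)."""
    return capacity_mah / avg_ma / 24.0


# Hypothetical figures: 10 mA while awake, 5 uA (0.005 mA) asleep.
CAPACITY_MAH = 225.0
for active_s, period_s in [(0.05, 60.0), (0.5, 10.0)]:
    avg = average_current_ma(10.0, active_s, 0.005, period_s)
    print(f"{active_s}s every {period_s}s -> {avg:.4f} mA, "
          f"{battery_life_days(CAPACITY_MAH, avg):.0f} days")
```

With these made-up numbers, waking for 50 ms once a minute lasts roughly 700 days. Waking for 500 ms every 10 seconds drops that to under three weeks. Inference time per wake-up, and therefore model complexity, directly sets battery life.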

Data Privacy and Security

As more data processing occurs at the edge, ensuring data privacy and security becomes increasingly important. With the rise of personal data protection regulations such as GDPR and CCPA, maintaining compliance while leveraging local processing is crucial[26]. Edge devices that process sensitive information locally can significantly enhance user trust by mitigating the need to transmit personal data over the internet, thus reducing the risk of data breaches[26]. However, the security of data stored and processed at the edge remains a concern, as many devices lack robust security measures, leading to vulnerabilities[27][28].

Development Complexity

The development and optimization of models suitable for edge deployment can be complex and time-consuming. It often requires specialized expertise in both machine learning and embedded systems[11]. Furthermore, the lack of established research on security and privacy in the context of TinyML applications underscores the need for ongoing exploration and analysis to address these emerging concerns effectively[27][28].

Future Trends

Growth of the TinyML Market

The TinyML market is anticipated to experience significant growth, with forecasts predicting an increase from 15.2 million deployments in 2020 to 2.5 billion by 2030.[8] This surge is indicative of a vibrant developer community and the increasing integration of machine learning into edge devices, driven by advancements in hardware specifically designed for these tasks.[12]
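To put the forecast in perspective, take the quoted figures at face value and compute the compound annual growth rate (CAGR) they imply over the ten years from 2020 to 2030. This is arithmetic on the cited forecast, not an independent estimate:

$$
\mathrm{CAGR} = \left(\frac{2.5 \times 10^{9}}{15.2 \times 10^{6}}\right)^{1/10} - 1 \approx 164.5^{0.1} - 1 \approx 0.666
$$

In other words, deployments would need to grow by roughly two-thirds every year, sustained for a decade.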

Adoption of Generative Edge AI

Adoption trends suggest that Generative Edge AI will be a key area of focus in the coming years. A survey highlighted that over 76% of respondents are motivated by the desire to enhance human-machine interaction and the emergence of previously unattainable use cases.[9] With optimism surrounding market timing, over 70% of those surveyed expect Generative Edge AI solutions to start appearing by 2025, with sustained momentum into 2026 and beyond.[9]

Advancements in Hardware and Infrastructure

As technology continues to evolve, specialized chips optimized for running TinyML models on edge devices are being developed, which will facilitate the execution of complex algorithms with minimal power consumption.[12] Furthermore, the rollout of 5G networks is expected to enhance communication between edge devices and cloud services, promoting a hybrid approach that combines local processing with cloud capabilities for more intensive computations.[12] However, challenges such as the need for high transmission capacity, reliability, and ongoing maintenance still need to be addressed to fully realize the potential of edge computing technologies.[22]
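The hybrid approach described above lets devices handle routine work locally and defer harder cases to the cloud. One common way to implement this split is a confidence-gated cascade, sketched below. It is an illustration, not a description of any specific 5G or cloud platform. `hybrid_infer`, the 0.8 confidence threshold, and the stub models are hypothetical.

```python
from typing import Callable, Tuple

Prediction = Tuple[str, float]  # (label, confidence in [0, 1])


def hybrid_infer(x: object,
                 local_model: Callable[[object], Prediction],
                 cloud_model: Callable[[object], Prediction],
                 threshold: float = 0.8,
                 cloud_available: bool = True) -> Tuple[str, str]:
    """Answer on-device when confident; otherwise escalate to the cloud.

    Returns (label, where_it_ran). Falls back to the local answer when the
    network is unavailable, so the device keeps working offline.
    """
    label, confidence = local_model(x)
    if confidence >= threshold or not cloud_available:
        return label, "edge"
    cloud_label, _ = cloud_model(x)
    return cloud_label, "cloud"


# Hypothetical stand-ins for a tiny on-device model and a larger cloud model.
def tiny_model(x: object) -> Prediction:
    return ("cat", 0.95) if x == "clear" else ("cat", 0.55)


def big_model(x: object) -> Prediction:
    return ("dog", 0.99)


print(hybrid_infer("clear", tiny_model, big_model))   # ('cat', 'edge')
print(hybrid_infer("blurry", tiny_model, big_model))  # ('dog', 'cloud')
print(hybrid_infer("blurry", tiny_model, big_model, cloud_available=False))  # ('cat', 'edge')
```

The threshold controls the trade-off. Raising it sends more inputs to the cloud, which gains accuracy but costs latency and bandwidth. The offline fallback preserves the local-processing benefits discussed earlier.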

Emphasis on Ethical Governance and Inclusivity

The integration of AI and machine learning into economic policy highlights the necessity of addressing infrastructural disparities and ethical concerns to promote inclusive growth.[13] Policymakers are urged to facilitate geographic diffusion of AI activities and ensure equitable access to technology to counteract the tendency towards “winner-take-most” dynamics that could exacerbate inequality.[14]

References

- Ramachandran, A. (2024, December 3). The AI Revolution: How AI is Radically Redefining Economic Growth, Reshaping Productivity Dynamics & Charting an Unprecedented Path for Future. https://www.linkedin.com/pulse/ai-revolution-how-radically-redefining-economic-path-ramachandran-gcfue/

- Edge Impulse. (n.d.). What is edge machine learning (edge ML)? Edge Impulse Documentation. https://docs.edgeimpulse.com/docs/concepts/what-is-edge-machine-learning

- Cogent. (n.d.). Edge Computing in Healthcare: Transforming Patient Care and Operations. Cogent Blog. https://www.cogentinfo.com/resources/edge-computing-in-healthcare-transforming-patient-care-and-operations

- Powell, P., & Smalley, I. (2025, June 2). Edge computing: Top use cases. IBM. https://www.ibm.com/think/topics/edge-computing-use-cases

- Powell, P., & Smalley, I. (2025, June 2). Edge computing: Top use cases. IBM. https://www.ibm.com/think/topics/edge-computing-use-cases

- Sáez, J. V. (n.d.). The role of TinyML in the industry: Overview. https://www.barbara.tech/blog/the-role-of-tinyml-in-the-industry-overview

- Generative Edge AI Working Group: Enabling creativity and intelligence at the network’s edge. (n.d.). EDGE AI FOUNDATION. https://www.edgeaifoundation.org/edgeai-content/generative-edge-ai-working-group-enabling-creativity-and-intelligence-at-the-networks-edge

- Penguin Solutions. (2025, April 18). Discover Real-World applications for edge computing. Penguin Solutions. https://www.penguinsolutions.com/en-us/resources/blog/what-are-the-real-world-applications-for-edge-computing

- Arizmendi, L. (2025, May 29). Moving AI to the edge: Benefits, challenges and solutions. Red Hat. https://www.redhat.com/en/blog/moving-ai-edge-benefits-challenges-and-solutions

- Intel. (n.d.). How edge computing is driving advancements in healthcare. Intel. https://www.intel.com/content/www/us/en/learn/edge-computing-in-healthcare.html

- Vina, A. (n.d.). Real-World Edge AI Applications. Ultralytics. https://www.ultralytics.com/blog/understanding-the-real-world-applications-of-edge-ai

- Edge AI. (2025, June 2). IBM. https://www.ibm.com/think/topics/edge-ai

- Jaber, S. (2024, March 19). AI at the Edge Report. Chapter 3: Applications & Case Studies. Wevolver. https://www.wevolver.com/article/ai-at-the-edge-report-chapter-3-applications-case-studies

- Dilmegani, C. (2025, April 7). TinyML(EdgeAI) in 2025: Machine Learning at the Edge. AIMultiple. https://research.aimultiple.com/tinyml/

- Ektos. (2023, October 19). 4 TinyML applications transforming the manufacturing industry. https://www.linkedin.com/pulse/4-tinyml-applications-transforming-manufacturing-industry

- Y, J. (2023, February 15). The hard problems of edge AI hardware. The Asianometry Newsletter. https://www.asianometry.com/p/the-hard-problems-of-edge-ai-hardware

- Kejriwal, K. (2023, August 29). TinyML: Applications, Limitations, and It’s Use in IoT & Edge Devices. Unite.AI. https://www.unite.ai/tinyml-applications-limitations-and-its-use-in-iot-edge-devices/

- Elhanashi, A., Dini, P., Saponara, S., & Zheng, Q. (2024). Advancements in TinyML: applications, limitations, and impact on IoT devices. Electronics, 13(17), 3562. https://doi.org/10.3390/electronics13173562

- Situnayake, D., & Plunkett, J. (n.d.). AI at the Edge. O’Reilly Online Learning. https://www.oreilly.com/library/view/ai-at-the/9781098120191/ch04.html

- Edge AI and TinyML: Career Opportunities and Trends in Machine Learning for 2024. (2024, August 21). Machine Learning Jobs. https://machinelearningjobs.co.uk/career-advice/edge-ai-and-tinyml-career-opportunities-and-trends-in-machine-learning-for-2024

- Enterprise Big Data Framework Alliance. (2024, May 31). EdgeAI and TinyML Applications Advantages and Limitations. Enterprise Big Data Framework. https://www.bigdataframework.org/knowledge/edgeai-and-tinyml-applications-advantages-and-limitations-by-hwan-goh/

- What are the challenges to embed tinyML in edge devices? (n.d.). https://www.ayyeka.com/blog/what-are-the-challenges-to-embed-tinyml-in-edge-computing

- API4AI. (2025, April 27). Edge AI Vision: Deep Learning Tips. Medium. https://medium.com/%40API4AI/edge-ai-vision-deep-learning-on-tiny-devices-11382f327db6

- TinyML Security: Exploring Vulnerabilities in Resource-Constrained Machine Learning Systems. (n.d.). https://arxiv.org/html/2411.07114v1

- T, G. (2024, March 22). Tiny Tech, Big Impact: Edge AI and TinyML are revolutionising data processing. Sify. https://www.sify.com/ai-analytics/tiny-tech-big-impact-edge-ai-and-tinyml-are-revolutionising-data-processing/

- T, G. (2024, March 22). Tiny Tech, Big Impact: Edge AI and TinyML are revolutionising data processing. Sify. https://www.sify.com/ai-analytics/tiny-tech-big-impact-edge-ai-and-tinyml-are-revolutionising-data-processing/

- Lee, S. (n.d.). AI and ML Propel Change in Developing Nations. https://www.numberanalytics.com/blog/ai-ml-economic-change

- Jacobs, J., Muro, M., & Liu, S. (2023, July 20). Building AI cities: How to spread the benefits of an emerging technology across more of America. Brookings. https://www.brookings.edu/articles/building-ai-cities-how-to-spread-the-benefits-of-an-emerging-technology-across-more-of-america/

- Zhou, Z., Li, Y., Li, J., Yu, K., Kou, G., Wang, M., & Gupta, B. B. (2022). GAN-Siamese network for cross-domain vehicle re-identification in intelligent transport systems. IEEE Transactions on Network Science and Engineering, 10(5), 2779-2790.

- Deveci, M., Pamucar, D., Gokasar, I., Köppen, M., Gupta, B. B., & Daim, T. (2023). Evaluation of Metaverse traffic safety implementations using fuzzy Einstein based logarithmic methodology of additive weights and TOPSIS method. Technological Forecasting and Social Change, 194, 122681.

- Deveci, M., Gokasar, I., Pamucar, D., Zaidan, A. A., Wen, X., & Gupta, B. B. (2023). Evaluation of Cooperative Intelligent Transportation System scenarios for resilience in transportation using type-2 neutrosophic fuzzy VIKOR. Transportation Research Part A: Policy and Practice, 172, 103666.

Cite As

Gupta B.B. (2025) A Gentle Introduction to Edge AI and TinyML, Insights2Techinfo, p. 1