By: C S Nakul Kalyan, CCRI, Asia University, Taiwan

Abstract

Unlike fingerprint or iris identification, face recognition must cope with facial aging, which poses a significant challenge for biometric recognition systems. Developing robust, age-invariant recognition requires high-quality longitudinal datasets spanning decades, which are often in short supply. In this study, we examine advanced generative models that synthesize realistic, identity-preserving aged and de-aged facial images, allowing current datasets to be expanded at scale. To maintain biometric utility while achieving good visual realism, the method incorporates identity-preserving loss functions. Additionally, we propose a de-aging and sketch generation framework for forensic applications, in which a deepfake-based neural network reveals facial characteristics and a pix2pix-based GAN creates realistic drawings. Experimental evaluations on the CUHK and AR datasets show significant gains in realism, reflected by a lower Fréchet Inception Distance (FID) and higher Structural Similarity Index (SSIM) and Peak Signal-to-Noise Ratio (PSNR). The system can handle varied facial attributes across gender, race, and culture, showing its potential in both security and creative media. This study demonstrates how synthetic age progression and regression can advance forensic facial recognition, overcome the constraints that aging imposes on face matching, and expand the possibilities for media creation.

Keywords

De-aging, Generative Models, Identity Preservation, Forensic Facial Recognition, Deepfakes

Introduction

Compared with other sources of variation such as expression, lighting, and pose, facial aging poses a particular challenge for automated face recognition systems. Unlike those variations, which can often be mitigated with established techniques, biological aging is driven by complex genetic, demographic, and environmental factors that change facial appearance over time. High-quality longitudinal datasets, containing images of the same individuals captured over decades, are crucial for training robust age-invariant recognition models, yet such datasets are scarce and hard to collect at scale. Generative models offer a practical way around this limitation by creating realistic age-regressed and age-progressed facial images. When training data is limited, however, traditional GAN-based approaches struggle to preserve identity, remain biologically plausible, and produce artifact-free outputs. Recent advances that combine identity-preserving loss functions with minimal supervision (only a few captured images of the subject) can produce accurate and realistic facial aging simulations from small input sets [1]. In this article, we discuss AI-based techniques for realistic, identity-preserving facial aging and de-aging using text-to-image diffusion models and sketch generation, with applications in biometrics, creative media, and forensic investigations.

Proposed Methodology

The proposed method uses a latent diffusion model to perform realistic, identity-preserving facial aging and de-aging. It builds on the DreamBooth framework, with customized loss functions and a regularization technique that maintain biometric accuracy while modeling age progression and regression. The approach is implemented in the following steps:

Model Framework

We use a Latent Diffusion Model (LDM) with three components:

Variational Autoencoder (VAE)

The VAE encodes high-resolution face photos into low-dimensional latent representations and decodes them back into images after editing.
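As a rough illustration of how much the VAE compresses a face photo, the sketch below computes latent shapes. It assumes a Stable Diffusion-style autoencoder that downsamples height and width by a factor of 8 and outputs 4 latent channels; the text does not specify these values, and the helper names are hypothetical.

```python
# Hypothetical helpers. ASSUMPTION: a Stable Diffusion-style VAE that
# downsamples height and width by 8 and produces 4 latent channels.
# Other autoencoders use different factors and channel counts.


def latent_shape(height, width, downsample=8, latent_channels=4):
    """Return the (channels, height, width) shape of the encoded latent."""
    if height % downsample or width % downsample:
        raise ValueError("image size must be divisible by the downsampling factor")
    return (latent_channels, height // downsample, width // downsample)


def compression_ratio(height, width, image_channels=3, **vae_kwargs):
    """How many image values map onto one latent value."""
    c, h, w = latent_shape(height, width, **vae_kwargs)
    return (image_channels * height * width) / (c * h * w)


if __name__ == "__main__":
    print(latent_shape(512, 512))       # (4, 64, 64)
    print(compression_ratio(512, 512))  # 48.0
```

Under these assumptions, a 512x512 RGB photo becomes a 4x64x64 latent with 48 times fewer values, which is why denoising in latent space is much cheaper than in pixel space.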

U-Net Backbone

The U-Net backbone performs denoising in the latent space, using cross-attention layers to condition on the text embeddings.
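The cross-attention step can be sketched as single-head scaled dot-product attention in which queries come from the U-Net's latent features and keys and values come from the text embeddings. This is a minimal pure-Python illustration with random weights standing in for learned projections; real U-Nets use multi-head attention inside larger blocks, and all names here are illustrative.

```python
import math
import random


def matmul(a, b):
    """Multiply matrices stored as lists of rows: (n x m) @ (m x p)."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(latent_tokens, text_tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product cross-attention.

    Queries come from the flattened U-Net feature map (latent_tokens);
    keys and values come from the text-encoder output (text_tokens).
    """
    q = matmul(latent_tokens, w_q)        # (n_latent, d_k)
    k = matmul(text_tokens, w_k)          # (n_text, d_k)
    v = matmul(text_tokens, w_v)          # (n_text, d_v)
    scale = 1.0 / math.sqrt(len(q[0]))
    k_t = [list(col) for col in zip(*k)]  # (d_k, n_text)
    scores = matmul(q, k_t)               # (n_latent, n_text)
    # Each latent position distributes its attention over the prompt tokens.
    weights = [softmax([s * scale for s in row]) for row in scores]
    return matmul(weights, v), weights


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


if __name__ == "__main__":
    rng = random.Random(0)
    latent = random_matrix(16, 32, rng)  # e.g. a 4x4 feature map with 32 channels
    text = random_matrix(8, 24, rng)     # 8 prompt tokens with 24-dim embeddings
    w_q = random_matrix(32, 16, rng)
    w_k = random_matrix(24, 16, rng)
    w_v = random_matrix(24, 32, rng)
    out, attn = cross_attention(latent, text, w_q, w_k, w_v)
    print(len(out), len(out[0]), len(attn[0]))  # 16 32 8
```

Each row of the attention weights sums to 1, so every latent position receives a weighted mix of the prompt-token values; this is how the prompt steers the denoising.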

Text Encoder

The text encoder transforms user-defined prompts into embeddings that guide the aging or de-aging process.
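In widely used latent diffusion models such as Stable Diffusion v1, the text encoder is a pretrained CLIP transformer with a 77-token context. The toy sketch below only mirrors its interface: a prompt goes in, and a fixed-length, padded sequence of vectors comes out for the U-Net's cross-attention. The whitespace tokenizer and hash-derived vectors are placeholders, not learned embeddings, and every name is hypothetical.

```python
import hashlib

PAD = "<pad>"


def tokenize(prompt):
    # Toy whitespace tokenizer; production text encoders use subword tokenizers.
    return prompt.lower().split()


def token_vector(token, dim):
    # Deterministic stand-in embedding derived from a SHA-256 hash, scaled to [-1, 1].
    # A trained encoder would learn these vectors and contextualize them.
    digest = hashlib.sha256(token.encode("utf-8")).digest()
    return [digest[i % len(digest)] / 127.5 - 1.0 for i in range(dim)]


def encode_prompt(prompt, max_len=8, dim=4):
    """Map a prompt to a fixed-length sequence of embedding vectors."""
    tokens = tokenize(prompt)[:max_len]        # truncate long prompts
    tokens += [PAD] * (max_len - len(tokens))  # pad short prompts
    return tokens, [token_vector(t, dim) for t in tokens]


if __name__ == "__main__":
    tokens, vectors = encode_prompt("photo of a person as elderly")
    print(tokens)  # ['photo', 'of', 'a', 'person', 'as', 'elderly', '<pad>', '<pad>']
    print(len(vectors), len(vectors[0]))  # 8 4
```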

Training Strategy

The model is fine-tuned using two datasets:

Training Set

The training set contains a small collection (about 10–20) of face images of the target individual, used to capture the person's identity-specific features [3].

Regularization Set

The regularization set contains about 600 image-caption pairs in six age groups (child, teen, young, adult, middle-aged, and senior), representing a variety of individuals. The age terms in these captions then serve as semantic age labels during inference.
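A minimal sketch of assembling the regularization set follows. The six group names come from the text; the caption template, the file paths, and the even split of 100 images per group are assumptions for illustration.

```python
# The six age groups named in the text; the caption template is an assumption.
AGE_GROUPS = ["child", "teen", "young", "adult", "middle-aged", "senior"]


def build_regularization_pairs(images_by_group, class_noun="person"):
    """Return (image_path, caption) pairs whose captions carry the age label."""
    unknown = set(images_by_group) - set(AGE_GROUPS)
    if unknown:
        raise ValueError(f"unknown age groups: {sorted(unknown)}")
    pairs = []
    for group in AGE_GROUPS:
        for path in images_by_group.get(group, []):
            pairs.append((path, f"a photo of a {class_noun}, age group: {group}"))
    return pairs


if __name__ == "__main__":
    # Hypothetical file layout: 100 images per group gives the ~600 pairs described.
    images = {g: [f"reg/{g}/{i:03d}.jpg" for i in range(100)] for g in AGE_GROUPS}
    pairs = build_regularization_pairs(images)
    print(len(pairs))  # 600
    print(pairs[0])    # ('reg/child/000.jpg', 'a photo of a person, age group: child')
```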

Loss Function for Identity Preservation

Identity-preserving losses ensure that the generated images retain the subject's identity while displaying realistic aging [4]. Table 1 shows the loss functions used and their effect on identity preservation in an ablation study.

Table 1: Loss function ablation study results

| Configuration | Reconstruction loss | Biometric loss | Prior loss | Identity score |
|---|---|---|---|---|
| Baseline | Yes | No | No | 0.68 |
| + Biometric | Yes | Yes | No | 0.77 |
| + Prior | Yes | Yes | Yes | 0.79 |
| Full model | Yes | Yes | Yes | 0.82 |
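The identity scores in Table 1 can be read as step-by-step gains. The short calculation below uses only the table's numbers; the function names are illustrative.

```python
# Identity scores copied from Table 1.
ABLATION = [
    ("Baseline", 0.68),
    ("+ Biometric", 0.77),
    ("+ Prior", 0.79),
    ("Full model", 0.82),
]


def incremental_gains(rows):
    """Absolute change in identity score from each configuration to the next."""
    return [(name, round(score - prev, 2))
            for (_, prev), (name, score) in zip(rows, rows[1:])]


def relative_gain(baseline, final):
    """Fractional improvement of final over baseline."""
    return (final - baseline) / baseline


if __name__ == "__main__":
    for name, delta in incremental_gains(ABLATION):
        print(f"{name}: +{delta:.2f}")
    print(f"Baseline to full model: {relative_gain(0.68, 0.82):.1%}")
```

On Table 1 it reports +0.09 for adding the biometric loss, +0.02 for the prior loss, and +0.03 for the full model, about a 20.6 percent relative improvement over the baseline, so the biometric term accounts for the largest single gain.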

Reconstruction Loss

The reconstruction loss minimizes the difference between the original and generated images.
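A minimal sketch of the reconstruction term, assuming mean squared error over flattened pixel or latent values. Note that in DreamBooth-style fine-tuning of a latent diffusion model, this term is usually computed between the noise added to a latent and the U-Net's prediction of that noise, rather than directly between images; the function name is hypothetical.

```python
def reconstruction_loss(original, generated):
    """Mean squared error between two equally sized, flattened images or latents."""
    if len(original) != len(generated):
        raise ValueError("inputs must have the same number of elements")
    return sum((o - g) ** 2 for o, g in zip(original, generated)) / len(original)


if __name__ == "__main__":
    print(reconstruction_loss([0.0, 0.5, 1.0], [0.0, 0.5, 1.0]))  # 0.0
    print(reconstruction_loss([0.0, 0.0], [1.0, 3.0]))            # 5.0
```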

Prior Preservation Loss

The prior preservation loss uses class-level information (such as "person") to maintain output variability, so that the model does not overfit to the few training images of the subject.
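In DreamBooth, prior preservation adds a second denoising term computed on class images (here, the regularization set) and weights it by a hyperparameter λ. The sketch below shows that combination on flattened predictions and targets; the function names and the default weight of 1.0 are assumptions, not values from this study.

```python
def mse(a, b):
    """Mean squared error between two equally sized flat sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def dreambooth_style_loss(instance_pred, instance_target,
                          prior_pred, prior_target, prior_weight=1.0):
    """Instance reconstruction term plus a weighted class-prior term.

    instance_*: predictions/targets for the subject's own training images
    prior_*:    predictions/targets for regularization (class) images
    prior_weight: hypothetical default for the tunable weight lambda
    """
    instance_loss = mse(instance_pred, instance_target)
    prior_loss = mse(prior_pred, prior_target)
    return instance_loss + prior_weight * prior_loss


if __name__ == "__main__":
    print(dreambooth_style_loss([1.0, 1.0], [1.0, 1.0], [0.0, 0.0], [1.0, 1.0]))  # 1.0
```

Raising the prior weight pulls the model toward generic "person" outputs; lowering it lets the subject's few images dominate, at the risk of overfitting.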

Biometric Loss

The biometric loss computes the L1 distance between face embeddings of the original and generated images, extracted with a pre-trained VGGFace model.
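The biometric term can be sketched as a mean absolute (L1) difference between the face embeddings of the original and generated images. Extracting embeddings with VGGFace is outside this sketch, so the function takes plain vectors; the L2 normalization step, the combined `total_loss`, and its weights are assumptions added for illustration.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length (left unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def biometric_loss(emb_original, emb_generated, normalize=True):
    """Mean absolute (L1) difference between two face embeddings.

    Normalizing first (an assumption) makes the loss depend on the
    direction of the embeddings rather than their length.
    """
    if len(emb_original) != len(emb_generated):
        raise ValueError("embeddings must have the same dimension")
    a, b = emb_original, emb_generated
    if normalize:
        a, b = l2_normalize(a), l2_normalize(b)
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def total_loss(reconstruction, prior, biometric, prior_weight=1.0, biometric_weight=0.1):
    # Hypothetical weights: Table 1 lists which terms are active, not their weights.
    return reconstruction + prior_weight * prior + biometric_weight * biometric


if __name__ == "__main__":
    print(biometric_loss([2.0, 0.0], [1.0, 0.0]))  # 0.0 (same direction)
    print(biometric_loss([1.0, 0.0], [0.0, 1.0]))  # 1.0
```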

Age-Conditioned Generation

In the final stage, aging or de-aging is achieved by prompting the model with a unique subject token, a class label, and a target age group (for example, "image of a normal person as elderly"). This enables fine control over the visual transformation across different age groups while preserving identity traits.
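The prompt structure described above can be expressed as a simple template. The sketch mirrors the example prompt in the text; the identifier `sks` (a rare token of the kind often used in DreamBooth examples), the function names, and the age phrases are hypothetical.

```python
def age_prompt(identifier, class_noun, age_group,
               template="image of a {id} {cls} as {age}"):
    """Compose an age-conditioned prompt from the three parts named in the text."""
    return template.format(id=identifier, cls=class_noun, age=age_group)


def age_sweep(identifier, class_noun, age_groups):
    """One prompt per target age group, e.g. to render a progression sequence."""
    return [age_prompt(identifier, class_noun, g) for g in age_groups]


if __name__ == "__main__":
    # "sks" is a hypothetical rare-token identifier bound to the subject during fine-tuning.
    for p in age_sweep("sks", "person", ["a child", "a teenager", "elderly"]):
        print(p)
```

Keeping the identifier and class label fixed while varying only the age phrase is what lets a single fine-tuned model render the same person at several ages.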

Model Application

The proposed method can be applied in multiple domains, such as:

Biometric Systems

In biometric systems, the method can improve age-invariant recognition by augmenting datasets with synthetic aged and de-aged images [2].

Forensics

In forensics, de-aged facial reconstructions can help find missing people or support the reopening of cold cases [5].

Media and Creative Content

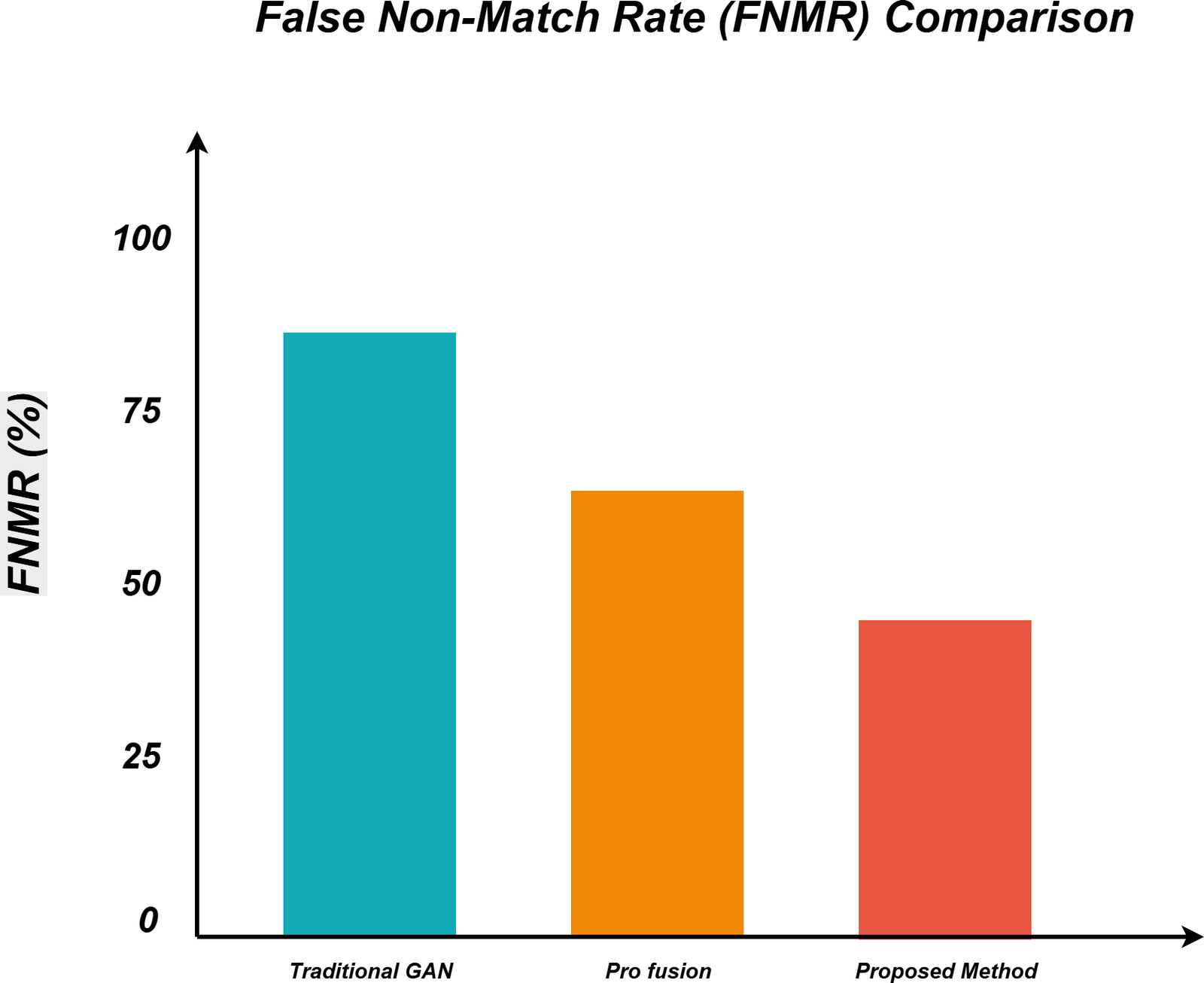

Here, the model supports controlled age editing for entertainment and visual effects. Figure 1 shows the False Non-Match Rate (FNMR) comparison.

Figure 1: FNMR comparison
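FNMR is the fraction of genuine (same-person) comparisons that a matcher wrongly rejects at a given decision threshold. The sketch below computes it from similarity scores, assuming higher scores mean more similar, along with the relative reduction used when comparing two systems. The function names are hypothetical, and the scores are illustrative only, not results from this study.

```python
def fnmr(genuine_scores, threshold):
    """False Non-Match Rate: share of genuine (same-identity) comparisons
    whose similarity score falls below the decision threshold."""
    if not genuine_scores:
        raise ValueError("need at least one genuine comparison")
    misses = sum(1 for s in genuine_scores if s < threshold)
    return misses / len(genuine_scores)


def relative_reduction(baseline_rate, new_rate):
    """Fractional reduction, e.g. 0.44 for a 44 percent drop."""
    return (baseline_rate - new_rate) / baseline_rate


if __name__ == "__main__":
    # Illustrative numbers only, not results from this study.
    before = fnmr([0.91, 0.42, 0.75, 0.38, 0.66], threshold=0.5)  # 2 of 5 below
    after = fnmr([0.93, 0.55, 0.78, 0.47, 0.70], threshold=0.5)   # 1 of 5 below
    print(before, after, relative_reduction(before, after))
```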

Conclusion

In this study, we introduced a facial aging and de-aging approach based on regularization-driven latent diffusion models, enhanced with contrastive, cosine, and biometric losses to ensure identity preservation. Experiments on CelebA, LFW, and AgeDB using ArcFace and AdaFace show significant gains over GAN-based techniques, reducing the FNMR by up to 44 percent and surpassing ProFusion by an additional 18 percent. Fine-tuning ArcFace on AI-generated, artificially aged photos reduced the FNMR further. Future work will address single-image age editing and integration with neural radiance fields for 3D facial aging.

References

- Sudipta Banerjee, Govind Mittal, Ameya Joshi, Sai Pranaswi Mullangi, Chinmay Hegde, and Nasir Memon. Identity-aware facial age editing using latent diffusion. IEEE Transactions on Biometrics, Behavior, and Identity Science, 6(4):443–457, 2024.

- Sowmya BJ, S Seema, S Rohith, et al. A visual computing unified application using deep learning and computer vision techniques. International Journal of Interactive Mobile Technologies, 18(1), 2024.

- Amal Boutadjine, Fouzi Harrag, Khaled Shaalan, and Sabrina Karboua. A comprehensive study on multimedia deepfakes. In 2023 International Conference on Advances in Electronics, Control and Communication Systems (ICAECCS), pages 1–6. IEEE, 2023.

- Kathleen Loock. On the realist aesthetics of digital de-aging in contemporary Hollywood cinema. Orbis Litterarum, 76(4):214–225, 2021.

- Jason Elroy Martis, MS Sannidhan, N Pratheeksha Hegde, and L Sadananda. Precision sketching with de-aging networks in forensics. Frontiers in Signal Processing, 4:1355573, 2024.

- V. Arya, A. Gaurav, B. B. Gupta, C. H. Hsu, and H. Baghban. Detection of malicious node in VANETs using digital twin. In International Conference on Big Data Intelligence and Computing, pages 204–212. Springer Nature Singapore, 2022.

- Y. Lu, Y. Guo, R. W. Liu, K. T. Chui, and B. B. Gupta. GradDT: Gradient-guided despeckling transformer for industrial imaging sensors. IEEE Transactions on Industrial Informatics, 19(2):2238–2248, 2022.

- A. Sedik, Y. Maleh, G. M. El Banby, A. A. Khalaf, F. E. Abd El-Samie, B. B. Gupta, …, and A. A. Abd El-Latif. AI-enabled digital forgery analysis and crucial interactions monitoring in smart communities. Technological Forecasting and Social Change, 177:121555, 2022.

- M. E. Pollack. Intelligent technology for an aging population: The use of AI to assist elders with cognitive impairment. AI Magazine, 26(2):9, 2005.

- A. Zhavoronkov and P. Mamoshina. Deep aging clocks: the emergence of AI-based biomarkers of aging and longevity. Trends in Pharmacological Sciences, 40(8):546–549, 2019.

- R. Ganceviciene, A. I. Liakou, A. Theodoridis, E. Makrantonaki, and C. C. Zouboulis. Skin anti-aging strategies. Dermato-endocrinology, 4(3):308–319, 2012.

Cite As

Kalyan C S N (2025) AI Aging and De-aging: Synthetic Age Progression in Media, Insights2Techinfo, pp.1