By: C S Nakul Kalyan, CCRI, Asia University

Abstract

The use of Artificial Intelligence (AI) in hiring has made the evaluation of interview candidates more efficient, but it has also created new security risks. One of the most serious is the misuse of deepfake technology, in which attackers pose as job applicants or business executives to deceive organizations. In this study, we review advances in AI-driven hiring systems and the detection frameworks used to counter deepfake job interviews and CEO impersonation. Deep-learning-based face and audio techniques address identity fraud. Machine learning models such as Support Vector Machines (SVMs), together with Large Language Models (LLMs), are used for resume screening, assessment, and language analysis. AI can therefore strengthen the recruitment process, yet the same technology can be manipulated through deepfakes. Digital hiring systems consequently require an integration of assessment, verification, and monitoring to maintain authenticity and reduce these emerging social risks.

Keywords

Fake Job Interviews, CEO Impersonation, Artificial Intelligence (AI), Machine Learning (ML), Deepfake Detection, Emotion and Sentiment Analysis.

Introduction

Artificial Intelligence has become a central tool for hiring through online interviews, tests, and resume screening [2]. The same technical advances, however, have introduced new threats. Deepfake technology enables intruders to present fabricated content while posing as job seekers or business executives [4]. Fraudulent candidates can use deepfakes to pass automated screening systems, and in corporate organizations, CEO impersonation attacks exploit trust to manipulate employees into inappropriate actions. To counter these threats, this study focuses on AI-driven verification procedures: CNNs are used to detect deepfakes by verifying face identities and detecting lip-sync discrepancies [5], and to check the candidate’s behavior, while AI-driven chatbots improve security by monitoring the interview and guiding candidates through the process [1]. In this article, we examine deepfakes in job interviews and CEO impersonation as a growing threat. The approach combines ML-driven HR systems, automated hiring models [2], and deepfake detection models [5] to help preserve authenticity in the digital environment.

Proposed Methodology

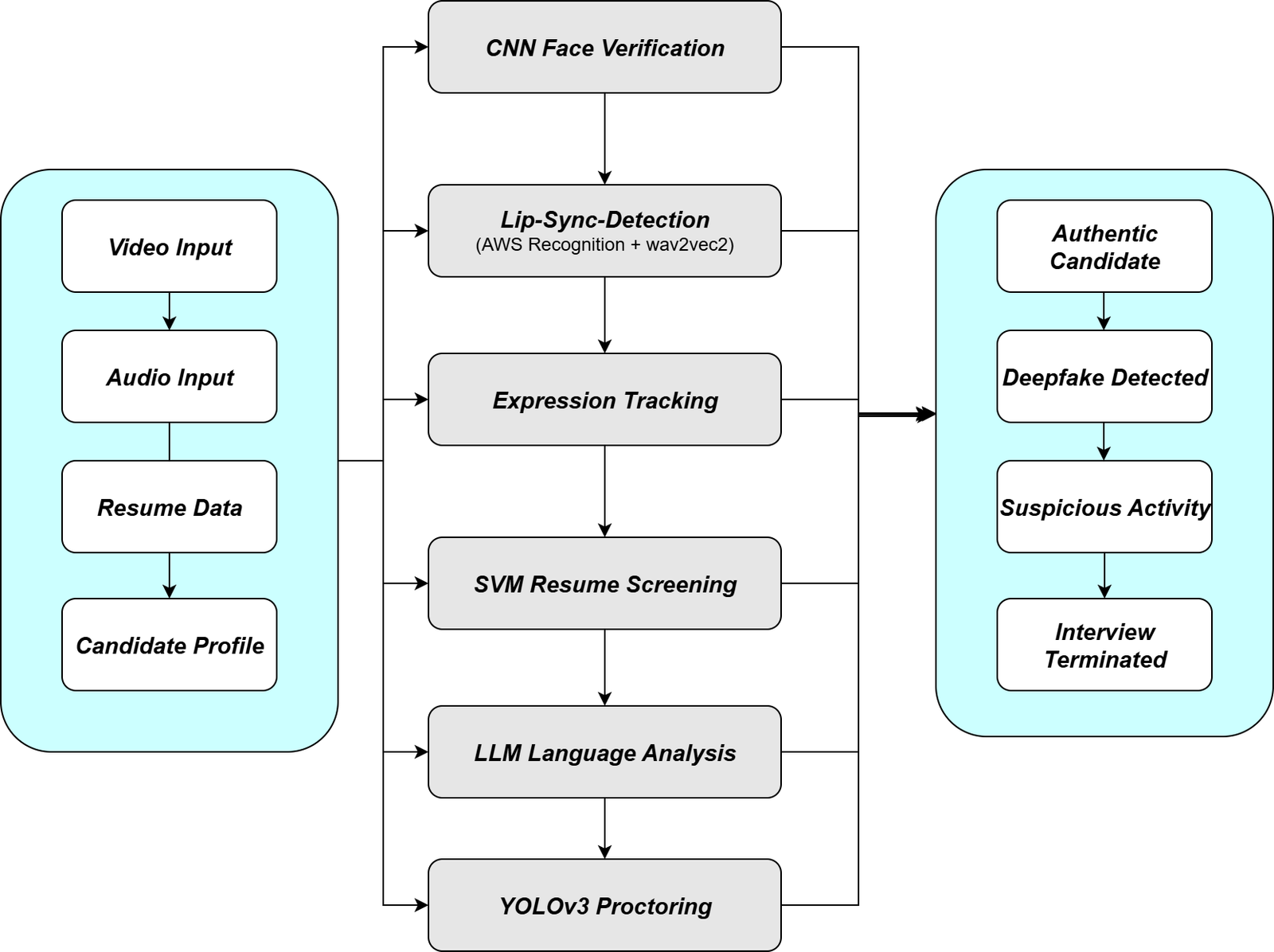

To examine the rising threats of deepfake-based job interviews and CEO impersonation attacks, this study presents three methods spanning AI-driven recruitment and security-oriented detection. The combined methods are described in the following subsections, as shown in Figure 1.

Data Collection and Pre-processing

Data for analyzing and detecting deepfakes were gathered from three main sources, summarized in Table 1:

Table 1: Dataset overview and sources

| Dataset Type | Size | Source Description | Usage |
|---|---|---|---|
| Candidate and resume data | 100k+ | Large-scale recruitment dataset | HR-focused interview systems |
| Interview video data | Various candidates | Recorded videos across multiple roles | Lip-sync and authenticity checks |
| Custom Q/A dataset | 1000+ questions | Domain-specific frequently asked questions | Candidate response evaluation |

Candidate and Resume Data

The candidate and resume data form a large-scale recruitment dataset of more than 100k entries, including resumes, assessments, and facial data, and are used by the HR-focused interview systems [1].

Interview Video Data

The interview video data consist of recorded videos of various candidates across many roles and are used to check for lip-sync mismatches, emotional variations, and overall authenticity [5].

Custom Q/A Dataset

The Q/A dataset contains more than 1,000 frequently asked questions recorded across specific domains and is used to evaluate candidates’ responses.

Pre-processing Steps

Three pre-processing steps are applied. First, audio-video pre-processing performs frame extraction and noise removal. Second, NLP-based text vectorization converts text into TF-IDF features. Finally, feature engineering extracts features for emotion and pattern detection.
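The audio side of the pre-processing step can be sketched in a few lines of NumPy. This is a minimal illustration, not the study’s pipeline: it assumes 16 kHz mono audio already loaded as an array, and the 25 ms / 10 ms framing, the RMS-based noise gate, and the helper names (`frame_signal`, `frame_features`, `noise_gate`) are our own choices. Video frame extraction is not shown.

```python
import numpy as np

def frame_signal(signal: np.ndarray, frame_len: int, hop: int) -> np.ndarray:
    """Split a 1-D signal into overlapping frames, shape (n_frames, frame_len)."""
    if len(signal) < frame_len:
        return np.empty((0, frame_len), dtype=signal.dtype)
    n_frames = 1 + (len(signal) - frame_len) // hop
    # Row i holds samples [i*hop, i*hop + frame_len).
    idx = hop * np.arange(n_frames)[:, None] + np.arange(frame_len)[None, :]
    return signal[idx]

def frame_features(frames: np.ndarray) -> np.ndarray:
    """Per-frame RMS energy and zero-crossing rate, shape (n_frames, 2)."""
    rms = np.sqrt(np.mean(frames ** 2, axis=1))
    signs = np.signbit(frames)
    zcr = np.mean(signs[:, 1:] != signs[:, :-1], axis=1)
    return np.column_stack([rms, zcr])

def noise_gate(frames: np.ndarray, features: np.ndarray, ratio: float = 0.1):
    """Keep only frames whose RMS is at least `ratio` times the loudest frame's RMS."""
    rms = features[:, 0]
    keep = rms >= ratio * rms.max()
    return frames[keep], features[keep]

# Example: 0.5 s of low-level noise followed by 0.5 s of a 220 Hz tone at 16 kHz.
sr = 16_000
rng = np.random.default_rng(0)
t = np.arange(sr // 2) / sr
audio = np.concatenate([0.001 * rng.standard_normal(sr // 2),
                        0.5 * np.sin(2 * np.pi * 220 * t)])
frames = frame_signal(audio, frame_len=400, hop=160)  # 25 ms frames, 10 ms hop
features = frame_features(frames)
kept_frames, kept_features = noise_gate(frames, features)
```

Per-frame RMS and zero-crossing rate are simple examples of the hand-crafted features a feature-engineering step could pass to emotion and pattern detectors; librosa provides equivalents in `librosa.feature.rms` and `librosa.feature.zero_crossing_rate`.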

Automated Candidate Screening

Automated candidate screening uses two main approaches:

Resume Shortlisting

Resume shortlisting follows two approaches: an ML-based approach and an NLP-based approach. The ML approach uses Support Vector Machines (SVMs) and ensemble classifiers to filter applicants by skills and qualifications. The NLP approach uses TF-IDF and cosine similarity to rank resumes against the job description.
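The NLP-based shortlisting approach can be illustrated with a dependency-free TF-IDF and cosine-similarity ranker. This is a sketch under our own assumptions: the tokenizer, the smoothed idf formula (the same default used by scikit-learn’s `TfidfVectorizer`), and the function names are illustrative, and the SVM/ensemble approach is not shown.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    """Lowercase and split into simple alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def tfidf_vectors(docs: list[str]) -> list[dict[str, float]]:
    """One sparse TF-IDF vector (term -> weight) per document.

    Uses raw term counts and the smoothed idf ln((1 + n) / (1 + df)) + 1.
    """
    tokenized = [tokenize(d) for d in docs]
    n = len(docs)
    df = Counter(term for toks in tokenized for term in set(toks))
    idf = {term: math.log((1 + n) / (1 + count)) + 1 for term, count in df.items()}
    return [{term: tf * idf[term] for term, tf in Counter(toks).items()}
            for toks in tokenized]

def cosine(u: dict[str, float], v: dict[str, float]) -> float:
    """Cosine similarity of two sparse vectors; 0.0 if either is empty."""
    dot = sum(w * v.get(term, 0.0) for term, w in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def rank_resumes(job_description: str, resumes: list[str]) -> list[tuple[int, float]]:
    """Return (resume_index, similarity) pairs, best match first."""
    vectors = tfidf_vectors([job_description] + resumes)
    jd_vec, resume_vecs = vectors[0], vectors[1:]
    scores = [(i, cosine(jd_vec, rv)) for i, rv in enumerate(resume_vecs)]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)

jd = "Python developer with machine learning and SQL experience"
resumes = [
    "Accountant skilled in Excel and auditing",
    "Python engineer with machine learning projects and SQL databases",
    "Graphic designer using Photoshop",
]
ranking = rank_resumes(jd, resumes)
```

In this toy example the Python/ML/SQL resume ranks first, and the design resume scores 0 because it shares no terms with the job description. At scale, scikit-learn’s `TfidfVectorizer` and `cosine_similarity` do the same job.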

Cognitive and Language Skills

To test candidates’ language skills, fine-tuned Large Language Models (LLMs) evaluate the reasoning, communication, and problem-solving skills of the interviewees [3].

Identity and Deepfake Verification

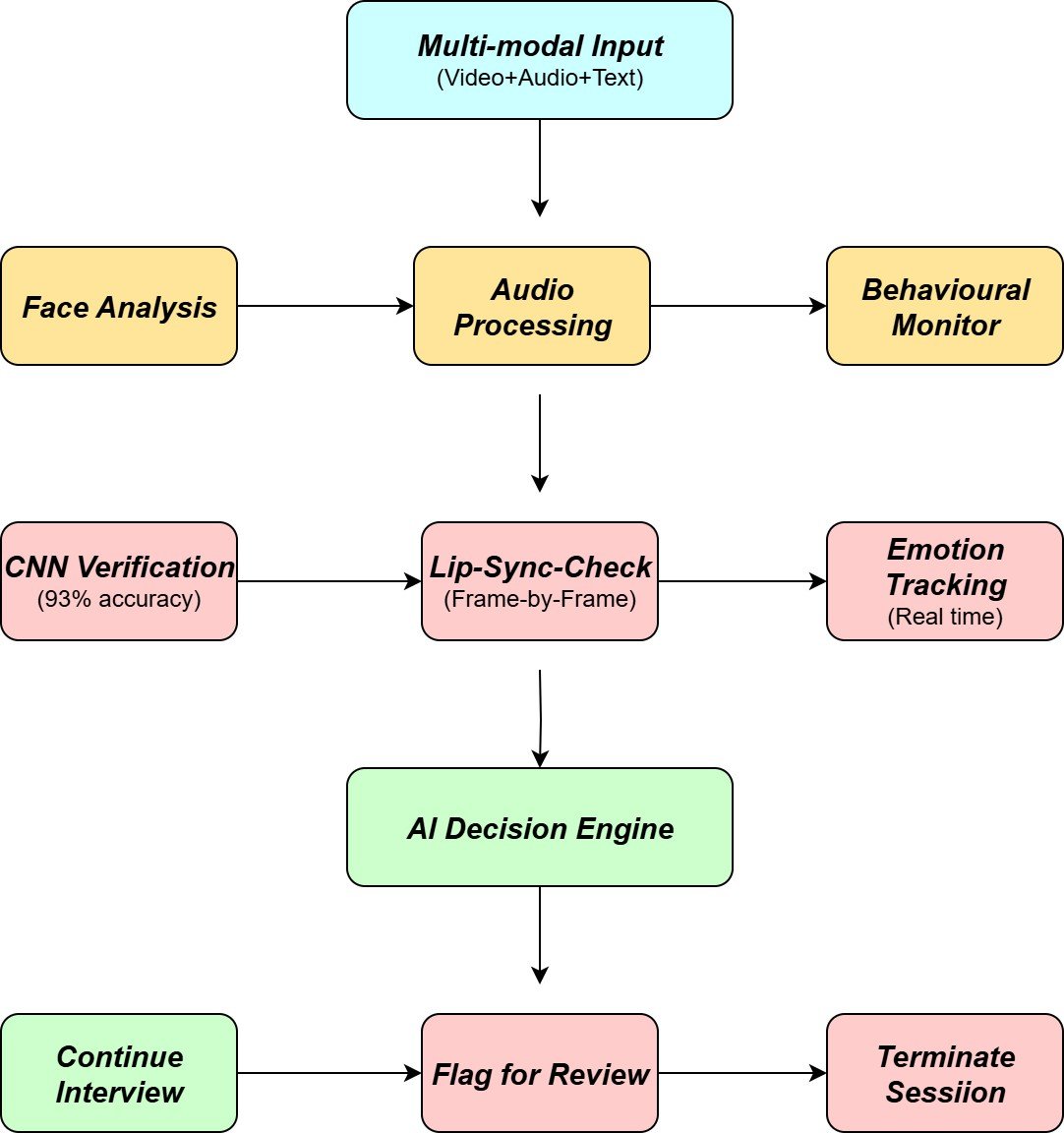

To identify manipulated content and verify whether deepfake technology is being used, the following techniques are applied, as shown in Figure 2.

Figure 2: Deepfake Detection Pipeline

Face Verification

For face verification, CNN models cross-check the candidate’s face against the image on the official ID the candidate provides, which can reveal manipulation attempts [1].
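The source does not specify how the CNN outputs are compared. A common pattern, assumed here, is to map each face to an embedding vector with a face-recognition CNN and accept a match when the cosine similarity exceeds a threshold. The embeddings below are random stand-ins, and the 0.6 similarity threshold and 0.9 per-session match ratio are hypothetical values that would need calibration on real data.

```python
import numpy as np

def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

def verify_face(live: np.ndarray, reference: np.ndarray, threshold: float = 0.6):
    """Compare two face embeddings from the same CNN; returns (match, similarity)."""
    sim = cosine_similarity(live, reference)
    return sim >= threshold, sim

def verify_session(frame_embeddings, reference, threshold=0.6, min_match_ratio=0.9):
    """A face swap on only some frames lowers the fraction of matching frames."""
    matches = [verify_face(e, reference, threshold)[0] for e in frame_embeddings]
    ratio = sum(matches) / len(matches)
    return ratio >= min_match_ratio, ratio

# Synthetic 128-d embeddings stand in for real CNN outputs (hypothetical data).
rng = np.random.default_rng(1)
reference = rng.normal(size=128)                 # embedding of the ID photo
genuine = [reference + 0.1 * rng.normal(size=128) for _ in range(20)]
impostor = [rng.normal(size=128) for _ in range(20)]
```

Checking every sampled frame rather than a single snapshot matters here, because a deepfake may only slip on some frames.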

Fake Lip-Sync Detection

Two models are used for fake lip-sync detection: an AWS Rekognition + wav2vec2 pipeline and a librosa-based audio analysis. The Rekognition + wav2vec2 pipeline compares the words heard in the audio with the lip movements in the video to detect mismatches between them. The librosa-based audio analysis evaluates synchronization scores frame by frame.
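As a rough illustration of frame-by-frame synchronization scoring, the sketch below correlates a per-frame mouth-opening signal with the audio energy envelope and searches over small time lags. This is a swapped-in, simplified technique, not the Rekognition + wav2vec2 or librosa pipeline from the study. It assumes both signals are already extracted and aligned to the video frame rate (for example, mouth opening from facial landmarks and energy from an RMS envelope), and the window size, lag range, and 0.3 threshold are hypothetical.

```python
import numpy as np

def zscore(x) -> np.ndarray:
    x = np.asarray(x, dtype=float)
    std = x.std()
    return (x - x.mean()) / std if std > 0 else np.zeros_like(x)

def sync_score(mouth_opening, audio_energy, max_lag: int = 5):
    """Best Pearson correlation between mouth opening and audio energy over
    lags of -max_lag..max_lag video frames. Returns (score, lag)."""
    m, a = zscore(mouth_opening), zscore(audio_energy)
    assert len(m) == len(a), "both series must be aligned to video frames"
    best_score, best_lag = -1.0, 0
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:   # mouth trails audio by `lag` frames
            mm, aa = m[lag:], a[:len(a) - lag]
        else:          # mouth leads audio by `-lag` frames
            mm, aa = m[:lag], a[-lag:]
        score = float(np.mean(zscore(mm) * zscore(aa)))  # Pearson r
        if score > best_score:
            best_score, best_lag = score, lag
    return best_score, best_lag

def windowed_sync(mouth_opening, audio_energy, win=25, hop=12, threshold=0.3):
    """Score sliding windows; windows scoring below `threshold` are suspicious."""
    results = []
    for start in range(0, len(mouth_opening) - win + 1, hop):
        score, lag = sync_score(mouth_opening[start:start + win],
                                audio_energy[start:start + win])
        results.append((start, score, lag, score < threshold))
    return results

rng = np.random.default_rng(0)
energy = rng.random(100)              # audio energy per video frame (synthetic)
genuine_mouth = np.roll(energy, 2)    # mouth follows audio with a 2-frame delay
dubbed_mouth = rng.random(100)        # lip movement unrelated to the audio
```

The intuition is that genuine speech should correlate strongly at some small, consistent delay, whereas lip movements unrelated to the audio should not correlate well at any lag.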

Expression Tracking

Expression tracking checks emotional states such as calm, happy, sad, and fear against the interview situation to evaluate authenticity and engagement.
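Expression tracking can be summarized per interview segment once a facial-expression classifier has labelled each frame. In this minimal sketch, the per-frame labels are given as input, and the mapping from situations to plausible emotions (`EXPECTED_EMOTIONS`), the 0.6 consistency threshold, and the label-switch rate are hypothetical illustrations rather than the study’s criteria.

```python
from collections import Counter

# Hypothetical table of emotions considered plausible in each interview situation.
EXPECTED_EMOTIONS = {
    "greeting": {"happy", "calm"},
    "technical_question": {"calm", "confused"},
    "stress_question": {"calm", "confused", "fear"},
}

def expression_report(frame_emotions: list[str], situation: str,
                      min_expected_share: float = 0.6) -> dict:
    """Summarize per-frame emotion labels for one interview segment."""
    if not frame_emotions:
        raise ValueError("no frames to analyse")
    counts = Counter(frame_emotions)
    total = len(frame_emotions)
    expected = EXPECTED_EMOTIONS[situation]
    expected_share = sum(c for e, c in counts.items() if e in expected) / total
    # Frequent label switches may indicate unstable or synthetic expressions.
    switches = sum(a != b for a, b in zip(frame_emotions, frame_emotions[1:]))
    return {
        "dominant": counts.most_common(1)[0][0],
        "expected_share": expected_share,
        "switch_rate": switches / max(total - 1, 1),
        "consistent": expected_share >= min_expected_share,
    }

report = expression_report(["calm"] * 8 + ["fear"] * 2, "technical_question")
```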

Automated Interviewing Systems

The automated interviewing systems use the following techniques:

Deepfake Interview Bots

Some automated pipelines use deepfake avatars of recruiters to imitate human interviewers and increase realism.

Speech-to-Text Analysis

In speech-to-text analysis, candidates’ spoken responses are transcribed and scored for similarity against a ground-truth dataset.
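Once a speech recognizer (such as wav2vec2, mentioned above for lip-sync detection) has produced a transcript, similarity scoring against a ground-truth answer can be as simple as a word-level sequence match. This sketch assumes the transcript is already available and uses Python’s `difflib.SequenceMatcher` plus a hypothetical keyword-coverage check; the study’s actual similarity metric is not specified.

```python
import re
from difflib import SequenceMatcher

def normalize(text: str) -> list[str]:
    """Lowercase word tokens with punctuation removed."""
    return re.findall(r"[a-z0-9]+", text.lower())

def similarity_score(transcript: str, reference: str) -> float:
    """Word-level similarity in [0, 1]: 2 * matched_words / total_words."""
    return SequenceMatcher(None, normalize(transcript), normalize(reference),
                           autojunk=False).ratio()

def keyword_coverage(transcript: str, keywords: list[str]) -> float:
    """Fraction of required keywords that appear in the transcript."""
    words = set(normalize(transcript))
    return sum(k.lower() in words for k in keywords) / len(keywords)

reference = "A primary key uniquely identifies each row in a table."
transcript = "primary key is what uniquely identifies each row of the table"
score = similarity_score(transcript, reference)
coverage = keyword_coverage(transcript, ["primary", "key", "uniquely", "row"])
```

This score is purely lexical; a semantic-embedding model would also credit paraphrased answers that share few exact words with the reference.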

Thinking Pattern and Sentiment Analysis

While the candidate’s responses are cross-checked against the ground-truth dataset, NLP systems also classify each response as showing a positive, neutral, or negative pattern of engagement, which informs the hiring decision.
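The three-way categorization can be illustrated with a toy lexicon-based classifier. The word lists and the `margin` parameter are hypothetical; the study does not describe its NLP model, and a production system would use a trained sentiment classifier rather than hand-written lexicons.

```python
import re

# Toy lexicons for illustration only; a deployed system would use a trained model.
POSITIVE = {"enjoy", "enjoyed", "excited", "confident", "great", "successfully", "improved"}
NEGATIVE = {"hate", "boring", "difficult", "failed", "unfortunately", "worried", "stressful"}

def classify_sentiment(text: str, margin: int = 0) -> str:
    """Label a response positive, neutral, or negative by lexicon hit counts."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > margin:
        return "positive"
    if score < -margin:
        return "negative"
    return "neutral"
```

For example, `classify_sentiment("I worked on a database migration")` returns `"neutral"` because it contains no lexicon words.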

Monitoring and Proctoring Against Manipulation

To detect manipulation attempts by the candidate during proctored interviews, the following techniques are used:

Face Proctoring

A model such as YOLOv3 is used with OpenCV to detect suspicious activity, such as the presence of electronic devices or of additional people in the background during the interview. When such activity is detected, a warning is issued; if it is repeated, the interview is terminated.
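The warn-then-terminate rule can be sketched independently of the detector. This assumes a YOLOv3 model (for example, loaded with OpenCV’s `cv2.dnn.readNetFromDarknet`) has already produced the class labels for each frame; the detection step itself is not shown, and the choice of suspicious COCO classes and the single-warning budget are hypothetical.

```python
from dataclasses import dataclass

# COCO class names (the label set YOLOv3 is commonly trained on) treated as suspicious.
SUSPICIOUS_OBJECTS = {"cell phone", "laptop", "book"}

@dataclass
class ProctorState:
    max_warnings: int = 1      # violations tolerated before termination
    warnings: int = 0
    terminated: bool = False

def check_frame(labels: list[str], state: ProctorState) -> str:
    """Apply the warn-then-terminate policy to one frame's detected class labels."""
    if state.terminated:
        return "terminated"
    violation = (labels.count("person") > 1
                 or any(label in SUSPICIOUS_OBJECTS for label in labels))
    if not violation:
        return "ok"
    if state.warnings < state.max_warnings:
        state.warnings += 1
        return "warning"
    state.terminated = True
    return "terminated"

state = ProctorState()
frames = [["person"], ["person", "cell phone"], ["person"], ["person", "person"], ["person"]]
decisions = [check_frame(labels, state) for labels in frames]
```

Because a held phone appears in many consecutive frames, a real deployment would likely debounce detections (for example, counting one violation per episode rather than per frame) before escalating.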

Behavioral Monitoring

Behavioral monitoring detects the candidate’s sentiment and emotional state and cross-checks them against the text responses to detect fraud.
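One simple way to cross-check the emotional state against the text responses is to compare their valence. In this hypothetical sketch, each answer is reduced to a dominant facial emotion and a text sentiment label (both assumed to come from upstream models), and the valence tables are illustrative assumptions.

```python
# Hypothetical valence values for facial-emotion and text-sentiment labels.
EMOTION_VALENCE = {"happy": 1, "calm": 0, "surprised": 0, "confused": 0,
                   "sad": -1, "fear": -1, "angry": -1, "disgusted": -1}
SENTIMENT_VALENCE = {"positive": 1, "neutral": 0, "negative": -1}

def cross_check(answers: list[tuple[str, str]]) -> tuple[list[bool], float]:
    """answers: (dominant facial emotion, text sentiment) for each response.

    An answer is flagged when face and words have opposite valence, e.g. a
    fearful face while giving an upbeat answer. Returns flags and flag rate.
    """
    flags = [EMOTION_VALENCE.get(emotion, 0) * SENTIMENT_VALENCE[sentiment] < 0
             for emotion, sentiment in answers]
    return flags, sum(flags) / len(flags)

flags, rate = cross_check([("happy", "positive"), ("fear", "positive"), ("calm", "negative")])
```

A single mismatch is weak evidence, since nervous but honest candidates can show it too, so the flag rate is better treated as one signal among several.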

Discrepancy Flags

Discrepancy flags are raised when the model detects synchronization mismatches or unusual facial movements. If fraudulent activity is found, alerts are raised for the possible use of pre-recorded deepfakes, and the interview process is terminated.
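The flag-raising logic can be sketched as two rules over per-window signals: a sustained run of low lip-sync scores, and facial-landmark motion that is a statistical outlier for the session. The input signals, the thresholds, and the z-score rule are hypothetical; the study does not state how its flags are computed, and terminating the interview is left to the caller.

```python
import numpy as np

def discrepancy_flags(sync_scores, landmark_motion, sync_threshold=0.3,
                      min_consecutive=3, motion_z=3.0):
    """Return sorted (window_index, reason) flags for one interview.

    sync_scores: per-window audio-visual sync score (higher means better aligned).
    landmark_motion: per-window mean facial-landmark displacement.
    """
    flags = []
    run = 0
    for i, score in enumerate(sync_scores):
        run = run + 1 if score < sync_threshold else 0
        if run == min_consecutive:          # flag once per sustained mismatch
            flags.append((i, "sustained lip-sync mismatch"))
    motion = np.asarray(landmark_motion, dtype=float)
    std = motion.std()
    if std > 0:
        z = (motion - motion.mean()) / std
        for i in np.flatnonzero(np.abs(z) > motion_z):
            flags.append((int(i), "unusual facial movement"))
    return sorted(flags)

sync = [0.8] * 10 + [0.1] * 4 + [0.8] * 6   # synthetic per-window sync scores
motion = [1.0] * 19 + [10.0]                 # one abrupt facial-motion spike
flags = discrepancy_flags(sync, motion)
if flags:
    print("ALERT: possible pre-recorded or synthetic video:", flags)
```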

Model Optimization and Evaluation

Model optimization and evaluation are carried out in the following steps:

Training Approaches

The training approaches use several learning algorithms, including logistic regression, decision trees, gradient boosting, CNNs, and fine-tuned LLMs, each tested across the different modules [3].

Validation

Validation uses 10-fold cross-validation and reports accuracy, precision, recall, and F1-score.
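The 10-fold validation protocol and the four reported metrics can be written out explicitly. This dependency-free sketch uses a hypothetical `fit`/`predict` interface and a toy threshold classifier on separable data; scikit-learn’s `StratifiedKFold` and `cross_validate` provide production equivalents.

```python
import random

def k_fold_indices(n: int, k: int = 10, seed: int = 0):
    """Yield (train_idx, test_idx) for k shuffled folds covering range(n)."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, folds[i]

def binary_metrics(y_true, y_pred) -> dict:
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}

def cross_validate(X, y, fit, predict, k=10, seed=0) -> dict:
    """Average each metric over the k held-out folds."""
    per_fold = []
    for train, test in k_fold_indices(len(X), k, seed):
        model = fit([X[i] for i in train], [y[i] for i in train])
        preds = predict(model, [X[i] for i in test])
        per_fold.append(binary_metrics([y[i] for i in test], preds))
    return {m: sum(fold[m] for fold in per_fold) / k for m in per_fold[0]}

# Toy 1-D classifier: threshold halfway between the two class means.
def fit(X, y):
    pos = [x for x, label in zip(X, y) if label == 1]
    neg = [x for x, label in zip(X, y) if label == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2

def predict(threshold, X):
    return [1 if x > threshold else 0 for x in X]

X = [i / 50 for i in range(50)] + [2 + i / 50 for i in range(50)]
y = [0] * 50 + [1] * 50
results = cross_validate(X, y, fit, predict)
```

For imbalanced fraud data, stratified folds are usually preferable, and precision is undefined on a fold with no positive predictions (this sketch reports 0.0 in that case).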

Optimization

Optimization applies L1/L2 regularization to prevent overfitting, and quantization produces lightweight models for deployment on resource-limited platforms.
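Both optimization ideas can be made concrete. The sketch below shows an L1/L2-penalized loss and symmetric per-tensor int8 post-training quantization, which is one common lightweight-deployment scheme. The source does not say which quantization method was used, so this choice, and the synthetic weight matrix, are assumptions.

```python
import numpy as np

def regularized_loss(data_loss: float, weights: np.ndarray,
                     l1: float = 0.0, l2: float = 0.0) -> float:
    """data_loss + l1 * ||w||_1 + l2 * ||w||_2^2 (L1, L2, or both)."""
    return float(data_loss + l1 * np.abs(weights).sum() + l2 * np.square(weights).sum())

def quantize_int8(weights: np.ndarray):
    """Symmetric per-tensor int8 quantization: weights ~ scale * q, q in [-127, 127]."""
    max_abs = float(np.max(np.abs(weights)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize_int8(q: np.ndarray, scale: float) -> np.ndarray:
    return q.astype(np.float32) * np.float32(scale)

rng = np.random.default_rng(0)
w = rng.normal(0.0, 0.05, size=(256, 128)).astype(np.float32)  # synthetic weights
q, scale = quantize_int8(w)
max_error = float(np.max(np.abs(dequantize_int8(q, scale) - w)))
```

Storing `q` instead of float32 weights cuts memory by a factor of four, at the cost of a rounding error of at most half a quantization step per weight.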

Performance Benchmarks

The following performance benchmarks were achieved: lip-sync detection reached 93 percent accuracy, and resume shortlisting reached 92 percent accuracy. In addition, the semantic similarity score showed a significant difference between the correct and unclear replies that candidates gave during the interview.

Conclusion

Deepfake technology has become a major threat to recruitment and corporate security because it enables fraudulent job applications and CEO fraud. The proposed approach shows that using AI to improve hiring efficiency is not enough; AI must also strengthen defenses. ML models, CNNs, audio-video synchronization checks, and emotion-tracking systems support candidate assessment and help detect deepfakes. Mechanisms such as proctoring systems and AI-driven chatbots increase the engagement and reliability of the interview process. Protecting the digital hiring process requires a comprehensive integration of assessment, verification, and monitoring to ensure authenticity and reduce the danger of AI-driven attacks.

References

- Diaa Salama AbdElminaam, Noha ElMasry, Youssef Talaat, Mohamed Adel, Ahmed Hisham, Kareem Atef, Abdelrahman Mohamed, and Mohamed Akram. HR-chat bot: Designing and building effective interview chat-bots for fake CV detection. In 2021 International Mobile, Intelligent, and Ubiquitous Computing Conference (MIUCC), pages 403–408. IEEE, 2021.

- Yash Chaudhari, Prathamesh Jadhav, and Yashvardhan Gupta. An end to end solution for automated hiring. In 2022 Fourth International Conference on Emerging Research in Electronics, Computer Science and Technology (ICERECT), pages 1–6. IEEE, 2022.

- GC Dixon, S Srithanujan, HDTN Ranaweera, RMP M Ariyarathne, KL Weerasinghe, and N Gamage. ML-driven HR system: Candidate assessment for enhanced recruitment outcomes. In 2024 3rd International Conference on Automation, Computing and Renewable Systems (ICACRS), pages 1572–1577. IEEE, 2024.

- Ebba Lundberg and Peter Mozelius. The potential effects of deepfakes on news media and entertainment. AI & SOCIETY, 40(4):2159–2170, 2025.

- Tirthesh Patange, Swati Shinde, Pratik Nikat, Chaitanya Nalawade, and Prathamesh Pandit. Enhancing virtual hiring through AI-driven fake lip-sync detection and career chatbot. In 2025 Global Conference in Emerging Technology (GINOTECH), pages 1–6. IEEE, 2025.

- V. Arya, A. Gaurav, B. B. Gupta, C. H. Hsu, and H. Baghban. Detection of malicious node in VANETs using digital twin. In International Conference on Big Data Intelligence and Computing, pages 204–212. Springer Nature Singapore, 2022.

- Y. Lu, Y. Guo, R. W. Liu, K. T. Chui, and B. B. Gupta. GradDT: Gradient-guided despeckling transformer for industrial imaging sensors. IEEE Transactions on Industrial Informatics, 19(2):2238–2248, 2022.

- A. Sedik, Y. Maleh, G. M. El Banby, A. A. Khalaf, F. E. Abd El-Samie, B. B. Gupta, …, and A. A. Abd El-Latif. AI-enabled digital forgery analysis and crucial interactions monitoring in smart communities. Technological Forecasting and Social Change, 177:121555, 2022.

- L. A. Renier, K. Shubham, R. S. Vijay, S. S. Mishra, E. P. Kleinlogel, D. B. Jayagopi, and M. Schmid Mast. A deepfake-based study on facial expressiveness and social outcomes. Scientific Reports, 14(1):3642, 2024.

- N. Prasher, K. K. Mishra, and Z. Shamsuddin. Impact of deepfake technology on job satisfaction and commitment: Case analysis. In Mastering Deepfake Technology: Strategies for Ethical Management and Security, pages 271–288. River Publishers, 2025.

Cite As

Kalyan C S N (2025) Deepfake Job Interviews and CEO Impersonation: AI in Social Engineering Attacks, Insights2Techinfo, pp.1