By: C S Nakul Kalyan, CCRI, Asia University, Taiwan

Abstract

Deepfake pornography is a problematic issue at the intersection of artificial intelligence, consent, and digital harassment. Face-swapping and other generative technologies are used to fabricate realistic sexual content without consent, mainly targeting women, and this expands image-based sexual abuse. This study describes the technological foundations of deepfakes and places them within broader ethical, legal, and societal harms [4]. It examines victims' autonomy and privacy, as well as the expanding role of online platforms in spreading such content [2]. The analysis considers legal measures, including frameworks such as the General Data Protection Regulation (GDPR), US legislative proposals, and the EU's Digital Services Act. Finally, we discuss regulatory challenges and potential solutions [4], stressing the need for stronger protections and technological interventions that prevent abuse while supporting responsible AI progress.

Keywords

Deepfake Pornography, Digital Harassment, Artificial Intelligence, Face Swapping, General Data Protection Regulation (GDPR).

Introduction

Digital technology has always carried the potential for both innovation and abuse, and artificial intelligence (AI) sits at the center of this duality. AI increasingly takes over tasks once done by people, driving advances in fields such as healthcare, education, and communication. The same capabilities can be misused to enable cybercrime, online harassment, and digital manipulation. One of the most dangerous applications of AI today is deepfake technology, which uses machine learning and deep learning to transfer a person's likeness from one image or video onto another, producing hyper-realistic content. Deepfakes have been abused above all in deepfake pornography, where non-consensual images and videos are created and used as a form of assault. Such misuse causes victims psychological suffering, reputational ruin, and long-term digital abuse, and it has raised major social concern about how to balance safety and innovation. In this article, we examine deepfake pornography as a form of digital harassment, focusing on its technological basis and the legislative issues it raises, and consider how society might manage the growing tension between AI innovation and the misuse of deepfakes.

Proposed Methodology

The proposed methodology explains how deepfake content is created, presents a case study analysis, and outlines preventive measures against the misuse of these technologies in real-world scenarios, including the legal frameworks summarized in Table 1. The steps are:

Table 1: Legal Frameworks Addressing Deepfake Pornography by Jurisdiction

| Jurisdiction | Legislation | Key Provisions |
|---|---|---|
| United States | National Defense Authorization Act (NDAA) | Criminalizes non-consensual deepfakes |
| European Union | General Data Protection Regulation (GDPR) | Privacy protection |
| United Kingdom | Online Safety Act | Content removal obligations |
| Australia | Online Safety Act | Rapid removal notices |

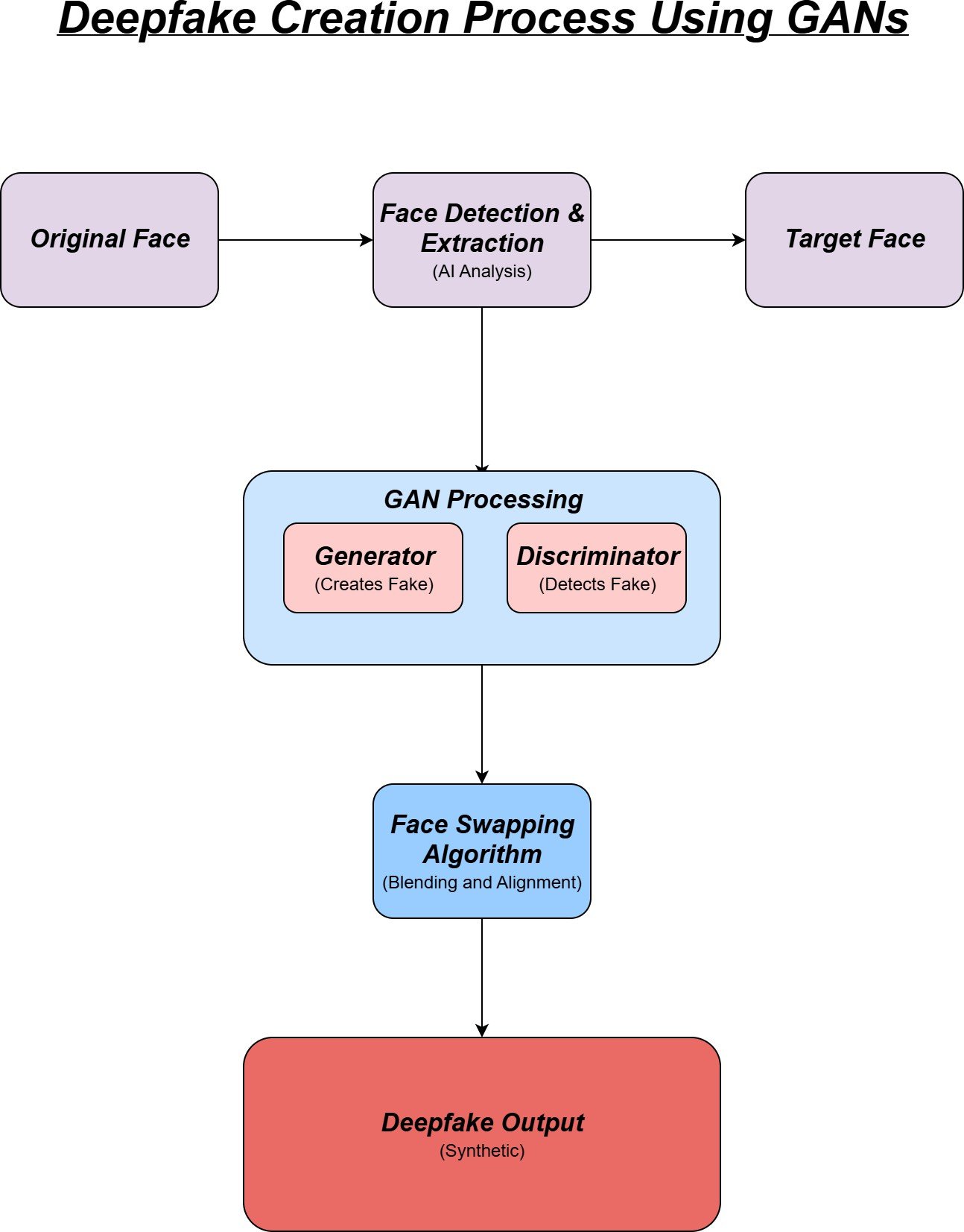

Technical Overview of Deepfake Creation

Deepfake content is generated with machine learning models such as Generative Adversarial Networks (GANs) and autoencoders, which can perform face swapping, voice synthesis, and other forms of forgery. In this step [4], we briefly explain how source images and audio are manipulated to generate deepfake pornography; the technical process is shown in Figure 1.

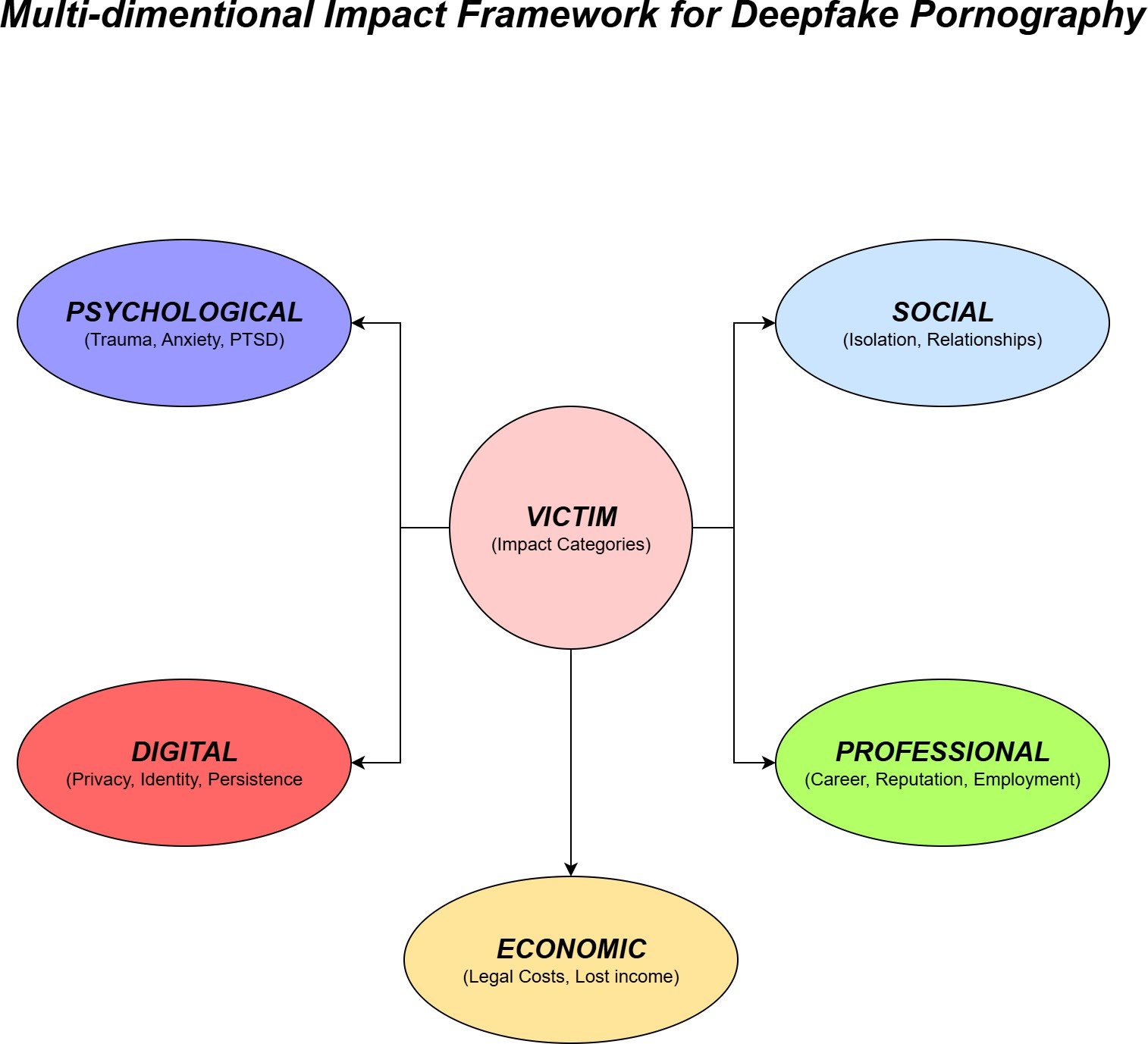
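The article names GANs without detailing how they learn. As general background, and not as a description of any specific deepfake tool, the standard GAN training objective pits a generator $G$, which maps random noise $z$ to a synthetic sample, against a discriminator $D$, which outputs the probability that its input is a real sample $x$:

$$
\min_{G} \max_{D} \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_{z}}\left[\log\left(1 - D(G(z))\right)\right]
$$

Training alternates between improving $D$ at telling real from synthetic samples and improving $G$ at fooling $D$. One consequence relevant to the detection measures discussed later is that the generator is explicitly optimized to evade a learned detector, so detection tends to become an ongoing arms race.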

Image-Based Sexual Abuse

Deepfake pornography is also classified as a form of image-based sexual abuse (IBSA) [5]. This classification places non-consensual deepfake pornography alongside other abusive practices, such as revenge pornography and the non-consensual sharing of intimate images [2]. It connects the psychological and social harms of deepfake pornography to a broader pattern of sexual exploitation and harassment, as illustrated by the multidimensional impact framework shown in Figure 2.

Case Study Analysis of Deepfake Pornography

A case study approach is used to examine real-life scenarios in which deepfake pornography is misused for harassment, blackmail, and similar purposes. The case studies are also used to identify the motives behind such misuse, such as revenge and extortion [2].

Ethical and Social Implications

This step provides an ethical analysis of deepfake pornography [3], examining autonomy, privacy, dignity, and consent, as well as the social normalization of such digital content. It also considers how cultural perceptions of online harassment affect victims and shape social responses [4].

Legal and Policy Frameworks

A legal analysis is used to examine how different jurisdictions handle deepfake pornography. It reviews existing laws, such as the U.S. National Defense Authorization Act (NDAA), the EU's Digital Services Act, and regulations on personal data processing [5]. It also examines enforcement gaps and the obstacles to identifying perpetrators.

Platform Accountability

Another part of the methodology evaluates platform accountability, analyzing the role of social media companies, content-hosting platforms, and other websites in the circulation of deepfake pornography [1]. The study considers whether these online platforms should be required to deploy detection technologies and to report transparently on synthetic media.
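The article does not specify which detection technologies platforms should use. One widely discussed building block, offered here only as an illustrative assumption, is hash matching: a platform stores compact fingerprints of images that victims have reported and compares each new upload against them, so removed content cannot simply be re-posted. The minimal sketch below uses a basic "average hash" on grayscale pixel grids; the function names, the 8x8 grid, and the 5-bit match threshold are hypothetical choices. Production systems rely on more robust perceptual hashes (for example PDQ or PhotoDNA), and this technique only recognizes copies of already-reported images, not newly generated deepfakes.

```python
from typing import List, Sequence

# Hypothetical representation: a grayscale image as rows of pixel values (0-255).
Image = Sequence[Sequence[float]]


def downscale(img: Image, size: int = 8) -> List[List[float]]:
    """Box-average the image into a size x size grid of cell means."""
    h, w = len(img), len(img[0])
    if h < size or w < size:
        raise ValueError("image is smaller than the hash grid")
    cells = []
    for i in range(size):
        r0, r1 = i * h // size, (i + 1) * h // size
        row = []
        for j in range(size):
            c0, c1 = j * w // size, (j + 1) * w // size
            block = [img[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            row.append(sum(block) / len(block))
        cells.append(row)
    return cells


def average_hash(img: Image, size: int = 8) -> int:
    """Set one bit per cell: 1 if the cell is brighter than the grid mean."""
    flat = [v for row in downscale(img, size) for v in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for v in flat:
        bits = (bits << 1) | (1 if v > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def matches_reported(upload: Image, reported_hashes: Sequence[int],
                     max_distance: int = 5) -> bool:
    """Flag an upload whose hash is within max_distance bits of a reported hash."""
    h = average_hash(upload)
    return any(hamming(h, r) <= max_distance for r in reported_hashes)


if __name__ == "__main__":
    # Toy 16x16 gradient standing in for an image a victim has reported.
    reported = [[r * 16 + c for c in range(16)] for r in range(16)]
    reported_hashes = [average_hash(reported)]
    brightened = [[v + 10 for v in row] for row in reported]  # lightly edited re-upload
    unrelated = [[255 - v for v in row] for row in reported]  # different content
    print(matches_reported(brightened, reported_hashes))  # True
    print(matches_reported(unrelated, reported_hashes))   # False
```

Comparing each pixel block to the image's own mean makes the hash unchanged by a uniform brightness shift, which is why the brightened copy still matches; a tolerance in Hamming distance absorbs small edits such as recompression.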

Preventive Measures

The proposed solutions integrate technical, legal, and ethical perspectives and include:

Human-Centered AI Approaches

These approaches minimize the misuse of AI technologies by embedding safeguards into AI design [5].

Stronger Legislation

Regulation should explicitly target deepfake pornography [4].

Detection Technologies

This involves developing reliable detection tools and encouraging collaboration among governments, researchers, and platforms.

Victim Redress Mechanisms

This involves creating easier reporting, support, and recovery pathways for victims of deepfake sexual abuse [2].

Conclusion

Deepfake pornography represents a negative side of AI progress, turning the technology into a tool for non-consensual sexual abuse and digital harassment. Although existing laws, policies, and acts provide some relief, enforcement is patchy, and victims face major barriers to remedy. Meeting this challenge requires a combined approach: strengthening legal safeguards, holding platforms accountable, advancing detection technologies, and fostering social awareness. With these integrated measures, innovation can be balanced with the protection of privacy, dignity, and consent in the digital age.

References

- Kumar, S. Legal implications of deepfake technology: Privacy, consent, and copyright.

- Laffier, J., & Rehman, A. (2023). Deepfakes and harm to women. Journal of Digital Life and Learning, 3(1), 1–21.

- McGlynn, C., & Toparlak, R. T. (2025). The ‘new voyeurism’: Criminalizing the creation of ‘deepfake porn’. Journal of Law and Society, 52(2), 204–228.

- Meskys, E., Kalpokiene, J., Jurcys, P., & Liaudanskas, A. (2020). Regulating deep fakes: Legal and ethical considerations. Journal of Intellectual Property Law & Practice, 15(1), 24–31.

- Okolie, C. (2023). Artificial intelligence-altered videos (deepfakes), image-based sexual abuse, and data privacy concerns. Journal of International Women’s Studies, 25(2), 11.

- Arya, V., Gaurav, A., Gupta, B. B., Hsu, C. H., & Baghban, H. (2022, December). Detection of malicious node in vanets using digital twin. In International Conference on Big Data Intelligence and Computing (pp. 204-212). Singapore: Springer Nature Singapore.

- Lu, Y., Guo, Y., Liu, R. W., Chui, K. T., & Gupta, B. B. (2022). GradDT: Gradient-guided despeckling transformer for industrial imaging sensors. IEEE Transactions on Industrial Informatics, 19(2), 2238-2248.

- Sedik, A., Maleh, Y., El Banby, G. M., Khalaf, A. A., Abd El-Samie, F. E., Gupta, B. B., … & Abd El-Latif, A. A. (2022). AI-enabled digital forgery analysis and crucial interactions monitoring in smart communities. Technological Forecasting and Social Change, 177, 121555.

- Karasavva, V., & Noorbhai, A. (2021). The real threat of deepfake pornography: A review of Canadian policy. Cyberpsychology, Behavior, and Social Networking, 24(3), 203-209.

- Gieseke, A. P. (2020). “The new weapon of choice”: Law’s current inability to properly address deepfake pornography. Vand. L. Rev., 73, 1479.

- Story, D., & Jenkins, R. (2023). Deepfake pornography and the ethics of non-veridical representations. Philosophy & Technology, 36(3), 56.

Cite As

Kalyan C S N (2025) Deepfake Pornography: Technology, Consent, and Digital Harassment, Insights2Techinfo, pp.1