By: C S Nakul Kalyan, CCRI, Asia University, Taiwan

Abstract

The rise of artificial intelligence (AI) has enabled realistic deepfakes in which facial appearances and voices are altered without the authorization of the individuals portrayed. While most attention goes to visual realism, the manipulation of emotional expressions in synthetic media receives less emphasis. Using a well-established database, this study examines how emotional expression is transferred in face-swap-based deepfakes. The analysis is based on photograms (frames) extracted from both original and manipulated videos, and emotional expressions were tested across all performers. Emotional expressions are transferred inconsistently between the original and fake recordings, which affects both generation quality and detectability. We also look at emotional AI from the perspective of public perception. This technology is designed to profile, interpret, and respond to human emotions. A nationally representative survey revealed a fear of manipulation in important areas, such as emotion manipulation on social media, which includes deepfakes, misinformation, and other conspiracy content. Participants were also concerned about whether the technology can be trusted and whether it could misuse the data that users provide. This study suggests a way to address these misuses of the technology for emotional manipulation.

Keywords

Emotional manipulation, Fake feelings, Deepfakes, Synthetic media, Photograms

Introduction

Advances in AI have led to sophisticated deepfakes, in which facial appearances and voices are altered through face swapping and other generative techniques [4]. The synthetic media produced by deepfakes is nearly indistinguishable from original data. Deepfakes are created without the consent of the individuals depicted and can be misused for blackmail, market manipulation, and harassment [3]. Facial expression is the primary medium through which human emotions are shown. Facial expression recognition (FER) systems, which use deep learning, have advanced capabilities for detecting emotional states in images and videos [5]. Recent research found that emotional expressions are transferred inconsistently between original and manipulated recordings, with high variability that depends on the expression and on the performer [2].

Emotional AI technologies, which detect, interpret, and respond to human emotions using computer vision, audio-video analysis, and biometric sensing, are gaining a foothold in social, commercial, and regulatory domains [1]. These technologies offer advantages such as improved user experience, but they also raise concerns about privacy, bias, and the possibility of covertly influencing emotional and cognitive states. In this article, we discuss the development of efficient emotion detection techniques, protections, and legal frameworks.

Literature Review

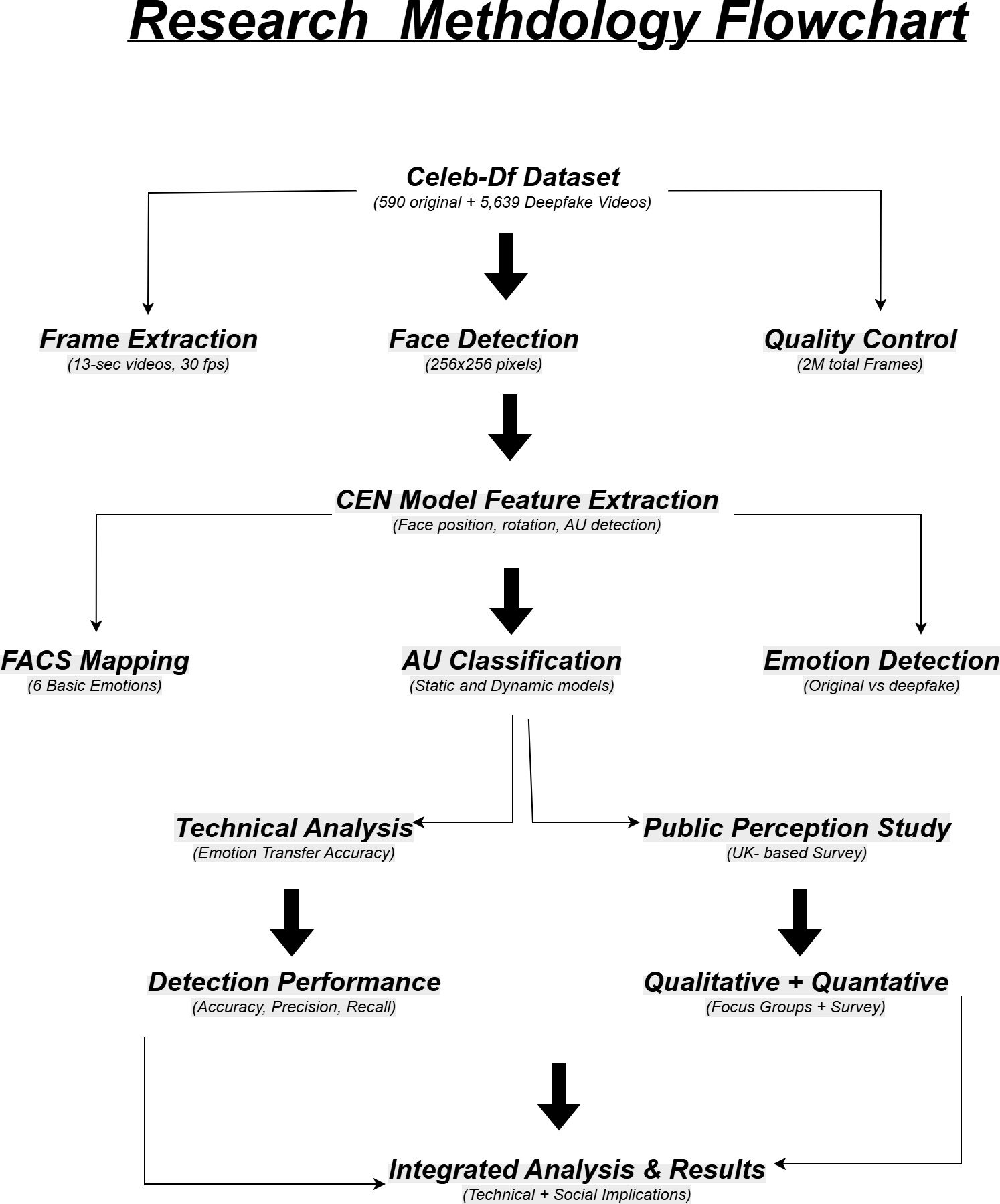

This study proposes a mixed methodology that integrates deepfake emotional expression analysis with the public perception of emotional AI’s manipulative potential [1], as shown in Figure 1. It consists of the following parts:

Materials

The dataset used here is Celeb-DF, which is made up of 590 original and 5,639 deepfake videos. Each video is about 13 seconds long at 30 fps, and together the videos yield around 2 million frames. The dataset features 59 celebrities of varying gender, age, and other attributes [2]. The real videos are taken from YouTube, and the deepfake videos are generated through a deepfake framework that combines Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs), with improvements such as a higher resolution of 256×256 pixels, reduced color mismatches, and improved face mask generation for smoother blending [4].
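As a rough sanity check on the frame count, the figures above can be multiplied out. This is only a back-of-the-envelope sketch: it assumes every video lasts exactly 13 seconds, whereas real clip lengths vary, and the names used are illustrative.

```python
# Figures taken from the dataset description above.
ORIGINAL_VIDEOS = 590
DEEPFAKE_VIDEOS = 5_639
FPS = 30

# Assumption: every clip lasts exactly 13 seconds (real lengths vary).
SECONDS_PER_VIDEO = 13


def estimated_frames(videos: int, seconds: float = SECONDS_PER_VIDEO, fps: int = FPS) -> int:
    """Estimate the number of frames in `videos` clips of equal length."""
    return int(videos * seconds * fps)


total = estimated_frames(ORIGINAL_VIDEOS + DEEPFAKE_VIDEOS)
print(f"Estimated frames: {total:,}")
```

Under this assumption the estimate comes to roughly 2.4 million frames, the same order of magnitude as the figure quoted for the dataset.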

Emotion Representation

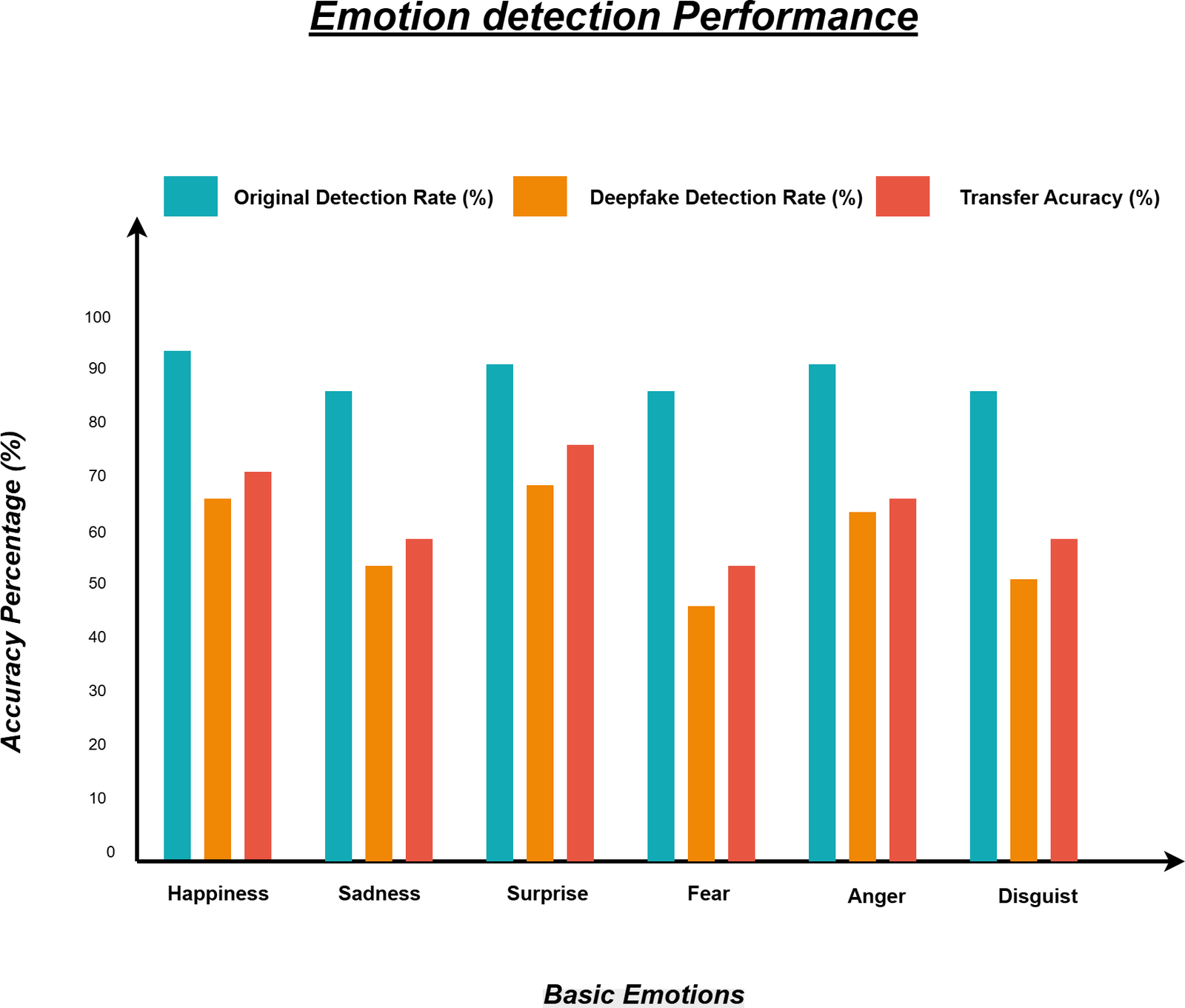

Facial expressions were analyzed using the Facial Action Coding System (FACS), which describes facial movements in terms of Action Units (AUs) [2]. With this system, six basic facial expressions are detected: Happiness, Sadness, Surprise, Fear, Anger, and Disgust. This is how all the basic emotions are represented here. The emotion transfer accuracy is shown in Table 1, and the accuracy comparison is shown in Figure 2.
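To illustrate how an AU-to-emotion mapping can work, the sketch below encodes one commonly cited set of FACS prototypes for the six basic emotions. The exact AU combinations used in [2] are not given here, so the table `EMOTION_AUS` and the helper `emotions_in_frame` are assumptions made for illustration.

```python
from __future__ import annotations

# One commonly cited set of FACS prototypes for the six basic emotions.
# Assumption: the AU combinations used in the cited study may differ.
EMOTION_AUS: dict[str, set[int]] = {
    "Happiness": {6, 12},
    "Sadness": {1, 4, 15},
    "Surprise": {1, 2, 5, 26},
    "Fear": {1, 2, 4, 5, 7, 20, 26},
    "Anger": {4, 5, 7, 23},
    "Disgust": {9, 15, 17},
}


def emotions_in_frame(active_aus: set[int]) -> list[str]:
    """Return every emotion whose full AU prototype is active in the frame."""
    return [name for name, aus in EMOTION_AUS.items() if aus <= active_aus]


print(emotions_in_frame({6, 12, 25}))
print(emotions_in_frame({1, 2, 4, 5, 7, 20, 26}))
```

Because prototypes overlap (the Surprise AUs in this table are a subset of the Fear AUs), a single frame can match more than one emotion. A real pipeline would need a rule for resolving such ties.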

Table 1: Comparison of Emotion Transfer Accuracy

| Emotion | Original detection rate (%) | Deepfake detection rate (%) | Consistency score |
| --- | --- | --- | --- |
| Happiness | 94.2 | 67.8 | 0.72 |
| Sadness | 89.5 | 54.3 | 0.61 |
| Surprise | 91.8 | 71.2 | 0.78 |
| Fear | 87.3 | 48.9 | 0.56 |
| Anger | 92.1 | 63.4 | 0.69 |
| Disgust | 88.7 | 52.7 | 0.59 |
| Average | 90.6 | 59.7 | 0.66 |

Figure 2: Emotion transfer accuracy
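The consistency scores in Table 1 match, to two decimals, the ratio of the deepfake detection rate to the original detection rate. The text does not state how the score was computed, so treating it as this ratio is an assumption. The sketch below simply checks that reading against the table's values.

```python
# Rows of Table 1: emotion -> (original rate %, deepfake rate %, reported score).
TABLE_1 = {
    "Happiness": (94.2, 67.8, 0.72),
    "Sadness": (89.5, 54.3, 0.61),
    "Surprise": (91.8, 71.2, 0.78),
    "Fear": (87.3, 48.9, 0.56),
    "Anger": (92.1, 63.4, 0.69),
    "Disgust": (88.7, 52.7, 0.59),
}


def consistency(original_rate: float, deepfake_rate: float) -> float:
    """Assumed definition: share of the original detection rate the deepfake retains."""
    return round(deepfake_rate / original_rate, 2)


for emotion, (orig, fake, reported) in TABLE_1.items():
    computed = consistency(orig, fake)
    print(f"{emotion:<10} computed={computed:.2f} reported={reported:.2f}")
```

Read this way, Fear and Disgust retain the least of their original detection rate, while Surprise and Happiness retain the most.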

Procedure

Feature extraction is performed with a Convolutional Experts Network (CEN) model, which is also used to detect face position, rotation, AU presence, and AU intensity for each video frame. Two AU prediction models were applied: static (single-frame) and dynamic (video-calibrated). The analysis covered both original and fake frames and produced a total of 23.4 GB of feature data. Emotion-related frames were then filtered using the AU-to-emotion mapping, yielding a detailed comparison. Fake emotional expressions are detected using these metrics [4].
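The frame-level comparison described above can be sketched as follows. This is a minimal illustration, not the study's actual pipeline. It assumes each original frame has an aligned deepfake frame at the same index and that every frame already carries an emotion label, or `None` when no emotion was detected. The function name `transfer_rates` and the sample labels are hypothetical.

```python
from __future__ import annotations

from collections import Counter


def transfer_rates(original: list[str | None], fake: list[str | None]) -> dict[str, float]:
    """For each emotion seen in the original, return the share of its frames
    whose aligned deepfake frame shows the same emotion."""
    if len(original) != len(fake):
        raise ValueError("frame sequences must be aligned and equally long")
    seen = Counter()  # frames per emotion in the original video
    kept = Counter()  # of those, frames where the deepfake agrees
    for orig_label, fake_label in zip(original, fake):
        if orig_label is None:
            continue  # keep only emotion-related frames
        seen[orig_label] += 1
        if fake_label == orig_label:
            kept[orig_label] += 1
    return {emotion: kept[emotion] / count for emotion, count in seen.items()}


# Hypothetical per-frame labels for one original/deepfake pair.
original_labels = ["Happiness", "Happiness", None, "Fear", "Fear", "Happiness", "Fear", "Fear"]
fake_labels = ["Happiness", None, None, "Fear", "Sadness", "Happiness", None, "Surprise"]

rates = transfer_rates(original_labels, fake_labels)
print(rates)
```

In this toy example, two of the three Happiness frames survive the swap but only one of the four Fear frames does, which is the kind of per-emotion gap the comparison is meant to surface.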

Public Perception Study

To investigate perceptions of emotional profiling technologies, a two-stage UK-based study was carried out [1], consisting of:

Stage 1 (Qualitative)

Ten online focus groups used design fiction and ContraVision techniques to explore the benefits and harms that may arise, mainly on social media [1].

Stage 2 (Quantitative)

Stage 2 is a quantitative survey that measures attitudes toward emotional AI’s manipulative capacity. The sample was balanced by gender, economic status, income, and age (18+), among other factors.

Conclusion

This study is the first to look specifically at how deepfakes render facial expressions of emotion. Deepfakes generated through face-swap algorithms repeatedly fail to replicate the emotional expressions of the original recordings accurately. Because they vary with the emotion and the performer, the inconsistencies between original and fake recordings offer a path for deepfake detection. These results highlight the need for detection algorithms to use emotion-based signals as trustworthy markers of manipulation, and for deepfake generation techniques to maintain emotional accuracy.

References

- Vian Bakir, Alexander Laffer, Andrew McStay, Diana Miranda, and Lachlan Urquhart. On manipulation by emotional AI: UK adults’ views and governance implications. Frontiers in Sociology, 9:1339834, 2024.

- Juan-Miguel López-Gil, Rosa Gil, and Roberto García. Do deepfakes adequately display emotions? A study on deepfake facial emotion expression. Computational Intelligence and Neuroscience, 2022(1):1332122, 2022.

- Kareem Mohamed and Bahire Efe Ozad. AI-driven media manipulation: Public awareness, trust, and the role of detection frameworks in addressing deepfake technologies / Yapay zeka destekli medya manipülasyonu: Kamuoyu farkındalığı, güven ve deepfake teknolojilerini ele almada algılama çerçevelerinin.

- Anwar Mohammed. Deep fake detection and mitigation: Securing against AI-generated manipulation. Journal of Computational Innovation, 4(1), 2024.

- Jamshir Qureshi and Samina Khan. Deciphering deception–the impact of AI deepfakes on human cognition and emotion. 2024.

- Lu, Y., Guo, Y., Liu, R. W., Chui, K. T., & Gupta, B. B. (2022). GradDT: Gradient-guided despeckling transformer for industrial imaging sensors. IEEE Transactions on Industrial Informatics, 19(2), 2238-2248.

- Sedik, A., Maleh, Y., El Banby, G. M., Khalaf, A. A., Abd El-Samie, F. E., Gupta, B. B., … & Abd El-Latif, A. A. (2022). AI-enabled digital forgery analysis and crucial interactions monitoring in smart communities. Technological Forecasting and Social Change, 177, 121555.

- Agrawal, D. P., Gupta, B. B., Yamaguchi, S., & Psannis, K. E. (2018). Recent Advances in Mobile Cloud Computing. Wireless Communications and Mobile Computing, 2018.

- Goyal, S., Kumar, S., Singh, S. K., Sarin, S., Priyanshu, Gupta, B. B., … & Colace, F. (2024). Synergistic application of neuro-fuzzy mechanisms in advanced neural networks for real-time stream data flux mitigation. Soft Computing, 28(20), 12425-12437.

- Li, M., Ahmadiadli, Y., & Zhang, X. P. (2025). A survey on speech deepfake detection. ACM Computing Surveys, 57(7), 1-38.

- Sharma, V. K., Garg, R., & Caudron, Q. (2025). A systematic literature review on deepfake detection techniques. Multimedia Tools and Applications, 84(20), 22187-22229.

- Kumar, A., Singh, D., Jain, R., Jain, D. K., Gan, C., & Zhao, X. (2025). Advances in DeepFake detection algorithms: Exploring fusion techniques in single and multi-modal approach. Information Fusion, 102993.

Cite As

Kalyan C S N (2025) Emotion Manipulation in Deepfakes: Faking Feelings with AI, Insights2Techinfo, pp.1