By: Akshat Gaurav, Ronin Institute, Montclair, NJ, USA

Memory management is a fundamental aspect of operating systems that encompasses various techniques, including memory paging, swapping, and segmentation. These methods allow efficient allocation and use of a computer’s physical memory, significantly impacting system performance, resource management, and application execution. Notably, they enable operating systems to run multiple processes concurrently, optimizing memory usage and reducing latency in computing environments. Each technique presents distinct advantages and challenges, making them pivotal to modern computing systems.

Memory paging divides a process’s memory into fixed-size blocks, or pages, facilitating the flexible allocation of physical memory and minimizing fragmentation. This approach enhances performance through demand paging, which loads only the required pages into RAM, optimizing memory resources. However, it can lead to issues such as thrashing when excessive page faults occur, where the system spends more time resolving these faults than executing applications. Swapping, on the other hand, involves transferring inactive processes between RAM and disk-based swap space, which can enhance responsiveness but may also introduce latency if poorly managed. Both techniques underscore the importance of balancing immediate memory needs with long-term efficiency in resource utilization.

Segmentation further complements these methods by allowing variable-sized segments that represent logical units of a program, such as code and data. This technique provides better alignment with a program’s structure, enabling dynamic memory allocation and improved protection. However, segmentation can lead to external fragmentation, complicating memory management. Notably, the two can be combined in a hybrid approach (segmentation with paging) that leverages the strengths of both techniques, although many current systems rely primarily on paging.

The interplay between paging, swapping, and segmentation is vital in addressing the increasing demands of contemporary computing, from personal devices to enterprise applications. Understanding how these techniques work together informs developers and system administrators on effectively managing memory, ensuring high performance, and navigating potential controversies related to system efficiency and resource allocation strategies.

Memory Paging

Memory paging is a crucial memory management scheme in computer operating systems that allows non-contiguous allocation of physical memory for programs, thereby improving efficiency and system performance. It does so by dividing a process’s address space into equal-sized blocks called “pages” and physical memory into blocks of the same size called “frames”, so that any page can be placed in any free frame [1][2].

Functionality of Paging

Paging works by loading only the necessary pages of a program into RAM when needed, a technique known as demand paging. This minimizes RAM usage and optimizes performance, especially for applications with diverse memory requirements [3]. When a program requires data that is not currently in physical memory, a page fault occurs, prompting the operating system to retrieve the needed page from secondary storage [4]. The operating system uses a data structure known as a page table to map virtual pages to physical frames in memory [5].
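The lookup described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular kernel implements it: the 4 KiB page size, the dictionary used as a page table, and the names `translate`, `access`, and `free_frames` are all assumptions made for the example.

```python
PAGE_SIZE = 4096  # bytes per page; 4 KiB is assumed for this sketch


class PageFault(Exception):
    """Raised when a virtual page has no frame mapped in the page table."""


def translate(page_table, virtual_address):
    """Map a virtual address to a physical address using a page table."""
    page_number, offset = divmod(virtual_address, PAGE_SIZE)
    frame = page_table.get(page_number)
    if frame is None:
        raise PageFault(page_number)
    return frame * PAGE_SIZE + offset


def access(page_table, free_frames, virtual_address):
    """Translate an address, mapping the page on demand if it is missing."""
    try:
        return translate(page_table, virtual_address)
    except PageFault as fault:
        # Demand paging: the page gets a frame only once it is touched.
        # A real kernel would also read the page contents from disk here.
        page_table[fault.args[0]] = free_frames.pop()
        return translate(page_table, virtual_address)


page_table = {0: 5}     # virtual page 0 lives in physical frame 5
free_frames = [9, 2]    # frames not yet assigned to any page

print(translate(page_table, 100))             # 5 * 4096 + 100 = 20580
print(access(page_table, free_frames, 4100))  # page 1 faults, gets frame 2: 8196
```

The offset within the page is carried over unchanged; only the page number is replaced by a frame number.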

Page Faults

A page fault is an exception raised when a process attempts to access data that is not loaded in physical memory or not yet mapped into its address space. The handling of page faults is typically automated: the operating system’s kernel either maps the page or, if the access is invalid, denies it [4]. There are two categories of page faults: minor page faults (or soft faults), which occur when the required page is already in memory (for example, because other processes share it) but is not yet mapped for the faulting process, and major page faults (or hard faults), which require loading the page from disk [4].
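The distinction between the two categories can be expressed as a small decision function. This is a simplified sketch: the function name `classify_fault`, the string page identifiers, and the set `resident_pages` (standing in for everything currently held in RAM) are hypothetical and chosen only for illustration.

```python
def classify_fault(page, process_page_table, resident_pages):
    """Return 'hit', 'minor', or 'major' for one access to `page`."""
    if page in process_page_table:
        return "hit"    # already mapped for this process: no fault
    if page in resident_pages:
        return "minor"  # in RAM (for example, shared), only needs mapping
    return "major"      # not in RAM: must be read from disk


process_page_table = {"app:0": 12}         # pages this process has mapped
resident_pages = {"app:0", "libc:3"}       # pages present in RAM system-wide

for page in ("app:0", "libc:3", "app:7"):
    print(page, classify_fault(page, process_page_table, resident_pages))
```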

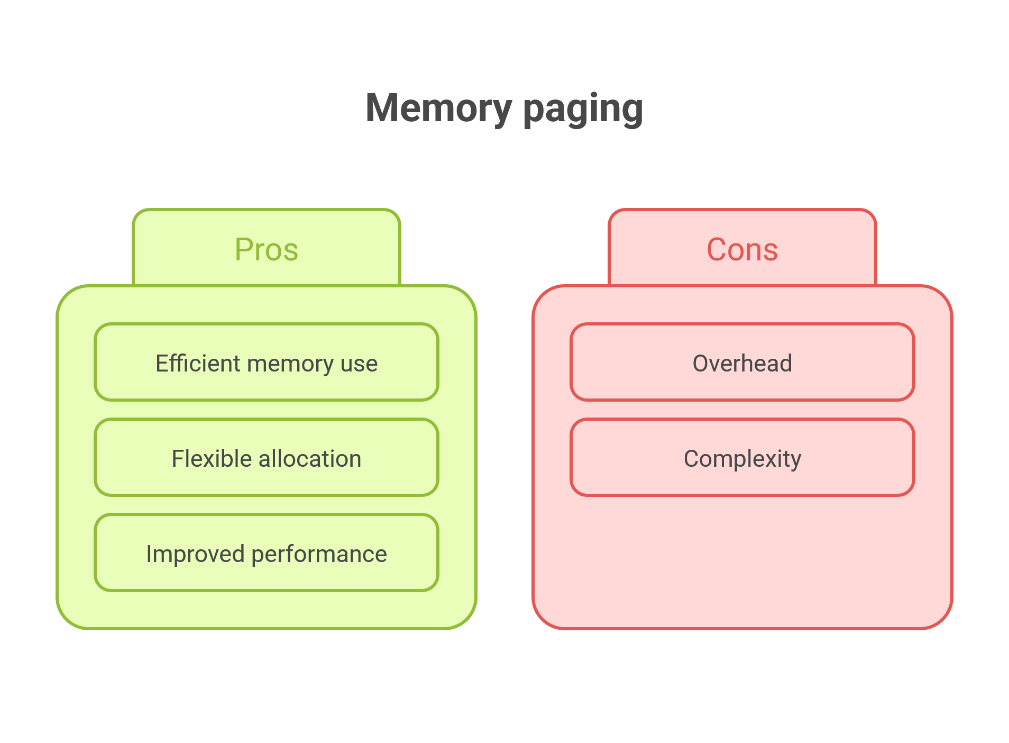

Advantages of Paging

Paging provides several significant advantages:

Reduced Fragmentation: By eliminating the need for contiguous memory allocation, paging reduces memory fragmentation and avoids the need for memory compaction [1].

Improved Performance: Paging allows multiple processes to share the same physical memory efficiently, enhancing overall system performance [5].

Increased Security: The abstraction provided by paging makes it more difficult for malicious code to access sensitive data directly in memory [5].

Challenges and Limitations

Despite its benefits, paging also has challenges. A critical issue is the potential for “thrashing,” a condition where excessive page faults occur, causing the system to spend more time resolving these faults than executing the actual program. This typically happens when a program’s working set grows large enough to exceed available physical memory [6]. Moreover, paging systems must implement page replacement algorithms to decide which page to evict when a fault occurs and no free frame is available, which can add complexity to memory management [5].
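To make the link between working-set size and thrashing concrete, the sketch below counts page faults under least-recently-used (LRU) replacement. LRU is only one of several possible policies and is chosen here as an assumption; the function name `count_faults_lru` and the reference string are likewise illustrative.

```python
from collections import OrderedDict


def count_faults_lru(reference_string, frame_count):
    """Count page faults for a reference string under LRU replacement."""
    frames = OrderedDict()  # resident pages, least recently used first
    faults = 0
    for page in reference_string:
        if page in frames:
            frames.move_to_end(page)    # mark as most recently used
            continue
        faults += 1
        if len(frames) >= frame_count:
            frames.popitem(last=False)  # evict the least recently used page
        frames[page] = True
    return faults


# A loop over four pages, repeated five times (a working set of 4 pages).
references = [0, 1, 2, 3] * 5

print(count_faults_lru(references, 4))  # working set fits: 4 faults
print(count_faults_lru(references, 3))  # one frame short: 20 faults
```

With four frames, only the first touch of each page faults. With three frames, every one of the 20 accesses faults, which is the pattern that turns into thrashing at scale.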

Memory Swapping

Memory swapping is a crucial memory management technique employed by operating systems to optimize the use of physical memory (RAM) and to enhance overall system performance. It involves transferring data that is not currently being used from RAM to a designated area on disk known as swap space or swap file, thus freeing up RAM for other processes that require immediate access to memory[7][4].

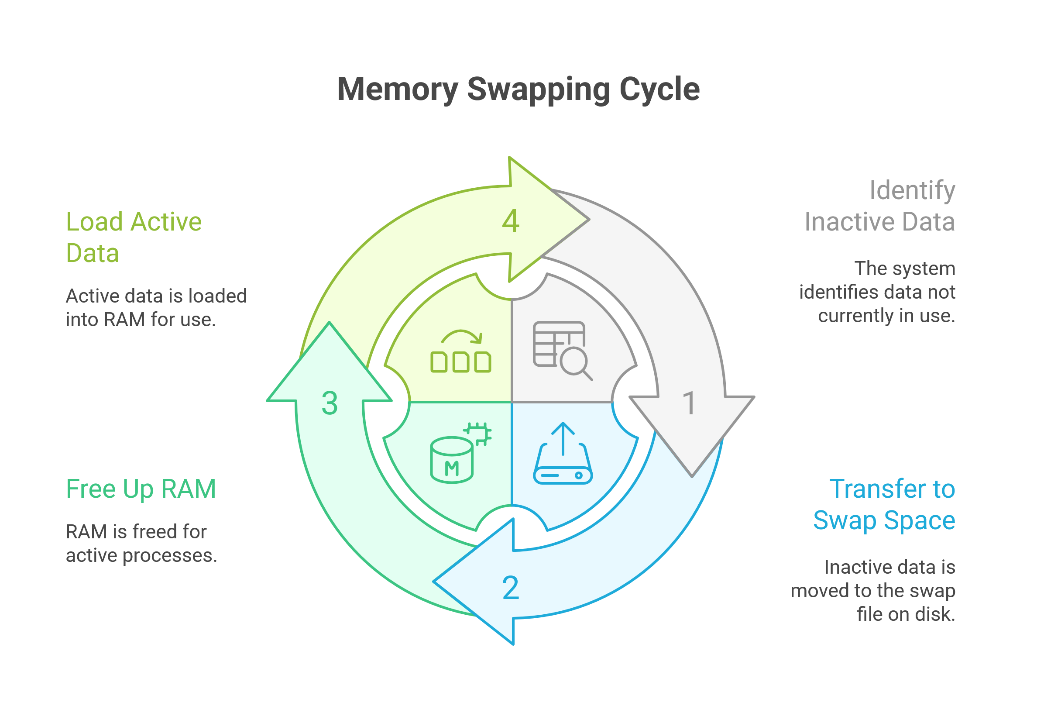

Mechanism of Swapping

The swapping process generally follows several key steps. First, when the operating system (OS) detects that the RAM is nearing capacity, it identifies inactive processes or data that have not been accessed recently[8]. These inactive memory pages are then transferred to the swap space, which may be a dedicated partition or a file on the hard disk[9]. This operation is referred to as “swap-out”. Conversely, when a process that was previously swapped out is needed again, the OS performs a “swap-in,” transferring the relevant data back into RAM while potentially swapping out other inactive processes to maintain an optimal memory balance[10][8].
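The swap-out and swap-in steps can be modelled with a toy manager that moves whole processes between RAM and swap space. This is a sketch under stated assumptions: the class name `SwapManager`, the abstract size units, and the choice of the least recently used process as the victim are all hypothetical, and real systems usually swap individual pages rather than entire processes.

```python
class SwapManager:
    """Toy model: whole processes move between RAM and swap space."""

    def __init__(self, ram_capacity):
        self.ram_capacity = ram_capacity
        self.ram = {}   # pid -> size; dict order tracks recency of use
        self.swap = {}  # pid -> size, for swapped-out processes

    def _swap_out_until_fits(self, size):
        # Swap-out: move the least recently used processes to swap space.
        while sum(self.ram.values()) + size > self.ram_capacity and self.ram:
            victim = next(iter(self.ram))
            self.swap[victim] = self.ram.pop(victim)

    def run(self, pid, size=None):
        """Touch a process, loading or swapping it in if needed."""
        if pid in self.ram:
            self.ram[pid] = self.ram.pop(pid)  # move to most recent position
            return
        if pid in self.swap:
            size = self.swap.pop(pid)          # swap-in
        elif size is None or size > self.ram_capacity:
            raise ValueError("unknown process or process larger than RAM")
        self._swap_out_until_fits(size)
        self.ram[pid] = size


manager = SwapManager(ram_capacity=100)
manager.run("A", 40)
manager.run("B", 40)
manager.run("C", 40)  # RAM is full, so A is swapped out
manager.run("A")      # A is swapped back in, which pushes B out
print(list(manager.ram), list(manager.swap))
```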

Types of Swapping

Swapping can be categorized into two main actions:

Swap-In: This process involves loading data or processes from the swap space back into physical RAM for execution[11].

Swap-Out: This action refers to moving processes from RAM to the swap space to make room for new or higher-priority processes[11][10].

Advantages and Disadvantages

One of the primary benefits of memory swapping is the efficient utilization of RAM, enabling the operating system to run multiple applications simultaneously, even when they collectively exceed the physical memory capacity[4][12]. By relocating less critical processes to the swap space, the system ensures that more important tasks have the memory resources they need, which enhances responsiveness and performance during peak usage times.

However, the swapping technique is not without its drawbacks. The performance of a system can degrade if the swapping algorithm is poorly implemented, leading to excessive disk I/O operations and increased latency[11][10]. Additionally, as accessing data from a hard disk is significantly slower than accessing it from RAM, excessive swapping can result in a performance bottleneck known as thrashing, where the system spends more time swapping data than executing processes[8].

Swapping vs. Virtual Memory

While swap memory is often discussed alongside virtual memory, they are not identical concepts. Virtual memory refers to the broader abstraction that gives each process its own address space and allows an OS to use disk space as an extension of RAM, whereas swap memory specifically pertains to the on-disk storage of data that has been temporarily moved out of physical memory[8][9]. The effective use of swap space is essential for systems with limited physical memory, enabling them to manage larger workloads than their RAM would otherwise allow[13].

Memory Segmentation

Memory segmentation is a memory management technique used by operating systems to divide a computer’s primary memory into multiple segments or sections, each of which can represent a different logical unit of a program such as code, data, or stack[14][15]. In a segmentation system, a memory reference consists of a segment identifier and an offset within that segment, allowing the system to efficiently map logical addresses to physical memory locations[16][17].

Characteristics of Segmentation

Dynamic Growth and Code Sharing

One of the key advantages of memory segmentation is its ability to support dynamic growth. This allows processes to allocate memory segments as needed, which is particularly beneficial for managing variable-sized data structures or adapting to changing memory requirements over time[17]. Additionally, segmentation enables multiple processes to share the same code segment, thereby reducing memory usage and enhancing overall system performance[17].

Structure and Management

In segmentation, memory is organized into segments that may vary in size. Each segment is identified by a unique segment number, and information about each segment, such as its base address, size, and access rights, is stored in a segment table[18]. A segment descriptor, which is an entry in this table, defines the characteristics of each segment, providing a level of organization and structure to memory management[17].
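A segment table lookup with bounds and access checks might look like the following. The descriptor fields mirror the base address, size, and access rights mentioned above; the names `SegmentDescriptor`, `SegmentationFault`, and `translate_segmented`, and the encoding of rights as a string, are assumptions for this sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SegmentDescriptor:
    base: int    # physical address where the segment starts
    limit: int   # segment size in bytes
    rights: str  # permitted access kinds, e.g. "rx" or "rw"


class SegmentationFault(Exception):
    """Raised for an unknown segment, a bad offset, or a denied access."""


def translate_segmented(segment_table, segment, offset, access="r"):
    """Map (segment, offset) to a physical address, enforcing protection."""
    descriptor = segment_table.get(segment)
    if descriptor is None:
        raise SegmentationFault(f"no such segment: {segment}")
    if not 0 <= offset < descriptor.limit:
        raise SegmentationFault(f"offset {offset} outside segment {segment}")
    if access not in descriptor.rights:
        raise SegmentationFault(f"access '{access}' denied on segment {segment}")
    return descriptor.base + offset


segment_table = {
    0: SegmentDescriptor(base=0x1000, limit=0x400, rights="rx"),  # code
    1: SegmentDescriptor(base=0x8000, limit=0x200, rights="rw"),  # data
}

print(hex(translate_segmented(segment_table, 1, 0x10, "w")))  # 0x8010
```

Writing to segment 0 or reading past a segment's limit raises `SegmentationFault` in this model, which is the protection behavior the segment table exists to provide.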

Benefits of Segmentation

Segmentation enhances memory protection by assigning specific access rights to each segment, which prevents unauthorized access to another process’s memory space, thereby improving system stability and security[17][15]. It also facilitates a more organized address space, allowing for distinct areas for code, data, and stack management, which simplifies memory resource handling[17].

Fragmentation Considerations

While segmentation reduces internal fragmentation by allowing for variable-sized segments, it may introduce external fragmentation. This occurs when free memory is split into small, non-contiguous blocks that are too small to be utilized for larger segment allocations[19]. Consequently, despite the availability of sufficient memory, requests for larger segments may fail if suitable contiguous space is unavailable.
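A small numeric example shows how a request can fail even though enough memory is free in total. The first-fit search and the hole list below are illustrative assumptions, not a description of any specific allocator.

```python
def first_fit(free_holes, request):
    """Return the start address of the first hole that fits, or None."""
    for start, size in free_holes:
        if size >= request:
            return start
    return None


# Free memory as (start, size) holes left between allocated segments.
free_holes = [(0, 30), (100, 20), (200, 25)]

total_free = sum(size for _, size in free_holes)
print(total_free)                 # 75 units free in total
print(first_fit(free_holes, 60))  # None: no single hole holds 60 units
print(first_fit(free_holes, 25))  # 0: the first hole is large enough
```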

Hybrid Techniques

Segmentation can be used in conjunction with other memory management techniques, such as paging. This hybrid approach, known as segmentation with paging, allows for more fine-grained control over memory allocation and access, optimizing both memory usage and system performance[20]. In this model, segments are further divided into fixed-size pages, enabling efficient memory management while preserving the flexibility offered by segmentation[20].
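In the hybrid model, translation takes two steps: the segment selects a page table, and the offset within the segment is then split into a page number and a page offset. The sketch below assumes a 4 KiB page size and represents each segment as a hypothetical `(limit, page_table)` pair; the function name `translate_segment_paged` is also made up for the example.

```python
PAGE_SIZE = 4096  # bytes per page; assumed for this sketch


def translate_segment_paged(segments, segment, offset):
    """Translate (segment, offset) via that segment's own page table.

    `segments` maps a segment name to (limit_in_bytes, page_table), where
    page_table maps page numbers within the segment to physical frames.
    """
    limit, page_table = segments[segment]
    if not 0 <= offset < limit:
        raise IndexError("offset outside segment")
    page, page_offset = divmod(offset, PAGE_SIZE)
    return page_table[page] * PAGE_SIZE + page_offset


segments = {
    "code": (6000, {0: 7, 1: 3}),  # 6000 bytes spread over two pages
    "data": (4096, {0: 11}),       # exactly one page
}

# Offset 5000 in "code" is page 1, byte 904; page 1 sits in frame 3.
print(translate_segment_paged(segments, "code", 5000))  # 3 * 4096 + 904 = 13192
```

Because each segment is backed by fixed-size pages, its frames do not need to be contiguous, which is how this model sidesteps external fragmentation while keeping per-segment limits.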

Comparison of Paging, Swapping, and Segmentation

Overview of Memory Management Techniques

Paging, swapping, and segmentation are three fundamental techniques utilized in memory management within operating systems. Each method offers distinct approaches to allocating and managing memory resources, catering to various performance and flexibility requirements.

Paging

Paging is a memory management scheme that divides the process’s memory into fixed-size blocks known as pages[21][22]. This technique simplifies memory management by treating the memory as a uniform address space, allowing efficient allocation and access. The page size is determined by the hardware, and since pages are all the same size, paging is generally described as giving faster memory access than segmentation[22][23]. However, paging lacks the flexibility that segmentation provides, as it does not consider the logical structure of a program.

Segmentation

Segmentation, on the other hand, divides memory into variable-sized segments, which are defined by the programmer according to the logical units of the program[20][24]. This approach offers greater flexibility and reflects the program’s structure more accurately. However, segmentation can lead to fragmentation issues, as segments may not fill the memory space efficiently[22]. The segmentation technique allows users to manage memory in a way that is more intuitive but introduces additional complexity and overhead compared to paging.

Swapping

Swapping is a memory management technique that involves moving processes between main memory and secondary memory to optimize limited memory resources[23][25]. This technique allows an operating system to run multiple processes by temporarily transferring lower-priority processes out of main memory, thus freeing space for higher-priority tasks. While swapping increases the number of processes that can be executed concurrently, it can also introduce latency due to the time required for transferring data, which may affect overall system performance[26][27].

Combined Techniques

In modern operating systems, paging and segmentation can be utilized together in a method known as segmentation with paging[20]. This hybrid approach combines the strengths of both techniques by dividing memory into segments, which are then further subdivided into pages. This method provides fine-grained control over memory allocation while ensuring efficient memory access and management, ultimately optimizing both memory usage and system performance.

Case Studies and Real-World Applications

Understanding Memory Management Techniques

In the realm of operating systems, memory management techniques such as paging and swapping play a crucial role in optimizing system performance and resource utilization. Real-world applications and case studies provide valuable insights into how these techniques are effectively implemented in various environments.

Case Study: Efficient Use of Paging in Modern Operating Systems

One prominent example of effective paging implementation can be found in contemporary operating systems, where demand paging is utilized to enhance memory efficiency. This technique allows systems to load only the necessary pages of a process into RAM when needed, thus minimizing RAM usage and optimizing performance for applications with diverse memory requirements[28][3]. By transferring inactive pages to disk, systems can free up space for active processes, demonstrating the adaptability and effectiveness of paging in modern computing environments.

Case Study: Swapping Mechanisms in Large Applications

In scenarios involving large applications, swapping techniques have been employed to manage memory efficiently. Swapping entails moving entire processes from main memory to a designated swap area on disk, which, while less flexible than paging, allows for the management of processes that exceed the available RAM[29][28]. A notable application of this can be observed in enterprise-level software that requires significant memory resources. By effectively utilizing swapping, these applications can maintain performance levels even under high demand, although this method carries a performance penalty due to the need to load entire processes back into RAM when activated[29][28].

Insights from A/B Testing Case Studies

Another layer of understanding can be gleaned from A/B testing in software development. While primarily focused on optimizing web applications and user interfaces, the insights from successful A/B testing case studies can parallel the efficiency gains achieved through paging and swapping. Businesses often leverage A/B testing to determine which version of a web page or application performs better, akin to how operating systems decide which pages to keep active in memory[30][31]. The scientific method of A/B testing underscores the importance of data-driven decision-making, much like the analytical approaches used in memory management to optimize system performance.

References

- [1] Paging and Memory Management: Enhance Computing Speed

- [2] Paging and Memory Management: Enhance Computing Speed

- [3] Is paging is still Important In Operating Systems | by manoj sharma

- [4] Understanding and troubleshooting page faults and memory swapping

- [5] Paging in Computer Architecture – Number Analytics

- [6] Memory paging – Wikipedia

- [7] Operating Systems 101: Virtualisation — Swapping(vi) – E.Y. – Medium

- [8] Swap Memory: What It Is & How It Works – phoenixNAP

- [9] Chapter 15. Swap Space | Red Hat Enterprise Linux | 7

- [10] Swapping in Operating System – GeeksforGeeks

- [11] What is Swapping in Operating Systems (OS)? – Scaler Topics

- [12] Difference Between Paging and Swapping in OS – Tutorials Point

- [13] What is a swap file? – Lenovo

- [14] Memory segmentation – Wikipedia

- [15] The Ultimate Guide to Segmentation in Operating Systems

- [16] Explain the concept of memory segmentation | Abdul Wahab Junaid

- [17] Understanding Segmentation in Operating Systems – Medium

- [18] Paging vs Segmentation: Core Differences Explained | ESF

- [19] Memory Management, Segmentation, and Paging

- [20] What is Segment? A Guide for Marketers | Lenovo US

- [21] Difference Between Paging and Segmentation – GeeksforGeeks

- [22] Difference Between Paging and Segmentation – Scaler Topics

- [23] What Is Memory Management? How It Works, Techniques, and Uses

- [24] What is the difference between paging and segmentation? – Krayonnz

- [25] Memory Management Techniques in Operating System – Shiksha

- [26] [Blog] SWAM: Revisiting Swap and OOMK for Improving Application …

- [27] Why is swap being used even though I have plenty of free RAM?

- [28] Paging and swapping – the Learn Linux Project

- [29] Swaping, Paging, Segmentation, and Virtual memory on x86 PM …

- [30] Case Studies On Successful Product Segmentation – FasterCapital

- [31] Customer segmentation in retail: 6 powerful client case studies – Lexer

- Esposito, C., Ficco, M., & Gupta, B. B. (2021). Blockchain-based authentication and authorization for smart city applications. Information Processing & Management, 58(2), 102468.

- Gupta, B. B., Gaurav, A., & Arya, V. (2023). Secure and privacy-preserving decentralized federated learning for personalized recommendations in consumer electronics using blockchain and homomorphic encryption. IEEE Transactions on Consumer Electronics, 70(1), 2546-2556.

Cite As

Gaurav A. (2025) How Operating Systems Handle Memory Paging, Swapping, and Segmentation, Insights2Techinfo, pp.1