By: Rekarius, Asia University

Abstract

Growing dependence on mobile devices opens the door for cybercriminals to launch attacks such as phishing via SMS, known as smishing. Traditional rule-based and feature-based detection methods can no longer keep pace with the sophistication of attackers' tactics. To address these limitations, this paper reviews and discusses two hybrid deep learning approaches: CNN-Bi-GRU, which combines Convolutional Neural Networks (CNNs) with bidirectional Gated Recurrent Units (Bi-GRUs), and CNN-GRU, which combines CNNs with standard GRUs.

Keywords: SMS Phishing, Hybrid Deep Learning, CNN-Bi-GRU, CNN-GRU

Introduction

SMS messages are inexpensive to send, and SMS spam is delivered over the mobile network, which makes SMS an appealing channel for social engineers [2]. Phishing is a cybercrime technique in which attackers impersonate legitimate entities to trick individuals into sharing sensitive information, such as passwords, credit card numbers, or personal details [3]. The trust users place in SMS communications creates a critical vulnerability in mobile cybersecurity [4]. Traditional rule-based and feature-based methods can no longer keep pace with the sophistication of attackers' tactics. To overcome these limitations, this paper reviews hybrid deep learning approaches for identifying smishing attacks.

Methodology

Hybrid CNNs and Bi-GRUs

The hybrid CNN and Bi-GRU model is used in [5].

Datasets

The primary dataset used in [5] is the SMS Phishing Collection, which is publicly available on Kaggle. It contains 5571 SMS messages: 4824 legitimate (ham) messages and 747 phishing (smishing) attempts. For further testing, the authors used a second dataset, the Phishing detection dataset, with 13,320 samples: 7981 spam and 5339 legitimate (ham) messages. A third dataset consists of 5971 SMS messages originally categorized as ham, smishing, and spam; the authors restructured it into two categories, with 4844 legitimate (ham) messages and 1127 smishing messages. Finally, they created a fourth, combined dataset by merging the SMS Phishing Collection, the Phishing detection dataset, and the Phishing dataset. This combined dataset aggregates all samples to make the analysis more robust, for a total of 24,862 samples: 15,007 legitimate (ham) messages and 9855 phishing (smishing) attempts. The distribution of ham and smishing samples across datasets is shown in Table 1.

| Dataset | Ham | Smishing | Ham to Smishing Ratio |
|---|---|---|---|
| SMS Phishing Collection (Dataset 1) | 4824 | 747 | 6.46:1 |
| Phishing detection dataset (Dataset 2) | 5339 | 7981 | 1:1.5 |
| Phishing-dataset (Dataset 3) | 4844 | 1127 | 4.3:1 |
| Combined Dataset (Dataset 4) | 15007 | 9855 | 1.52:1 |

Table 1: Distribution of Ham and Smishing Samples Across Datasets
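As a quick arithmetic check on these counts, the short Python sketch below recomputes the combined totals and the class ratios from the per-dataset numbers reported in the text. The dictionary layout and variable names are illustrative assumptions, not code from [5].

```python
# Ham and smishing counts per source dataset, as reported in the text.
counts = {
    "Dataset 1": (4824, 747),
    "Dataset 2": (5339, 7981),
    "Dataset 3": (4844, 1127),
}

# The combined dataset is the element-wise sum of the three sources.
combined_ham = sum(ham for ham, _ in counts.values())
combined_smishing = sum(smish for _, smish in counts.values())
counts["Dataset 4 (combined)"] = (combined_ham, combined_smishing)

for name, (ham, smish) in counts.items():
    total = ham + smish
    ratio = ham / smish  # ham messages per smishing message
    print(f"{name}: total={total}, ham:smishing={ratio:.2f}:1")
```

The sums give 15,007 ham and 9855 smishing messages, 24,862 in total, which matches the totals stated in the text. Dataset 2 prints a ratio of about 0.67:1, which is the same imbalance as the 1:1.5 listed in Table 1.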

Data Preprocessing

Before raw SMS message data is fed into the deep learning model, preprocessing is necessary. The input text must be sanitized by removing unnecessary information. The widely used Word2Vec method then converts the text into numerical vectors by representing each word in a multi-dimensional vector space, where a word's position reflects the contexts in which it appears. This enables the deep learning model to parse text input, recognize patterns in the numerical vectors, and make accurate predictions.

Text Cleanup

Deep learning algorithms require cleaned SMS text. During cleanup, filler words (stop words) such as ‘the’, ‘and’, ‘or’, and ‘a’, which contribute little to the text’s meaning, are removed.
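A minimal sketch of this cleanup step is shown below. The stop-word set contains only the four words named above and the function name is hypothetical; [5] does not specify the exact list or tooling, so treat both as assumptions.

```python
# Hypothetical, deliberately tiny stop-word list; real lists are much longer.
STOP_WORDS = {"the", "and", "or", "a"}

def remove_stop_words(message: str) -> list[str]:
    """Lowercase the message, split on whitespace, and drop stop words."""
    words = message.lower().split()
    return [w for w in words if w not in STOP_WORDS]

print(remove_stop_words("Claim the prize and a free phone"))
# prints ['claim', 'prize', 'free', 'phone']
```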

Process of Tokenization

In SMS text processing, tokenization refers to the practice of segmenting raw text data into individual words or tokens. Since machine learning models require numerical input, tokenization is a crucial step in text processing.
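The idea can be sketched in a few lines of Python. The whitespace splitting, the choice to start indices at 1 (leaving 0 free as a padding value), and the function names are assumptions made for illustration rather than details taken from [5].

```python
def build_vocab(messages):
    """Assign each distinct word an integer index, starting at 1.

    Index 0 is left unused so it can serve as a padding value.
    """
    vocab = {}
    for message in messages:
        for word in message.lower().split():
            if word not in vocab:
                vocab[word] = len(vocab) + 1
    return vocab

def encode(message, vocab):
    """Map a message to a list of word indices, skipping unknown words."""
    return [vocab[w] for w in message.lower().split() if w in vocab]

messages = ["win free prize now", "see you now"]
vocab = build_vocab(messages)
print(encode("free prize now", vocab))  # prints [2, 3, 4]
```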

Padding

After SMS messages have been tokenized, the resulting sequences need to be padded to a uniform length, because machine learning algorithms require every input to have the same size. This process, known as padding, typically involves adding zeros at the beginning or end of shorter sequences.
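The sketch below shows both padding positions on a toy sequence. The function name and the truncation of overly long sequences are assumptions added for completeness; the source only describes adding zeros.

```python
def pad_sequence(seq: list[int], max_len: int, pad_value: int = 0,
                 pad_at_end: bool = True) -> list[int]:
    """Truncate or pad a token sequence to exactly max_len items."""
    seq = seq[:max_len]  # assumption: overly long sequences are cut off
    padding = [pad_value] * (max_len - len(seq))
    return seq + padding if pad_at_end else padding + seq

print(pad_sequence([2, 3, 4], max_len=5))                    # prints [2, 3, 4, 0, 0]
print(pad_sequence([2, 3, 4], max_len=5, pad_at_end=False))  # prints [0, 0, 2, 3, 4]
```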

Word Embedding

The model utilizes word embeddings, also known as vector representations, to represent words in a meaningful way. Word2Vec is commonly used to generate these embeddings by predicting words from their contexts within a corpus. For example, given the context words ‘I’, ‘prefer’, ‘and’, and ‘coffee’, a Word2Vec model trained in its continuous bag-of-words (CBOW) mode might predict the missing word ‘tea’, completing the phrase ‘I prefer tea and coffee’.
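To make the prediction task concrete, the sketch below generates the (context, target) pairs that a CBOW-style Word2Vec model would be trained on. It covers only the pair generation, not the neural network; the window size of 2 and the function name are assumptions.

```python
def cbow_pairs(tokens: list[str], window: int = 2):
    """Yield (context_words, target_word) pairs for CBOW-style training."""
    for i, target in enumerate(tokens):
        left = tokens[max(0, i - window):i]
        right = tokens[i + 1:i + 1 + window]
        yield left + right, target

sentence = "i prefer tea and coffee".split()
for context, target in cbow_pairs(sentence):
    print(context, "->", target)
```

For the middle word, the pair is `['i', 'prefer', 'and', 'coffee'] -> tea`: the model sees the surrounding words and learns to predict the one in between.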

Embedding Matrix

The learned word embeddings are assembled into an embedding matrix for the neural network. This matrix has dimensions (vocab size, embedding size), with each row representing a word from the dataset and each cell holding one component of that word’s embedding. The embedding matrix can be used to initialize the weights of the neural network’s embedding layer.
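A plain-Python sketch of building such a matrix follows. The toy vocabulary, the 2-dimensional vectors, and the extra all-zero row at index 0 for padding are assumptions for illustration; a real pipeline would take the vectors from a trained Word2Vec model.

```python
def build_embedding_matrix(vocab, word_vectors, embedding_size):
    """Return a (vocab_size, embedding_size) matrix as a list of rows.

    Row i holds the vector of the word with index i. Words without a
    learned vector, and the padding row 0, stay all-zero.
    """
    vocab_size = len(vocab) + 1  # assumption: +1 for the padding index 0
    matrix = [[0.0] * embedding_size for _ in range(vocab_size)]
    for word, index in vocab.items():
        vector = word_vectors.get(word)
        if vector is not None:
            matrix[index] = list(vector)
    return matrix

# Hypothetical toy inputs: 2-dimensional vectors for a 3-word vocabulary.
vocab = {"free": 1, "prize": 2, "now": 3}
word_vectors = {"free": [0.9, 0.1], "prize": [0.8, 0.3]}
matrix = build_embedding_matrix(vocab, word_vectors, embedding_size=2)
print(matrix)
```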

Proposed Model Architecture

After preprocessing the SMS dataset and constructing an embedding matrix, a CNN-Bi-GRU hybrid model is trained to recognize SMS phishing. The proposed model comprises three main components: an embedding layer, a CNN layer, and a Bi-GRU layer. The embedding layer extracts syntactic and semantic information from the text and converts it into a fixed-length vector representation. The CNN layer applies multiple convolutional filters to the word embeddings, generating feature maps through convolutions and nonlinear activation functions. Max pooling then reduces the spatial dimensions of these feature maps while preserving their distinctive features, and the integrated, normalized result is passed to the Bi-GRU layer. The Bi-GRU layer combines the outputs of its forward and backward GRU units, which are then passed through a dense (fully connected) layer that applies a nonlinear activation function to produce the final output. This layering enables the model to learn meaningful representations from text data, capturing information pertinent to phishing detection.

Built with the Keras Sequential API, the architecture consists of an embedding layer, a 1D convolutional layer with 32 filters (kernel size of 3), a max pooling layer, a bidirectional GRU layer with 64 units, and a dense output layer with a sigmoid activation function.

For model compilation, the log loss (binary cross-entropy) function and the Adam optimizer are used, and a randomized search is employed to tune hyperparameters such as the number of epochs, batch size, dropout rate, and number of CNN filters. A validation set helps identify the optimal hyperparameters, while the model’s performance is evaluated on a distinct test set using metrics such as precision, recall, accuracy, and F1-score. Once the Word2Vec embeddings are generated, the data are split into training and validation sets, and the model is trained in batches of 128 for 50 epochs until it reaches the desired accuracy on the validation data.
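To see how the stated layer sizes fit together, the sketch below traces the output shape of each layer for a single input message. It is only shape arithmetic, not the model from [5]. The sequence length of 100, the embedding size of 50, the unpadded ("valid") convolution with stride 1, the pool size of 2, and the concatenation of the forward and backward GRU states are all assumptions; only the 32 filters, kernel size 3, and 64 GRU units come from the description above.

```python
def trace_shapes(seq_len: int, embed_dim: int, filters: int = 32,
                 kernel_size: int = 3, pool_size: int = 2,
                 gru_units: int = 64) -> list[tuple[str, tuple[int, ...]]]:
    """Return (layer name, output shape) pairs for one input message."""
    shapes = [("embedding", (seq_len, embed_dim))]
    conv_len = seq_len - kernel_size + 1         # unpadded convolution, stride 1
    shapes.append(("conv1d", (conv_len, filters)))
    pooled_len = conv_len // pool_size           # non-overlapping max pooling
    shapes.append(("max_pooling", (pooled_len, filters)))
    shapes.append(("bi_gru", (2 * gru_units,)))  # forward + backward final states
    shapes.append(("dense_sigmoid", (1,)))       # single smishing probability
    return shapes

for name, shape in trace_shapes(seq_len=100, embed_dim=50):
    print(f"{name:14s} {shape}")
```

Under these assumptions, a 100-token message shrinks to 98 positions after the convolution and 49 after pooling, and the bidirectional GRU summarizes it as a 128-value vector before the sigmoid output.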

Hybrid CNN-GRU

The hybrid CNN-GRU model is used in [1].

Dataset

The dataset used in the study was obtained from the Kaggle website. It contains 5,572 entries structured into two columns, labeled "V1" and "V2". The "V1" column categorizes SMS messages into two groups, "spam" and "raw" (legitimate messages, called ham in the datasets above); the "V2" column contains the contents of the SMS messages. There are 4,825 messages classified as "raw" and 747 classified as "spam". 75% of the dataset was used as training data and 25% as test data.
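A 75/25 split of this size can be sketched as follows. The shuffling, the fixed seed, and the placeholder rows are assumptions; [1] is not stated here to have used a seeded or stratified split.

```python
import random

def train_test_split(rows, test_fraction=0.25, seed=42):
    """Shuffle a copy of rows and split it into (train, test) lists."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

# Placeholder rows standing in for the 5,572 (label, text) entries.
rows = [("spam" if i < 747 else "raw", f"message {i}") for i in range(5572)]
train, test = train_test_split(rows)
print(len(train), len(test))  # prints 4179 1393
```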

Preprocessing

Lemmatization

In this process, the roots and suffixes of words are separated from each other so that each word can be analyzed by its root. The goal is to improve the precision of detection and analysis by forming semantic links between words that share the same root.
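As a toy illustration of the effect, the sketch below maps inflected words to a shared base form through a small lookup table. The table and function name are hypothetical; real lemmatizers rely on a full morphological dictionary, and the tool used in [1] is not specified here.

```python
# Hypothetical toy lemma table; a real lemmatizer uses a full dictionary.
LEMMAS = {"winning": "win", "won": "win", "prizes": "prize", "calls": "call"}

def lemmatize(words):
    """Replace each word by its base form when one is known."""
    return [LEMMAS.get(w, w) for w in words]

print(lemmatize(["winning", "prizes", "today"]))  # prints ['win', 'prize', 'today']
```

After this step, "winning" and "won" are both represented by "win", so the model treats them as the same feature.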

Stopwords Removal

Stopwords were removed from the dataset so that they would neither crowd it unnecessarily nor negatively affect learning during training.

Tokenization

Tokenization converts textual data, which the algorithm cannot process directly, into numerical data that it can work with. In this process, each word is assigned a specific numerical value.

Embedding vectors

For training the model, it is crucial that all input sequences have the same length. To achieve this, the entire dataset was converted into embedding vectors whose length is set by the sentence with the maximum number of tokens.

CNN-GRU

Following preprocessing of the dataset, the model was sequentially constructed, beginning with the creation of an embedding layer designed to compress the in- put dataset into a more compact space. Subsequently, to extract spatial features from the data, a CNN layer was added as the second layer. The activation func- tion ReLU (Rectified Linear Unit) was chosen for this CNN layer to introduce non-linearity into the network. Next, a maximum pooling layer was introduced. To expedite computations while conserving memory resources, two GRU layers

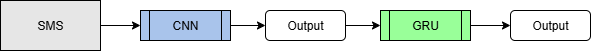

Figure 1: Flowchart of CNN-GRU model

were incorporated into the model. Following the GRU layers, a dropout layer was included to mitigate overfitting. In the dense layer, the activation function was once again selected as ReLU. Given that the dataset comprises two classes: ”Spam” and ”Raw”, a ”sigmoid” activation function was employed in classification layer for binary classification. The Adam algorithm was chosen as the optimization algorithm, with accuracy serving as the performance metric. Following all these steps, the dataset was trained for 10 epochs. The flowchart given in Fig. 1, illustrates the application designed to classify SMS messages as spam or not using the Hybrid CNN-GRU model developed with the dataset. In the depicted process, the system is initially provided with input data for training. The output from the input, processed through the CNN layer, is subsequently fed into the GRU layer. Within this layer, the input, post-training, undergoes activation functions within the dense layer, ultimately concluding the classification process. During the model development, the output from the input, initially processed through the CNN layer, was fed into the GRU layer. Within this layer, the trained input passed through activation functions in the Dense layer, finalizing the classification process. The training of the model was carried out with 10 epochs and its performance was evaluated with the accuracy score.
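To make the GRU layers less of a black box, the following is a minimal sketch of a single GRU update for a scalar input and a one-unit hidden state, assuming the common formulation with an update gate and a reset gate. The weights and function names are illustrative assumptions and are not taken from [1]; a real GRU layer uses weight matrices and many units.

```python
import math

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def gru_step(x, h_prev, w):
    """One GRU update for scalar input x and scalar hidden state h_prev.

    w maps each gate name to (input weight, recurrent weight, bias).
    """
    wz, uz, bz = w["update"]
    wr, ur, br = w["reset"]
    wh, uh, bh = w["candidate"]
    z = sigmoid(wz * x + uz * h_prev + bz)               # update gate
    r = sigmoid(wr * x + ur * h_prev + br)               # reset gate
    h_cand = math.tanh(wh * x + uh * (r * h_prev) + bh)  # candidate state
    return (1.0 - z) * h_prev + z * h_cand               # blend old and new state

# Hypothetical weights chosen only to produce visible changes.
weights = {"update": (0.5, 0.5, 0.0),
           "reset": (0.5, 0.5, 0.0),
           "candidate": (1.0, 1.0, 0.0)}
h = 0.0
for x in [1.0, 0.5, -1.0]:  # three steps of a toy input sequence
    h = gru_step(x, h, weights)
    print(round(h, 4))
```

The GRU needs only these two gates and no separate cell state, which is where its savings in computation and memory come from. Some libraries swap the roles of `z` and `1 - z` in the final blend; the two conventions are equivalent up to relabeling the gate.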

Conclusion

Training the hybrid CNN-GRU model yielded a reported success rate of 99.96% in determining whether SMS content is spam [1]. These results, consistent with the tests conducted, indicate that the intended smishing detection was accomplished. The hybrid CNN-Bi-GRU model demonstrated superior accuracy, precision, recall, and F1-score, significantly outperforming baseline classifiers such as KNN, decision tree, random forest, SVM, AdaBoost, LSTM, and GRU [5]. With an accuracy of 99.82% and an F1-score of 98.56%, the CNN-Bi-GRU model proved to be an effective tool for enhancing cybersecurity in the context of SMS phishing detection.

References

- Zeynep Aslanpençesi, Muhammet Baykara, and Talha Burak Alakuş. SMS phishing detection with hybrid CNN-GRU. In 2024 7th World Symposium on Communication Engineering (WSCE), pages 52–56. IEEE, 2024.

- Zeynep Aslanpençesi, Muhammet Baykara, and Talha Burak Alakuş. SMS phishing detection with hybrid CNN-GRU. In 2024 7th World Symposium on Communication Engineering (WSCE), pages 52–56. IEEE, 2024.

- Ruth Korede Ayeni, Ayodele Ariyo Adebiyi, Julius Olatunji Okesola, and Ennmanuel Igbekele. Phishing attacks and detection techniques: A systematic review. In 2024 International Conference on Science, Engineering and Business for Driving Sustainable Development Goals (SEB4SDG), pages 1–17. IEEE, 2024.

- Nippon Datta, Tanjim Mahmud, Mohammad Tarek Aziz, Rahul Kanti Das, Mohammad Shahadat Hossain, and Karl Andersson. Emerging trends and challenges in cybersecurity data science: A state-of-the-art review. In 2024 Parul International Conference on Engineering and Technology (PICET), pages 1–7. IEEE, 2024.

- Tanjim Mahmud, Md Alif Hossen Prince, Md Hasan Ali, Mohammad Shahadat Hossain, and Karl Andersson. Enhancing cybersecurity: Hybrid deep learning approaches to smishing attack detection. Systems, 12(11):490, 2024.

- Elechi, P., Ekolama, S. M., Okowa, E., & Kukuchuku, S. (2025). A review of emerging technologies in wireless communication systems. Innovation and Emerging Technologies, 12, 2550005.

- Muthusamy, P. D., Govindasamy, B. R., Thandavan, S., V, G., & Savarimuthu, N. (2025). Smart MedDB chain—An intelligent approach for data sharing in medical systems. Innovation and Emerging Technologies, 12, 2540001.

- Kanthavel, R. R., & Dhaya, R. (2025). Federated learning (FL)-driven real-time decision support for intraoperative cardiovascular surgery: A privacy-preserving AI framework. Innovation and Emerging Technologies, 12, 2550025.

Cite As

Rekarius (2025) Hybrid Deep Learning CNN-GRU and CNN-Bi-GRU Approaches to Smishing Detection, Insights2Techinfo, pp.1