By: C S Nakul Kalyan, CCRI, Asia University, Taiwan

Abstract

Motion transfer and puppet-mastering techniques transfer the performance of one actor to another, replicating facial expressions, lip movements, and specific muscle motions with high realism. Using deep generative models, recent techniques successfully “animate” a target image while preserving its identity cues, by mapping the facial motion of a source video onto the target. In real-world deployment, however, challenges arise such as video compression artifacts and the lack of synchronized audio. To overcome these challenges, research has explored facial muscle motion modeling, audio mapping, and motion magnification to find subtle, muscle-level authenticity signals that are absent from synthetic content. These signals provide cues for detecting motion-transfer deepfakes across different source generators.

Keywords

Motion Transfer, Puppet Mastering, Facial Muscle Motion, Motion Magnification, Deepfake Detection

Introduction

With the rapid growth of social media platforms, the creation and sharing of video content has increased sharply. This growth has also driven the spread of deepfake technology, which combines deep learning with face manipulation to create realistic videos in which one person’s features are transferred to another [4]. Although these techniques offer growing benefits in film, education, and healthcare, their misuse can erode public trust. To address this, this article focuses on motion-based deepfake generation and detection using the Monkey-Net method [1], which animates a source image with the motion extracted from a driving video. Using motion transfer techniques [2], this study aims to improve both the realism of generated material and the flexibility of deepfake detection on real-world video streams.

Methodology

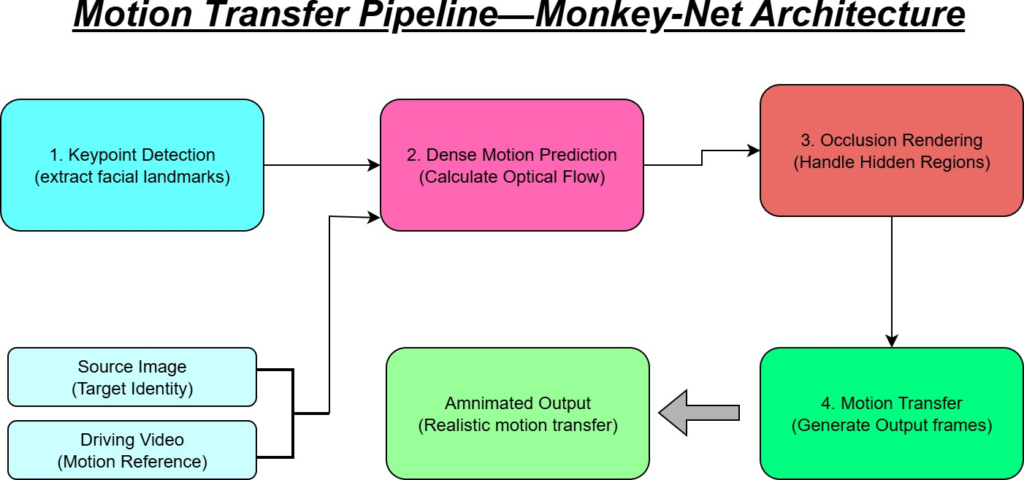

The proposed methodology is based on the Monkey-Net architecture [1], which is designed to animate the source image using the motion information from a driving video, as shown in Figure 1. The steps for implementing this approach are as follows:

Keypoint Detection

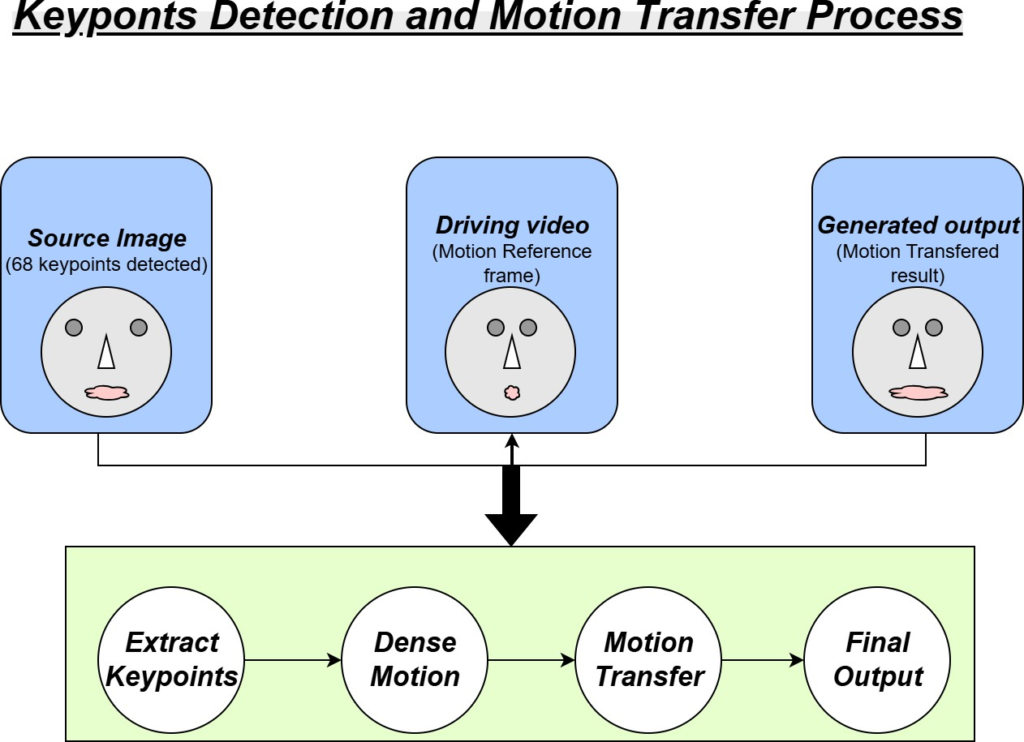

The keypoint detector is an encoder-decoder network, as shown in Figure 2, that extracts sparse, motion-relevant keypoints from the source image and from each frame of the driving video. Keypoints are placed in regions with a high probability of movement [5], such as facial regions and human joints, and together they capture the movement and shape of the object [1].
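One common way to turn a detector's heatmaps into keypoint coordinates, and the one assumed here, is a spatial softmax followed by an expectation over pixel positions (a "soft-argmax"), which keeps the step differentiable for self-supervised training. The NumPy sketch below covers only this final step: the encoder-decoder that produces the K heatmaps is omitted, and the function name `heatmaps_to_keypoints` is hypothetical.

```python
import numpy as np

def heatmaps_to_keypoints(heatmaps: np.ndarray) -> np.ndarray:
    """Convert K raw heatmaps of shape (K, H, W) into K (x, y) keypoints in [-1, 1].

    Each heatmap is normalized with a spatial softmax, and the keypoint is the
    expected (x, y) position under that distribution (soft-argmax), which keeps
    the operation differentiable.
    """
    k, h, w = heatmaps.shape
    flat = heatmaps.reshape(k, -1)
    flat = flat - flat.max(axis=1, keepdims=True)  # subtract max for numerical stability
    probs = np.exp(flat)
    probs /= probs.sum(axis=1, keepdims=True)      # softmax over all pixels of each heatmap
    probs = probs.reshape(k, h, w)

    # Normalized coordinates: x varies along the width, y along the height.
    xs = np.linspace(-1.0, 1.0, w)
    ys = np.linspace(-1.0, 1.0, h)
    x = (probs.sum(axis=1) * xs).sum(axis=1)  # marginal over x, then its expectation
    y = (probs.sum(axis=2) * ys).sum(axis=1)  # marginal over y, then its expectation
    return np.stack([x, y], axis=1)
```

For example, a heatmap that peaks sharply in the top-right pixel yields a keypoint close to (1, -1), while a flat heatmap yields the image center (0, 0).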

Dense Motion Prediction

The Dense Motion Network uses the extracted keypoints to compute a dense optical flow between the source image and the driving frame. It models the motion around each keypoint and its neighborhood with a local affine transformation [3]. The resulting dense motion field makes it possible to align the source image accurately with the pose in the driving video.
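The local affine idea can be illustrated with a minimal NumPy sketch. It assumes the first-order formulation in which a driving-frame coordinate z near keypoint k is mapped back to the source as T_k(z) = p_S,k + J_k (z − p_D,k), with J_k a 2×2 matrix. How the local maps are blended is also an assumption: a real dense motion network predicts per-pixel weights with a CNN, whereas here Gaussian weights around each driving keypoint, plus a background identity map, stand in for them. The function name `dense_backward_flow` and the `sigma` and `bg_logit` parameters are hypothetical.

```python
import numpy as np

def dense_backward_flow(kp_src, kp_drv, jacobians, h, w, sigma=0.1, bg_logit=0.0):
    """Blend K local affine maps into a dense backward flow of shape (H, W, 2).

    kp_src, kp_drv: (K, 2) keypoints (x, y) in [-1, 1] for the source and driving frame.
    jacobians: (K, 2, 2) local affine matrices J_k relating driving to source.
    Returns, for every driving-frame pixel z, the source coordinate to sample from.
    """
    ys, xs = np.meshgrid(np.linspace(-1, 1, h), np.linspace(-1, 1, w), indexing="ij")
    grid = np.stack([xs, ys], axis=-1)  # (H, W, 2) driving-frame coordinates z

    # Local affine maps T_k(z) = p_src_k + J_k (z - p_drv_k), shape (K, H, W, 2).
    diff = grid[None] - kp_drv[:, None, None, :]
    local = kp_src[:, None, None, :] + np.einsum("kij,khwj->khwi", jacobians, diff)

    # Hypothetical weighting: Gaussian logits around each driving keypoint,
    # plus a constant logit for the background (identity) map.
    sq_dist = (diff ** 2).sum(axis=-1)  # (K, H, W)
    logits = np.concatenate([np.full((1, h, w), bg_logit), -sq_dist / (2 * sigma ** 2)])
    weights = np.exp(logits - logits.max(axis=0, keepdims=True))
    weights /= weights.sum(axis=0, keepdims=True)  # softmax over the K + 1 maps per pixel

    candidates = np.concatenate([grid[None], local])  # (K + 1, H, W, 2)
    return (weights[..., None] * candidates).sum(axis=0)
```

As a sanity check, identical source and driving keypoints with identity Jacobians reduce every local map to the identity, so the returned flow equals the sampling grid itself.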

Image Generation

The Motion Transfer Network generates the animated output frame by combining the source-image features, warped into alignment with the driving pose, with the dense motion maps.
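To make the warping step concrete, here is a minimal NumPy sketch of backward warping: each output pixel samples the source feature map at the location given by a dense flow, using bilinear interpolation. It assumes the flow stores source coordinates normalized to [-1, 1] in (x, y) order, a convention similar to PyTorch's `grid_sample` with `align_corners=True`; the function name `warp_features` is hypothetical, and in a real generator the warped features would then pass through further CNN layers.

```python
import numpy as np

def warp_features(features: np.ndarray, flow: np.ndarray) -> np.ndarray:
    """Backward-warp source features (C, H, W) with a flow (H, W, 2) of source coords in [-1, 1].

    Each output pixel bilinearly samples the source feature map at the location
    given by the flow. Out-of-range samples are clamped to the border.
    """
    c, h, w = features.shape
    # Convert normalized coordinates to fractional pixel indices.
    x = np.clip((flow[..., 0] + 1.0) * (w - 1) / 2.0, 0, w - 1)
    y = np.clip((flow[..., 1] + 1.0) * (h - 1) / 2.0, 0, h - 1)

    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    wx = x - x0  # horizontal interpolation weight
    wy = y - y0  # vertical interpolation weight

    # Interpolate along x on the two neighboring rows, then along y.
    top = features[:, y0, x0] * (1 - wx) + features[:, y0, x1] * wx
    bottom = features[:, y1, x0] * (1 - wx) + features[:, y1, x1] * wx
    return top * (1 - wy) + bottom * wy
```

Backward warping is used here because every output pixel receives exactly one value, which avoids the holes that forward splatting of source pixels can leave.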

Occlusion-Aware Rendering

The Dense Motion Network also predicts an occlusion mask, which tells the system which parts of the source image are hidden during motion transfer and which regions are missing or misaligned after warping. With this mask, the generator can restore the missing details instead of copying incorrect warped content, which improves the realism and structural consistency of the output [6].
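The occlusion handling can be sketched as a per-pixel blend. The NumPy example below assumes a visibility map in [0, 1] (1 = visible in the source; a binary mask is the special case where every entry is 0 or 1) and a separate inpainted feature map standing in for what the generator would fill in for hidden regions. Both the function name `occlusion_aware_features` and the explicit `inpainted` input are hypothetical simplifications; in first-order-style generators the warped features are usually just multiplied by the occlusion map and the decoder learns to fill the rest.

```python
import numpy as np

def occlusion_aware_features(warped: np.ndarray, occlusion: np.ndarray,
                             inpainted: np.ndarray) -> np.ndarray:
    """Blend warped source features with inpainted features using an occlusion map.

    warped, inpainted: (C, H, W) feature maps.
    occlusion: (H, W) visibility map in [0, 1]; 1 = visible in the source, 0 = occluded.
    Visible regions keep the warped source features; occluded regions fall back
    to the (hypothetical) inpainted estimate.
    """
    if occlusion.min() < 0.0 or occlusion.max() > 1.0:
        raise ValueError("occlusion map must lie in [0, 1]")
    m = occlusion[None]  # broadcast the (H, W) map over the channel axis
    return m * warped + (1.0 - m) * inpainted
```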

Final Motion Transfer

For each driving frame, the motion between the first frame and the current frame of the driving video is applied to the source image. This preserves the object’s shape and identity across the sequence while ensuring faithful motion transfer; Table 1 summarizes the components involved. The main benefits of this approach are that training is fully self-supervised, requiring no manual annotations, and that occlusions are handled explicitly [1].
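The relative motion transfer described above can be sketched in a few lines, assuming motion is represented only by keypoint positions (first-order variants also transfer local Jacobians, which are omitted here). Each target keypoint is the source keypoint shifted by how far the corresponding driving keypoint has moved since the first driving frame. The functions `relative_keypoints` and `animate` are hypothetical names; in a full pipeline the resulting keypoints would feed the dense motion and generation stages.

```python
import numpy as np

def relative_keypoints(kp_source, kp_driving_first, kp_driving_t):
    """Shift the source keypoints by the driving video's displacement since its first frame.

    All arrays have shape (K, 2). Transferring relative motion, rather than the
    driving keypoints' absolute positions, keeps the source object's own shape
    and proportions.
    """
    return kp_source + (kp_driving_t - kp_driving_first)

def animate(kp_source, driving_keypoints):
    """Yield target keypoints for each driving frame (driving_keypoints: (T, K, 2))."""
    first = driving_keypoints[0]
    for kp_t in driving_keypoints:
        yield relative_keypoints(kp_source, first, kp_t)
```

By construction, the first output frame reproduces the source keypoints unchanged, so the animation starts from the source image's own pose.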

Table 1: Monkey-Net Architecture Components

| COMPONENT | INPUT | OUTPUT | MECHANISM |
|---|---|---|---|
| Keypoint Detector | Source image + driving video | Sparse keypoints | Encoder-decoder |
| Dense Motion Network | Keypoints | Dense optical flow | Local affine transform |
| Motion Transfer Network | Dense motion + features | Animated frame | CNN-based |
| Occlusion Mask | Motion field | Visibility map | Binary mask |

Conclusion

Not every deepfake poses a risk, but misuse of this technology can cause serious psychological, financial, and emotional harm to the people affected. In this article, we reviewed the principles behind deepfakes and an approach to detecting them through the Monkey-Net architecture, which shows how motion is transferred from a driving video to a static image using self-supervised learning. Future work should aim to improve motion transfer methods while building safeguards against the misuse of deepfakes.

References

- [1] Jhosiah Felips Daniel et al. First order motion model for image animation and deep fake detection: Using deep learning. In 2022 International Conference on Computer Communication and Informatics (ICCCI), pages 1–7. IEEE, 2022.

- [2] Ilke Demir and Umur Aybars Çiftçi. How do deepfakes move? Motion magnification for deepfake source detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pages 4780–4790, 2024.

- [3] Xin Liao, Yumei Wang, Tianyi Wang, Juan Hu, and Xiaoshuai Wu. FAMM: Facial muscle motions for detecting compressed deepfake videos over social networks. IEEE Transactions on Circuits and Systems for Video Technology, 33(12):7236–7251, 2023.

- [4] Yushaa Shafqat Malik, Nosheen Sabahat, and Muhammad Osama Moazzam. Image animations on driving videos with deepfakes and detecting deepfakes generated animations. In 2020 IEEE 23rd International Multitopic Conference (INMIC), pages 1–6. IEEE, 2020.

- [5] Ekta Prashnani, Michael Goebel, and BS Manjunath. Generalizable deepfake detection with phase-based motion analysis. IEEE Transactions on Image Processing, 2024.

- [6] Haichao Zhang, Zhe-Ming Lu, Hao Luo, and Ya-Pei Feng. Restore deepfakes video frames via identifying individual motion styles. Electronics Letters, 57(4):183–186, 2021.

- Gupta, B. B., Gaurav, A., Arya, V., & Alhalabi, W. (2024). The evolution of intellectual property rights in metaverse based Industry 4.0 paradigms. International Entrepreneurship and Management Journal, 20(2), 1111-1126.

- Zhang, T., Zhang, Z., Zhao, K., Gupta, B. B., & Arya, V. (2023). A lightweight cross-domain authentication protocol for trusted access to industrial internet. International Journal on Semantic Web and Information Systems (IJSWIS), 19(1), 1-25.

- Jain, D. K., Eyre, Y. G. M., Kumar, A., Gupta, B. B., & Kotecha, K. (2024). Knowledge-based data processing for multilingual natural language analysis. ACM Transactions on Asian and Low-Resource Language Information Processing, 23(5), 1-16.

- Siarohin, A., Lathuilière, S., Tulyakov, S., Ricci, E., & Sebe, N. (2019). Animating arbitrary objects via deep motion transfer. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 2377-2386).

- Ren, J., Chai, M., Tulyakov, S., Fang, C., Shen, X., & Yang, J. (2020, August). Human motion transfer from poses in the wild. In European Conference on Computer Vision (pp. 262-279). Cham: Springer International Publishing.

- Zhou, Y., Wang, Z., Fang, C., Bui, T., & Berg, T. (2019). Dance dance generation: Motion transfer for internet videos. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops.

Cite As

Kalyan C S N (2025) Motion Transfer and Puppet Mastering: Turning One Actor Into Another, Insights2Techinfo, pp.1