By: C S Nakul Kalyan, CCRI, Asia University, Taiwan

Abstract

Rapid advances in Artificial Intelligence (AI) have made it possible to create manipulated or synthetic videos that look real. Unlike text manipulations, video deepfakes vary in the technical skill and model complexity they require, and they produce distinct behavioral effects. Deepfakes take advantage of visual communication and the emotional responses it evokes, which makes fake content more convincing than before. Political deepfakes do not always succeed in deceiving the audience; instead, manipulated speeches leave viewers unsure of what is true and what is false. As a result, trust in digital news and social media declines, which amplifies democratic vulnerabilities. Treating political deepfakes as a distinct issue, this article examines their role in weakening public trust and advancing propaganda in the digital environment.

Keywords

Political Deepfakes, Visual Disinformation, Artificial Intelligence (AI), Deepfake Technology, Social media, Digital Environment.

Introduction

As digital technology advances, the spread of manipulated content is becoming a growing problem in modern political communication. While most attention has focused on detecting textual and audio manipulation, misinformation spread through visual content has increased. Compared with audio deepfakes, visuals carry a bigger impact, particularly moving images, which range from simple alterations to highly sophisticated deepfake content [1]. Visual manipulations convey more emotion than audio and text-based fakes, which makes them more effective at misleading the public [2]. Political deepfakes generated using artificial intelligence have become the most concerning form of false information. Beyond putting false statements into speeches, deepfakes can erode public trust in democratic communication. Deepfakes do not always fool viewers, but they do create confusion about which information is true and which is fabricated. This article examines how visual political deepfakes are generated and what impact their spread has, as they pose a serious threat to the digital environment [5].

Proposed Methodology – 1

The first method proposes a theoretical framework that examines visual disinformation along two dimensions, modal richness and manipulative sophistication, to characterize fake content, including whether it is low-level or high-level. The framework is explained in detail below:

Categorization of Visual Disinformation

Visual disinformation is categorized along two dimensions:

Modal Richness

Modal richness describes whether the content is static (an image) or dynamic (a video) [5].

Manipulative Sophistication

Manipulative sophistication classifies the manipulation present in the content as low-level or high-level [1].
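The two dimensions can be read as a simple two-by-two grid. The sketch below is a minimal Python illustration, not an implementation taken from the cited framework: the names `Modality`, `Sophistication`, `VisualItem`, and `categorize` are hypothetical, and the two example items are illustrative assumptions.

```python
from dataclasses import dataclass
from enum import Enum


class Modality(Enum):
    """Modal richness: static or dynamic content."""
    STATIC = "static (image)"
    DYNAMIC = "dynamic (video)"


class Sophistication(Enum):
    """Manipulative sophistication: low-level or high-level."""
    LOW = "low-level"
    HIGH = "high-level"


@dataclass(frozen=True)
class VisualItem:
    description: str
    modality: Modality
    sophistication: Sophistication


def categorize(item: VisualItem) -> str:
    """Return the grid cell an item falls into."""
    return f"{item.modality.value} / {item.sophistication.value}"


# Illustrative items only (assumptions, not examples from the source).
examples = [
    VisualItem("photo shared with a misleading caption",
               Modality.STATIC, Sophistication.LOW),
    VisualItem("AI-generated video of a politician's speech",
               Modality.DYNAMIC, Sophistication.HIGH),
]

for item in examples:
    print(f"{item.description}: {categorize(item)}")
```

Keeping the two dimensions as separate enumerations means an item is always described by one value from each, which mirrors how the framework treats modality and sophistication as independent axes.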

Production of Actor Typologies

The three main actor types are political, media, and private actors. Political actors use images as propaganda or warfare tools; media actors may spread fake news unintentionally when checking is done hastily; and private actors are motivated by financial gain and virality [3]. The actor typologies are summarized in Table 1.

Table 1: Multi-model Framework Components and Technologies

| Actor Type | Primary Motivation | Methods Used | Impact |
| --- | --- | --- | --- |
| Political actors | Political warfare | Opposition targeting | Influence elections |
| Media actors | Content creation | Unintentional spread due to fast checking | Spread misinformation |
| Private actors | Financial gain | Social media engagement | Generate revenue through fake content |

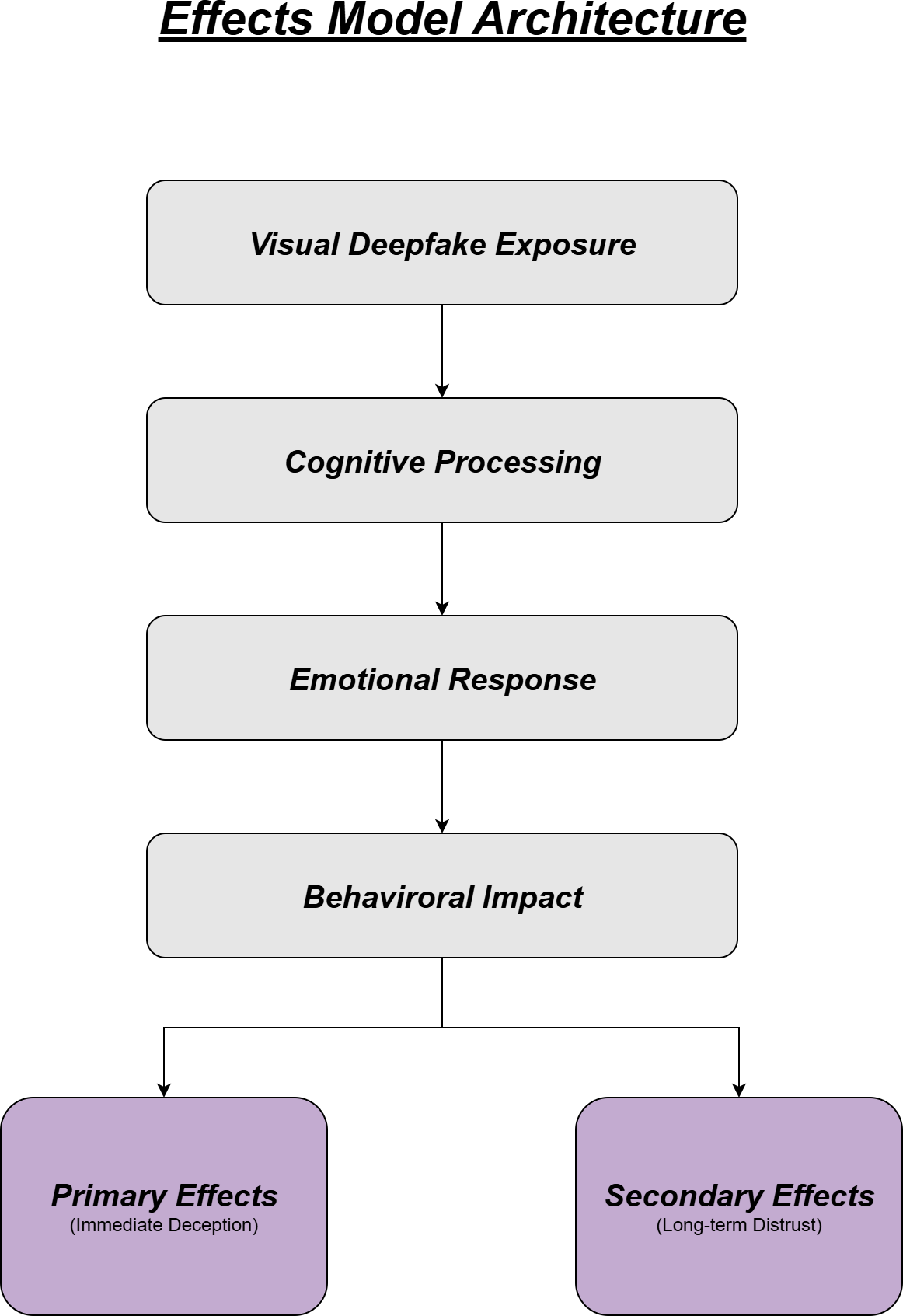

Processing and Effects Model

The processing and effects model examines how users receive visual deepfakes cognitively and affectively, given that visual content tends to appear more credible than words. As deepfakes spread (see Figure 1), public trust decreases and the psychological consequences increase. The model covers primary effects, such as immediate deception, and secondary effects, such as long-term distrust among the public [5].

Proposed Methodology – 2

The second method uses an experimental design to test how political deepfakes affect people’s views. The design is explained in detail below:

Experimental Design

An online experiment with 2,000 UK participants tested responses to different types of deepfake video content [4].

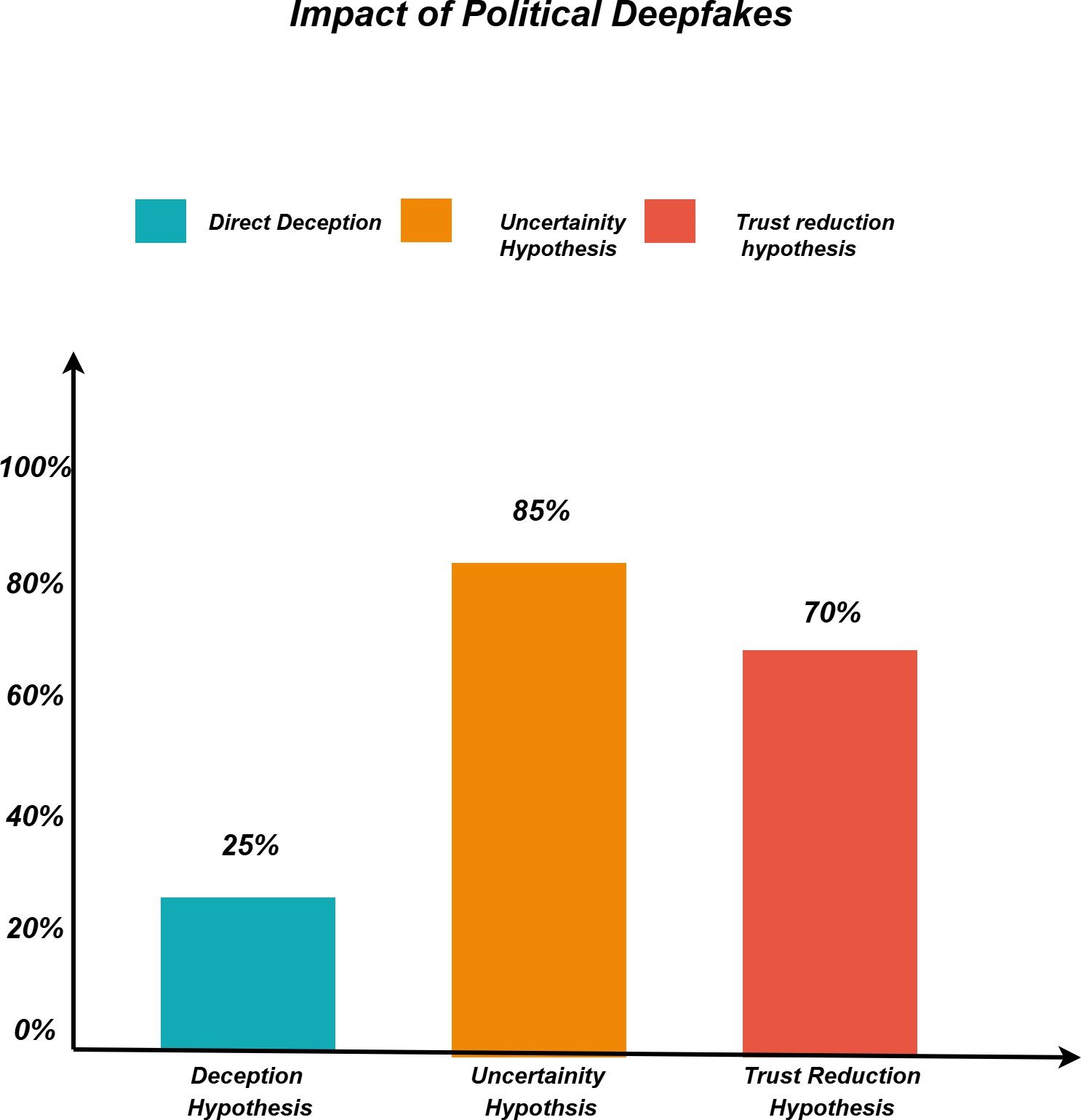

Key Hypotheses Tested

The key hypotheses tested are listed below:

Deception Hypothesis

The deception hypothesis holds that deepfakes mislead people into believing false statements [4].

Uncertainty Hypothesis

The uncertainty hypothesis holds that deepfakes, even when they do not fully deceive, leave people unsure whether the content is true or false [3].

Trust Hypothesis

The trust hypothesis states that deepfake content circulated through social networks reduces people’s trust in digital media. The experimental findings and the impact of political deepfakes are shown in Figure 2.
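The difference between the deception and uncertainty hypotheses becomes clearer when each participant's answer about a false statement is coded into an outcome. The sketch below assumes a hypothetical three-way coding (`"believed"`, `"unsure"`, `"rejected"`) and a hypothetical helper `outcome_shares`; the answers are made up for illustration and do not reproduce the measures, data, or results of the cited experiment. The trust hypothesis would need a separate trust measure and is not modeled here.

```python
from collections import Counter

# Hypothetical coding of answers to a question such as
# "Was the statement in the video real?" (assumed wording).
OUTCOME = {
    "believed": "deceived",      # accepts the false statement (deception)
    "unsure": "uncertain",       # cannot tell true from false (uncertainty)
    "rejected": "not deceived",  # correctly rejects the false statement
}


def outcome_shares(answers):
    """Return the share of participants in each outcome category."""
    if not answers:
        raise ValueError("answers must not be empty")
    counts = Counter(OUTCOME[a] for a in answers)
    return {label: counts.get(label, 0) / len(answers)
            for label in OUTCOME.values()}


# Made-up answers for illustration only; not results from any experiment.
toy_answers = ["rejected", "unsure", "believed", "unsure", "rejected"]
shares = outcome_shares(toy_answers)

for label, share in shares.items():
    print(f"{label}: {share:.0%}")
```

Separating "deceived" from "uncertain" in the coding is the design choice that matters: a tally that only counted correct versus incorrect answers would hide the uncertainty effect the second hypothesis is about.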

Findings and implications

Deepfakes rarely fool people outright, but they frequently leave participants uncertain whether the news is real or fake. This uncertainty reduced trust in news on social media. These are the main findings and implications of the second method [3].

Conclusion

Political deepfakes mark a critical phase in the spread of disinformation, combining visual media with Artificial Intelligence. Visual deepfakes appear more realistic than audio and text manipulations, which makes them especially effective at misleading the public. The theoretical framework classifies them by modality, but the experimental evidence indicates that their primary impact is confusion, which erodes trust in political news on digital media. Political deepfakes are both a tool for generating misinformation and an instrument for reducing public trust in news circulated through digital media. Future research should focus on developing detection tools, legal frameworks, and media literacy programs.

References

- Daniele Battista. Political communication in the age of artificial intelligence: an overview of deepfakes and their implications. Society Register, 8(2):7–24, 2024.

- Andreas Jungherr. Political disinformation: “fake news”, bots, and deep fakes. 2025.

- Thomas Paterson and Lauren Hanley. Political warfare in the digital age: cyber subversion, information operations and ‘deep fakes’. Australian Journal of International Affairs, 74(4):439–454, 2020.

- Cristian Vaccari and Andrew Chadwick. Deepfakes and disinformation: Exploring the impact of synthetic political video on deception, uncertainty, and trust in news. Social Media + Society, 6(1):2056305120903408, 2020.

- Teresa Weikmann and Sophie Lecheler. Visual disinformation in a digital age: A literature synthesis and research agenda. New Media & Society, 25(12):3696–3713, 2023.

- Gupta, B. B., Gaurav, A., Arya, V., & Alhalabi, W. (2024). The evolution of intellectual property rights in metaverse based Industry 4.0 paradigms. International Entrepreneurship and Management Journal, 20(2), 1111-1126.

- Zhang, T., Zhang, Z., Zhao, K., Gupta, B. B., & Arya, V. (2023). A lightweight cross-domain authentication protocol for trusted access to industrial internet. International Journal on Semantic Web and Information Systems (IJSWIS), 19(1), 1-25.

- Jain, D. K., Eyre, Y. G. M., Kumar, A., Gupta, B. B., & Kotecha, K. (2024). Knowledge-based data processing for multilingual natural language analysis. ACM Transactions on Asian and Low-Resource Language Information Processing, 23(5), 1-16.

- Appel, M., & Prietzel, F. (2022). The detection of political deepfakes. Journal of Computer-Mediated Communication, 27(4), zmac008.

- Dobber, T., Metoui, N., Trilling, D., Helberger, N., & De Vreese, C. (2021). Do (microtargeted) deepfakes have real effects on political attitudes?. The International Journal of Press/Politics, 26(1), 69-91.

Cite As

Kalyan C S N (2025) Political Deepfakes: The New Age of Propaganda and Disinformation, Insights2Techinfo, pp.1