By: C S Nakul Kalyan, CCRI, Asia University, Taiwan

Abstract

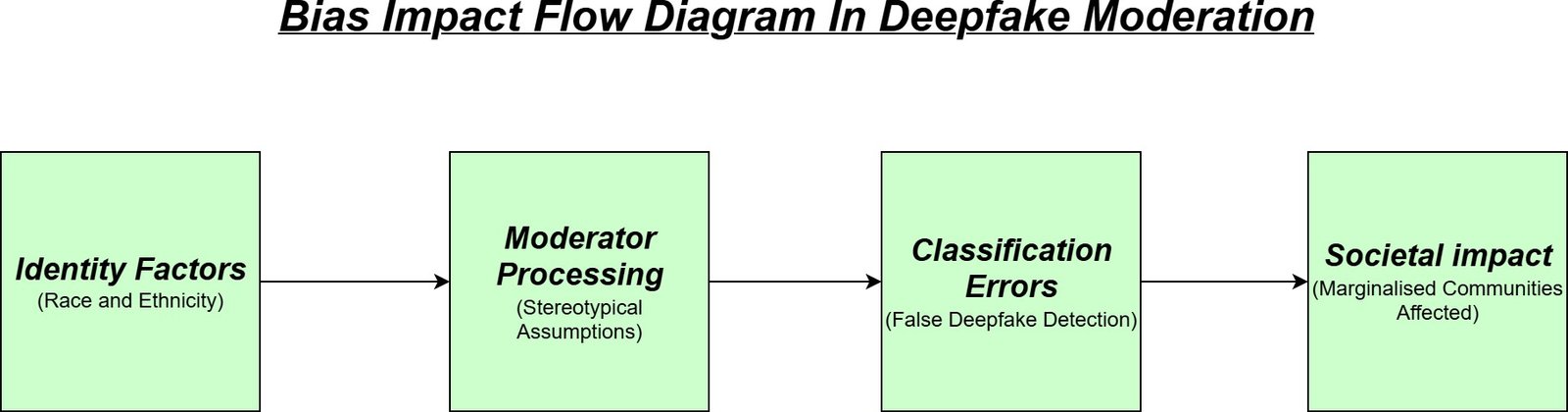

The growth of Artificial Intelligence (AI) and Deep Learning (DL) has enabled the creation of deepfakes: highly realistic synthetic media that raise ethical, social, and security concerns. Recently, deepfakes have increasingly been used to alter the apparent race and ethnicity of the people they depict, a practice that intersects with bias and discrimination. This study examines the ethical minefield created by race- and ethnicity-based deepfakes. It draws on a user study of human moderation of LinkedIn profiles and on an analysis of deepfake misuse. The findings indicate that moderators' decisions are shaped by identity factors, shared group membership, and profile components, and that moderators rely on stereotyped expectations of race and gender. Such judgments can cause authentic profiles to be misclassified as fabricated, which disproportionately harms disadvantaged people. Finally, the study discusses the risk that deepfakes and content moderation practices reinforce racial and ethnic harms.

Keywords

Race and Ethnicity, Stereotype Bias, Deepfakes, Ethical Implications, Artificial Intelligence.

Introduction

The development of AI and deep learning has paved the way for the manipulation of digital content, including deepfake technology, which uses techniques such as Generative Adversarial Networks (GANs) to create realistic images, videos, and texts. Deepfakes have been misused in many areas, such as identity theft, cyber harassment, and political manipulation [3]. Social media platforms have responded by banning artificially generated and deepfake content, enforcing these standards through automated detection technologies and human moderation [4]. Recent studies have shown that moderation errors are influenced by racial and gender biases: human moderators may misclassify profiles from marginalized communities because they often rely on stereotypes and heuristics [5]. Similarly, altering race and ethnicity in deepfakes can spread damaging narratives and deepen systemic inequalities. This article examines the implications of race- and ethnicity-based deepfakes and the need for fair moderation practices and bias-aware detection systems that safeguard digital trust and protect vulnerable groups [1].

Proposed Methodology

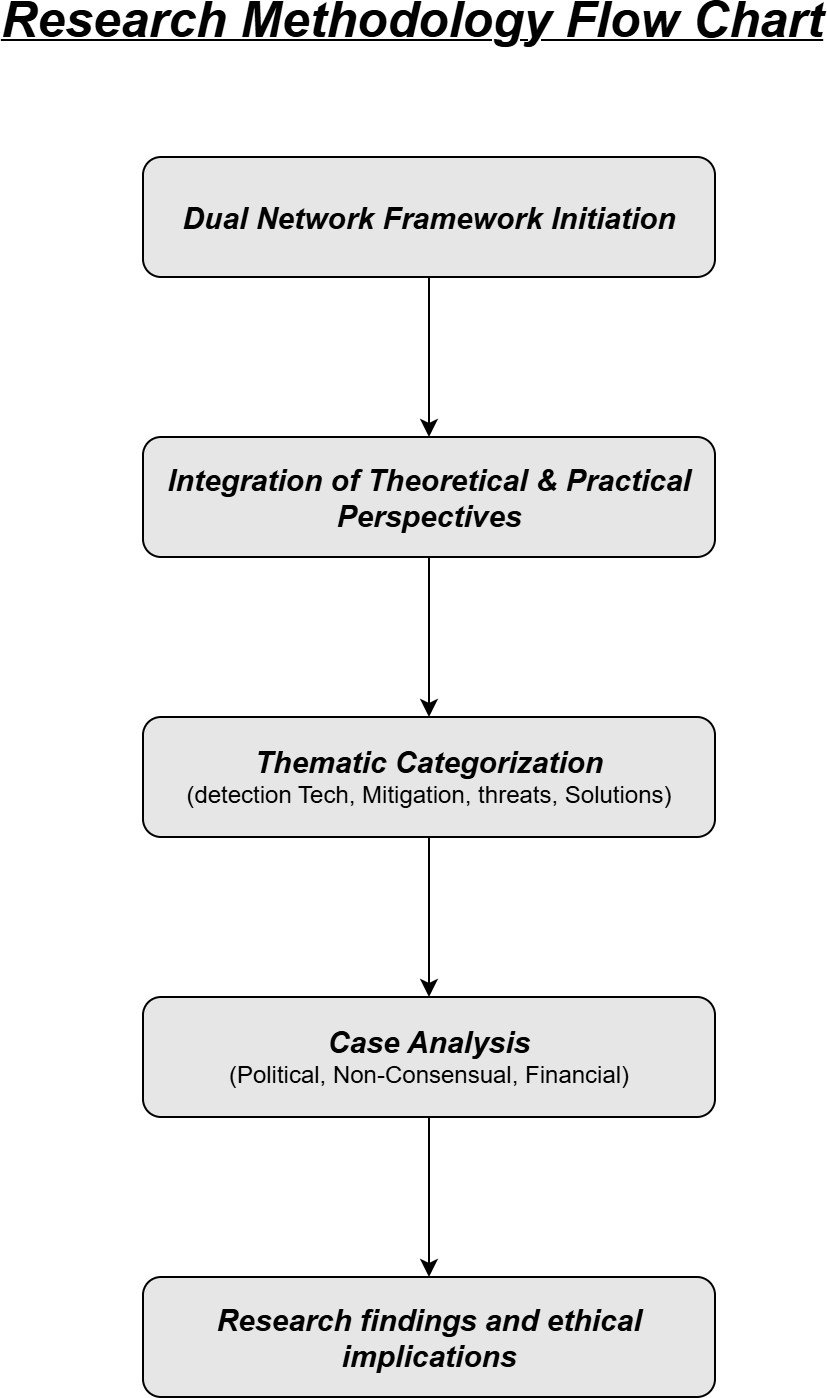

This study uses a two-part framework consisting of a literature review with thematic content analysis and a case study approach. Combining the two provides a conceptual understanding of deepfake-related issues together with insights grounded in real-world examples.

Research Design

The research design investigates the ethical, technological, and societal implications of deepfakes. It uses an integrative framework so that both theoretical and practical perspectives are included [3], as shown in Figure 1.

Research Objectives

The main research objectives are:

Ethical and social risks

The study analyzes the possible harms caused by deepfake technologies, such as privacy violations, misinformation, and reputational damage [4].

Countermeasure evaluation

The study identifies and evaluates technological and regulatory strategies for mitigating deepfake harms [3].

Real-world impacts

The study examines real-world case studies to understand the consequences of deepfake content for digital trust [5].

Data Sources and Selection Criteria

The data sources and inclusion criteria were defined as follows:

Sources

Sources were collected from peer-reviewed journal articles, conference proceedings, industry reports, and credible news outlets [2].

Databases

References were drawn from IEEE Xplore, ScienceDirect, ResearchGate, and Google Scholar.

Analytical Framework

The analysis covers the following areas:

AI-Based Detection Analysis

This analysis reviews deep learning architectures, such as Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), applied to synthesized images and videos [1]. These architectures are used to identify unnatural facial expressions, temporal inconsistencies, and audio-video mismatches.
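The temporal-inconsistency cue can be illustrated with a minimal sketch. It does not implement a CNN or RNN. Instead, it assumes that some upstream model (hypothetical here) has already produced one feature vector per video frame, and it flags frames whose cosine distance from the previous frame exceeds a threshold. The function names, the threshold of 0.3, and the toy vectors are illustrative assumptions.

```python
import math
from typing import List, Sequence


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Return 1 - cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 1.0  # treat an all-zero vector as maximally different
    return 1.0 - dot / (norm_a * norm_b)


def flag_temporal_jumps(frame_features: List[Sequence[float]],
                        threshold: float = 0.3) -> List[int]:
    """Return indices of frames that differ sharply from the preceding frame.

    frame_features: one vector per frame, assumed to come from a hypothetical
    upstream face-embedding model (for example, a CNN).
    threshold: illustrative cut-off, not a value taken from the article.
    """
    flagged = []
    for i in range(1, len(frame_features)):
        if cosine_distance(frame_features[i - 1], frame_features[i]) > threshold:
            flagged.append(i)
    return flagged


# Toy sequence: frames 0-2 are similar, frame 3 changes abruptly, frame 4 returns.
frames = [[1.0, 0.0], [0.98, 0.05], [0.97, 0.1], [0.0, 1.0], [0.96, 0.12]]
print(flag_temporal_jumps(frames))  # [3, 4]: the jump into and out of frame 3
```

A learned temporal model would replace the fixed threshold with patterns learned from labeled data. The sketch only shows why abrupt frame-to-frame changes are a useful signal.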

Digital Watermarking

Watermarking systems are analyzed as a means of tagging original media so that its authenticity can be verified after distribution.
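The following example makes the watermarking idea concrete. It embeds a short bit pattern into the least significant bits (LSBs) of 8-bit pixel values and later extracts the pattern for verification. LSB embedding is a simple fragile-watermark technique chosen here only for illustration, because the article does not specify which watermarking schemes it analyzes. The function names and the plain list-of-integers image representation are assumptions.

```python
from typing import List


def embed_lsb(pixels: List[int], bits: List[int]) -> List[int]:
    """Write each watermark bit into the least significant bit of one pixel."""
    if len(bits) > len(pixels):
        raise ValueError("watermark is longer than the number of pixels")
    marked = list(pixels)  # copy so the original pixels stay untouched
    for i, bit in enumerate(bits):
        marked[i] = (marked[i] & ~1) | (bit & 1)  # clear the LSB, then set it
    return marked


def extract_lsb(pixels: List[int], length: int) -> List[int]:
    """Read back the first `length` least significant bits."""
    return [p & 1 for p in pixels[:length]]


def verify(pixels: List[int], expected_bits: List[int]) -> bool:
    """Media passes verification only if the embedded pattern is intact."""
    return extract_lsb(pixels, len(expected_bits)) == expected_bits


original = [120, 121, 200, 33, 64, 255, 0, 17]  # toy 8-bit grayscale pixels
mark = [1, 0, 1, 1, 0, 0, 1, 0]                 # hypothetical owner watermark
tagged = embed_lsb(original, mark)
print(verify(tagged, mark))    # True

tampered = list(tagged)
tampered[2] ^= 1               # flip one LSB to simulate an edit
print(verify(tampered, mark))  # False
```

Because even a one-pixel edit can destroy the pattern, a fragile watermark of this kind signals tampering. Robust watermarking schemes instead aim to survive compression and re-encoding.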

Blockchain-Based Authentication

Decentralized ledger systems are examined as a way to record information about digital content and later verify its originality.
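A minimal, single-node hash chain can illustrate the ledger idea. Each record stores the SHA-256 digest of a media file together with the hash of the previous record, so altering any earlier entry breaks every later link. This is a deliberate simplification: a real blockchain adds distribution and consensus across many nodes, and this sketch omits both. The record fields and function names are hypothetical.

```python
import hashlib
import json
from typing import Dict, List


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def record_hash(record: Dict[str, str]) -> str:
    """Hash a record deterministically (sorted keys) so checks are repeatable."""
    return sha256_hex(json.dumps(record, sort_keys=True).encode("utf-8"))


def append_entry(ledger: List[Dict[str, str]], media: bytes, source: str) -> None:
    """Register a media item by its content hash, linked to the previous entry."""
    prev = record_hash(ledger[-1]) if ledger else "0" * 64  # genesis placeholder
    ledger.append({"content_hash": sha256_hex(media), "source": source,
                   "prev_hash": prev})


def chain_is_valid(ledger: List[Dict[str, str]]) -> bool:
    """Check that every entry still points at the hash of the entry before it."""
    return all(ledger[i]["prev_hash"] == record_hash(ledger[i - 1])
               for i in range(1, len(ledger)))


def is_registered(ledger: List[Dict[str, str]], media: bytes) -> bool:
    """An unmodified file matches a registered content hash; an edited one does not."""
    digest = sha256_hex(media)
    return any(entry["content_hash"] == digest for entry in ledger)


ledger: List[Dict[str, str]] = []
append_entry(ledger, b"original interview video bytes", "newsroom-A")
append_entry(ledger, b"press photo bytes", "agency-B")
print(chain_is_valid(ledger))                                    # True
print(is_registered(ledger, b"original interview video bytes"))  # True
print(is_registered(ledger, b"edited interview video bytes"))    # False
ledger[0]["source"] = "forged"  # tamper with an earlier record
print(chain_is_valid(ledger))                                    # False
```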

Collaborative Detection Initiatives

Large-scale datasets and challenges, such as the Deepfake Detection Challenge (DFDC), are reviewed as benchmarks for detection models [5].
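Benchmark results on datasets such as the DFDC are usually summarized with aggregate metrics, for example accuracy and binary log loss. Because this article focuses on identity-based bias, the sketch below also breaks down the false positive rate (authentic items wrongly flagged as fake) by demographic group. Aggregate scores can hide that kind of disparity. The group labels, the 0.5 decision threshold, and the toy predictions are hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

# Each sample: (true_label, predicted_probability_fake, group); label 1 = fake, 0 = authentic.
Sample = Tuple[int, float, str]


def log_loss(samples: List[Sample], eps: float = 1e-15) -> float:
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for y, p, _ in samples:
        p = min(max(p, eps), 1 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(samples)


def accuracy(samples: List[Sample], threshold: float = 0.5) -> float:
    """Fraction of samples whose thresholded prediction matches the label."""
    correct = sum(1 for y, p, _ in samples if (p >= threshold) == (y == 1))
    return correct / len(samples)


def false_positive_rate_by_group(samples: List[Sample],
                                 threshold: float = 0.5) -> Dict[str, float]:
    """Share of authentic (label 0) items flagged as fake, computed per group."""
    flagged: Dict[str, int] = defaultdict(int)
    authentic: Dict[str, int] = defaultdict(int)
    for y, p, group in samples:
        if y == 0:
            authentic[group] += 1
            if p >= threshold:
                flagged[group] += 1
    return {g: flagged[g] / authentic[g] for g in authentic}


# Toy predictions from a hypothetical detector; group names are placeholders.
data: List[Sample] = [
    (1, 0.9, "group_a"), (0, 0.2, "group_a"), (0, 0.1, "group_a"), (0, 0.3, "group_a"),
    (1, 0.8, "group_b"), (0, 0.7, "group_b"), (0, 0.6, "group_b"), (0, 0.2, "group_b"),
]
print(round(accuracy(data), 3))           # 0.75
print(false_positive_rate_by_group(data))  # group_b's authentic items are flagged far more often
print(round(log_loss(data), 3))
```

In this toy data, an overall accuracy of 0.75 conceals that every false positive falls on one group. That is the pattern of authentic profiles being misclassified as fabricated that this article is concerned with.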

Thematic Categorization

The insights were grouped into four themes: detection technologies, mitigation techniques, evolving generative threats, and interdisciplinary solutions [2].
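Thematic categorization is usually carried out by human coders, but a keyword codebook can support a first pass over a large set of abstracts. In the sketch below, a source is assigned to each of the four themes whenever its text contains one of that theme's keywords. The keyword lists are illustrative assumptions, not the coding scheme used in this study, and any automated tags would still need manual review.

```python
import re
from typing import Dict, List

# Hypothetical codebook: the theme names come from the text, the keywords do not.
CODEBOOK: Dict[str, List[str]] = {
    "detection technologies": ["detection", "classifier", "cnn", "benchmark"],
    "mitigation techniques": ["watermark", "blockchain", "regulation", "provenance"],
    "evolving generative threats": ["gan", "diffusion", "voice cloning", "synthesis"],
    "interdisciplinary solutions": ["policy", "ethics", "law", "education"],
}


def tag_themes(text: str, codebook: Dict[str, List[str]] = CODEBOOK) -> List[str]:
    """Return every theme with a whole-word keyword match (case-insensitive).

    Word boundaries stop, for example, "gan" from matching "organization".
    """
    lowered = text.lower()
    return [theme for theme, keywords in codebook.items()
            if any(re.search(r"\b" + re.escape(k) + r"\b", lowered) for k in keywords)]


abstract = "We propose a CNN classifier and discuss watermark-based provenance."
print(tag_themes(abstract))  # ['detection technologies', 'mitigation techniques']
```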

Case Study Approach

To complement the theoretical findings, the second stage examines real-world cases of deepfake use through the process shown below.

Case Selection

Cases were selected from high-impact incidents reported in global media, in the following categories:

Political Manipulation

Deepfake videos are created to spread misinformation during elections [3].

Non-consensual Deepfake Pornography

This content targets female celebrities and ordinary individuals, reinforcing gender-based exploitation [4].

Financial Fraud

Deepfake audio and video are used to defraud financial organizations.

Analysis Process

Each case was analyzed along four dimensions: the nature of the deepfake misuse, ethical implications, societal implications, and technical responses [5]. The results are summarized in Table 1 and represented diagrammatically in Figure 2.

Table 1: Case Study Analysis Matrix

| Case Type | Misuse Type | Ethical Implications | Societal Consequences |
| --- | --- | --- | --- |
| Political Manipulation | Fabricated candidate | Democratic integrity | Election interference |
| Non-consensual Deepfake Pornography | Revenge exploitation | Consent violations | Career damage |
| Financial Fraud | Fraudulent video calls | Financial consent breach | Economic losses in the millions |

Conclusion

This article shows that perceived racial and ethnic identity affects judgments of profile authenticity, leading to biased decisions. This highlights the need for detection and moderation systems that are sensitive to identity-based bias. To maintain equity in digital settings, these challenges must be addressed through a combination of AI innovation, ethical monitoring, and regulatory and awareness measures.

References

- A. Alnaser. The impact of AI-augmented image and video editing on social media engagement, perception, and digital culture. Journal of Computational Intelligence, Machine Reasoning, and Decision-Making, 9(10):15–32, 2024.

- J. Baker and A. K. Mahal. "I have always found the whole area a minefield": Wikidata, historical lives, and knowledge infrastructure. International Journal of Digital Humanities, pages 1–20, 2024.

- Y. Dong and J. Guo. The perils of bias: Navigating ethical challenges in AI-driven politics. Administration & Society, 57(5):749–773, 2025.

- S. Islam. Unethical use of artificial intelligence: The phenomena of deepfakes.

- J. Mink, M. Wei, C. W. Munyendo, K. Hugenberg, T. Kohno, E. M. Redmiles, and G. Wang. "It's trying too hard to look real": Deepfake moderation mistakes and identity-based bias. In Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems, pages 1–20, 2024.

- B. B. Gupta, A. Gaurav, V. Arya, and W. Alhalabi. The evolution of intellectual property rights in metaverse based Industry 4.0 paradigms. International Entrepreneurship and Management Journal, 20(2):1111–1126, 2024.

- T. Zhang, Z. Zhang, K. Zhao, B. B. Gupta, and V. Arya. A lightweight cross-domain authentication protocol for trusted access to industrial internet. International Journal on Semantic Web and Information Systems (IJSWIS), 19(1):1–25, 2023.

- D. K. Jain, Y. G. M. Eyre, A. Kumar, B. B. Gupta, and K. Kotecha. Knowledge-based data processing for multilingual natural language analysis. ACM Transactions on Asian and Low-Resource Language Information Processing, 23(5):1–16, 2024.

- M. Li, Y. Ahmadiadli, and X. P. Zhang. A survey on speech deepfake detection. ACM Computing Surveys, 57(7):1–38, 2025.

- V. K. Sharma, R. Garg, and Q. Caudron. A systematic literature review on deepfake detection techniques. Multimedia Tools and Applications, 84(20):22187–22229, 2025.

- L. Pham, P. Lam, D. Tran, H. Tang, T. Nguyen, A. Schindler, … and H. C. Vu. A comprehensive survey with critical analysis for deepfake speech detection. Computer Science Review, 57:100757, 2025.

Cite As

Kalyan C S N (2025) Race and Ethnicity Alteration in Deepfakes: An Ethical Minefield, Insights2Techinfo, pp.1