By: C S Nakul Kalyan; CCRI, Asia University, Taiwan

Abstract

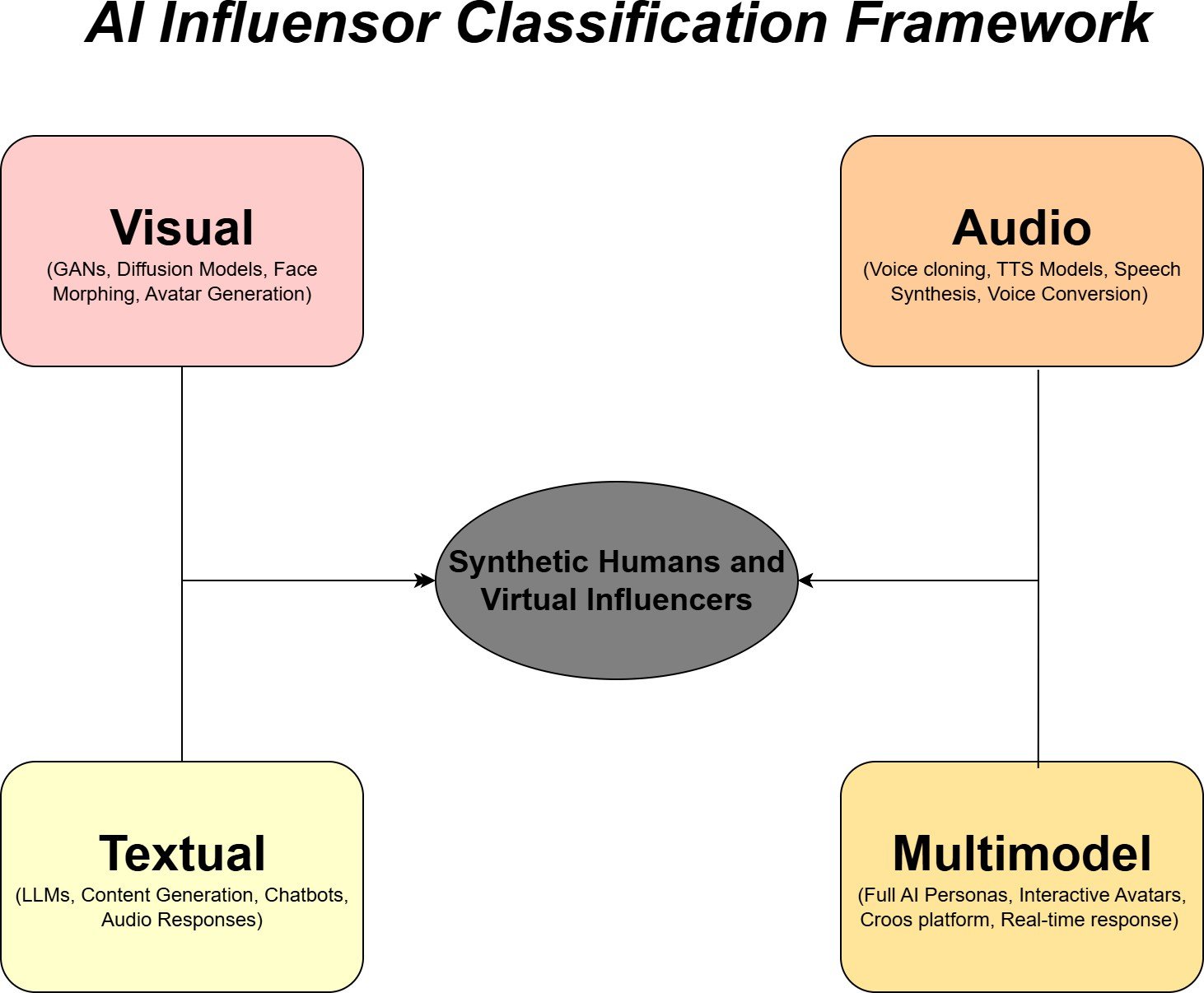

This article examines advances in synthetic humans and virtual influencers (VIs) driven by artificial intelligence (AI) and deepfake technologies, and analyzes their impact on digital content authentication, audience trust, and marketing strategies. Built with generative models such as GANs, VAEs, and diffusion models, along with text-to-voice conversion models, these entities function as independent digital celebrities capable of shaping customers' perceptions and decisions. The study proposes a classification of AI influencers into visual, audio, textual, and multimodal types, and investigates what motivates audiences to interact with such entities, focusing on mystery and authenticity. It also highlights the challenges posed by non-human influencers and outlines multimodal detection systems, content labelling, and expanded media literacy initiatives as responses.

Keywords

Synthetic humans, Virtual influencers (VIs), Digital Celebrities, Deepfake Technologies, Multimodal Detection systems.

Introduction

The combination of artificial intelligence (AI) and social media has led to the rise of synthetic humans and virtual influencers (VIs), which are generated using deepfake technologies [4][5]. These AI-driven personas are created using generative adversarial networks (GANs), variational autoencoders (VAEs), diffusion models, and voice conversion techniques, and are increasingly used in marketing, entertainment, and educational contexts [1][5]. Their ability to imitate human appearance, behavior, and communication allows them to engage audiences to a certain degree. However, they also raise concerns about the authenticity of digital content and audience trust [2][4].

Current research on this topic spans multiple domains. Marketing studies examine the potential and audience appeal of virtual influencers (VIs), particularly among young people [1][2]. Technical studies focus on the creation, detection, and verification of synthetic content, as shown in Figure 1 [4][5], while legal research examines regulatory frameworks, copyright, and platform liability, and ethical analysis explores the implications of AI-generated personas. Despite these contributions, there is a lack of interdisciplinary integration, and empirical data on user perceptions and cross-cultural analyses remain limited [3]. In this article, we identify and systematize the technological, social, and legal dimensions of using deepfake-based virtual influencers [1][5], assess their impact on audience trust [2], and propose a unified approach that combines multimodal detection with governance measures to address the challenges of authenticity [4].

Figure 1: Classification Framework

Methodology

This study combines technical detection strategies for synthetic content with legal and regulatory measures, forming a dual-framework approach to reduce the risks posed by deepfake-based virtual influencers [4][5].

Technical Detection Approaches

The technical approach targets synthetic content detection and consists of the following:

Visual Deepfake Detection

Feature-engineered methods base detection on inconsistencies in shadows, optical flow, blinking frequency, facial anatomy, and background illumination.

Deep-feature methods use neural networks such as CNNs, CNN-LSTM hybrids, attention mechanisms, and autoencoders for automatic feature extraction and classification [4][5].
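To make the feature-engineered idea concrete, the following minimal sketch checks one of the cues named above, blinking frequency. It assumes that some upstream face-landmark step (not shown, and not specified in the cited work) already produced a per-frame eye-openness score; the function names, the closed-eye threshold of 0.2, and the "plausible" range of 5 to 40 blinks per minute are all illustrative assumptions, not values from the sources.

```python
from typing import List


def count_blinks(openness: List[float], closed_threshold: float = 0.2) -> int:
    """Count blinks as transitions from an open eye to a closed eye."""
    blinks = 0
    eye_closed = False
    for score in openness:
        if score < closed_threshold and not eye_closed:
            blinks += 1          # a new closure starts here
            eye_closed = True
        elif score >= closed_threshold:
            eye_closed = False   # eye reopened, ready for the next blink
    return blinks


def blink_rate_per_minute(openness: List[float], fps: float,
                          closed_threshold: float = 0.2) -> float:
    """Blinks per minute for a clip sampled at `fps` frames per second."""
    if not openness:
        return 0.0
    return count_blinks(openness, closed_threshold) * 60.0 * fps / len(openness)


def is_blink_rate_suspicious(rate: float, low: float = 5.0,
                             high: float = 40.0) -> bool:
    """Flag rates outside an assumed plausible range (illustrative bounds)."""
    return rate < low or rate > high


# Hypothetical 10-second clip at 30 fps with two short eye closures.
clip = [0.30] * 300
for start in (60, 200):
    for i in range(start, start + 4):
        clip[i] = 0.10

rate = blink_rate_per_minute(clip, fps=30)
print(rate, is_blink_rate_suspicious(rate))
```

A single cue like this is easy to evade, which is one reason the literature pairs such heuristics with learned deep features.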

Synthetic Speech Detection

Handcrafted methods analyze acoustic properties, including log-mel spectrograms and breathing-speech-silence patterns, to identify irregularities in voice generation.

Deep-learning methods apply convolutional and recurrent models (ResNet, EfficientCNN) to detect neural “fingerprints” and other anomalies [4].
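As a rough illustration of a handcrafted acoustic cue, the sketch below summarizes the speech-silence pattern of a signal using short-time energy. It is only a simplified stand-in: it does not compute log-mel spectrograms or detect breathing, and the frame length, energy threshold, and function names are assumptions chosen for the example rather than values from the cited work.

```python
import math
from typing import Dict, List


def frame_energies(samples: List[float], frame_len: int) -> List[float]:
    """Mean squared amplitude of consecutive, non-overlapping frames."""
    return [
        sum(s * s for s in samples[i:i + frame_len]) / frame_len
        for i in range(0, len(samples) - frame_len + 1, frame_len)
    ]


def silence_pattern(samples: List[float], frame_len: int = 160,
                    energy_threshold: float = 1e-4) -> Dict[str, float]:
    """Return the share of silent frames and the number of distinct pauses."""
    energies = frame_energies(samples, frame_len)
    silent = [e < energy_threshold for e in energies]
    # A pause starts wherever a silent frame follows a non-silent one.
    pauses = sum(
        1 for i, s in enumerate(silent) if s and (i == 0 or not silent[i - 1])
    )
    ratio = sum(silent) / len(silent) if silent else 0.0
    return {"silence_ratio": ratio, "pause_count": pauses}


def tone(n_samples: int, freq_hz: float = 200.0, sample_rate: float = 8000.0,
         amplitude: float = 0.5) -> List[float]:
    """Synthetic sine tone used here as a placeholder for voiced speech."""
    return [
        amplitude * math.sin(2 * math.pi * freq_hz * t / sample_rate)
        for t in range(n_samples)
    ]


# Placeholder signal: "speech", a pause, then "speech" again.
signal = tone(1600) + [0.0] * 800 + tone(1600)
print(silence_pattern(signal))
```

In a detector, statistics like these would be compared against what is typical for natural speech; unnaturally regular or missing pauses are the kind of irregularity the handcrafted methods look for.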

AI Generated Text Detection

Lexical-statistical classifiers use support vector machines (SVMs) or logistic regression over TF-IDF and similar frequency-based features.
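The sketch below shows how such a lexical-statistical classifier fits together: TF-IDF features feeding a logistic regression trained by gradient descent. It is written with the standard library only so that every step is visible; in practice a machine learning library would normally be used. The four training sentences are hand-written placeholders, not real data, and the tokenizer, learning rate, and epoch count are assumptions for illustration.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple

Vector = Dict[str, float]


def tokenize(text: str) -> List[str]:
    """Lowercase whitespace tokenizer (deliberately naive)."""
    return text.lower().split()


def fit_idf(docs: List[List[str]]) -> Dict[str, float]:
    """Smoothed inverse document frequency for every training term."""
    n_docs = len(docs)
    doc_freq = Counter(term for doc in docs for term in set(doc))
    return {t: math.log((1 + n_docs) / (1 + df)) + 1.0
            for t, df in doc_freq.items()}


def tfidf(tokens: List[str], idf: Dict[str, float]) -> Vector:
    """L2-normalised TF-IDF vector; terms unseen in training are ignored."""
    counts = Counter(t for t in tokens if t in idf)
    vec = {t: c * idf[t] for t, c in counts.items()}
    norm = math.sqrt(sum(v * v for v in vec.values())) or 1.0
    return {t: v / norm for t, v in vec.items()}


def predict_proba(x: Vector, weights: Vector, bias: float) -> float:
    """Logistic-regression probability that x is class 1 (AI-generated)."""
    z = bias + sum(weights.get(t, 0.0) * v for t, v in x.items())
    return 1.0 / (1.0 + math.exp(-z))


def train_logreg(xs: List[Vector], ys: List[int], epochs: int = 200,
                 lr: float = 0.5) -> Tuple[Vector, float]:
    """Plain stochastic gradient descent on the logistic loss."""
    weights: Vector = {}
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(xs, ys):
            error = predict_proba(x, weights, bias) - y
            bias -= lr * error
            for t, v in x.items():
                weights[t] = weights.get(t, 0.0) - lr * error * v
    return weights, bias


# Toy placeholder corpus: 1 = AI-generated, 0 = human-written.
TEXTS = [
    "honestly i loved this cafe lol",
    "my cat knocked over the coffee again",
    "as an ai language model i cannot browse",
    "in conclusion it is important to note",
]
LABELS = [0, 0, 1, 1]

docs = [tokenize(t) for t in TEXTS]
idf = fit_idf(docs)
features = [tfidf(d, idf) for d in docs]
weights, bias = train_logreg(features, LABELS)
```

A four-sentence corpus only demonstrates the mechanics; it says nothing about real-world accuracy, which depends on large, representative training data.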

Fine-tuned language models are Transformer-based classifiers trained to distinguish human-written from AI-generated text [4].
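The visual, speech, and text detectors in this section each produce a separate judgment. One simple way to combine them into a multimodal decision is late fusion, sketched here as a weighted average of per-modality scores. This particular fusion rule, the modality names, and the weights are assumptions made for illustration; the sources do not prescribe a specific fusion method.

```python
from typing import Dict


def fuse_scores(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted average of per-modality 'synthetic' scores in [0, 1].

    Only modalities that have both a score and a weight contribute, so the
    function still works when, for example, a post has no audio track.
    """
    for name, value in scores.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"score for {name!r} must lie in [0, 1]")
    used = {m: w for m, w in weights.items() if m in scores}
    total = sum(used.values())
    if total <= 0:
        raise ValueError("no weighted modality scores available")
    return sum(scores[m] * w for m, w in used.items()) / total


# Hypothetical detector outputs and weights for one piece of content.
example_scores = {"visual": 0.9, "audio": 0.7, "text": 0.2}
example_weights = {"visual": 0.5, "audio": 0.3, "text": 0.2}
print(fuse_scores(example_scores, example_weights))
```

Averaging keeps each detector independent and replaceable, at the cost of ignoring cross-modal cues such as a mismatch between lip movement and audio.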

Legal and Regulatory Approaches

This section reviews the legal measures taken to curb misuse of the technology, organized by jurisdiction:

United States

The key instruments in the US are the Deepfake Report Act (2019), Section 230 of the Communications Decency Act (CDA), defamation law, and the right of publicity.

The main limitation is the difficulty of proving intent and causation.

European Union

The key instruments here are the Digital Services Act and the AI Act proposal.

The main limitation is that no act specifically addresses the misuse of AI.

United Kingdom

The key instrument here is the Online Safety Bill, which mandates the removal of illegal content and introduces amendments on deepfake pornography, as summarized in Table 1.

Table 1: Comparison of Legal and Regulatory Approaches by Jurisdiction

| Country | Key Legislation | Limitations | Enforcement |
| --- | --- | --- | --- |
| United States | Deepfake Report Act (2019) | Proving intent and causation | Limited |
| European Union | Digital Services Act | No specific deepfake provisions | Developing |
| United Kingdom | Online Safety Bill | Limited scope | Active |

Recommended Integrated Measures

To counter the misuse of synthetic humans and virtual influencers [1][4], the following measures are recommended:

Mandatory Digital Labelling

Watermarks or metadata should be used to differentiate authentic from synthetic media [4].
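A metadata label of this kind can be sketched as a small signed record attached to a media file. The example below is a minimal illustration under stated assumptions: the field names (`content_sha256`, `synthetic`, `generator`) are hypothetical rather than taken from any labelling standard, and a shared-secret HMAC stands in for whatever signing scheme a real platform would adopt.

```python
import hashlib
import hmac
import json


def _signing_message(fields: dict) -> bytes:
    """Canonical byte form of the label fields (sorted keys, UTF-8)."""
    return json.dumps(fields, sort_keys=True).encode("utf-8")


def make_label(media_bytes: bytes, generator: str, secret_key: bytes) -> dict:
    """Build a signed label declaring the media as synthetic."""
    fields = {
        "content_sha256": hashlib.sha256(media_bytes).hexdigest(),
        "synthetic": True,
        "generator": generator,  # hypothetical free-text model identifier
    }
    signature = hmac.new(secret_key, _signing_message(fields),
                         hashlib.sha256).hexdigest()
    return {**fields, "signature": signature}


def verify_label(media_bytes: bytes, label: dict, secret_key: bytes) -> bool:
    """Check that the label matches the media and was not altered."""
    fields = {k: v for k, v in label.items() if k != "signature"}
    if fields.get("content_sha256") != hashlib.sha256(media_bytes).hexdigest():
        return False  # label belongs to different content
    expected = hmac.new(secret_key, _signing_message(fields),
                        hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, str(label.get("signature", "")))


# Placeholder bytes standing in for an image or video file.
media = b"example-media-bytes"
key = b"demo-secret-key"
label = make_label(media, generator="demo-model", secret_key=key)
print(verify_label(media, label, key))
```

Because the label travels separately from the pixels, it can be stripped; this is why metadata labelling is usually discussed together with watermarks embedded in the content itself.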

Algorithmic Transparency

AI models, their architectures, and their risk profiles should be publicly documented [4].

Media Literacy Initiatives

Educational programs that enhance public awareness and critical evaluation skills would be highly valuable [3][4].

Conclusion

The rise of deepfake-driven virtual influencers, powered by advanced generative models such as GANs, VAEs, and diffusion models, is reshaping the landscape of digital influence. These synthetic personas offer marketing opportunities such as overcoming geographical constraints, achieving higher engagement rates than human influencers, and supporting brand strategies. They also bring challenges, including the erosion of public trust, the risk of spreading misinformation, and growing vulnerability to deepfake-enabled fraud.

Audience perception remains a critical factor: some users appreciate the mystery and responsiveness of virtual influencers. Teenagers and millennials emerge as the most active users of these technologies, underscoring the generational dynamics of this phenomenon.

Future initiatives should concentrate on creating adaptive multimodal detection frameworks and reevaluating platform liability in order to preserve the potential of AI-generated influencers as useful marketing and educational tools. Maintaining trust while properly leveraging the influence of these “authentically fake” digital celebrities will require a balanced combination of technological safeguards, legal restrictions, and media literacy programs.

References

- Muskan Arora, Kaushal Kishore Mishra, Mandeep Singh, Praveen Singh, and Rashmi Tripathi. Deepfake technology and its implications for influencer marketing. In Navigating the World of Deepfake Technology, pages 66–90. IGI Global, 2024.

- Chen Lou, Siu Ting Josie Kiew, Tao Chen, Tze Yen Michelle Lee, Jia En Celine Ong, and ZhaoXi Phua. Authentically fake? How consumers respond to the influence of virtual influencers. Journal of Advertising, 52(4):540–557, 2023.

- Sarah Sanders. “Fake, faker, fakest”, an understanding of the driving forces to engage with virtual influencers, from a user perspective.

- Samoilenko Vladyslava. Ai and deepface influencers: The challenge of authenticity in the online space. Universal Library of Engineering Technology, 2(2), 2025.

- Lucas Whittaker, Kate Letheren, and Rory Mulcahy. The rise of deepfakes: A conceptual framework and research agenda for marketing. Australasian Marketing Journal, 29(3):204–214, 2021.

- Gupta, B. B., Gaurav, A., Arya, V., & Alhalabi, W. (2024). The evolution of intellectual property rights in metaverse based Industry 4.0 paradigms. International Entrepreneurship and Management Journal, 20(2), 1111-1126.

- Zhang, T., Zhang, Z., Zhao, K., Gupta, B. B., & Arya, V. (2023). A lightweight cross-domain authentication protocol for trusted access to industrial internet. International Journal on Semantic Web and Information Systems (IJSWIS), 19(1), 1-25.

- Jain, D. K., Eyre, Y. G. M., Kumar, A., Gupta, B. B., & Kotecha, K. (2024). Knowledge-based data processing for multilingual natural language analysis. ACM Transactions on Asian and Low-Resource Language Information Processing, 23(5), 1-16.

- Arya, V., Gaurav, A., Gupta, B. B., Hsu, C. H., & Baghban, H. (2022, December). Detection of malicious node in vanets using digital twin. In International Conference on Big Data Intelligence and Computing (pp. 204-212). Singapore: Springer Nature Singapore.

- Sands, S., Ferraro, C., Demsar, V., & Chandler, G. (2022). False idols: Unpacking the opportunities and challenges of falsity in the context of virtual influencers. Business Horizons, 65(6), 777-788.

- Byun, K. J., & Ahn, S. J. (2023). A systematic review of virtual influencers: Similarities and differences between human and virtual influencers in interactive advertising. Journal of Interactive Advertising, 23(4), 293-306.

- Conti, M., Gathani, J., & Tricomi, P. P. (2022). Virtual influencers in online social media. IEEE Communications Magazine, 60(8), 86-91.

Cite As

Kalyan C S N (2025) Synthetic Humans and Virtual Influencers: The AI Behind Digital Celebrities, Insights2Techinfo, pp.1