By: C S Nakul Kalyan, Asia University, Taiwan

Abstract

The emergence of deepfake news anchors, capable of delivering news content with human-like speech and expressions, has been evolving due to the advancements of Artificial Intelligence (AI) in the journalism field. Although these advancements can decrease the production cost, they also raise queries such as public trust and the challenges of credibility. In this study we will go through three methodologies, such as analysis of AI-based anchors in broadcasting, an experimental study on the audience perceptions from the AI-generated news, and the modals for fake news detection using a hybrid deep learning architecture and Explainable AI. Even if the AI generated news results are accurate, people trust human reporters more than AI to reduce the risk of false information. This work provides an insight into the need for transparency, accountability, and safeguards in synthetic media, while also explaining how AI is transforming journalism.

Keywords

AI News Anchors, Synthetic Journalism, Artificial Intelligence (AI), Fake News Detection, Deep Learning.

Introduction

AI has rapidly evolved from supporting the content creation to creating the news and delivering it in a human-like manner. One of the major advancements of AI here is the rise of AI-based news anchors, which is a combination of Natural Language Processing, Machine Learning, and computer vision, where it will replicate human-like presentation with containing multiple languages and various platforms [1]. Even though this technology is efficient and cost-reducing, the concerns that this system raises about the reduction of human presence in journalism and the production of fake news, where it is possible to misuse the technology and spread misinformation [5]. Even though the AI generates the text and news content like humans, people will say the AI-produced content is less accurate and it’s not as trustworthy as the human-authored content [4]. At the same time the spread of misinformation on digital platforms shows the importance of the detection systems. The detection framework models, such as hybrid deep learning approaches, which use CNN-LSTM architectures, and transformer models have the best performance in detecting fake news [2][3]. Explainable AI techniques also provide interpretability to these predictions. To check how the AI is changing the news ecosystem, and to state the necessity of transparency, accountability, and ethical protections, this article takes the ideas from the combination of broadcasting practice and computational detec- tion techniques.

Proposed Methodology

This article has an integrated methodology that combines AI-based news anchoring analysis, audience perception experiments, and fake news detection. Here, every step has been combined into stages, with each stage containing approaches from the 3 methods that have been given below:

Data Collection

AI News Anchoring

To map the deployments of the AI anchors, such as Lisa(Odisha TV), Maya(Big TV), etc [1]. Secondary sources such as industry reports, media coverage, and academic articles have been grouped, and each case has been recorded the characteristics such as platform, language, and launch year.

Audience perception

The real news headlines were taken and cross-checked against Snopes.com, and from here, both the real and fake headlines were taken with their publisher IDs removed, and it is combined with neutral images.

Fake News detection

Here, three datasets with balanced data of real and fake news were used, such as WELFake, FakeNewsNet, and FakeNewsPrediction [3].

Data Pre-Processing

AI News Anchoring

In this case, the attributes were coded systematically, in which the realism (low/medium/high), language support, and the use of AI (yes/no). and the content delivery style has been preprocessed here.

Audience perception

In the audience’s perception, the headlines were classified as ”AI” or ”Human,” depending on the condition. To make sure the uniformity remains, the neutral images were included for consistency.

Fake News detection

The text data will undergo a cleaning process such as lowercasing, removal of punctuation, and duplicates, and it further undergoes the process such as tok- enization, stop-word removal, and lemmatization. Maintaining the transformer- based models (BERT, RoBERTa) requires only tokenization and casing as pre- processing [2][3].

Feature Representation

AI News Anchoring

Here the descriptive features such as Speech synthesis quality, expression mimicry, and cost efficiency and performance has been collected for the comparative analysis.

Audience perception

Here, each headline will be tagged with a condition, such as (AI vs. Human), and veracity, such as (True vs False), will be calculated, and these evaluations will be done both between the subjects.

Fake News detection

The fast text embeddings were used to find the vocabulary terms by capturing their semantic meanings, including the subword information [2]. By using both supervised and unsupervised learning, the textual data is represented in a vector space.

Experimental and Analytical Design

AI News Anchoring

A comparative analysis of the case study was done to find the socioeconomic impact, operational efficiency, and the engagement of the audience with AI anchors.

Audience perception

Experiment 1: Here, the first experiment is done between the subjects where the participants only view the AI-labelled or only human-labeled headlines.

Experiment 2: The second experiment was done between the subjects where the participants evaluated both AI and Human-labelled headlines in a direct comparison of them.

Fake News detection

In the Fake news detection, a hybrid modeling Pipeline was applied, such as:

Traditional ML: Here, the Support Vector Machines (SVM), Random For- est (RF), and logistic Regression were used to analyze the modal [2][3].

Deep Learning: The CNN, LSTM, and GRU Techniques were used to analyze the modal [3].

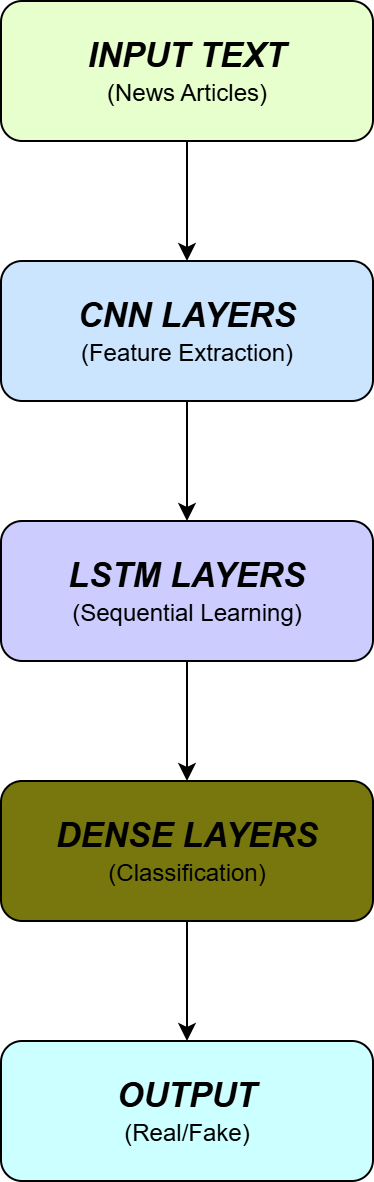

Hybrid CNN-LSTM modal: The hybrid modals were used as a support for the local and sequential dependencies, such as to analyze the modal [2]. The architecture of our hybrid model is illustrated in Figure 1.

Figure 1: Hybrid CNN-LSTM Architecture Diagram

Evaluation Metrics

AI News Anchoring

The outcome that has been produced from AI News anchoring, has been assessed in terms of efficiency, audience engagement, and accessibility.

Audience perception

In the audience perception, the outcomes were produced as:

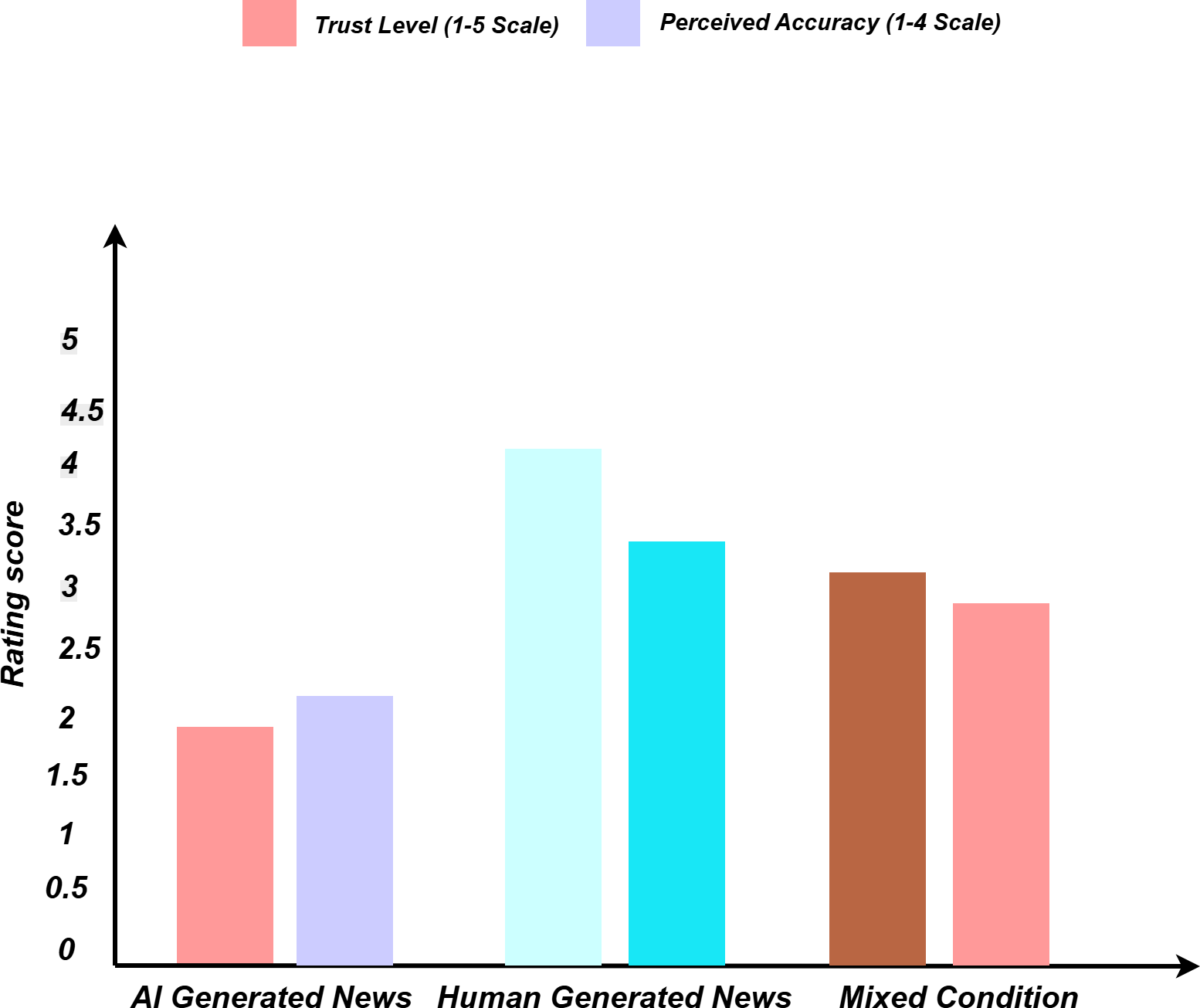

Primary Outcome: The primary outcome contains the perceived accuracy of the headlines, such as in the (1-4 Scale).

Secondary Outcome: The secondary outcome contains the precision of the trust in AI vs human reporters on a (1-5 scale) . The results of the audience perception experiments have been summarised in Table 1,

Table 1: Audience Perceptions Study results

Condition | Sample Size | Perceived Accuracy (1-4 scale) | Trust level (1-5 Sacale) | Credibility Rating | statistical Significance |

AI-labeled news | 247 | 2.3+0.7 | 2.1+0.8 | Low | p ¡ 0.001 |

Human-Labeled news | 253 | 3.4+0.6 | 4.2+0.7 | High | p ¡ 0.001 |

Mixed Condition | 198 | 2.8+0.8 | 3.1+0.9 | Medium | p ¡ 0.05 |

Figure 2 below represents the differences in Trust Levels Between AI and Human-Generated news.

Figure 2: Public Trust in AI vs Human News Reporting

Fake News detection

In this detection Framework, the models are assessed based on the Accuracy, precision, recall, F1-score, and confusion matrix. The CNN-LSTM and Trans- former models has got a 0.97-0.99 F1-score across the datasets according to the benchmark scores [2][3].

Explainability and interpretation

AI News Anchoring

Here, the broader implications, such as the ethical risks of dehumanization, and the cost-benefit analysis, have been analyzed through the review of case examples.

Audience perception

Here, the trust accuracy ratings of news with AI labels have been investigated us- ing mediation analysis. The causal Forest was used to analyze the heterogeneous effects across the demographics, which were given by the audience perception.

Fake News detection

By using the Local Interpretable Modal-Agnostic Explanations (LIME), the false classification drivers at the token level have been found. The clusters in the misclassified news, such as those in the political, medical, and financial domains, have been found using the Latent Dirichlet Allocation (LDA) [3].

Conclusion

For the digital Media ecosystem, the integration of Artificial Intelligence (AI) in journalism through the deepfake news anchors is both innovative and as well as challenging. The existing case studies state their abilities to broadcast continuously in multiple languages at a reasonable cost. The experiment studies show that the public still does not trust the AI-generated news, considering that the news provided by AI is less reliable and less accurate, and the chances of fake news are there compared to human journalists. At the same time, using hybrid deep learning and transfer-based models provides an effective method to identify and interpret fake or misleading news which are backed by explainable AI. These results, which are acquired, demonstrate a dual nature of synthetic journalism where AI will improve the scalability and productivity, and its output is relied on to maintain the ethical responsibility in news production.

References

- Amit M Bhattacharya. A study on ai-based news-anchoring on electronic media. International Research Journal of Economics and Management Stud- ies IRJEMS, 3(5).

- Ehtesham Hashmi, Sule Yildirim Yayilgan, Muhammad Mudassar Yamin, Subhan Ali, and Mohamed Abomhara. Advancing fake news detection: Hy- brid deep learning with fasttext and explainable ai. IEEE Access, 12:44462– 44480, 2024.

- Fiza Gulzar Hussain, Muhammad Wasim, Seemab Hameed, Abdur Rehman, Muhammad Nabeel Asim, and Andreas Dengel. Fake news detection land- scape: Datasets, data modalities, ai approaches, their challenges, and future perspectives. IEEE Access, 2025.

- Chiara Longoni, Andrey Fradkin, Luca Cian, and Gordon Pennycook. News from generative artificial intelligence is believed less. In Proceedings of the 2022 ACM Conference on Fairness, Accountability, and Transparency, pages 97–106, 2022.

- Christopher Whyte. Deepfake news: Ai-enabled disinformation as a multi- level public policy challenge. Journal of cyber policy, 5(2):199–217, 2020.

- Al-Ayyoub, M., AlZu’bi, S., Jararweh, Y., Shehab, M. A., & Gupta, B. B. (2018). Accelerating 3D medical volume segmentation using GPUs. Multimedia Tools and Applications, 77(4), 4939-4958.

- Gupta, S., & Gupta, B. B. (2015, May). PHP-sensor: a prototype method to discover workflow violation and XSS vulnerabilities in PHP web applications. In Proceedings of the 12th ACM international conference on computing frontiers (pp. 1-8).

- Gupta, S., & Gupta, B. B. (2018). XSS-secure as a service for the platforms of online social network-based multimedia web applications in cloud. Multimedia Tools and Applications, 77(4), 4829-4861.

Cite As

Kalyan C S N (2025) AI News Anchors and Synthetic Journalism: When Fake News Gets a Face, Insights2Techinfo, pp.1