By: Praneetha Neelapareddigari, Department of Computer Science & Engineering, Madanapalle Institute of Technology and Science, Angallu (517325), Andhra Pradesh. praneetha867reddy@gmail.com

Abstract

Artificial Intelligence (AI) is increasingly applied to cybersecurity to improve how threats are identified and countered. However, its use raises significant ethical concerns, chiefly because of its impact on individuals. A central challenge is that an AI system may learn biases from its data, leading to unfair or prejudiced security measures. In addition, when an AI system makes security decisions fully automatically, lines of responsibility can become blurred. Finally, although AI is a potent tool for cybersecurity, its application can infringe on privacy, since most of these systems need large-scale data collection to work effectively. This article examines the relationship between artificial intelligence and ethics in cybersecurity and stresses the need to apply AI responsibly.

Keywords: Artificial Intelligence, Cybersecurity, Machine Learning, Ethical Implications

Introduction

AI is now a key driver of change in organizational security, using big data analysis models for threat detection and automated response[1]. As threats become more advanced and harder to counter, AI solutions are among the most effective means of preventing them[2]. Applying AI to cybersecurity improves efficiency, reduces the human errors that often lead to vulnerabilities, and strengthens the ability to anticipate new, previously unknown attack patterns. However, examining AI’s role in cybersecurity also reveals a large set of ethical issues that require further attention[3].

Algorithmic fairness is one of the major ethical issues in AI cybersecurity systems. AI systems, especially those involved in decision-making, are typically trained on whatever data is available. If this data contains biases, including but not limited to the race or gender of the people represented, the AI system can reinforce and even amplify them while appearing to deliver fair and objective results. In cybersecurity, this can produce skewed risk assessments or uneven application of security measures that discriminate against particular groups of people. The opacity of AI decision-making, known as the “black box” problem, makes these biases harder to detect and correct.

The growing reliance on AI to strengthen cybersecurity also raises questions of responsibility and control. As AI systems take over duties previously performed by human security personnel, questions of legal responsibility become complicated, particularly when the AI itself is compromised or hacked, or when the system makes a harmful decision[4]. This shift also raises concerns about removing humans from security-sensitive decisions: as decision-making is offloaded to AI systems, human operators may become complacent or lose the skills needed to intervene when technical issues arise. Taken together, the ethical implications discussed in this paper show that any development of AI in cybersecurity should not only embrace technological advancement but also safeguard fairness, transparency, and accountability.
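One way to keep humans accountable for automated security decisions is to let the model act alone only on its most confident detections, route uncertain cases to an analyst, and log who made each decision. The Python sketch below illustrates that pattern under stated assumptions: `Alert`, `AuditLog`, `route_alert`, and the two thresholds are hypothetical names and values chosen for illustration, not part of any specific product.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Alert:
    source_ip: str
    score: float  # hypothetical model output: estimated probability of malicious activity


@dataclass
class AuditLog:
    entries: list = field(default_factory=list)

    def record(self, alert: Alert, decision: str, decided_by: str) -> None:
        # Keep a timestamped trail of every decision and who (or what) made it.
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "source_ip": alert.source_ip,
            "score": alert.score,
            "decision": decision,
            "decided_by": decided_by,
        })


def route_alert(alert: Alert, log: AuditLog,
                auto_threshold: float = 0.95,
                review_threshold: float = 0.5) -> str:
    """Act automatically only on high-confidence alerts; escalate the rest."""
    if alert.score >= auto_threshold:
        decision, actor = "auto_block", "model"
    elif alert.score >= review_threshold:
        decision, actor = "human_review", "analyst"  # queued for a person to decide
    else:
        decision, actor = "log_only", "model"
    log.record(alert, decision, actor)
    return decision


log = AuditLog()
for alert in [Alert("203.0.113.7", 0.98), Alert("198.51.100.4", 0.70), Alert("192.0.2.10", 0.10)]:
    print(alert.source_ip, route_alert(alert, log))
```

Keeping a human-review band keeps analysts practiced at judging real cases, which speaks to the complacency risk noted above, and the audit trail records whether the model or a person was responsible for each outcome.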

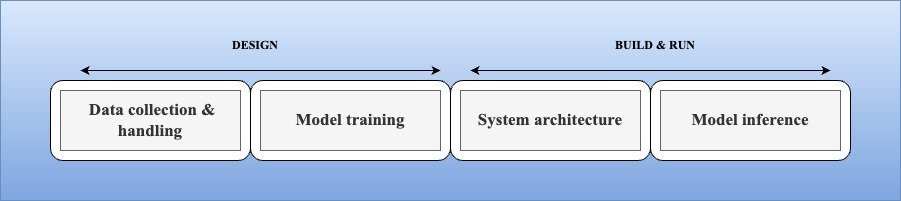

1. The Intersection of AI and Cybersecurity

AI is revolutionizing the way threats are countered in cybersecurity[5]. It has quickly become a core component as cyber threats grow more elaborate and call for countermeasures that go beyond conventional technologies. Using machine learning algorithms and predictive analytics, AI can recognize precise patterns of threat and risk and detect a dangerous situation much faster than a human operator monitoring the system. AI is therefore a vital tool for continuously analyzing large volumes of data, and its adaptability proves valuable against the changing threat landscape in the ongoing effort to safeguard critical data[6]. As cyber threats become more strategic, intense, and frequent, using AI to strengthen an organization’s cybersecurity is not just an advantage but a necessity for protecting organizational assets in today’s dynamic digital environment[7].
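As a deliberately simple illustration of pattern-based detection, the sketch below flags hours whose failed-login count sits far above a historical baseline, using a z-score. This is a statistical baseline rather than the machine-learning models the paragraph describes, and the function name, threshold, and counts are hypothetical.

```python
from statistics import mean, stdev


def zscore_alerts(baseline, observations, threshold=3.0):
    """Return (index, value) pairs whose z-score against the baseline exceeds threshold.

    Only unusually high values are flagged, since spikes in failed logins are the concern here.
    """
    if len(baseline) < 2:
        raise ValueError("baseline needs at least two observations")
    mu = mean(baseline)
    sigma = stdev(baseline)  # sample standard deviation
    alerts = []
    for index, value in enumerate(observations):
        if sigma == 0:
            is_anomalous = value > mu  # flat baseline: any increase stands out
        else:
            is_anomalous = (value - mu) / sigma > threshold
        if is_anomalous:
            alerts.append((index, value))
    return alerts


# Hypothetical failed-login counts per hour for one account.
baseline = [4, 6, 5, 7, 5, 6, 4, 5, 6, 5]
observations = [5, 6, 48, 7]
print(zscore_alerts(baseline, observations))
```

Production systems typically learn richer patterns across many features, but the principle is the same: model normal behaviour, then surface deviations faster than a human scanning logs could.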

2. Privacy Concerns and Data Handling

Privacy and data handling are central ethical issues in integrating AI into cybersecurity[8]. AI systems are usually trained on huge datasets, which raises questions about how the data is obtained, stored, and used. One key ethical consideration is the data subject’s right to privacy, which may be violated when personal information is collected. In addition, AI’s surveillance capabilities increase the likelihood that it will be misused for purposes other than security, allowing powers to be abused and citizens’ liberties to be compromised. Balancing the power of AI to strengthen security against individuals’ rights over their personal information is therefore a delicate matter that requires clear rules and strict policies to prevent AI from overreaching and eroding people’s privacy.
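Data minimization and pseudonymization are common ways to reduce the privacy exposure this paragraph describes. The sketch below assumes a hypothetical log schema (`user_email`, `full_name`, `event_type`, `bytes_sent`, `hour`) and a hypothetical allow-list of fields; it drops fields a detection model does not need and replaces the user identifier with a keyed HMAC-SHA256 digest using Python’s standard `hmac` and `hashlib` modules.

```python
import hashlib
import hmac
import secrets

# Hypothetical allow-list: the only fields the detection model needs.
ALLOWED_FIELDS = {"event_type", "bytes_sent", "hour"}


def pseudonymize(identifier: str, key: bytes) -> str:
    """Replace a direct identifier with a keyed HMAC-SHA256 digest (hex string)."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record: dict, key: bytes) -> dict:
    """Drop fields outside the allow-list and swap the email for a pseudonymous token."""
    cleaned = {name: value for name, value in record.items() if name in ALLOWED_FIELDS}
    cleaned["user_token"] = pseudonymize(record["user_email"], key)
    return cleaned


# In practice the key would live in a secrets manager, separate from the data.
key = secrets.token_bytes(32)
raw_event = {
    "user_email": "alice@example.com",
    "full_name": "Alice Example",
    "event_type": "login_failed",
    "bytes_sent": 0,
    "hour": 3,
}
print(minimize_record(raw_event, key))
```

Because the same key always yields the same token, events from one user can still be correlated for detection without storing the email address itself. Pseudonymized data generally still counts as personal data under the GDPR, however, so this reduces rather than removes privacy risk, and the key needs the same protection as the raw data.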

3. Bias and Discrimination in AI Algorithms

Bias and discrimination in AI algorithms pose severe ethical threats, including the inequitable treatment of people in cybersecurity matters[9]. AI systems trained on biased data reinforce those biases and can even widen inequality, for example by rating threats from certain individuals higher or lower, or by applying unequal security measures to individuals or groups based on the system’s assessment. For instance, a cybersecurity tool with built-in prejudice may skew its detection process and label specific demographic groups as high risk, subjecting them to extra scrutiny or unfair treatment. Such issues raise questions of responsibility and accountability for AI: how to identify bias patterns and how to explain the decisions AI systems make. Without such measures, AI applications in cybersecurity may entrench prejudice and erode people’s trust in digital protection systems.
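Identifying bias patterns can start with a simple audit: compare how often a detector wrongly flags benign users in each group. The sketch below computes per-group false positive rates and the ratio of the lowest to the highest rate. The audit records, group labels, and the 0.8 review threshold (borrowed from the “four-fifths” heuristic used in US employment-selection guidance) are all assumptions for illustration.

```python
from collections import defaultdict


def false_positive_rates(records):
    """records: iterable of (group, flagged, actually_malicious) tuples.

    Returns, per group, the share of benign users the detector flagged.
    """
    benign = defaultdict(int)
    wrongly_flagged = defaultdict(int)
    for group, flagged, malicious in records:
        if not malicious:
            benign[group] += 1
            wrongly_flagged[group] += int(flagged)
    return {group: wrongly_flagged[group] / benign[group] for group in benign}


def disparity_ratio(rates):
    """Lowest rate divided by highest; 1.0 means all groups are treated alike."""
    highest = max(rates.values())
    return 1.0 if highest == 0 else min(rates.values()) / highest


# Hypothetical audit sample: (group, flagged by detector, confirmed malicious).
audit = (
    [("A", False, False)] * 9 + [("A", True, False)] * 1 + [("A", True, True)] * 2
    + [("B", False, False)] * 6 + [("B", True, False)] * 4 + [("B", True, True)] * 2
)
rates = false_positive_rates(audit)
ratio = disparity_ratio(rates)
print(rates, ratio)
if ratio < 0.8:  # hypothetical review threshold, borrowed from the four-fifths heuristic
    print("Potential disparity: review the model and its training data")
```

In this sample, benign users in group B are wrongly flagged four times as often as those in group A, giving a ratio of 0.25, which would warrant investigation. A single metric never proves or disproves fairness, but routine checks like this make skewed behaviour visible instead of leaving it inside the black box.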

4. Security of AI Systems Themselves

A further concern is the security of AI systems themselves: the very systems intended to protect networks and applications from cyber threats may become targets of attack. AI systems have inherent weaknesses that stem from how they are built; for instance, adversarial attacks, in which inputs are deliberately manipulated to deceive the model, can undermine the effectiveness of AI-based security. If adversaries exploit these weaknesses, the very tools meant to defend digital resources may be compromised[10]. The ethical issues in safeguarding AI products centre on ensuring the robustness, reliability, and integrity of these systems. This requires a broad effort spanning testing, monitoring, and design measures that protect an AI system from adversarial manipulation. Keeping AI shielded from cyber threats protects not only the technology itself but also the credibility of the overall security posture.
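The adversarial attacks described above can be demonstrated on a toy model. The sketch below assumes a hypothetical logistic-regression detector with made-up weights over three normalized features and applies a step in the style of the fast gradient sign method (FGSM): for a linear model the gradient of the score with respect to the input has the same sign as each weight, so nudging every feature by a small `epsilon` against that sign lowers the malicious score.

```python
import math

# Hypothetical toy detector: logistic regression over three features scaled to [0, 1]
# (for example request rate, payload entropy, failed-authentication ratio).
WEIGHTS = [2.0, 1.5, 3.0]
BIAS = -3.0


def malicious_probability(x):
    """Logistic score: values above 0.5 are treated as malicious."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def evade(x, epsilon):
    """FGSM-style step for a linear model.

    The gradient of the score with respect to x has the sign of each weight, so
    stepping against that sign lowers the score; features are clipped to [0, 1].
    """
    return [min(1.0, max(0.0, xi - epsilon * math.copysign(1.0, w)))
            for xi, w in zip(x, WEIGHTS)]


sample = [0.6, 0.5, 0.5]
adversarial = evade(sample, epsilon=0.2)
print(round(malicious_probability(sample), 2), round(malicious_probability(adversarial), 2))
```

A shift of at most 0.2 per feature moves the score from about 0.61 to about 0.30, flipping the verdict. Running probes like this against one’s own models is one practical form of the testing this paragraph calls for.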

5. Balance of Security with Ethical Principles

It is important to ensure that ethical frameworks remain intact in the use of AI so that advances in cybersecurity are not achieved at the expense of people’s rights. To apply AI ethically in cybersecurity, three objectives must be met while effectively combating cyber threats: protecting users’ privacy, minimizing bias, and preserving human control. Striking this balance remains challenging, as illustrated by AI-based surveillance technologies, where monitoring large populations heightens concern for citizens’ privacy. In other cases, automated decision-making in cybersecurity has produced injustice, for instance through biased algorithms that isolate people or treat them unfairly based on algorithmic output alone[11]. These examples show the need to develop ethical norms that protect human rights, serve the common good, and guard against unethical uses of AI in cybersecurity.

6. Regulations and Ethical Standards for AI in Cybersecurity

Rules and standards are needed to govern the use of AI in cybersecurity and to ensure that these powerful tools are used in the best interests of society and in line with international human rights[12]. As AI advances, there is growing recognition of the need for effective guidelines governing its appropriate use in cybersecurity. These guidelines should address key issues such as data protection, bias reduction, transparency, and accountability, and should set out recommended best practices for developers and organizations using AI. Current approaches include the EU GDPR and the EU AI Act, which set regulatory standards for AI that emphasize individuals’ rights and requirements for the explainability of AI decisions. In addition, industry frameworks such as the NIST AI Risk Management Framework address how to ensure secure, trustworthy AI that complies with ethical standards. However, because AI technology advances rapidly, regulations must be continually improved and extended to address new ethical problems, and international guidelines for the responsible use of AI in cybersecurity still need to be developed.

7. Future Trends

Further development of AI technology and its impact on cybersecurity will raise new ethical issues that need to be anticipated and addressed in advance. Emerging applications such as autonomous threat response systems and highly advanced predictive analytics hold great promise, but they also raise serious ethical questions. For example, wider use of AI could make decision-making fully automated, which the public may find uncomfortable because of concerns about who is responsible for the decisions made and their consequences. Moreover, as AI systems become part of international security networks, the risks of bias, invasion of civilians’ privacy, and misuse of AI as a tool for surveillance and repression increase significantly.

Conclusion

In summary, AI technology offers wide opportunities in cybersecurity to improve the efficiency of protection and the resilience of digital systems against existing risks. However, these advances bring ethical implications that cannot be overlooked. Alongside the benefits of AI in cybersecurity come many ethical questions, ranging from algorithmic discrimination and privacy violations to threats against the AI systems themselves. As cyber threats continue to evolve, AI will remain an integral component of global defences, but ethical frameworks and legal requirements must be clearly stated and strictly followed. These steps will help prevent AI from being misused in ways that violate human rights, treat people unfairly, or lack accountability. By anticipating the ethical challenges described above, it is possible to use AI for cybersecurity while avoiding the exploitation of these technologies and upholding the principles of justice and equality that must underpin the digital environment.

References

- D. Aoyama, K. Yonemura, and A. Shiraki, “Effective Methods in Cybersecurity Education for Beginners,” in 2024 12th International Conference on Information and Education Technology (ICIET), Mar. 2024, pp. 372–375. doi: 10.1109/ICIET60671.2024.10542828.

- P. Pappachan, Sreerakuvandana, and M. Rahaman, “Conceptualising the Role of Intellectual Property and Ethical Behaviour in Artificial Intelligence,” in Handbook of Research on AI and ML for Intelligent Machines and Systems, IGI Global, 2024, pp. 1–26. doi: 10.4018/978-1-6684-9999-3.ch001.

- Y. Hu et al., “Artificial Intelligence Security: Threats and Countermeasures,” ACM Comput. Surv., vol. 55, no. 1, pp. 20:1–20:36, Nov. 2021, doi: 10.1145/3487890.

- I. H. Sarker, M. H. Furhad, and R. Nowrozy, “AI-Driven Cybersecurity: An Overview, Security Intelligence Modeling and Research Directions,” SN Comput. Sci., vol. 2, no. 3, p. 173, Mar. 2021, doi: 10.1007/s42979-021-00557-0.

- A. D. Sontan and S. V. Samuel, “The intersection of Artificial Intelligence and cybersecurity: Challenges and opportunities,” World J. Adv. Res. Rev., vol. 21, no. 2, Art. no. 2, 2024, doi: 10.30574/wjarr.2024.21.2.0607.

- M. Ramzan, “Mindful Machines: Navigating the Intersection of AI, ML, and Cybersecurity,” J. Environ. Sci. Technol., vol. 2, no. 2, Art. no. 2, Dec. 2023.

- A. Sokolov, “Defending the Digital Realm: The Intersection of Cybersecurity and AI,” MZ Comput. J., vol. 4, no. 2, Art. no. 2, Nov. 2023, Accessed: Aug. 09, 2024. [Online]. Available: https://mzjournal.com/index.php/MZCJ/article/view/104

- V. Wylde et al., “Cybersecurity, Data Privacy and Blockchain: A Review,” SN Comput. Sci., vol. 3, no. 2, p. 127, Jan. 2022, doi: 10.1007/s42979-022-01020-4.

- E. Loza de Siles, “Artificial Intelligence Bias and Discrimination: Will We Pull the Arc of the Moral Universe towards Justice?,” J. Int. Comp. Law, vol. 8, p. 513, 2021.

- N. Moustafa, “A new distributed architecture for evaluating AI-based security systems at the edge: Network TON_IoT datasets,” Sustain. Cities Soc., vol. 72, p. 102994, Sep. 2021, doi: 10.1016/j.scs.2021.102994.

- B. D. Alfia, A. Asroni, S. Riyadi, and M. Rahaman, “Development of Desktop-Based Employee Payroll: A Case Study on PT. Bio Pilar Utama,” Emerg. Inf. Sci. Technol., vol. 4, no. 2, Art. no. 2, Dec. 2023, doi: 10.18196/eist.v4i2.20732.

- D. T. Eze, ECCWS 2021 20th European Conference on Cyber Warfare and Security. Academic Conferences Inter Ltd, 2021.

- B. B. Gupta and S. Narayan, “A key-based mutual authentication framework for mobile contactless payment system using authentication server,” J. Organ. End User Comput., vol. 33, no. 2, pp. 1–16, 2021.

- V. Vajrobol, B. B. Gupta, and A. Gaurav, “Mutual information based logistic regression for phishing URL detection,” Cyber Secur. Appl., vol. 2, p. 100044, 2024.

Cite As

Neelapareddigari P. (2024) Ethical Implications of AI in Cybersecurity, Insights2Techinfo, pp.1