By: K. Sai Spoorthi, Student, Department of Computer Science and Engineering, Madanapalle Institute of Technology and Science, Angallu, Andhra Pradesh 517325.

Abstract

Generative AI has advanced rapidly and been integrated into many industries, raising fundamental questions and concerns about data privacy. This paper examines the relationship between generative AI applications and privacy, with particular attention to data acquisition processes. Scholars have found that current legal and ethical frameworks (the GDPR and sectoral legislation) do not adequately address data ownership and users' rights in the context of generative AI. The paper therefore calls for more comprehensive legal and ethical frameworks that keep pace with innovation without compromising the right to privacy. It extends this argument, given how unpredictably the field is evolving, to the view that policy in this area cannot and should not be formulated without the input of technologists: AI must be developed and deployed as securely and ethically as possible if the public is to keep trusting developments in the discipline.

Key words: Generative AI, Legal Framework, Privacy by design, Data Privacy, Innovation, GDPR, Ethical AI

Introduction

In recent years, fast-growing AI technologies have brought about changes across many fields. These developments, particularly in generative AI, have intensified the debate over innovation and the use of personal data. Because these sophisticated tools are used both to improve operational results and to support personalised service delivery, concerns about privacy and data use have grown sharply. The core problem is that generative AI models are trained on large datasets that very likely include personal data collected without the data subjects' knowledge, from public and even obscure web sources. Reconciling these concerns is crucial, as corporations, governments, and individual users alike must ask: how can these technologies be used without violating privacy? It is therefore necessary to examine both the opportunities generative AI offers and the data-privacy risks that could become its major drawback.[1]

Generative AI and its Applications

Recent developments in generative AI have shown that the technology can transform more than one field. Using improved deep learning algorithms, generative AI can create realistic new content, including text, images, music, and code. Adoption has been especially notable in entertainment, healthcare, and marketing, where organisations have automated processes and improved both customer service and content tailored to specific audiences. For instance, a new film's scriptwriting and special effects may now rely on generative AI, and synthetic healthcare data produced with it may support better diagnosis. Because these applications remain largely uncharted and continue to evolve and expand, it is all the more important to understand where they intersect with data privacy and protection.

The Mechanisms of Data Collection in Generative AI

Data accumulation is central to how generative AI functions, and it is also the source of major privacy concerns.[2] These systems typically ingest vast amounts of data from disparate sources, including public websites, social media platforms, and commercial and research databases, so the origin of the data and the ethics of collecting it require careful scrutiny. Techniques such as generative adversarial networks (GANs) and large language models (LLMs) depend on a steady supply of large, diverse training inputs, which in effect creates a loophole for the over-collection or misuse of sensitive data. Moreover, as recent discussions on equality in the age of AI rightly emphasise, a substantial problem lies in evaluating the perceived intelligence of these AI-based architectures, a notion that itself rests on the data fed to the models. Finally, how to balance the opportunities that data create against the rights of individuals, who may suffer prejudice as a result of generative AI outputs, remains open for further debate.
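One common way to limit the over-collection risk described above is to screen scraped text before it enters a training corpus. The Python snippet below is a minimal sketch of that idea, not a method taken from this paper or its sources: the function names `redact_pii` and `build_corpus` and both regular expressions are hypothetical and deliberately simplistic, catching only email addresses and long digit runs that look like phone numbers.

```python
import re

# Hypothetical, deliberately simple patterns. Real PII detection needs far
# broader coverage (names, addresses, ID numbers) and is often model-assisted.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s-]{8,}\d")


def redact_pii(text: str) -> str:
    """Replace email addresses and phone-like digit runs with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)  # emails first, so digits inside them are gone
    text = PHONE_RE.sub("[PHONE]", text)
    return text


def build_corpus(records: list[str]) -> list[str]:
    """Redact each scraped record before admitting it to the training set."""
    return [redact_pii(r) for r in records]


if __name__ == "__main__":
    sample = ["Contact me at jane.doe@example.com or +91 98765 43210."]
    print(build_corpus(sample))  # ['Contact me at [EMAIL] or [PHONE].']
```

Pattern-based redaction of this kind misses names, addresses, and indirect identifiers, so it illustrates the principle of data minimisation rather than offering a complete safeguard.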

Legal and Ethical Frameworks Surrounding Data Privacy

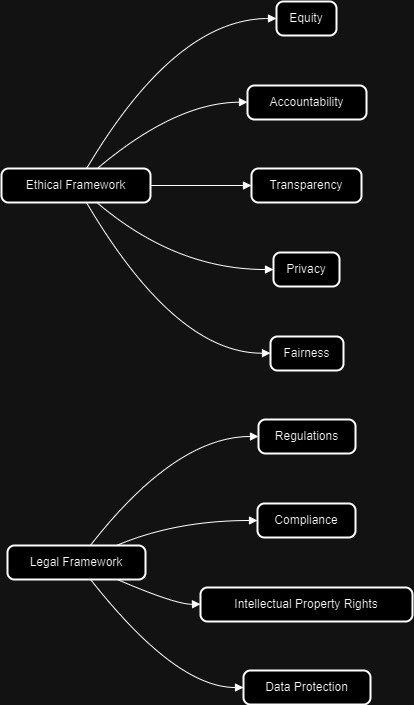

As technology becomes ever more prominent in daily life, the legal and ethical tension between data privacy and generative models has sharpened, warranting a closer look at existing legal obligations. As shown in Fig. 1, the US framework for protecting Americans' privacy rights consists predominantly of sector-specific statutes, such as the Health Insurance Portability and Accountability Act (HIPAA), which protects health data, and the Children's Online Privacy Protection Act (COPPA), which governs online data collected from children. At the same time, ethical concerns about consent, accountability, and bias in AI-generated material have grown, pointing to the need for a coherent ethical framework that goes beyond current legislation.[3] As more organisations adopt generative AI, they must do so amid incoherent legal regulation of the technology and growing public debate over data ownership and user rights, which together create demand for legal and ethical reform.

Current Regulations and Their Effectiveness in Addressing Generative AI Challenges

It has been observed that the current regulatory framework is not enough to resolve the problems generative AI raises, and a stronger framework is needed. Existing legal instruments such as the General Data Protection Regulation (GDPR), although distinctive in their focus on data protection, struggle to keep pace with advances in artificial intelligence. They do not yet define how AI-generated content affects the intellectual property rights and consent of the users and developers involved. Their vagueness is a further problem: provisions are enforced only sporadically, and practitioners can adopt inconsistent, case-by-case compliance that undermines the very principles the regulations are meant to uphold. In addition, AI development is distributed and fragmented, and many generative models are accessible regardless of geography, which makes it harder still for regulators to keep new models within legal boundaries. These deficiencies point to the need for new international cooperation to develop as comprehensive a set of requirements as possible for addressing the challenges of generative AI.[2]

Future Directions for Balancing Innovation and Data Privacy in Generative AI

As more fields turn to generative AI to shape their operations and outcomes, a more sophisticated set of rules is needed, one that protects data while still encouraging the advancement of generative AI. One promising direction is stronger data-management rules that require firms to disclose how they use data and to follow the highest standards. This could include a privacy-by-design approach, ensuring that models use anonymised data to protect individuals' identities while keeping the use of AI both ethical and efficient.[4] Collaboration between IT developers and policymakers is also reflected in policies that weigh the competitive advantages of generative AI against the shortcomings that compromise people's privacy. Through effective, secure, and ethical implementation of artificial intelligence, stakeholders can stay on the road map to public trust, which may be vital to the progress of such an innovative sector. Such a symbiotic approach would address the privacy issue and also open the way for safe generative AI innovation.[5]
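Privacy by design is often put into practice at the data-preparation stage. The Python sketch below, written under stated assumptions, combines data minimisation (keeping only an allow-listed set of fields) with pseudonymisation (replacing a direct identifier with a keyed HMAC-SHA256 token). The key, the field names, and the helper functions are all hypothetical. Note that pseudonymisation is weaker than the anonymisation the paragraph refers to: under the GDPR, pseudonymised data still counts as personal data, because whoever holds the key can re-link it to individuals.

```python
import hashlib
import hmac

# Hypothetical secret key. In practice it would be stored apart from the data
# (e.g. in a key-management service) so the dataset alone cannot be re-linked.
SECRET_KEY = b"replace-with-a-securely-stored-key"

# Hypothetical allow-list: only the fields a model actually needs are kept.
ALLOWED_FIELDS = {"age_band", "diagnosis_code"}


def pseudonymize_id(user_id: str, key: bytes = SECRET_KEY) -> str:
    """Map a direct identifier to a stable keyed token (HMAC-SHA256, hex)."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record: dict) -> dict:
    """Drop fields outside the allow-list and replace the ID with a token."""
    out = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    out["subject_token"] = pseudonymize_id(record["user_id"])
    return out


if __name__ == "__main__":
    raw = {"user_id": "patient-001", "name": "A. Kumar",
           "age_band": "30-39", "diagnosis_code": "E11"}
    print(minimize_record(raw))  # name and user_id removed, token added
```

Keeping the key outside the dataset is the design choice that matters here: anyone holding the key can re-link tokens to known identifiers simply by recomputing them.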

Conclusion

An analysis of the potential threats generative AI poses to data privacy shows that the topic is highly multifaceted and requires a careful approach. Significant barriers arise as organisations seek to incorporate these sophisticated tools, particularly with respect to ethics and legal frameworks. This research also finds that educational institutions are in a race against time to set appropriate guidelines for the issues generative AI raises, notably academic dishonesty and appropriate use. It further highlights the need to build trust and transparency so as to change students' perceptions of AI tools while preserving academic integrity. Finally, it is essential to engage a broad spectrum of stakeholders, including academics, industry, and regulators. Since generative AI can be actively regulated, and AI-assisted algorithms can provide decentralised access to the market, what remains is to address data privacy responsibly while planning the further development of artificial intelligence.

References

- Y. Chen and P. Esmaeilzadeh, “Generative AI in Medical Practice: In-Depth Exploration of Privacy and Security Challenges,” J. Med. Internet Res., vol. 26, no. 1, p. e53008, Mar. 2024, doi: 10.2196/53008.

- Y. Shi, “Study on security risks and legal regulations of generative artificial intelligence,” Sci. Law J., vol. 2, no. 11, pp. 17–23, Nov. 2023, doi: 10.23977/law.2023.021104.

- M. Rahaman, C.-Y. Lin, and M. Moslehpour, “SAPD: Secure Authentication Protocol Development for Smart Healthcare Management Using IoT,” Oct. 2023, pp. 1014–1018. doi: 10.1109/GCCE59613.2023.10315475.

- P. Pappachan, Sreerakuvandana, and M. Rahaman, “Conceptualising the Role of Intellectual Property and Ethical Behaviour in Artificial Intelligence,” in Handbook of Research on AI and ML for Intelligent Machines and Systems, IGI Global, 2024, pp. 1–26. doi: 10.4018/978-1-6684-9999-3.ch001.

- P. Hacker, A. Engel, and M. Mauer, “Regulating ChatGPT and other Large Generative AI Models,” in Proceedings of the 2023 ACM Conference on Fairness, Accountability, and Transparency, in FAccT ’23. New York, NY, USA: Association for Computing Machinery, Jun. 2023, pp. 1112–1123. doi: 10.1145/3593013.3594067.

- A. Sedik, Y. Maleh, G. M. El Banby, A. A. Khalaf, F. E. Abd El-Samie, B. B. Gupta, … and A. A. Abd El-Latif, “AI-enabled digital forgery analysis and crucial interactions monitoring in smart communities,” Technological Forecasting and Social Change, vol. 177, p. 121555, 2022.

- L. M. Mourelle, Robotics and AI for Cybersecurity and Critical Infrastructure in Smart Cities, N. Nedjah, A. A. Abd El-Latif, and B. B. Gupta, Eds. Springer, 2022.

- T. Keesari, M. K. Goyal, B. Gupta, N. Kumar, A. Roy, U. K. Sinha, … and R. K. Goyal, “Big data and environmental sustainability based integrated framework for isotope hydrology applications in India,” Environmental Technology & Innovation, vol. 24, p. 101889, 2021.

Cite As

Spoorthi K.S. (2024) Generative AI and Data Privacy Concerns, Insights2Techinfo, pp.1