By: Rekarius, Asia University, Taiwan

Abstract

Voice phishing, commonly known as vishing, is a technique where fraudsters exploit telecommunication channels. This paper discusses two real-time approaches for detecting voice phishing: a machine learning-based approach and a BERT-based approach.

Keywords Real Time Detection, Voice Phishing, Machine Learning, BERT

Introduction

Voice phishing, commonly known as vishing, is a technique where fraudsters exploit telecommunication channels[3] to deceive individuals into divulging sensitive information, such as bank credentials, passwords, and personal identification details. To prevent damage through vishing, several scholars have made notable efforts to use computational approaches to detect such events [5]. This researcher [5] introduced a vishing detection system, which includes several natural language processing (NLP) techniques that consider white and blacklists. As another example, [1] proposed a protection platform by combining several notable features, such as user behavior profiles. This paper will summarise the methods used to detect voice phishing in real time.

Method

Machine Learning Approaches

Dataset

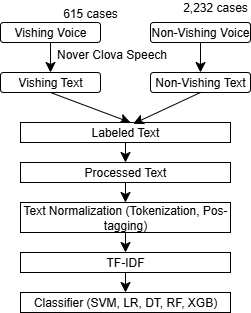

They collected a vishing dataset, which was organized as vishing-labeled (47 h) and non-vishing-labeled speech (500 h), from the transcripts. We employed 615 vishing cases after eliminating personal information and annotating the collected datasets for each vishing case.

Non-vishing labeled cases were collected by the NationalInstitute of Korean Language. The cases covered approximately 500 h of daily conversation by 2739 speakers. In each case, the conversation had a duration of approximately 15 min and was on one of 15 topics (sports, travel, weather, company/school, food, broadcasting, movies, health, gifts, goals, dating, pets, part-time jobs, personality, and family). After removing any personal information, we employed 2232 non-vishing cases organized using paired speech and text data. Figure 1 shows the workflow of data processing and classification

Preprocessing

They converted the vishing-label cases into text using the Google Cloud Speech- to-Text API and Naver Cloud Platform Clova Speech API. The results were then compared to validate whether the converted texts matched the original speech cases. They selected the results of the Naver Cloud Platform Clova Speech API, which outperformed the Google Cloud Speech-to-Text API and was suitable for the Korean language. We employed a dataset of 2847 cases (full dataset: 615 vishing and 2,232 non-vishing). Table 3 summarizes the collected and applied datasets.

In addition to the full dataset, we created a smaller dataset by excluding cases with more than 5000 words, called the Top-5000 dataset, to address the imbalance in the length distributions between vishing and nonvishing cases. Each word was then tagged by dividing each text into morpheme units. We used open Korean text (OKT) from Korean natural language processing in Python (KoNLPy), and Khaiii (Kakao hangul analyzer), which are widely applied in the Korean NLP process. The nouns, adjectives, and verbs that remained were employed in the analysis. They then used the TF-IDF vectorizer for feature extraction. Classification experiments were conducted after feature extraction. In addition to the features, the paired POS tags for each word were analyzed to better understand the context of the text.

Machine Learning Models

The full dataset and Top-5000 dataset were randomly divided at a ratio of 8:2 as the training and test datasets, respectively. Five machine learning models, namely, SVM, LR, RF, DT, and extreme gradient boosting (XGB) models, were used in this study. Table 1 presents the number of cases employed in the training and testing.

Table 1: Dataset for training and testing

Vishing | Nonvishing | Vishing | Nonvishing | |

Average per case (voice) | 275.46 s | 848.12 s | 269.22 s | 788.22 s |

Average per case (text) | 1691 words | 4864 words | 1030 words | 4021 words |

Training cases | 507 cases | 1770 cases | 465 cases | 881 cases |

Testing cases | 108 cases | 462 cases | 104 cases | 233 cases |

BERT Approaches

The BERT approach is summarised from this research [2].

VOSK Speech-to-Text Model

Vosk is an open-source speech recognition toolkit that efficiently converts spoken language into text [2]. The Vosk model offers several advantages that make it suitable for real-world applications. It does not require an active internet connection, allowing offline speech recognition, which is crucial for privacy-focused applications.

Vosk’s architecture builds upon Kaldi’s hybrid system, which combines deep neural networks with hidden Markov models (HMM). The system is primarily composed of two main components:

-

- Acoustic Model: The acoustic model maps audio features (such as MFCC – Mel- Frequency Cepstral Coefficients) to phonetic units, ensuring robust sound- to-text conversion. Deep neural networks (DNNs) process audio waveforms and predict probabilities of phonemes.

- Language Model: The language model analyzes the sequence of phonemes and pre- dicts meaningful words and sentences. It incorporates statistical or n-gram models to ensure the output text aligns with natural language grammar and context.

The speech-to-text process in Vosk involves several steps:

- Audio Input: The input audio signal is captured and preprocessed by segmenting it into frames and extracting relevant features (MFCCs).

- Acoustic Model Processing: The acoustic model converts these features into probabilistic phoneme predictions using deep neural networks.

- Decoding with Language Model: The predicted phonemes are passed through the language model, which decodes the phonetic sequences into mean- ingful text.

- Final Output: The system generates the transcribed text, optimizing ac- curacy through alignment algorithms like WFST (Weighted Finite State Trans- ducers)

The Vosk model is integrated into the system to transcribe incoming phone call audio into text in real time. The accurate English transcription serves as input for the BERT model, which further analyzes the text to determine the probability of phishing.

BERT

BERT (Bidirectional Encoder Representations from Transformers) is a transformer- based natural language processing (NLP) model developed by Google. It is pre-trained using large corpora of text data and is designed to understand the contextual meaning of words in a sentence.

BERT is fine-tuned on the phishing dataset to differentiate between legiti- mate conversations and phishing attempts. This fine-tuning process customizes the pre-trained model to accurately detect fraudulent intent within transcribed speech. BERT’s classification head outputs a scam probability score, enabling the model to determine how likely a given text contains phishing content.

The architecture of BERT:

Input Representation

Input Representation: BERT transforms input text into a structured format suitable for the transformer model. It utilizes a combination of token embed- dings, segment embeddings, and positional embeddings.

Multi-Layer Transformer Encoders

Multi-Layer Transformer Encoders: The core of BERT’s architecture con- sists of Multi- Layer Transformer Encoders [10] stacked on top of each other. These layers are responsible for processing the input embeddings and capturing context from the entire sequence.

Transformer encoder’s two key components:

Multi-Head Self-Attention Mechanism This mechanism allows BERT to at- tend to all tokens in the input simultaneously, regardless of their position.

Feed-Forward Neural Network

Following the attention mechanism, the output is passed through a position- wise feed-forward neural network. This network applies non-linear transformations to each token’s embedding through two fully connected layers, incorporating a ReLU activation function between them.

Bidirectional Contextual Representation

This approach enables BERT to generate deep contextual representations, where each word’s embedding is influenced by its surrounding words on both sides. This is crucial for detecting subtle cues in phishing text, where context often reveals fraudulent intent.

Classification Layer

For scam detection, a classification layer is added on top of the pre-trained BERT model. The [CLS] token, placed at the start of each input sequence, serves as a comprehensive representation of the entire sentence. The final hidden state of the [CLS] token is passed through a dense layer with a softmax activation function to produce the classification output.

Pre-training and Fine-tuning

Pre-training: BERT is first trained on vast amounts of unlabeled text data (such as Wikipedia and BookCorpus) using two key objectives: Masked Lan- guage Model (MLM) and Next Sentence Prediction (NSP). Before being fed into BERT, 15of the words in each sequence are replaced with a [MASK] to- ken. The objective of the model is to predict the original words that have been masked, leveraging the context provided by the remaining unmasked words in the sequence.

Fine-tuning: For phishing detection, the pre-trained BERT model is fine- tuned on a labeled dataset of phishing transcripts and legitimate call texts. The model’s weights are adjusted to optimize for the binary classification task, allowing it to learn phishing specific language patterns and intent.

Conclusion

The fine-tuned BERT model [2] demonstrated high performance on the test set, achieving an accuracy of 94.3%. The model achieved precision and recall scores of 92.8% and 95.1%, respectively, demonstrating its proficiency in correctly detecting phishing cases while minimizing false positives and negatives. The F1-Score, which combines precision and recall into a single metric, was 94.0%, reinforcing the model’s reliability in phishing detection. By leveraging a BERT- based model, the system effectively analyzes call transcripts to identify potential phishing threats with high accuracy. Machine Learning Approach [4], Almost all classifiers achieved 100% ac- curacy when using the full dataset. In particular, the SVM classifier showed the highest performance in both morpheme analyses, OKT and Khaiii, with 100% accuracy. The LR classifier reported the fastest training time of 2.223 s, followed by the SVM models. The SVM classifier again achieved the highest ac- curacy(100%) with both morpheme analyses when using the Top-5000 dataset. Other machine learning classifiers, such as RF and XGB, also showed 100% accuracy, unlike the full dataset. In addition, the Top-5000 dataset required a relatively shorter training time than the full dataset. The Khaiii tagging LR classifier was the fastest with a training time of 0.244 s. The detection time was presented. The fastest case was the LR method with OKT tagging applied to the Top-5000 dataset: a speed of 0.03 ms for the testing of each case was achieved.

References

- Phoebe A Barraclough, M Alamgir Hossain, MA Tahir, Graham Sexton, and Nauman Aslam. Intelligent phishing detection and protection scheme for online transactions. Expert systems with applications, 40(11):4697–4706, 2013.

- Alba Benny, Ann Maria Saji, Chemmanoor Josephine Joseph, PB Christina, and M Anly Antony. Real-time voice phishing detection using bert. In International Conference on Artificial Intelligence and Smart Energy, pages 410–426. Springer, 2025.

- Devendra Sambhaji Hapase and Lalit Vasantrao Patil. Telecommunication fraud resilient framework for efficient and accurate detection of sms phishing using artificial intelligence techniques. Multimedia Tools and Applications, 83(41):89111–89133, 2024.

- Minyoung Lee and Eunil Park. Real-time korean voice phishing detection based on machine learning approaches. Journal of Ambient Intelligence and Humanized Computing, 14(7):8173–8184, 2023.

- Manh-Hung Tran, Trung Ha Le Hoai, and Hyunseung Choo. A third-party intelligent system for preventing call phishing and message scams. In Inter- national Conference on Future Data and Security Engineering, pages 486– 492. Springer, 2020.

- Gupta, B. B., Gaurav, A., Arya, V., & Alhalabi, W. (2024). The evolution of intellectual property rights in metaverse based Industry 4.0 paradigms. International Entrepreneurship and Management Journal, 20(2), 1111-1126.

- Zhang, T., Zhang, Z., Zhao, K., Gupta, B. B., & Arya, V. (2023). A lightweight cross-domain authentication protocol for trusted access to industrial internet. International Journal on Semantic Web and Information Systems (IJSWIS), 19(1), 1-25.

- Jain, D. K., Eyre, Y. G. M., Kumar, A., Gupta, B. B., & Kotecha, K. (2024). Knowledge-based data processing for multilingual natural language analysis. ACM Transactions on Asian and Low-Resource Language Information Processing, 23(5), 1-16.

Cite As

Rekarius (2025) Real-Time Voice Phishing Detection Using Machine Learning And BERT Approaches, Insights2Techinfo, pp.1