By: K. Sai Spoorthi, Student, Department of Computer Science and Engineering, Madanapalle Institute of Technology and Science, 517325, Angallu, Andhra Pradesh.

Abstract

This paper examines the technical limitations of generative AI models, which persist despite remarkable development and adoption across domains. The first is data dependency: models produce biased or irrelevant content depending on the type and quality of their training data, and the quality and quantity of the available training data affect both the model’s performance and its speed. Constraints in model architecture are a further problem, and overcoming them takes considerable effort when creativity must be maximized and scalability achieved. In addition, because of their complexity, these models are computationally expensive, and questions about replicability limit their practical utility. The paper discusses these technical constraints and argues that models need to be redesigned for better generative practice. It calls for these challenges to be addressed so that generative AI can deliver its intended benefits while remaining ethical.

Keywords: Generative AI, Technical Limitations, Computational Complexity, Data Dependency, Scalability, Ethics in AI

Introduction

In recent years, as artificial intelligence has advanced, generative models have attracted considerable interest across industries. That enthusiasm, however, often overlooks weaknesses that remain central to the nature of these technologies. Depending on the type of model, the generated text, artwork, or synthesized data can look perfectly reasonable, yet the models still operate under real technical constraints. Understanding these limitations is necessary as a guide to suitable implementation methods, and it is crucial for effective practice and correct application in different settings. This article tries to explain several small but intricate technical details that are invisible to the public but that, if left unsolved, may cancel out the benefits of generative AI. By looking at how such systems are built, how they work, and the data on which they are based, one can form an objective assessment of the current state of these technologies and, perhaps, of their prospects. To advance the field at a reasonable level of risk, these limitations must be overcome.

Generative AI Models and Their Applications

In the past few years, generative AI models have proved useful in several areas of engineering, and in materials science in particular. The methods used include generative adversarial networks and genetic algorithms, which allow models to produce new structures and design materials with desired characteristics. This capability is highly beneficial in mechanical and bio-inspired materials science, where generative AI alongside AM helps researchers translate concepts and knowledge from biology into engineering. However, as the use of generative AI grows, so do concerns about ethical use and academic fraud, particularly in education. Many students have reportedly used AI chatbots, drawing on material from the internet, to complete their assessments, and many of them are evidently unaware that this constitutes cheating, hence the need for policies and guidelines on the use of AI technologies in institutions.[1] The balance between the new possibilities created by generative AI and the corresponding risks is therefore at the core of a new paradigm in the field.

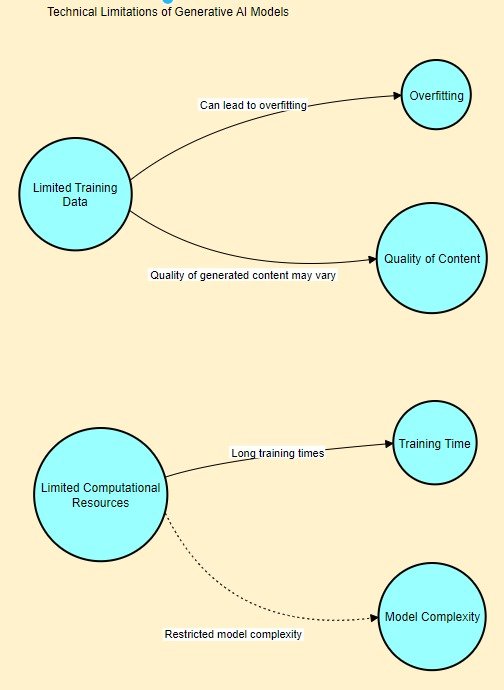

Data Dependency

Because generative AI systems are trained on large datasets, data dependency is one of their main challenges. Models skew their outputs toward the information available to them, which restricts how well they function in other contexts. For example, as shown in fig 1, a model trained only on a specific type of music, or on data about people of a single ethnicity, will be less able to create content that is culturally or contextually relevant, and will instead produce biased or irrelevant output. In addition, the accuracy and credibility of the training dataset underpin the whole process, because errors can be amplified through the model, worsening the problems of misleading information and distorted facts. Handling data dependency is therefore paramount, not only for the performance of generative AI but also for maintaining the models’ ethical behaviour, so that they provide fair and fast content generation across application areas.
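The music example can be illustrated with a deliberately tiny sketch. It assumes a toy "generative model" that only learns the frequency of genre labels in its training set and samples from that distribution; the genre names, the counts, and the functions `fit_categorical` and `generate` are hypothetical and stand in for a real model only to show how a skew in the data reappears in the output.

```python
import random
from collections import Counter


def fit_categorical(samples):
    """Learn the empirical frequency of each label in the training data."""
    counts = Counter(samples)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()}


def generate(model, k, seed=0):
    """Sample k labels from the learned distribution (seeded for repeatability)."""
    rng = random.Random(seed)
    labels = list(model)
    weights = [model[label] for label in labels]
    return rng.choices(labels, weights=weights, k=k)


# Hypothetical, heavily skewed training set: 90% of examples are one genre.
training_genres = ["classical"] * 90 + ["jazz"] * 8 + ["folk"] * 2

model = fit_categorical(training_genres)
outputs = generate(model, k=1000)

# The generated sample mirrors the skew of the training data, and genres that
# were never seen in training (for example "hip-hop") can never be produced.
print(Counter(outputs))
```

A real generative model is far more complex, but the same dependence holds: it can only reproduce, in roughly the proportions it saw, what its training data contained.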

Limitations of Training Data Quality and Quantity

A major limitation that directly affects the learning process of generative AI models is the quantity and quality of the training dataset. Unbalanced or insufficient data can produce skewed results and reduce the model’s efficiency; this is a significant problem in applications where closeness to actual outcomes is critical, such as the retail domain.[2] The literature on citizen data scientists points to major issues of misinformation and biased content generation, especially when generative AI models are used to boost data skills in organisations. Similarly, while generative AI can be applied to producing new materials or predicting the properties of new products, work on mechanical and bio-inspired materials depends heavily on the quality of the data fed to the models. If the training data is of low quality and fails to capture the relationships within a field, these technologies cannot support scientific innovation. Improving the training dataset is therefore a critical factor in advancing generative AI.
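One simple way to make "unbalanced or insufficient data" concrete is to audit the label distribution before training. The sketch below is an illustration under stated assumptions, not a method taken from the cited literature: the function `balance_report`, the two summary metrics, and the retail-style category labels are all hypothetical choices.

```python
import math
from collections import Counter


def balance_report(labels):
    """Summarise how evenly the classes in a dataset are represented."""
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    total = sum(counts.values())
    probs = [n / total for n in counts.values()]
    entropy = -sum(p * math.log(p) for p in probs)
    max_entropy = math.log(len(counts))  # 0.0 when there is only one class
    return {
        "n_samples": total,
        "counts": dict(counts),
        # Largest class size divided by smallest: 1.0 means perfectly balanced.
        "imbalance_ratio": max(counts.values()) / min(counts.values()),
        # 1.0 means a uniform distribution, values near 0 mean one class dominates.
        "normalized_entropy": entropy / max_entropy if max_entropy > 0 else 0.0,
    }


# Hypothetical product-category labels for a retail dataset.
balanced = ["grocery", "apparel"] * 50
skewed = ["grocery"] * 95 + ["apparel"] * 5

print(balance_report(balanced))
print(balance_report(skewed))
```

A check like this covers only class balance and dataset size; it says nothing about label errors or missing relationships in the data, which need separate validation.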

Model Architecture Constraints

Model architecture imposes its own constraints on generative AI solutions. Beyond general questions of network structure, specific limitations of certain strong models can be discussed; transformers, for example, are limited in the scale and kind of questions and the volumes of data they can handle. If the number of layers is increased, giving the network greater depth and width to capture more attributes of the data, the number of computations skyrockets, which in turn drives up training time and resource requirements. Such growth can also reduce creativity and flexibility, and it is subject to the law of diminishing returns: as the structure becomes more complicated, output quality improves only marginally. Fixed architectures also keep a model from transferring knowledge between domains, so it may excel at one task and perform poorly at another, quite unlike how the human mind works. This inherent constraint calls for more flexible and efficient model designs as a route to better generative solutions.
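The growth in cost with depth and width can be made concrete with a back-of-the-envelope estimate. The sketch below assumes a standard transformer block with four attention projection matrices and a feed-forward layer four times the model width, and it ignores biases, layer normalisation, and embeddings; the function names and the example sizes are illustrative assumptions, not figures from this paper.

```python
def transformer_block_params(d_model, ff_mult=4):
    """Approximate weight count of one transformer block (no biases or norms)."""
    attention = 4 * d_model * d_model               # Q, K, V and output projections
    feed_forward = 2 * ff_mult * d_model * d_model  # two linear layers, d -> ff_mult*d -> d
    return attention + feed_forward


def approx_params(n_layers, d_model, ff_mult=4):
    """Approximate weight count of a stack of identical blocks."""
    return n_layers * transformer_block_params(d_model, ff_mult)


# Hypothetical configurations: depth grows linearly, width grows quadratically.
for n_layers, d_model in [(12, 768), (24, 1024), (48, 2048)]:
    millions = approx_params(n_layers, d_model) / 1e6
    print(f"layers={n_layers:3d} width={d_model:5d} params ~ {millions:,.0f}M")
```

Under these assumptions, doubling the depth doubles the weight count while doubling the width quadruples it, which is one reason training time and resource needs rise so quickly as architectures are scaled up.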

Challenges in Scalability and Complexity of Generative Models

Against this background, the key scalability limitations of generative models stem from their need for large datasets and heavy computation. As models become more sophisticated, they demand larger training samples and more powerful computing resources, both of which remain obstacles to practical deployment. Furthermore, the architectures used, for instance deep neural networks, are often highly complex and usually need careful fine-tuning.[3] This not only creates a risk of overfitting but also makes results cumbersome to reproduce, since minimal variations in configuration can yield drastically different results. Researchers are therefore left to compromise between the model that best meets the requirements of a given problem and the model that can actually be implemented in practice; this is why generative models face scalability problems in real-life applications.[4] Moreover, new ideas are constantly being proposed and put into practice, shifting the context and the basic criteria for what counts as a good model; this leaves the whole subject open to question and calls for a rethinking of current capabilities and models.
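The reproducibility problem can be seen even in a toy setting. The sketch below is an assumption-laden illustration rather than a real training pipeline: `toy_training_run` performs noisy gradient descent on the hypothetical loss (w² − 1)², which has two equally good minima at w = 1 and w = −1, and the only thing that changes between runs is the random seed.

```python
import random


def toy_training_run(seed, steps=500, lr=0.01, noise_std=0.1):
    """Noisy gradient descent on the loss (w**2 - 1)**2 from a random start."""
    rng = random.Random(seed)
    w = rng.uniform(-3.0, 3.0)            # random initialisation
    for _ in range(steps):
        grad = 4.0 * w * (w * w - 1.0)    # derivative of (w**2 - 1)**2
        noise = rng.gauss(0.0, noise_std) # stands in for mini-batch noise
        w -= lr * (grad + noise)
    return w


# Same seed: the run is repeated exactly.
print(toy_training_run(seed=0), toy_training_run(seed=0))

# Different seeds: runs can settle in different minima.
for seed in range(5):
    print(seed, round(toy_training_run(seed), 3))
```

Recording the seed, along with the rest of the configuration, is what makes a run repeatable; when it is left unrecorded, two apparently identical experiments can end up in different solutions.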

Conclusion and Implications of Technical Limitations on Future Developments

Any assessment of generative AI models must go hand in hand with the technical limitations pointed out here. These models have the potential to revolutionize fields such as materials science and education, but they are not without drawbacks, such as coding bugs and a lack of contextual understanding. In materials design, for example, where techniques such as generative adversarial networks or topology optimisation are applied, generative AI can be useful in some areas but cannot reproduce complex biological processes with any reliability. Furthermore, as AI tools such as ChatGPT are introduced into education and evidence of substantial benefits from their integration accumulates, their growing use raises critical questions about the ethics of AI-assisted assessment, in which a student’s real creativity and critical thinking may be masked.[5] Finally, these technical limitations need to be acknowledged, both to unlock the true potential of generative AI and to set standards for AI generation techniques in technology as well as in education.

References

- K. Kenthapadi, H. Lakkaraju, and N. Rajani, “Generative AI meets Responsible AI: Practical Challenges and Opportunities,” in Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, in KDD ’23. New York, NY, USA: Association for Computing Machinery, Aug. 2023, pp. 5805–5806. doi: 10.1145/3580305.3599557.

- F. Fui-Hoon Nah, R. Zheng, J. Cai, K. Siau, and L. Chen, “Generative AI and ChatGPT: Applications, challenges, and AI-human collaboration,” J. Inf. Technol. Case Appl. Res., vol. 25, no. 3, pp. 277–304, Jul. 2023, doi: 10.1080/15228053.2023.2233814.

- A. Z. Jacobs and A. Clauset, “A unified view of generative models for networks: models, methods, opportunities, and challenges,” Nov. 14, 2014, arXiv:1411.4070. doi: 10.48550/arXiv.1411.4070.

- M. Rahaman et al., “Utilizing Random Forest Algorithm for Sentiment Prediction Based on Twitter Data,” 2022, pp. 446–456. doi: 10.2991/978-94-6463-084-8_37.

- L. Triyono, R. Gernowo, P. Prayitno, M. Rahaman, and T. R. Yudantoro, “Fake News Detection in Indonesian Popular News Portal Using Machine Learning For Visual Impairment,” JOIV Int. J. Inform. Vis., vol. 7, no. 3, pp. 726–732, Sep. 2023, doi: 10.30630/joiv.7.3.1243.

- P. Chaudhary, B. B. Gupta, K. T. Chui and S. Yamaguchi, “Shielding Smart Home IoT Devices against Adverse Effects of XSS using AI model,” 2021 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 2021, pp. 1-5, doi: 10.1109/ICCE50685.2021.9427591.

- K. C. Li, B. B. Gupta, and D. P. Agrawal, Eds., Recent advances in security, privacy, and trust for internet of things (IoT) and cyber-physical systems (CPS), 2020.

- P. Chaudhary, B. B. Gupta, C. Choi, and K. T. Chui, “XSSPro: XSS attack detection proxy to defend social networking platforms,” in Computational Data and Social Networks: 9th International Conference, CSoNet 2020, Dallas, TX, USA, December 11–13, 2020, Proceedings. Springer International Publishing, 2020, pp. 411–422.

Cite As

Spoorthi K. S. (2024) The Technical Limitations of Generative AI Models, Insights2Techinfo, pp.1