By: Vanna Karthik; Vel Tech University, Chennai, India

Abstract

Deepfake technology, which relies on artificial intelligence, has progressed rapidly in recent years, making it much harder to distinguish real content from artificially modified content. Cyber attackers now exploit this technology by creating AI-generated audio and video that impersonate trusted individuals in phishing attacks. This article examines deepfake phishing: its current trends, its cybersecurity consequences, real-world examples, and prevention methods. Because the sophistication of these attacks makes traditional phishing safeguards ineffective, businesses need both modern detection systems and training programs to stop these evolving threats.

Introduction

Phishing has been one of criminals’ main tools for acquiring sensitive data for many years. Traditional phishing attacks used deceptive messages or emails to obtain victims’ authentication information. The arrival of deepfake technology has created a new form of deception: confidence scams that use AI-produced voice and video to impersonate executives, colleagues, and government officials[1]. Such attacks on financial institutions and individuals pose severe dangers to both organizations and national security. Because deepfake technology is becoming steadily easier to access, even small-scale attackers can now build complex phishing schemes. To withstand the threat, organizations need to stay ahead through early detection and continuous employee education.

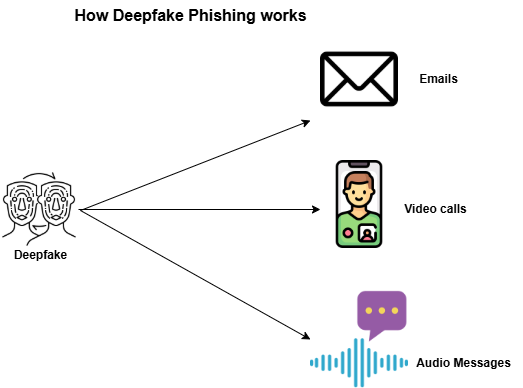

How Deepfake Phishing Works

Deepfake phishing attacks rely on artificial intelligence and machine learning to produce realistic audio and video material. A typical attack proceeds as follows:

1. Cybercriminals gather voice and video samples of the target by scraping publicly available content, particularly social media posts and recorded interviews[2].

2. Attackers train deep learning models on this material so that the models can reproduce the target’s voice patterns and facial movements[3].

3. Fraudsters use the resulting deepfake video and audio clips to impersonate the target in emails, phone calls, and video conferences[3].

4. Believing the source to be legitimate, victims transfer money, hand over confidential details, or carry out malicious instructions[2].

Real-World Cases of Deepfake Phishing

Deepfake phishing is a real threat to businesses: it has already caused severe financial losses and reputational harm. Some notable cases include:

– CEO Fraud in the UK (2019):

Attackers used AI-generated audio mimicking a chief executive’s voice to deceive a victim at a UK company into transferring €220,000 ($243,000) to them[4].

– Fake Video Calls:

Some cybercriminals use doctored video during video conferences to make it appear that trusted executives are issuing instructions. Employees who follow these fake instructions end up harming themselves or their organization[5].

– Government Scams:

Criminals use deepfakes to impersonate political and government officials in order to spread fabricated information and extort payments from victims[5].

The Challenges of Detecting Deepfake Phishing

Several factors make deepfake phishing attacks exceptionally difficult to detect:

– AI-generated synthetic media closely resembles authentic recordings.

– AI models improve so quickly that detection systems can become outdated soon after, or even before, they are deployed.

– Employees and other individuals remain vulnerable because they lack sufficient awareness of deepfake threats.

– Unlike conventional phishing emails, deepfake phishing arrives as audio or video, so existing email authentication and spam filters are not designed to identify it.

Strategies to Combat Deepfake Phishing [6]

Organizations and individuals need proactive countermeasures to stay protected against deepfake phishing. Some key strategies include:

1. Organizations should deploy AI-driven deepfake detection software that analyzes audio and video content for evidence of manipulation.

2. Organizations should run dedicated cybersecurity training that teaches employees how to recognize deepfake phishing attempts and how to report them.

3. Employees should be required to verify their identity through multi-factor authentication, combining biometric features with secure personal identification numbers, before accessing corporate accounts.

4. Organizations should implement verification protocols that let staff confirm suspicious requests through a separate, trusted communication channel.

5. Governments should pass stronger laws against the misuse of deepfake technology and impose appropriate penalties on offenders.
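The verification protocol in strategy 4 can be written down as an explicit policy, so that the decision to act never depends on how convincing a voice or video seemed. The following is a minimal sketch in Python, not a description of any particular product. The action names, channel names, amount threshold, and the `Request` and `verification_steps` names are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical policy values, chosen only for illustration.
HIGH_RISK_ACTIONS = {"wire_transfer", "credential_share", "vendor_bank_change"}
UNVERIFIED_CHANNELS = {"phone", "video_call", "voicemail", "email"}
AMOUNT_THRESHOLD = 10_000  # assumed currency units


@dataclass
class Request:
    requester: str                          # who the caller claims to be
    channel: str                            # how the request arrived
    action: str                             # what is being asked for
    amount: float = 0.0                     # monetary value, if any
    callback_confirmed: bool = False        # confirmed via a directory-listed number
    second_approver: Optional[str] = None   # independent person who signed off


def verification_steps(req: Request) -> List[str]:
    """Return the checks still outstanding before the request may be acted on."""
    steps: List[str] = []
    risky = req.action in HIGH_RISK_ACTIONS or req.amount >= AMOUNT_THRESHOLD
    if not risky:
        return steps
    # A voice or face alone is not proof of identity: confirm through a
    # separate channel that the attacker does not control.
    if req.channel in UNVERIFIED_CHANNELS and not req.callback_confirmed:
        steps.append("call back on a directory-listed number")
    # Require an approver who is not the person making the request.
    if req.second_approver is None or req.second_approver == req.requester:
        steps.append("obtain approval from a second person")
    return steps


if __name__ == "__main__":
    urgent = Request(requester="ceo", channel="phone",
                     action="wire_transfer", amount=220_000)
    for step in verification_steps(urgent):
        print("Outstanding:", step)
```

An empty list means nothing is outstanding and the request can proceed. In practice the thresholds and action categories would come from the organization's own risk policy rather than from hard-coded constants.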

Conclusion

Deepfake phishing combines traditional phishing with AI capabilities and is emerging as a leading cyber threat. As these attacks grow more advanced, businesses and individuals must pay closer attention and invest in both advanced technologies and extensive awareness initiatives. Countering deepfake phishing requires organizations to adopt strong AI-based security measures, operate within clear regulatory frameworks, and provide ongoing education to employees. Partnerships among cybersecurity specialists, law enforcement agencies, and technology organizations can help reduce deepfake phishing risks globally.

References

- “AI and Actors: Ethical Challenges, Cultural Narratives and Industry Pathways in Synthetic Media Performance.” Accessed: Feb. 10, 2025. [Online]. Available: https://journals.sagepub.com/doi/epub/10.1177/27523543241289108

- M. F. B. Ahmed, M. S. U. Miah, A. Bhowmik, and J. B. Sulaiman, “Awareness to Deepfake: A resistance mechanism to Deepfake,” in 2021 International Congress of Advanced Technology and Engineering (ICOTEN), Jul. 2021, pp. 1–5. doi: 10.1109/ICOTEN52080.2021.9493549.

- M. Masood, M. Nawaz, K. M. Malik, A. Javed, A. Irtaza, and H. Malik, “Deepfakes generation and detection: state-of-the-art, open challenges, countermeasures, and way forward,” Appl. Intell., vol. 53, no. 4, pp. 3974–4026, Feb. 2023, doi: 10.1007/s10489-022-03766-z.

- “Unusual CEO Fraud via Deepfake Audio Steals US$243,000 From UK Company | Trend Micro (US).” Accessed: Feb. 10, 2025. [Online]. Available: https://www.trendmicro.com/vinfo/us/security/news/cyber-attacks/unusual-ceo-fraud-via-deepfake-audio-steals-us-243-000-from-u-k-company

- H. Farid, “Creating, Using, Misusing, and Detecting Deep Fakes,” J. Online Trust Saf., vol. 1, no. 4, Art. no. 4, Sep. 2022, doi: 10.54501/jots.v1i4.56.

- M. Mustak, J. Salminen, M. Mäntymäki, A. Rahman, and Y. K. Dwivedi, “Deepfakes: Deceptions, mitigations, and opportunities,” J. Bus. Res., vol. 154, p. 113368, Jan. 2023, doi: 10.1016/j.jbusres.2022.113368.

- L. Zou, J. Sun, M. Gao, W. Wan, and B. B. Gupta, “A novel coverless information hiding method based on the average pixel value of the sub-images,” Multimed. Tools Appl., vol. 78, pp. 7965–7980, 2019.

- M. Alweshah, S. A. Khalaileh, B. B. Gupta, A. Almomani, A. I. Hammouri, and M. A. Al-Betar, “The monarch butterfly optimization algorithm for solving feature selection problems,” Neural Comput. Appl., pp. 1–15, 2022.

- K. S. Spoorthi, “Generative AI in Personalized Medicine,” Insights2Techinfo, p. 1, 2024.

Cite As

Karthik V. (2025) Deepfake Phishing: When AI Voices and Videos Trick You, Insights2Techinfo, pp.1