By: Ankita Manohar Walawalkar, Department of Business Administration, Asia University; ankitamw@ieee.com

Abstract:

This article explores the theoretical idea of AI legal personhood, analysing European and U.S. practices. It outlines criteria for determining AI’s legal status and stresses the rejection of legal personhood for military AI. For civil claims, AI can be treated as a legal person or as an agent. Recognition by society is vital, and legislators must consider the impact on existing rights. The study proposes granting legal status to android robots to deter unlawful acts. The article concludes that societal reactions, changes to the legal system, and necessity will shape acceptance, with emphasis on social recognition, manageable consequences, and benefits to the state and society.

Introduction:

The question of AI legal status, though presently theoretical, captivates the global scientific community. While intelligent robots are not yet commonplace, the matter seems likely to move from theory to practice in the next decade. The practical implications of AI legal personhood are evident in the profound societal changes driven by digital technologies [1]. This shift prompts a re-examination of the legal system, raising questions about its alignment with moral rules and its influence on the economy. The analysis extends to other subjects, such as corporations and animals, prompting a reconsideration of their legal personhood. Importantly, this review prepares us for rapid change by anticipating the swift introduction of highly intelligent systems with significant economic benefits. The article aims to discuss how to assess AI candidates for legal personhood, offering a list of criteria for making informed decisions in the absence of a detailed methodology [2] [3].

Formal Aspect:

a. No Formal Obstacles to AI Personhood: Theoretically, there are no legal obstacles to granting legal personhood to autonomous machines. Earlier recognitions of legal persons, such as corporations and environmental features, demonstrate the flexibility of legal systems [4] [5].

b. Formal Aspects of Legal Personhood: Legal personhood, in a formal sense, involves being a bearer of rights and duties. Though the criteria for legal personhood differ, holding rights and having the capacity to sue and be sued are usually associated with it [3].

c. Human Distinctions in Legal Personhood: Legal systems have historically developed around human characteristics, such as feelings, intentions, and consciousness. Arguments against AI personhood often revolve around the absence of these critical human qualities [1].

d. European Union’s Perspective: The European Union is actively deliberating the legal status of AI, particularly in civil law. Though it has not openly endorsed AI personhood, there is acknowledgment that AI may become an independent subject in civil law, participating in business transactions [2].

e. United States’ Position: The U.S. Department of Defense emphasizes that legal determinations are made by humans, not by AI weapons. Meanwhile, the National Artificial Intelligence Research and Development Strategic Plan encourages the development of robots that adhere to ethical and legal rules [2].

f. Social Recognition as a Factor: The recognition of legal persons, including AI, by society is critical. Comparable cases involving environmental features and idols suggest that social recognition is pivotal for the practical protection of legal interests [1][6].

g. Proxy Representation for AI: As AI lacks human-like intentions and needs, the role of a representative becomes vital for legal personhood. The proxy serves to express the AI’s will and intentions, mirroring past cases of legal person recognition [5].

h. Legal Pluralism and Cultural Norms: Social recognition, even by a minority, plays an important role in legal pluralism. Shifts in legal personhood are often influenced by changing cultural norms, and state recognition is difficult to achieve without wider social acceptance [4].

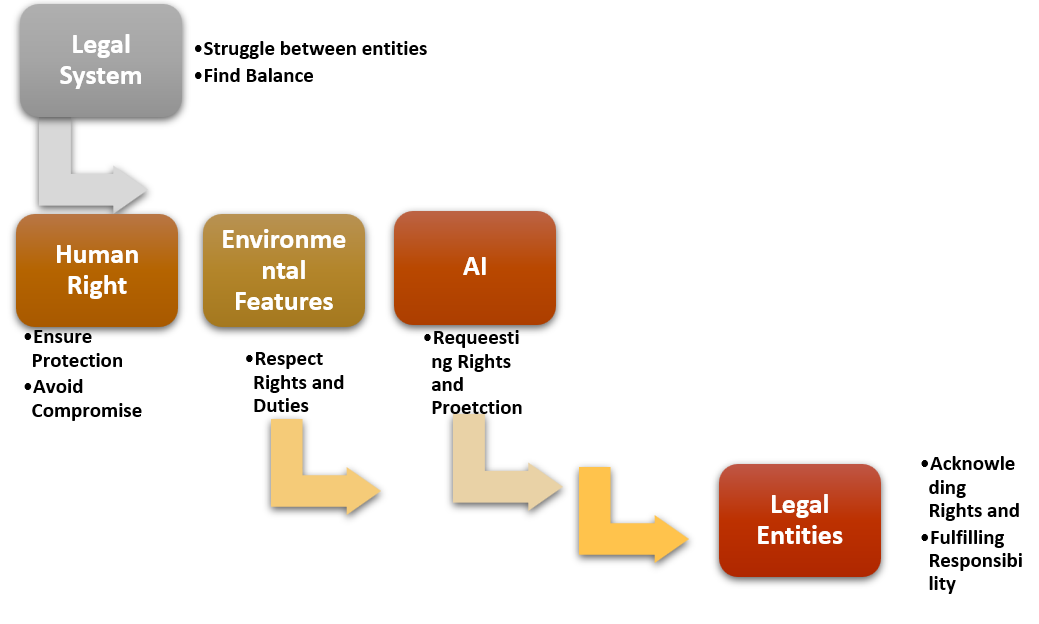

Legal System:

Granting legal personhood to AI systems raises fundamental questions within the systematic structure of law. As a legal entity, AI would claim rights and protection, reshaping the legal landscape [4]. The recognition of environmental features as legal persons, as seen in Ecuador, establishes a precedent in which respecting their rights becomes an obligation. The development of legal systems, often marked by conflicts between different legal entities, requires a gradual process to find balance. Introducing AI as a legal person acts as a transformative force, affecting institutions and principles within the legal framework. However, policymakers must steer this development carefully, ensuring that the rights of existing subjects, especially fundamental human rights, are not compromised but rather strengthened over time [6].

Conclusion:

The main finding of this study is the absence of conclusive strategies or algorithms for determining AI legal personhood. Instead, decisions on this matter are inherently case-specific, as demonstrated by the outright denial of personhood for certain categories such as autonomous weapons [7]. A limitation observed in this study is that the lack of a unified concept of AI complicates decision-making, especially in cases with unclear grounds for legal personhood, such as android intelligent robots. Further research directions include in-depth societal impact studies to understand how perceptions shape the recognition of AI as legal persons, investigation of the evolving dynamics within legal systems adapting to AI personhood, and examination of ethical implications in diverse cultural contexts [2].

References:

- R. Rodrigues, “Legal and human rights issues of AI: Gaps, challenges and vulnerabilities,” J. Responsible Technol., vol. 4, p. 100005, Dec. 2020, doi: 10.1016/j.jrt.2020.100005.

- R. Dremliuga, P. Kuznetcov, and A. Mamychev, “Criteria for Recognition of AI as a Legal Person,” J. Polit. Law, vol. 12, p. 105, Aug. 2019, doi: 10.5539/jpl.v12n3p105.

- D. Chen, S. Deakin, M. Siems, and B. Wang, “Law, trust and institutional change in China: evidence from qualitative fieldwork,” J. Corp. Law Stud., vol. 17, no. 2, pp. 257–290, Jul. 2017, doi: 10.1080/14735970.2016.1270252.

- E. O’Donnell and A. Arstein-Kerslake, “Recognising personhood: the evolving relationship between the legal person and the state,” Griffith Law Rev., vol. 30, no. 3, pp. 339–347, Jul. 2021, doi: 10.1080/10383441.2021.2044438.

- “What makes an AI device human-like? The role of interaction quality, empathy and perceived psychological anthropomorphic characteristics in the acceptance of artificial intelligence in the service industry – ScienceDirect.” Accessed: Mar. 03, 2024. [Online]. Available: https://www.sciencedirect.com/science/article/abs/pii/S0747563221001783

- A. Gaurav, B. B. Gupta, C. H. Hsu, A. Castiglione, and K. T. Chui, “Machine learning technique for fake news detection using text-based word vector representation,” in Computational Data and Social Networks: 10th International Conference, CSoNet 2021, Virtual Event, November 15–17, 2021, Proceedings, Springer International Publishing, 2021, pp. 340–348.

- “The Rights of Nature as a Legal Response to the Global Environmental Crisis? A Critical Review of International Law’s ‘Greening’ Agenda | SpringerLink.” Accessed: Mar. 03, 2024. [Online]. Available: https://link.springer.com/chapter/10.1007/978-94-6265-587-4_3

Cite As

Walawalkar A M (2024) Directing the Legal Landscape: Establishing Measures for AI as a Legal Person, Insights2Techinfo, pp.1