By: Himanshu Tiwari, International Center for AI and Cyber Security Research and Innovations (CCRI), Asia University, Taiwan, nomails1337@gmail.com

Abstract:

Federated Learning (FL) has attracted wide interest as a privacy-preserving technique for training machine learning models across decentralized devices and data sources. However, as FL applications grow in scale and complexity, so do concerns about privacy and communication efficiency. In this article, we explore how Differential Privacy and Model Compression techniques can be integrated to address these challenges and further improve the utility of FL while preserving data privacy.

Introduction:

Federated Learning [1], a decentralized approach to training machine learning models, offers a promising solution to the problem of preserving user privacy. It allows data to remain on individual devices while models are trained collaboratively, without centralizing sensitive data. However, FL is not without challenges, including the need for stronger privacy guarantees and better communication efficiency. In this article, we examine two key techniques, Differential Privacy and Model Compression, for improving the effectiveness of FL [1][2].
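To make the collaborative training loop concrete, here is a minimal Python sketch of Federated Averaging (FedAvg), a widely used baseline aggregation rule. The article does not name a specific algorithm, so FedAvg is our assumption for illustration, as are the one-feature linear model, the learning rate, and the hypothetical helper names `local_update`, `fed_avg`, and `train`. Each client runs a few steps of gradient descent on data that never leaves it; the server only receives the resulting weights and averages them, weighting each client by its sample count.

```python
import random


def local_update(weights, data, lr=0.05, epochs=5):
    """Run a few epochs of SGD on one client's private data.

    Hypothetical model for illustration: y = w * x + b with squared error.
    Only the updated weights leave the client; `data` never does.
    """
    w, b = weights
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y  # prediction error on one sample
            w -= lr * err * x      # step along -d(0.5 * err**2)/dw
            b -= lr * err          # step along -d(0.5 * err**2)/db
    return [w, b]


def fed_avg(client_weights, client_sizes):
    """Server step: average client models, weighted by local sample counts."""
    total = sum(client_sizes)
    return [
        sum(cw[i] * n for cw, n in zip(client_weights, client_sizes)) / total
        for i in range(len(client_weights[0]))
    ]


def train(clients, rounds=20):
    """Alternate local training and weighted averaging for a fixed number of rounds."""
    global_weights = [0.0, 0.0]
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in clients]
        global_weights = fed_avg(updates, [len(data) for data in clients])
    return global_weights


if __name__ == "__main__":
    random.seed(0)
    # Three hypothetical clients, each holding noisy samples of y = 2x + 1.
    clients = []
    for _ in range(3):
        xs = [random.uniform(-1, 1) for _ in range(30)]
        clients.append([(x, 2 * x + 1 + random.gauss(0, 0.1)) for x in xs])
    w, b = train(clients)
    print(f"learned w={w:.2f}, b={b:.2f}")
```

The server never touches raw samples, which is the property the privacy and compression techniques below build on: what crosses the network is only the per-round weight vector.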

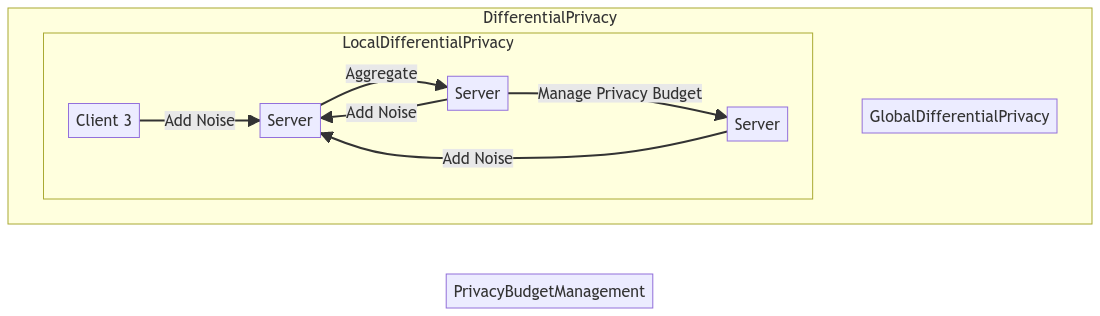

1. Differential Privacy in Federated Learning:

Differential Privacy [3] is a framework that adds calibrated noise to the training process, making it harder for an attacker to infer specific information about any individual's data. By integrating Differential Privacy into FL, we can significantly strengthen the privacy guarantees of the federated learning process. This approach involves the following steps:

Local Differential Privacy [4]: Each participating device or client adds noise to its gradients or model updates before sending them to the server. This bounds how much information about any individual record can leak through the updates during training.

Global Differential Privacy [5]: The server aggregates the clients' model updates using a differentially private aggregation algorithm (for example, clipping each update and adding noise to the aggregate), thereby preserving privacy at the global level.

Privacy Budget Management: Careful management of the privacy budget (commonly denoted ε) is essential for controlling how much noise is added and, in turn, the overall privacy guarantees.
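The three steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production mechanism: updates are plain lists of floats, each update is clipped to an L2 norm bound to limit sensitivity, noise comes from the classical Gaussian mechanism (whose calibration formula holds only for 0 < ε < 1), and the budget is tracked with basic sequential composition, which simply adds per-round costs. All names (`clip`, `gaussian_sigma`, `local_dp_update`, `dp_aggregate`, `PrivacyBudget`) are hypothetical.

```python
import math
import random


def clip(update, max_norm):
    """Scale `update` down so its L2 norm is at most `max_norm` (bounds sensitivity)."""
    norm = math.sqrt(sum(u * u for u in update))
    if norm <= max_norm:
        return list(update)
    return [u * (max_norm / norm) for u in update]


def gaussian_sigma(epsilon, delta, sensitivity):
    """Noise std of the classical Gaussian mechanism (Dwork & Roth); needs 0 < epsilon < 1."""
    if not (0 < epsilon < 1 and 0 < delta < 1):
        raise ValueError("classical calibration requires 0 < epsilon < 1 and 0 < delta < 1")
    return math.sqrt(2 * math.log(1.25 / delta)) * sensitivity / epsilon


def local_dp_update(update, max_norm, epsilon, delta, rng=random):
    """Local DP: the client clips and perturbs its own update before sending it."""
    clipped = clip(update, max_norm)
    # Any two clipped updates differ by at most 2 * max_norm in L2 norm.
    sigma = gaussian_sigma(epsilon, delta, 2 * max_norm)
    return [u + rng.gauss(0.0, sigma) for u in clipped]


def dp_aggregate(updates, max_norm, epsilon, delta, rng=random):
    """Global (central) DP: clip each update, sum, add Gaussian noise, then average.

    Adding or removing one client changes the clipped sum by at most max_norm.
    Assumes the number of clients per round is fixed and public, so dividing
    by it costs no extra privacy.
    """
    clipped = [clip(u, max_norm) for u in updates]
    totals = [sum(c[i] for c in clipped) for i in range(len(clipped[0]))]
    sigma = gaussian_sigma(epsilon, delta, max_norm)
    return [(t + rng.gauss(0.0, sigma)) / len(updates) for t in totals]


class PrivacyBudget:
    """Track cumulative (epsilon, delta) with basic sequential composition."""

    def __init__(self, max_epsilon, max_delta):
        self.max_epsilon = max_epsilon
        self.max_delta = max_delta
        self.spent_epsilon = 0.0
        self.spent_delta = 0.0

    def spend(self, epsilon, delta):
        """Charge one round; refuse (return False) if it would exceed the budget."""
        if (self.spent_epsilon + epsilon > self.max_epsilon
                or self.spent_delta + delta > self.max_delta):
            return False
        self.spent_epsilon += epsilon
        self.spent_delta += delta
        return True


if __name__ == "__main__":
    rng = random.Random(0)
    budget = PrivacyBudget(max_epsilon=2.0, max_delta=1e-5)
    client_updates = [[0.5, -0.2, 0.1] for _ in range(100)]
    round_eps, round_delta = 0.5, 1e-6
    while budget.spend(round_eps, round_delta):
        noisy_avg = dp_aggregate(client_updates, 1.0, round_eps, round_delta, rng)
        print([round(v, 3) for v in noisy_avg])
    print("budget exhausted after epsilon =", budget.spent_epsilon)
```

Basic composition overestimates the total cost over many rounds, so real deployments usually rely on tighter accountants such as Rényi DP; the overall structure (clip, add noise, charge the budget, stop when it runs out) stays the same.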

2. Model Compression in Federated Learning:

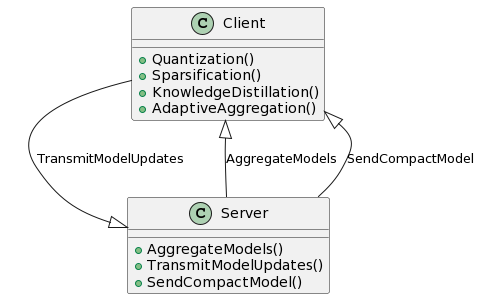

Model Compression is another key technique for improving the efficiency of FL. It aims to reduce the communication overhead of transmitting model updates between clients and the server. Several approaches can be employed [6]:

– Quantization: By quantizing model parameters, that is, representing them with fewer bits, the size of transmitted updates can be reduced substantially.

– Sparsification: Sparse models or updates, in which many parameters are zero, can be transmitted efficiently, saving bandwidth and lowering communication costs.

– Knowledge Distillation: The server can send a compact teacher model to clients, which helps them update their models more efficiently.

– Adaptive Aggregation: Dynamically selecting which clients to include in each round of aggregation, based on their performance or contribution, can reduce communication overhead.
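Two of the compression approaches above, quantization and sparsification, can be sketched as follows. We assume uniform quantization with a per-update minimum and step size, and top-k sparsification that transmits only (index, value) pairs; both choices and the helper names `quantize`, `dequantize`, `top_k_sparsify`, and `densify` are illustrative assumptions rather than a specific method from the cited work.

```python
def quantize(values, bits=8):
    """Map floats to unsigned `bits`-bit integer codes on a uniform grid.

    Returns (codes, lo, scale); the receiver reconstructs lo + code * scale.
    """
    lo, hi = min(values), max(values)
    levels = (1 << bits) - 1              # e.g. 255 for 8 bits
    scale = (hi - lo) / levels if hi > lo else 1.0
    codes = [round((v - lo) / scale) for v in values]
    return codes, lo, scale


def dequantize(codes, lo, scale):
    """Approximate the original floats; error is at most scale / 2 per entry."""
    return [lo + c * scale for c in codes]


def top_k_sparsify(values, k):
    """Keep only the k largest-magnitude entries as sorted (index, value) pairs."""
    top = sorted(range(len(values)), key=lambda i: abs(values[i]), reverse=True)[:k]
    return sorted((i, values[i]) for i in top)


def densify(pairs, length):
    """Rebuild a dense vector, filling dropped positions with zeros."""
    dense = [0.0] * length
    for i, v in pairs:
        dense[i] = v
    return dense


if __name__ == "__main__":
    update = [0.02, -0.75, 0.10, 0.33, -0.01, 0.56]
    codes, lo, scale = quantize(update, bits=8)
    print("8-bit codes:", codes)
    print("reconstructed:", [round(v, 3) for v in dequantize(codes, lo, scale)])
    sparse = top_k_sparsify(update, k=2)
    print("top-2 pairs:", sparse)
    print("densified:", densify(sparse, len(update)))
```

Compared with 32-bit floats, 8-bit codes shrink the payload roughly fourfold (plus two floats for `lo` and `scale`), at the cost of a reconstruction error of at most half a step per entry. Top-k sends only k of n entries; many systems accumulate the dropped remainder locally (error feedback) so that it is added back in later rounds instead of being lost.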

Conclusion:

Federated Learning has the potential to revolutionize machine learning by preserving privacy and decentralizing model training. However, addressing the challenges of privacy and communication efficiency is essential for its widespread adoption. Integrating Differential Privacy and Model Compression techniques provides a strong framework for achieving these goals. As FL applications continue to grow in complexity and scale, these techniques will play an essential role in shaping the future of privacy-preserving machine learning.

As research in this field progresses, new advances in privacy-enhancing and communication-efficiency techniques will further solidify the role of Federated Learning as a cornerstone of secure, collaborative machine learning. The combination of Differential Privacy and Model Compression opens up promising opportunities for improving the privacy and efficiency of FL, making it an exciting area for researchers and practitioners alike.

References

- Zhang, Z., Pinto, A., Turina, V., Esposito, F., & Matta, I. (2023). Privacy and Efficiency of Communications in Federated Split Learning. IEEE Transactions on Big Data, 9(5), 1380–1391. https://doi.org/10.1109/tbdata.2023.3280405

- Li, A., Zhang, L., Wang, J., Han, F., & Li, X. Y. (2022). Privacy-Preserving Efficient Federated-Learning Model Debugging. IEEE Transactions on Parallel and Distributed Systems, 33(10), 2291–2303. https://doi.org/10.1109/tpds.2021.3137321

- Ouadrhiri, A. E., & Abdelhadi, A. (2022). Differential Privacy for Deep and Federated Learning: A Survey. IEEE Access, 10, 22359–22380. https://doi.org/10.1109/access.2022.3151670

- Zhao, Y., Zhao, J., Yang, M., Wang, T., Wang, N., Lyu, L., Niyato, D., & Lam, K. Y. (2021). Local Differential Privacy-Based Federated Learning for Internet of Things. IEEE Internet of Things Journal, 8(11), 8836–8853. https://doi.org/10.1109/jiot.2020.3037194

- Choudhury, O., Gkoulalas-Divanis, A., Salonidis, T., Sylla, I., Park, Y., Hsu, G., & Das, A. (2019). Differential Privacy-enabled Federated Learning for Sensitive Health Data. arXiv preprint arXiv:1910.02578. https://arxiv.org/abs/1910.02578v3

- Yang, Q., Liu, Y., Chen, T., & Tong, Y. (2019). Federated Machine Learning: Concept and Applications. ACM Transactions on Intelligent Systems and Technology, 10(2), 1–19. https://doi.org/10.1145/3298981

- Deveci, M., Gokasar, I., Pamucar, D., Zaidan, A. A., Wen, X., & Gupta, B. B. (2023). Evaluation of Cooperative Intelligent Transportation System scenarios for resilience in transportation using type-2 neutrosophic fuzzy VIKOR. Transportation Research Part A: Policy and Practice, 172, 103666.

- Gupta, B. B., Gaurav, A., Panigrahi, P. K., & Arya, V. (2023). Analysis of artificial intelligence-based technologies and approaches on sustainable entrepreneurship. Technological Forecasting and Social Change, 186, 122152.

- Li, S., Gao, L., Han, C., Gupta, B., Alhalabi, W., & Almakdi, S. (2023). Exploring the effect of digital transformation on Firms’ innovation performance. Journal of Innovation & Knowledge, 8(1), 100317.

- Malik, M., Prabha, C., Soni, P., Arya, V., Alhalabi, W. A., Gupta, B. B., … & Almomani, A. (2023). Machine Learning-Based Automatic Litter Detection and Classification Using Neural Networks in Smart Cities. International Journal on Semantic Web and Information Systems (IJSWIS), 19(1), 1–20.

Cite As

Tiwari, H. (2023). Enhancing Privacy and Efficiency in Federated Learning through Differential Privacy and Model Compression Techniques. Insights2Techinfo, pp. 1.