By: 1Diksha & 2Deepika Goyal

1,2Department of CSE, Chandigarh College of Engineering and Technology, Chandigarh, India

ABSTRACT

Serverless computing, commonly delivered as Function-as-a-Service (FaaS), now plays an important role in cloud computing and is changing how applications are developed and deployed. This article examines serverless architecture with a focus on its event-driven nature, automatic scaling, and consumption-based pricing, which together can lower cost without sacrificing service quality. It highlights key benefits such as lower administrative overhead, reduced time-to-market, and improved resource utilization, and it addresses challenges such as state management and cold start delays. The primary aim of this research is to provide a comprehensive framework that organizations can use when adopting serverless computing for digital transformation.

KEYWORDS: Cloud Computing, Machine learning, IoT

INTRODUCTION

Serverless computing [1] has gained momentum as a disruptive force that is transforming cloud platforms and the way software is developed, deployed, and managed. Its most common form is Function-as-a-Service (FaaS) [2]: instead of provisioning and managing virtual machines, developers upload individual functions, and the cloud provider runs them on demand in response to events or API calls. By abstracting away infrastructure concerns and adopting an event-driven model, serverless architecture [3] offers strong scalability, ease of use, and lower cost compared with conventional server-based architectures.

In this study, we will provide a detailed analysis of serverless computing, including its main principles, structural elements, benefits, limitations, and some possible uses.

FOUNDATION OF SERVERLESS COMPUTING

Serverless computing marks a significant change in cloud technology [4], revolutionising how applications are developed, deployed, and managed. This section delves into the core concepts and architectural principles underpinning serverless computing [5].

Serverless computing is fundamentally rooted in an event-driven architecture. Unlike traditional server setups, serverless applications respond to specific events such as HTTP [6] requests, database modifications, and file uploads. This event-driven approach optimises resource usage by activating computing resources only when needed, resulting in cost efficiency and improved scalability [7].
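The event-driven idea can be illustrated with a minimal sketch. The registry, the `on` decorator, the `dispatch` function, and the event type names below are hypothetical stand-ins for a provider's trigger configuration, not any real platform API. The point is only that code runs when a matching event arrives and that nothing runs otherwise.

```python
from typing import Any, Callable, Dict, List

# Hypothetical in-process registry standing in for a provider's trigger setup.
Handler = Callable[[Dict[str, Any]], Any]
_handlers: Dict[str, List[Handler]] = {}


def on(event_type: str) -> Callable[[Handler], Handler]:
    """Register a function to run when an event of the given type arrives."""
    def register(func: Handler) -> Handler:
        _handlers.setdefault(event_type, []).append(func)
        return func
    return register


def dispatch(event: Dict[str, Any]) -> List[Any]:
    """Invoke every handler registered for the event's type."""
    # With no registered handler, no work is done and an empty list is returned.
    return [handler(event) for handler in _handlers.get(event["type"], [])]


@on("http.request")
def greet(event: Dict[str, Any]) -> str:
    return f"Hello, {event['payload']['name']}"


@on("file.uploaded")
def record_upload(event: Dict[str, Any]) -> str:
    return f"Indexed {event['payload']['key']}"
```

Calling `dispatch({"type": "http.request", "payload": {"name": "Ada"}})` runs only `greet`; an event of an unregistered type triggers no function at all.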

Automatic scaling is another key aspect of serverless computing. Cloud providers automatically adjust resources to meet incoming event demands, ensuring seamless scalability as needed. This dynamic scaling eliminates the need for manual capacity planning and provisioning, allowing developers to focus mainly on coding without infrastructure worries.
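As a rough illustration of what a platform has to decide when it scales, the number of function instances needed can be estimated with Little's law (in-flight requests = arrival rate × average duration). The function name and parameters below are assumptions made for this sketch; real providers apply their own scaling policies and limits.

```python
import math


def required_instances(requests_per_second: float,
                       avg_duration_seconds: float,
                       concurrency_per_instance: int = 1) -> int:
    """Estimate how many instances are needed to serve the current load.

    Little's law gives the average number of requests in flight; dividing by
    the per-instance concurrency and rounding up gives the instance count.
    """
    in_flight = requests_per_second * avg_duration_seconds
    return math.ceil(in_flight / concurrency_per_instance)
```

For example, 100 requests per second at 0.25 seconds each implies about 25 concurrent executions, and zero traffic implies zero instances, which is the scale-to-zero behaviour that distinguishes serverless from pre-provisioned capacity.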

Additionally, serverless computing adopts a pay-as-you-go pricing model. Users are charged based on the actual resource consumption of their functions rather than paying for pre-allocated capacity. This consumption-based pricing eliminates costs associated with idle resources and offers flexibility for users with fluctuating workloads [8].
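A small worked example may make consumption-based pricing concrete. It assumes a billing model with a per-request charge plus a charge per GB-second of execution; the function name and all prices passed to it are placeholders for illustration, not any provider's actual rates.

```python
def monthly_cost(invocations: int,
                 avg_duration_ms: float,
                 memory_mb: int,
                 price_per_million_requests: float,
                 price_per_gb_second: float) -> float:
    """Estimate a monthly bill under an assumed request + GB-second model."""
    # Compute consumption: seconds of execution scaled by allocated memory in GB.
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    request_cost = invocations / 1_000_000 * price_per_million_requests
    compute_cost = gb_seconds * price_per_gb_second
    return request_cost + compute_cost
```

With zero invocations the result is zero, which reflects the claim above that idle capacity is not billed.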

Comprehending these foundational principles empowers readers to leverage the full potential of serverless computing. Upcoming sections will delve into practical implications, including advantages, obstacles, and real-world applications across various industries.

APPLICATION OF SERVERLESS COMPUTING

In web development, serverless architectures have become widespread in the creation of web apps, APIs, and microservices [9]. They let developers focus on the code itself without having to worry about infrastructure, yielding solutions that are flexible, scalable, and affordable.

In data processing, serverless computing has gained popularity as an effective means of running ETL, real-time analytics, and batch processing workloads [10]. Organizations can thus manage large data volumes and extract useful insights to enhance their decision-making at a lower cost.

In IoT (Internet of Things) applications, serverless computing is a well-suited development platform, enabling data from IoT devices to be processed and analysed in real time [11]. Because the platform scales automatically, it adapts to variations in device connections and data streams, which supports highly adaptive and scalable IoT solutions.

In machine learning, serverless platforms are increasingly used for tasks such as model inference, data pre-processing, and real-time prediction [12]. Companies can thereby implement and manage scalable artificial intelligence systems more effectively, which translates into cost savings and faster delivery of value.

ARCHITECTURE OF SERVERLESS COMPUTING

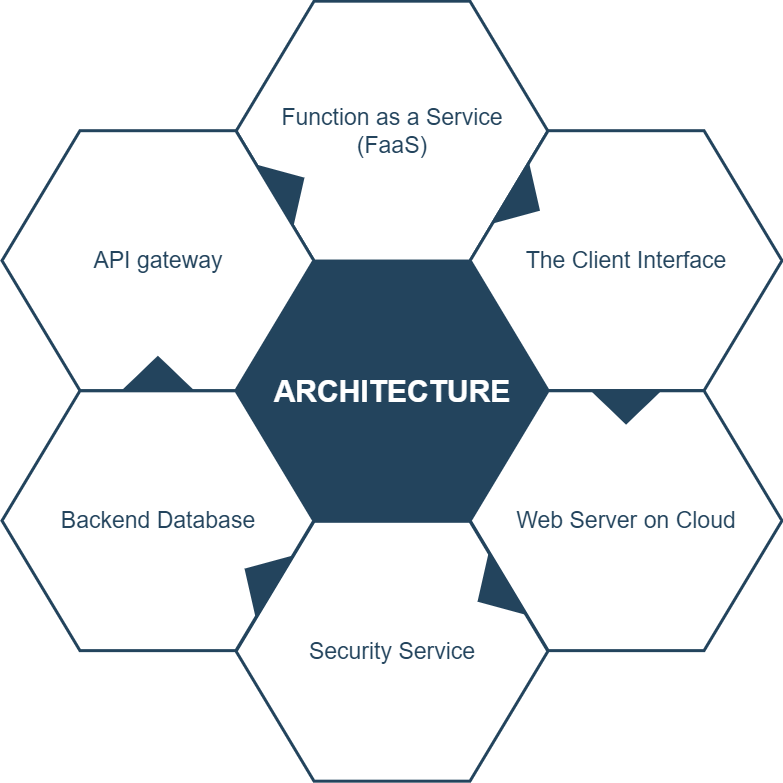

Serverless computing, with FaaS at its core, replaces traditional server management with an architecture that abstracts away infrastructure details so that developers can concentrate on code. On the user’s side, applications are reached through a user-friendly interface. Cloud-hosted web servers scale resources according to demand, while security services protect applications from threats. Backend databases store and manage application data, and API gateways mediate communication between clients and serverless functions. Together, these components support agile, scalable, and low-cost development and delivery in the cloud.

Fig 1: Key components of serverless architecture.
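How these components interact can be sketched in a few lines. Everything named here (`gateway`, `ROUTES`, `VALID_TOKENS`, `ORDERS_DB`, the request shape) is a hypothetical simplification: the gateway performs a token check in place of a security service, looks up the function for the requested route, and the function reads from a dictionary standing in for the backend database.

```python
from typing import Callable, Dict, Tuple

# Stand-ins for the backend database and the security service's token list.
ORDERS_DB: Dict[str, dict] = {"42": {"item": "keyboard", "qty": 1}}
VALID_TOKENS = {"demo-token"}


def get_order(request: dict) -> dict:
    """Serverless function: fetch one order from the backend store."""
    order = ORDERS_DB.get(request["params"]["id"])
    if order is None:
        return {"status": 404, "body": {"error": "order not found"}}
    return {"status": 200, "body": order}


# Route table: (HTTP method, path) -> function to invoke.
ROUTES: Dict[Tuple[str, str], Callable[[dict], dict]] = {
    ("GET", "/orders"): get_order,
}


def gateway(request: dict) -> dict:
    """API gateway: authenticate, route to a function, return its response."""
    if request.get("token") not in VALID_TOKENS:
        return {"status": 401, "body": {"error": "unauthorized"}}
    func = ROUTES.get((request["method"], request["path"]))
    if func is None:
        return {"status": 404, "body": {"error": "no such route"}}
    return func(request)
```

A request with a valid token, method `GET`, path `/orders`, and `params` of `{"id": "42"}` is routed to `get_order`; a request without the token is rejected before any function runs.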

BENEFITS OF SERVERLESS COMPUTING

In terms of cost efficiency, serverless computing operates on a pay-per-use basis, charging users only for the resources their functions consume. This eliminates the expense of idle infrastructure and supports effective IT spending and resource allocation.

Serverless architecture scales automatically because cloud providers allocate resources according to demand [13]. This dynamic scalability helps maintain service quality and user experience, since applications are not constrained by fixed capacity.

Serverless computing hides infrastructure intricacies and makes development and deployment quicker and easier. This shortens the time-to-market of new products and features, enabling faster innovation and faster responses to market needs.

Going serverless also simplifies maintenance [14]: organizations can hand infrastructure management to the cloud service provider, which takes care of provisioning, scaling, and monitoring. This frees resources for business processes and innovation instead of routine maintenance work.

CHALLENGES AND CONSIDERATIONS IN SERVERLESS COMPUTING

Organizations that rely on a single cloud provider for serverless computing face the problem of vendor lock-in. This dependency can hinder migration to other solutions or the adoption of a multi-cloud strategy, which in turn may reduce system flexibility and scalability.

Cold start latency must also be addressed: when a function has not been invoked for some time, the platform has to initialize a fresh execution environment before running it, so the first response may be slow [15]. These delays can affect application efficiency, so optimization strategies are needed to address this problem.

State management is difficult in serverless architectures because functions are stateless. Applications that need persistent storage or must track information over time are the hardest to fit. The usual remedies are to keep state in external storage services or to adopt a stateful function design pattern.
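The external-storage approach can be sketched as follows. The `StateStore` protocol, `InMemoryStore`, and `count_visit` are hypothetical names introduced for illustration; the in-memory class only stands in for an external service such as a key-value database, and a real store would need an atomic increment to be safe under concurrent invocations.

```python
from typing import Dict, Protocol


class StateStore(Protocol):
    """Minimal interface a function needs from an external state service."""
    def get(self, key: str, default: int = 0) -> int: ...
    def put(self, key: str, value: int) -> None: ...


class InMemoryStore:
    """Test double for an external store; not durable and not shared."""
    def __init__(self) -> None:
        self._data: Dict[str, int] = {}

    def get(self, key: str, default: int = 0) -> int:
        return self._data.get(key, default)

    def put(self, key: str, value: int) -> None:
        self._data[key] = value


def count_visit(event: dict, store: StateStore) -> dict:
    """Stateless function: all state is read from and written to the store."""
    key = f"visits:{event['user_id']}"
    visits = store.get(key) + 1   # read-modify-write, not atomic in this sketch
    store.put(key, visits)
    return {"user_id": event["user_id"], "visits": visits}
```

Because the function keeps nothing between calls, any instance can serve any request, and the counter survives as long as the store does.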

Observability and debugging tend to be more challenging in serverless applications because they are distributed and event-driven. Effective monitoring and troubleshooting require comprehensive logging and tracing facilities that collect information about application operations.
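One widely used technique is to emit structured log lines that carry a correlation ID, so that entries produced by different functions for the same request can be joined afterwards. The sketch below uses only the Python standard library; `log_event`, `handle_order`, and the event fields are hypothetical names chosen for illustration.

```python
import json
import logging
import uuid

logger = logging.getLogger("orders")


def log_event(correlation_id: str, message: str, **fields) -> str:
    """Emit one JSON log line tagged with the correlation ID and return it."""
    record = json.dumps(
        {"correlation_id": correlation_id, "message": message, **fields},
        sort_keys=True,
    )
    logger.info(record)
    return record


def handle_order(event: dict) -> dict:
    """Reuse the caller's correlation ID, or start a new trace if none is set."""
    correlation_id = event.get("correlation_id") or str(uuid.uuid4())
    log_event(correlation_id, "order received", order_id=event["order_id"])
    # The ID travels with the outgoing event so the next function can log it too.
    downstream = {"order_id": event["order_id"], "correlation_id": correlation_id}
    log_event(correlation_id, "order forwarded", order_id=event["order_id"])
    return downstream
```

Searching the aggregated logs for a single `correlation_id` then reconstructs the path of one request across several functions.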

Serverless architectures also introduce new security challenges, in particular controlling access to resources and protecting data [16]. Adherence to regulations and standards depends on reliable security techniques such as encryption and access management, which curb the risks associated with serverless computing.

IMPLEMENTATION OF SERVERLESS COMPUTING

Implementing serverless solutions requires practical considerations crucial for successful deployment and operation. Function design and architecture are key, focusing on modularization, separation of concerns, and appropriate trigger mechanisms to ensure robust and scalable serverless functions. Architectural patterns like event sourcing, CQRS [17], and microservices further boost flexibility and scalability.
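The two patterns named above can be shown together in a compact sketch. In event sourcing, every change is appended to a log that serves as the source of truth; in CQRS, writes (commands) and reads (queries) go through separate paths, with reads served from a projection of the log. The account example and all names (`Event`, `EVENT_LOG`, `BALANCES`, `handle_command`, `query_balance`, `rebuild_read_model`) are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Event:
    account: str
    kind: str      # "deposited" or "withdrawn"
    amount: int


EVENT_LOG: List[Event] = []       # append-only source of truth (write side)
BALANCES: Dict[str, int] = {}     # derived read model (query side)


def project(event: Event) -> None:
    """Fold one event into the read model."""
    sign = 1 if event.kind == "deposited" else -1
    BALANCES[event.account] = BALANCES.get(event.account, 0) + sign * event.amount


def handle_command(account: str, kind: str, amount: int) -> Event:
    """Write path: validate, append an event, then update the projection."""
    if kind not in ("deposited", "withdrawn"):
        raise ValueError(f"unknown event kind: {kind}")
    event = Event(account, kind, amount)
    EVENT_LOG.append(event)
    project(event)
    return event


def query_balance(account: str) -> int:
    """Read path: answer from the read model without touching the log."""
    return BALANCES.get(account, 0)


def rebuild_read_model() -> None:
    """Recreate the read model from scratch by replaying the event log."""
    BALANCES.clear()
    for event in EVENT_LOG:
        project(event)
```

Because the log is the source of truth, the read model can be discarded and rebuilt at any time, which suits stateless functions that may be started and stopped freely.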

Performance optimization is crucial for efficiency and responsiveness. Techniques to reduce cold start latency, improve code execution time, and manage memory allocation are vital. Using caching, batching, and asynchronous processing can also enhance system performance.
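Two of the techniques mentioned, batching and caching, can be sketched with the standard library. `batched` groups incoming items so that one invocation handles several records, and `lookup_config` uses `functools.lru_cache` so that repeated lookups within a warm instance skip the slow path. Both function names are hypothetical, and the uppercase conversion merely stands in for a slow remote call.

```python
from functools import lru_cache
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batched(items: Iterable[T], size: int) -> Iterator[List[T]]:
    """Yield lists of up to `size` items so each call processes a whole batch."""
    if size < 1:
        raise ValueError("size must be at least 1")
    batch: List[T] = []
    for item in items:
        batch.append(item)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:   # emit the final, possibly shorter, batch
        yield batch


@lru_cache(maxsize=128)
def lookup_config(key: str) -> str:
    """Stand-in for a slow remote lookup; results are cached per instance."""
    return key.upper()
```

The cache lives only as long as the execution environment does, so it reduces repeated work on warm invocations but offers nothing after a cold start.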

Security and compliance are top priorities in serverless environments. Strong security measures, including securing function endpoints, implementing access controls, and encrypting sensitive data, are critical. Compliance with regulatory requirements and maintaining data privacy builds trust and credibility.

Effective monitoring and logging practices are essential for maintaining application health and performance. Capturing metrics, tracing function invocations, and correlating events across distributed systems allow proactive issue identification and resolution, ensuring smooth operation of serverless applications.

Fig 2: Flowchart for implementing serverless computing

REAL-WORLD DEPLOYMENT OF SERVERLESS COMPUTING

Organisations across various sectors have embraced serverless solutions to tackle business challenges, showcasing the practical applications and benefits of serverless computing.

- AWS Lambda: Amazon Web Services Lambda offers a serverless computing platform that executes code on demand [18], featuring automatic scaling and pay-per-use pricing. Lambda supports everything from simple scripts to complex microservices architectures.

- Microsoft Azure: Microsoft Azure provides a robust serverless computing platform emphasising developer productivity and intelligent applications [19]. Azure Functions enables running code in response to events without managing infrastructure and is favoured by enterprises in the Microsoft ecosystem.

- Google Cloud Platform (GCP): Google Cloud Platform offers serverless computing services through Google Cloud Functions [20], integrated with Google’s infrastructure. This enables developers to build and deploy event-driven applications at scale, leveraging Google’s expertise in managing large workloads and data processing.

- IBM Cloud Functions: IBM Cloud Functions provides a serverless computing platform powered by Apache OpenWhisk. Developers can deploy functions written in various languages and integrate them with other IBM Cloud services [21], offering scalability and flexibility for building cloud-native applications.

- Oracle Functions: Oracle Functions is Oracle’s serverless computing platform, allowing developers to deploy functions written in popular programming languages such as Java, Node.js, and Python. It integrates with other Oracle Cloud services and offers automatic scaling and pay-per-use pricing.

- Alibaba Cloud Function Compute: Alibaba Cloud Function Compute is a serverless computing platform that enables developers to build and deploy functions without managing infrastructure. It integrates with other Alibaba Cloud services, providing scalability and reliability for cloud-native applications.
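To give a sense of what a deployed function looks like on such platforms, the following is a minimal sketch in the style of an AWS Lambda Python handler. It assumes the function sits behind an HTTP trigger that passes an API Gateway proxy-style event with a `queryStringParameters` field and expects a response containing `statusCode`, `headers`, and `body`; the greeting logic itself is purely illustrative. Other providers use different handler signatures and event shapes.

```python
import json


def lambda_handler(event, context):
    """Entry point: receives the trigger event and a runtime context object."""
    # The field may be absent or None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

The function can be exercised locally by calling `lambda_handler({"queryStringParameters": {"name": "Ada"}}, None)`, since the sketch does not use the context object.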

FUTURE DIRECTIONS IN SERVERLESS COMPUTING

Serverless architectures have recently been adopted for IoT [22] platforms, making real-time data processing possible at the edge of the network.

Containerization and Serverless: Combining containerization technologies such as Docker with serverless computing improves the portability and scalability of application deployment.

With regard to multi-cloud and hybrid cloud deployments [23], serverless computing allows workloads to be distributed across different providers for flexibility and reliability.

In serverless security and compliance, runtime protection and access control are central to stronger security features, which become more important as serverless adoption grows. It is equally crucial to stay aligned with changing regulations.

Serverless computing is also expanding beyond functions to encompass databases, workflows, and event-driven architectures, which enable the rapid creation of sophisticated applications.

CONCLUSION

To summarize, serverless computing offers companies substantial opportunities to improve flexibility, scalability, and cost efficiency. Adoption can be challenging; however, well-designed strategies make it possible to realize these benefits.

By employing a multi-cloud approach, optimizing performance, and strengthening security, organizations can overcome barriers to transformation and keep pace with innovation.

Although serverless computing [24] is still developing, it may become the foundation of new cloud-native applications and is likely to stimulate creativity and innovation across many industries. Organizations that learn from earlier success stories and adopt best practices can achieve strong results and strengthen their position in the digital age.

REFERENCES

- Gong, C., Liu, J., Zhang, Q., Chen, H., & Gong, Z. (2010, September). The characteristics of cloud computing. In 2010 39th International Conference on Parallel Processing Workshops (pp. 275-279). IEEE.

- Shahrad, M., Balkind, J., & Wentzlaff, D. (2019, October). Architectural implications of function-as-a-service computing. In Proceedings of the 52nd annual IEEE/ACM international symposium on microarchitecture (pp. 1063-1075).

- McGrath, G., & Brenner, P. R. (2017, June). Serverless computing: Design, implementation, and performance. In 2017 IEEE 37th International Conference on Distributed Computing Systems Workshops (ICDCSW) (pp. 405-410). IEEE.

- Saini, T., Kumar, S., Vats, T., & Singh, M. (2020). Edge Computing in Cloud Computing Environment: Opportunities and Challenges. In International Conference on Smart Systems and Advanced Computing (Syscom-2021).

- Li, Y., Lin, Y., Wang, Y., Ye, K., & Xu, C. (2022). Serverless computing: state-of-the-art, challenges and opportunities. IEEE Transactions on Services Computing, 16(2), 1522-1539.

- Gourley, D., & Totty, B. (2002). HTTP: The Definitive Guide. O’Reilly Media, Inc.

- Kumar, S., Singh, S. K., & Aggarwal, N. (2023, September). Sustainable Data Dependency Resolution Architectural Framework to Achieve Energy Efficiency Using Speculative Parallelization. In 2023 3rd International Conference on Innovative Sustainable Computational Technologies (CISCT) (pp. 1-6). IEEE.

- Saini, T., Kumar, S., Vats, T., & Singh, M. (2020). Edge Computing in Cloud Computing Environment: Opportunities and Challenges.

- Gu, X., Zhang, H., Zhang, D., & Kim, S. (2016, November). Deep API learning. In Proceedings of the 2016 24th ACM SIGSOFT international symposium on foundations of software engineering (pp. 631-642).

- Sharma, A., Singh, S. K., Badwal, E., Kumar, S., Gupta, B. B., Arya, V., & Santaniello, D. (2023, January). Fuzzy Based Clustering of Consumers’ Big Data in Industrial Applications. In 2023 IEEE International Conference on Consumer Electronics (ICCE) (pp. 01-03).

- Vats, T., Singh, S. K., Kumar, S., Gupta, B. B., Gill, S. S., Arya, V., & Alhalabi, W. (2023). Explainable context-aware IoT framework using human digital twin for healthcare. Multimedia Tools and Applications, 1-25.

- Singh, I., Singh, S. K., Singh, R., & Kumar, S. (2022, May). Efficient loop unrolling factor prediction algorithm using machine learning models. In 2022 3rd International Conference for Emerging Technology (INCET) (pp. 1-8). IEEE.

- Rastogi, A., Singh, S. K., Sharma, A., & Kumar, S. Capacity and Inclination of High Performance Computing in Next Generation Computing.

- Petcu, D. (2013, April). Multi-cloud: expectations and current approaches. In Proceedings of the 2013 international workshop on Multi-cloud applications and federated clouds (pp. 1-6).

- Golec, M., Walia, G. K., Kumar, M., Cuadrado, F., Gill, S. S., & Uhlig, S. (2023). Cold start latency in serverless computing: A systematic review, taxonomy, and future directions. arXiv preprint arXiv:2310.08437.

- Hassan, H. B., Barakat, S. A., & Sarhan, Q. I. (2021). Survey on serverless computing. Journal of Cloud Computing, 10(1), 1-29.

- Betts, D., Dominguez, J., Melnik, G., Simonazzi, F., & Subramanian, M. (2013). Exploring CQRS and Event Sourcing: A journey into high scalability, availability, and maintainability with Windows Azure.

- Wittig, A., & Wittig, M. (2023). Amazon Web Services in Action: An in-depth guide to AWS. Simon and Schuster.

- Collier, M., & Shahan, R. (2015). Microsoft Azure Essentials-Fundamentals of Azure. Microsoft Press.

- Switula, D. (2000). Principles of good clinical practice (GCP) in clinical research. Science and engineering ethics, 6(1), 71-77.

- Kochut, A., Deng, Y., Head, M. R., Munson, J., Sailer, A., Shaikh, H., … & Wagner, H. (2011). Evolution of the IBM Cloud: Enabling an enterprise cloud services ecosystem. IBM Journal of Research and Development, 55(6), 7-1.

- Singh, R., Singh, S. K., Kumar, S., & Gill, S. S. (2022). SDN-Aided Edge Computing-Enabled AI for IoT and Smart Cities. In SDN-Supported Edge-Cloud Interplay for Next Generation Internet of Things (pp. 41-70). Chapman and Hall/CRC.

- Verma, V., Benjwal, A., Chhabra, A., Singh, S. K., Kumar, S., Gupta, B. B., … & Chui, K. T. (2023). A novel hybrid model integrating MFCC and acoustic parameters for voice disorder detection. Scientific Reports, 13(1), 22719.

- Kaur, P., Singh, S. K., Singh, I., & Kumar, S. (2021, December). Exploring Convolutional Neural Network in Computer Vision-based Image Classification. In International Conference on Smart Systems and Advanced Computing (Syscom-2021).

Cite As

Diksha; Goyal D (2024) Exploring Serverless Computing, Insights2Techinfo, pp.1