By: Vanna Karthik; Vel Tech University, Chennai, India

Abstract

A new class of cyberthreats, including deepfake phishing and voice scams, has emerged in recent years with the development of deepfake technology. By using artificial intelligence (AI) to produce highly realistic fake text, audio, and video content, these attacks make it harder for people and organizations to tell legitimate communications from fraudulent ones. The technology built to counter the threat, however, is evolving along with the threat landscape. AI is becoming essential in exposing voice scams and deepfake phishing, providing detection tools and methods that identify and mitigate these risks. This article examines how deepfake scams work, the difficulties they pose, and the ways AI-powered solutions are being used to protect against them.

Introduction

Although the digital age has made life simpler and more connected than ever before, it has also made it easier for new types of cybercrime to develop. One of the most troubling is the rise of deepfake technology, which uses artificial intelligence (AI) to produce realistic audio, video, and text forgeries[1]. Because they exploit people’s emotions and trust, deepfake phishing and voice fraud have grown particularly dangerous. A manipulated video may spread misleading information to influence public opinion, for example, or a deepfake call imitating a CEO’s voice could direct an employee to transfer money.

Even as these frauds grow more complex, artificial intelligence is also being used to combat them. Cybersecurity experts are building tools that identify and neutralize deepfake threats using machine learning, neural networks, and other AI technologies. This article explores how AI both enables deepfake scams and helps defeat them, and why it is crucial to keep the upper hand in this technological arms race.

The Rise of Deepfake Phishing and Voice Scams

What Are Deepfakes?

Deepfakes are synthetic media created with artificial intelligence (AI) techniques, most notably generative adversarial networks (GANs)[2]. By learning from large datasets of real material, these algorithms can produce realistic photos, videos, and audio. Although deepfakes have legitimate uses in entertainment and education, they are increasingly being used maliciously.

Phishing with Deepfakes: Cybercriminals use deepfake technology to generate emails, pictures, or social media profiles that look authentic. A deepfake video of a company’s CEO, for instance, may be used to get staff members to share private information or approve unauthorized transactions[3].

Voice Scams: AI-generated voice clones can imitate the voices of people you trust, such as family members, coworkers, or public figures. Scammers use these clones to make fake phone calls, frequently requesting personal information or money[4].

The Difficulties in Identifying Deepfakes [5]

Growing Sophistication: Deepfakes are getting harder to identify as AI technology develops. High-quality deepfakes replicate complex facial expressions, vocal inflections, and even writing styles, making them almost indistinguishable from authentic content.

Human Vulnerability: People have a natural tendency to believe what they see and hear, especially when it appears to come from someone they know. This psychological tendency is what makes deepfake scams so successful.

Lack of Awareness: Many people and organizations are exposed to attacks because they are unaware that deepfake technology exists or what it is capable of.

How AI is Responding

Detection Tools Driven by AI

Artificial intelligence is at the forefront of the fight against deepfakes. AI systems can detect signs of manipulation by examining patterns and irregularities in text, audio, and video. The key methods include:

1. Digital Forensics: AI tools analyze metadata, pixel patterns, and compression artifacts to find inconsistencies in suspected deepfake content.

2. Behavioral Analysis: Machine learning algorithms examine facial expressions, speech patterns, and other behavioral indicators to spot anything that deviates from how a real person looks or sounds.

3. Blockchain Verification: Some systems use blockchain technology to keep a tamper-resistant record of where digital content originated, which can later be used to confirm its authenticity.
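To make the blockchain verification idea more concrete, here is a minimal sketch of a hash-chained provenance record. It is an illustration only, not how any particular product works: `ProvenanceLedger`, its methods, and the `source` label are hypothetical names, and a real system would distribute the ledger across many parties rather than keep it in one Python list.

```python
import hashlib
import json


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of `data` as a hex string."""
    return hashlib.sha256(data).hexdigest()


class ProvenanceLedger:
    """Toy append-only ledger (hypothetical): each entry commits to the previous one."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _entry_hash(body: dict) -> str:
        # Serialize deterministically so the same body always hashes the same.
        return sha256_hex(json.dumps(body, sort_keys=True).encode("utf-8"))

    def register(self, content: bytes, source: str) -> dict:
        """Record the fingerprint of a piece of content and who published it."""
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else self.GENESIS
        body = {
            "content_hash": sha256_hex(content),
            "source": source,
            "prev_hash": prev_hash,
        }
        entry = dict(body, entry_hash=self._entry_hash(body))
        self.entries.append(entry)
        return entry

    def chain_is_intact(self) -> bool:
        """Recompute every hash; editing any earlier entry breaks the chain."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: entry[k] for k in ("content_hash", "source", "prev_hash")}
            if entry["prev_hash"] != prev_hash:
                return False
            if entry["entry_hash"] != self._entry_hash(body):
                return False
            prev_hash = entry["entry_hash"]
        return True

    def is_registered(self, content: bytes) -> bool:
        """True if this exact byte sequence was registered earlier."""
        digest = sha256_hex(content)
        return any(e["content_hash"] == digest for e in self.entries)
```

A publisher would call `register` when releasing a video, and a recipient would later call `is_registered` on the file they received. Note that an exact hash only matches byte-identical files, so a legitimately re-encoded copy would not verify under this simple scheme.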

Case Studies: Artificial Intelligence in Action

Microsoft’s Video Authenticator

Video Authenticator, a tool created by Microsoft, examines videos to identify deepfake manipulation[6]. It uses artificial intelligence (AI) to detect subtle errors and anomalies that are not visible to the human eye.

Voice Fraud Detection Using Pindrop

The cybersecurity firm Pindrop employs AI to identify voice deepfakes in real time. To detect fake calls, its technology examines more than 1,300 features of a voice.
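As a rough illustration of what scoring a call on many voice features can look like, the sketch below compares a new sample against a profile built from known-genuine recordings, using per-feature z-scores. This is not Pindrop's method, which is proprietary. The feature names (`pitch_hz`, `pause_ratio`, `jitter`), the sample values, and the threshold are all made up for the example, and real systems use far more features and learned models rather than a simple average.

```python
from statistics import mean, stdev


def build_profile(genuine_samples):
    """Per-feature (mean, standard deviation) from known-genuine recordings."""
    profile = {}
    for name in genuine_samples[0]:
        values = [sample[name] for sample in genuine_samples]
        profile[name] = (mean(values), stdev(values))
    return profile


def anomaly_score(profile, sample):
    """Average absolute z-score of the sample across all profiled features."""
    z_scores = []
    for name, (mu, sigma) in profile.items():
        if sigma == 0:
            continue  # feature never varied; skip it to avoid dividing by zero
        z_scores.append(abs(sample[name] - mu) / sigma)
    return mean(z_scores) if z_scores else 0.0


def looks_suspicious(profile, sample, threshold=3.0):
    """Flag samples whose features sit far from the speaker's usual range."""
    return anomaly_score(profile, sample) > threshold


# Hypothetical feature values extracted from four genuine calls by one speaker.
genuine_calls = [
    {"pitch_hz": 118.0, "pause_ratio": 0.21, "jitter": 0.010},
    {"pitch_hz": 122.0, "pause_ratio": 0.19, "jitter": 0.012},
    {"pitch_hz": 120.0, "pause_ratio": 0.20, "jitter": 0.011},
    {"pitch_hz": 119.0, "pause_ratio": 0.22, "jitter": 0.009},
]
speaker_profile = build_profile(genuine_calls)

# A call that matches the speaker's habits, and one that is unnaturally smooth.
typical_call = {"pitch_hz": 121.0, "pause_ratio": 0.20, "jitter": 0.011}
odd_call = {"pitch_hz": 120.0, "pause_ratio": 0.05, "jitter": 0.001}
```

In this toy data, `odd_call` has the right pitch but almost no pauses or jitter, which is the kind of mismatch a per-feature comparison can surface even when the voice sounds convincing to a listener.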

Facebook’s Deepfake Detection Challenge

Facebook ran the Deepfake Detection Challenge, which concluded in 2020, to crowdsource AI solutions for identifying videos that have been altered[6]. The competition led to the development of a range of new detection models.

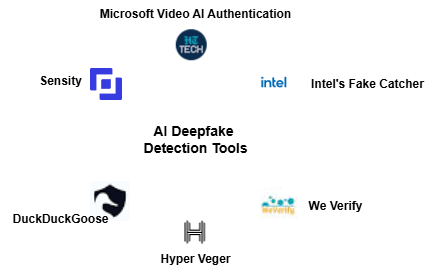

Figure: AI deepfake detection tools

The Future of AI and Deepfake Defense

Continuing Improvements

The tools built to counter deepfake technology must advance along with it. To remain effective, AI detection systems will require frequent updates and retraining on fresh datasets.
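One simple way to decide when a detector is due for an update is to track its accuracy on recently labeled samples and flag it once performance drops. The sketch below shows that idea under stated assumptions: `DetectorMonitor`, the window size, and the accuracy threshold are hypothetical choices for illustration, not a recommendation from any specific tool.

```python
from collections import deque


class DetectorMonitor:
    """Track a detector's accuracy over the most recent labeled samples."""

    def __init__(self, window_size=100, min_accuracy=0.9):
        # Each item is True when the detector's verdict matched the label.
        self.results = deque(maxlen=window_size)
        self.min_accuracy = min_accuracy

    def record(self, predicted_fake: bool, actually_fake: bool) -> None:
        """Store whether the detector got this sample right."""
        self.results.append(predicted_fake == actually_fake)

    def accuracy(self) -> float:
        """Fraction of correct verdicts in the current window."""
        if not self.results:
            return 1.0  # no evidence of a problem yet
        return sum(self.results) / len(self.results)

    def needs_retraining(self) -> bool:
        """Flag the detector once a full window falls below the threshold."""
        window_full = len(self.results) == self.results.maxlen
        return window_full and self.accuracy() < self.min_accuracy
```

Because the window only keeps the latest results, a detector that handled older deepfakes well but misses newer ones will see its score fall as fresh samples arrive, which is the signal to retrain on updated data.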

Education and Public Awareness

It is critical to raise awareness of deepfake scams. People and organizations need to be educated about the risks and about how to spot warning signs.

Regulations

Governments and regulatory agencies are starting to address the deepfake threat through laws and policies. The DEEPFAKES Accountability Act, for instance, was introduced in the United States with the intention of preventing the malicious use of deepfake technology.

Conclusion

In the digital age, the emergence of voice scams and deepfake phishing poses a serious threat. In the battle against these dangers, however, artificial intelligence is proving to be an important ally. AI is exposing deepfake schemes and helping to protect people and organizations through sophisticated detection tools, real-time analysis, and collaborative efforts. AI’s significance will only increase as the conflict between cybercriminals and cybersecurity professionals rages on. Navigating this changing environment and securing a safer digital future requires staying knowledgeable, watchful, and proactive.

References

- R. Mubarak, T. Alsboui, O. Alshaikh, I. Inuwa-Dutse, S. Khan, and S. Parkinson, “A Survey on the Detection and Impacts of Deepfakes in Visual, Audio, and Textual Formats,” IEEE Access, vol. 11, pp. 144497–144529, 2023, doi: 10.1109/ACCESS.2023.3344653.

- O. A. Paul, “Deepfakes Generated by Generative Adversarial Networks.”

- S. Alanazi, S. Asif, and I. Moulitsas, “Examining the Societal Impact and Legislative Requirements of Deepfake Technology: A Comprehensive Study,” Int. J. Soc. Sci. Humanity, 2024, doi: 10.18178/ijssh.2024.14.2.1194.

- A. Janjeva, A. Harris, S. Mercer, A. Kasprzyk, and A. Gausen, “The Rapid Rise of Generative AI.”

- “Berenzen_BA_BMS.pdf.” Accessed: Mar. 24, 2025. [Online]. Available: https://essay.utwente.nl/96206/1/Berenzen_BA_BMS.pdf

- “Deepfakes Creation and Detection Using Deep Learning.” Accessed: Mar. 24, 2025. [Online]. Available: https://ieeexplore.ieee.org/abstract/document/9447642

- M. Rahaman, P. Pappachan, S. M. Orozco, S. Bansal, and V. Arya, “AI safety and security,” in Advances in computational intelligence and robotics book series, 2024, pp. 354–383.

- M. Rahaman, C.-Y. Lin, P. Pappachan, B. B. Gupta, and C.-H. Hsu, “Privacy-Centric AI and IoT solutions for smart rural farm monitoring and control,” Sensors, vol. 24, no. 13, p. 4157, Jun. 2024, doi: 10.3390/s24134157.

- J. Zhang, X. Li, P. Vijayakumar, W. Liang, V. Chang, and B. B. Gupta, “Graph sparsification-based secure federated learning for consumer-driven Internet of Things,” IEEE Transactions on Consumer Electronics, 2024.

- K. S. Spoorthi, “Generative AI in Personalized Medicine,” Insights2Techinfo, p. 1, 2024.

Cite As

Karthik V. (2025) How AI is Unmasking Deepfake Phishing and Voice Scams, Insights2Techinfo, pp.1