By: Dadapeer Agraharam Shaik, Student, Department of Computer Science and Technology, Madanapalle Institute of Technology and Science, Angallu, 517325, Andhra Pradesh.

Abstract:

Cyber threats are evolving at a very high rate, and new forms of protection must be designed to keep pace. One of the significant trends in cybersecurity is therefore the use of machine learning (ML), a technology capable of providing versatile, adaptive protection against threats. This paper examines machine learning algorithms in cybersecurity, analyzing how they improve threat detection, automate the response to threats, and learn from data to control threats more effectively. Incorporating ML into cybersecurity not only addresses shortcomings of existing methodologies but also offers better protection against advanced cyber threats.

Keywords: Machine Learning, Cybersecurity, Threat Detection, Automated Response.

1. Introduction

The extensive and rapid development of the digital environment has exposed individuals, civil organizations, and even governments to growing cyber threats. As hacking techniques evolve, conventional security measures are no longer able to protect an organization's resources, which has prompted the search for new security mechanisms and technologies. Machine Learning (ML) is emerging as a crucial element in improving security techniques. ML is a subfield of artificial intelligence (AI) concerned with building models that let systems learn from data and make decisions automatically; in security, such models are used to recognize subtle irregularities that may indicate a possible threat. This capability is valuable in cybersecurity because threats can be detected and resolved in a timely manner, reducing the risk of data loss and related harm. Typical uses include threat identification, anomaly detection, and predictive modeling of cyber threats, which work by scrutinizing network traffic, modeling user activity, and projecting future threats from past trends. Incorporating ML into cybersecurity frameworks brings two main benefits: models can analyze large volumes of data faster than human analysts, and they can learn progressively, improving detection while reducing false alarms so that security teams can concentrate on genuine threats.
However, applying ML in cybersecurity raises several concerns: the availability of good-quality data for training models; the need to keep developing new and more sophisticated algorithms to counter cyber criminals; and privacy and data-security issues arising from the large datasets needed to feed ML models. Data collection, storage, and every other process involved must therefore be secured. Overall, despite these challenges, machine learning is an effective means of improving threat identification and prediction, and it is a promising direction for further development in cybersecurity.
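The anomaly-detection use mentioned in the introduction can be made concrete with a minimal statistical sketch: learn the normal range of a metric from past observations, then flag new values that fall far outside it. This is a simple z-score rule rather than any specific ML model from this article, and the request-count metric, the sample numbers, the function names and the threshold of 3 are all assumptions chosen for illustration.

```python
import statistics


def fit_baseline(history):
    """Learn the 'normal' behaviour of a metric from past observations."""
    return statistics.fmean(history), statistics.pstdev(history)


def is_anomalous(value, baseline, threshold=3.0):
    """Flag a new observation whose z-score against the baseline exceeds `threshold`."""
    mean, stdev = baseline
    if stdev == 0:
        # A perfectly flat history: any deviation at all is unusual.
        return value != mean
    return abs(value - mean) / stdev > threshold


if __name__ == "__main__":
    # Hypothetical per-minute request counts observed for one host.
    history = [100, 98, 103, 97, 101, 99, 102, 100]
    baseline = fit_baseline(history)
    for observed in (104, 180):
        print(observed, is_anomalous(observed, baseline))
```

Fitting the baseline on history separate from the values being scored keeps a single large spike from inflating the standard deviation and hiding itself; a learned model would replace this fixed rule with one that adapts as new data arrives.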

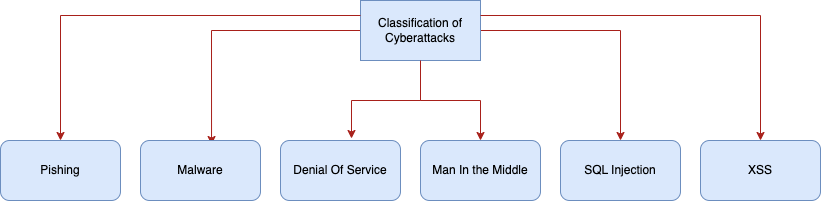

2. Classification of Cyber Attacks

The first dimension along which an attack can be classified is its objective. This is usually tied to how the adversary profits from the attack, for instance by committing identity theft or by stealing credit card details and selling them to marketers or criminals. Overall, attack goals fall into one of the following categories: (1) capturing information, such as data stored on the device, media content, or user logins, which is typically done by spyware; (2) monitoring the user's data, such as geolocation, actions, or health data, which is typically carried out by mobile malware; and (3) seizing control of the system, as Trojans, botnets, and rootkits do.

Another way to categorize an attack is by its attack vector, that is, the path or opening through which an adversary infiltrates a network or computer system to carry out malicious intent. Attack vectors can be identified at three layers: the hardware level, which concerns the physical components of the computer; the network level, which concerns the networks themselves; and the application level, which concerns the applications running on the system.

Cybersecurity is also an application domain for deep learning technologies, with intrusion detection as a closely related use. ML is widely adopted for malware analysis and for discovering previously unknown threats posed by malicious software [1].

3. Classification of Machine Learning Algorithms for Cyber Security

Machine learning can be understood as a set of constantly changing paradigms that shift fluidly into one another, and different perspectives and applications yield different classifications. Consequently, the literature offers no single, universally accepted taxonomy, so we propose an alternative one that covers the variety of techniques used in cyber detection. This taxonomy is aimed explicitly at security operators; it does not claim to be a definitive classification for all AI researchers and application cases. The first division, depicted in Figure 1, separates traditional ML algorithms, which can now be called Shallow Learning (SL), from the more recent Deep Learning (DL). Shallow Learning requires a domain expert or feature engineer to do the crucial work of identifying relevant data attributes before the SL algorithm is run. Deep Learning, by contrast, relies on multi-layered representations of the input data and can select features autonomously through representation learning [2].

We further distinguish between supervised and unsupervised approaches within both SL and DL.

The first of these are supervised methods, while the rest are unsupervised approaches [3].
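The feature-engineering step that separates shallow from deep learning can be illustrated with a small sketch. Here a human-chosen function turns a raw URL into a fixed set of numeric attributes that a shallow learner (for example, the logistic regression discussed in the next subsection) could consume. URL-based detection is not a technique described in this article, and the function name and every feature in it are hypothetical choices made only to show what "identifying relevant data attributes" looks like in practice.

```python
from urllib.parse import urlparse


def url_features(url):
    """Hand-crafted feature vector for a URL (hypothetical feature set).

    In shallow learning a domain expert picks attributes like these; a deep
    model would instead learn its own representation from the raw characters.
    """
    parsed = urlparse(url)
    host = parsed.hostname or ""
    return {
        "length": len(url),
        "num_digits": sum(ch.isdigit() for ch in url),
        "num_dots_in_host": host.count("."),
        "has_at_symbol": int("@" in url),
        "uses_https": int(parsed.scheme == "https"),
        # Naive IPv4 check: the host is made only of digits and dots.
        "host_is_ip": int(host.replace(".", "").isdigit()),
    }


if __name__ == "__main__":
    print(url_features("https://example.com/login"))
    print(url_features("http://192.168.0.1/@admin"))
```

The quality of a shallow model built on such vectors depends directly on how well these hand-picked attributes capture the threat, which is the dependence on expert knowledge that deep learning tries to remove.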

3.1 Shallow Learning

Shallow learning has three primary categories: (1) supervised learning, (2) unsupervised learning, and (3) reinforcement learning (RL). The goal of supervised learning is to learn a pattern from labelled data and use it to predict the outcome for new inputs. Supervised learning encompasses many algorithms, with Logistic Regression, the Perceptron, and K-Nearest Neighbours (KNN) among the main early ones. The Perceptron laid the foundation of the field, but until the Decision Tree algorithm was published, machine learning algorithms remained fragmented and unstructured. SVM, AdaBoost, and Random Forest are the most widely used supervised learning algorithms in the construction industry, where they are usually employed for data classification.
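As an example of supervised learning from labelled data, the following is a minimal sketch of the classic perceptron learning rule, one of the early algorithms named above. The two input features (say, failed login attempts and hundreds of kilobytes sent per connection), the toy dataset and the function names are hypothetical; the point is only to show a model adjusting its weights whenever it misclassifies a labelled example.

```python
def train_perceptron(samples, labels, epochs=20, lr=1.0):
    """Perceptron learning rule on labelled data (labels are +1 or -1)."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for x, y in zip(samples, labels):
            activation = sum(w * xi for w, xi in zip(weights, x)) + bias
            prediction = 1 if activation > 0 else -1
            if prediction != y:
                # Shift the decision boundary toward the misclassified sample.
                weights = [w + lr * y * xi for w, xi in zip(weights, x)]
                bias += lr * y
                errors += 1
        if errors == 0:
            break  # every training sample is now classified correctly
    return weights, bias


def predict(weights, bias, x):
    """Classify a new input with the learned linear boundary."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else -1


if __name__ == "__main__":
    # Hypothetical connections: [failed_logins, hundreds_of_kb_sent].
    X = [[0, 1], [1, 0], [1, 1], [5, 4], [6, 5], [4, 6]]
    y = [-1, -1, -1, 1, 1, 1]  # -1 = benign, +1 = malicious
    w, b = train_perceptron(X, y)
    print(w, b, predict(w, b, [7, 7]))
```

The perceptron only converges when the two classes are linearly separable, as they are in this toy data; algorithms such as SVM or Random Forest handle harder boundaries.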

Unsupervised learning discovers knowledge from unlabelled data and focuses mainly on dimensionality reduction and clustering problems. Because less information can be extracted from unlabelled datasets than from labelled ones, unsupervised learning is used less often in construction, and researchers prefer supervised methods for real problems in the construction industry. Principal Component Analysis (PCA), kernel PCA, and t-SNE are the main unsupervised techniques for dimensionality reduction. Typical clustering algorithm [4]
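To show how dimensionality reduction works without labels, here is a minimal sketch of PCA computed with NumPy's singular value decomposition. It covers plain (linear) PCA only, not kernel PCA or t-SNE; the function name and the toy data, which lie exactly on a line so that one component captures all of the variance, are assumptions for illustration.

```python
import numpy as np


def pca(X, n_components):
    """Project the rows of X onto their top principal components.

    No labels are used: the directions come purely from the data's variance.
    Returns the projected data and the fraction of variance per component.
    """
    X = np.asarray(X, dtype=float)
    centered = X - X.mean(axis=0)
    # Rows of vt are the principal directions, ordered by singular value.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    total = np.sum(s ** 2)
    explained = s ** 2 / total if total > 0 else np.zeros_like(s)
    components = vt[:n_components]
    return centered @ components.T, explained[:n_components]


if __name__ == "__main__":
    data = [[1, 2], [2, 4], [3, 6], [4, 8]]  # toy 2-D points on a line
    projected, ratio = pca(data, n_components=1)
    print(projected.ravel(), ratio)
```

In a security setting the same call would compress many traffic or host features into a few components before clustering or visualization; the sign of each component is arbitrary, so only relative positions along it are meaningful.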

3.2 Deep Learning

Deep learning can be described as the use of deep neural networks, an evolution of artificial neural networks (ANNs). Backpropagation was initially proposed as the foundation for a complete neural network (NN) theory and thereby started machine learning's first wave; before it, there was no effective algorithm for training multilayer neural networks. After backpropagation was introduced, two classical neural network architectures emerged: LeNet and Long Short-Term Memory networks (LSTM). During that period, NN development faced three main barriers: (1) the algorithm itself, since the vanishing/exploding gradient problem made it impossible to train networks effectively as their depth increased; (2) data, since the scarcity of labelled data made powerful neural networks difficult to train; and (3) hardware, since the available computing resources could not meet the requirements of training complex neural networks. In 2006, Hinton published a paper in Science that introduced the concept of deep learning along with new training strategies that made deeper neural networks trainable. This was followed in 2012 by the success of AlexNet in the ImageNet image classification challenge [5].
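The vanishing-gradient barrier can be seen in a toy calculation. The sketch below assumes a chain of scalar sigmoid units with a fixed weight of 1 (a deliberate simplification of a real network, with hypothetical function names) and computes the input gradient the way backpropagation does, by multiplying local derivatives along the chain. Because the sigmoid's derivative is at most 0.25, the product shrinks at least geometrically with depth.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def chain_forward(depth, weight=1.0, x=0.5):
    """Forward pass through `depth` scalar layers h = sigmoid(weight * h_prev)."""
    h = x
    for _ in range(depth):
        h = sigmoid(weight * h)
    return h


def chain_gradient(depth, weight=1.0, x=0.5):
    """d(output)/d(input) via the chain rule, as backpropagation computes it.

    Each layer contributes weight * sigmoid'(z), where
    sigmoid'(z) = sigmoid(z) * (1 - sigmoid(z)) <= 0.25.
    """
    h = x
    grad = 1.0
    for _ in range(depth):
        h = sigmoid(weight * h)
        grad *= weight * h * (1.0 - h)
    return grad


if __name__ == "__main__":
    for depth in (1, 5, 10, 20):
        print(depth, chain_gradient(depth))
```

With unit weights the gradient reaching the input of a 20-layer chain is below 0.25^20, so early layers barely learn; the training strategies and activation choices that followed were aimed at exactly this effect.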

References:

- R. Geetha and T. Thilagam, “A Review on the Effectiveness of Machine Learning and Deep Learning Algorithms for Cyber Security,” Arch. Comput. Methods Eng., vol. 28, no. 4, pp. 2861–2879, Jun. 2021, doi: 10.1007/s11831-020-09478-2.

- A. M. Widodo et al., “Port-to-Port Expedition Security Monitoring System Based on a Geographic Information System,” Int. J. Digit. Strategy Gov. Bus. Transform. IJDSGBT, vol. 13, no. 1, pp. 1–20, Jan. 2024, doi: 10.4018/IJDSGBT.335897.

- G. Apruzzese, M. Colajanni, L. Ferretti, A. Guido, and M. Marchetti, “On the effectiveness of machine and deep learning for cyber security,” in 2018 10th International Conference on Cyber Conflict (CyCon), May 2018, pp. 371–390. doi: 10.23919/CYCON.2018.8405026.

- T. Toto Haksoro, A. Aisjah, M. Rahaman, and T. R. Biyanto, “Enhancing Techno Economic Efficiency of FTC Distillation Using Cloud-Based Stochastic Algorithm,” Int. J. Cloud Appl. Comput., vol. 13, pp. 1–16, Jan. 2023, doi: 10.4018/IJCAC.332408.

- Y. Xu, Y. Zhou, P. Sekula, and L. Ding, “Machine learning in construction: From shallow to deep learning,” Dev. Built Environ., vol. 6, p. 100045, May 2021, doi: 10.1016/j.dibe.2021.100045.

- P. Mishra et al., “CloudIntellMal: An advanced cloud based intelligent malware detection framework to analyze android applications,” Comput. Electr. Eng., vol. 119, p. 109483, 2024.

- S. Bai et al., “Prioritizing user requirements for digital products using explainable artificial intelligence: A data-driven analysis on video conferencing apps,” Future Gener. Comput. Syst., vol. 158, pp. 167–182, 2024.

- T. Vats et al., “OPTUNA-Driven Soft Computing Approach for Early Diagnosis of Diabetes Mellitus Using ANN,” in International Conference on Soft Computing for Problem-Solving, Singapore: Springer Nature Singapore, Aug. 2023, pp. 355–371.

Cite As

Shaik, D. A. (2024). Machine Learning Algorithms in Cyber Defence. Insights2Techinfo, p. 1.