By: K. Sai Spoorthi, Student, Department of Computer Science and Engineering, Madanapalle Institute of Technology and Science, 517325, Angallu, Andhra Pradesh.

Generative AI has advanced rapidly and is now applied in fields such as healthcare, marketing, and entertainment. However, scalability remains a major problem that constrains the potential of these systems. This paper identifies the key technological, operational, and ethical issues of generative AI at scale. The areas of interest include computational constraints, the effects of distributed computing and parallel processing, and potential work in areas such as adaptive computing architectures and neuromorphic computing. With these prospects in mind, the study highlights the key issues in the growth and use of generative AI and aims to contribute to a sound and ethical trajectory for the technology.

Keywords: Generative AI, Parallel processing, Scalability, Distributed computing, Ethical AI, Adaptive architecture

Introduction

Generative AI has progressed rapidly in recent years and has entered industries including medicine, marketing, and entertainment. However, as demand for innovative and responsive AI systems increases, scalability has become an unsolved problem. Scalability means that a generative model maintains its performance as new data and user traffic increase. The purpose of this essay is to examine the breadth of scalability problems in generative AI technologies and to point out the major technological and operational challenges that must be addressed to bring generative AI to significant scale. The paper reviews current methods and research on generative AI and discusses possible future strategies for dealing with these challenges, with insight into how the full potential of generative AI can be realised efficiently and stably [1]. Improving scalability is not just an administrative issue; it is fundamental to the future of generative AI as a technique in an increasingly complex environment.

Generative AI and Its Importance in Modern Applications

Generative AI has become a transformative tool in today's industries, improving both creativity and decision making. The technology uses complex algorithms to generate content close to what a human might produce. This allows businesses to refine not only how they market themselves but also how they engage customers. For example, generative AI can produce images tuned to a well-developed brand personality, which strengthens its application in the luxury fashion market, where visual appeal is central to brand-consumer relations. Education has also been affected, as the capabilities of AI have challenged traditional forms of assessment. A survey framed around ‘generative AI responsible for 70% of assessments’ indicates that students and teachers are well aware of how much generative AI affects assessment, and that educators expect it to push for change aligned with deeper learning and authentic assessment [2]. The relevance of generative AI in contemporary applications therefore lies not only in its performance but also in its room for growth.

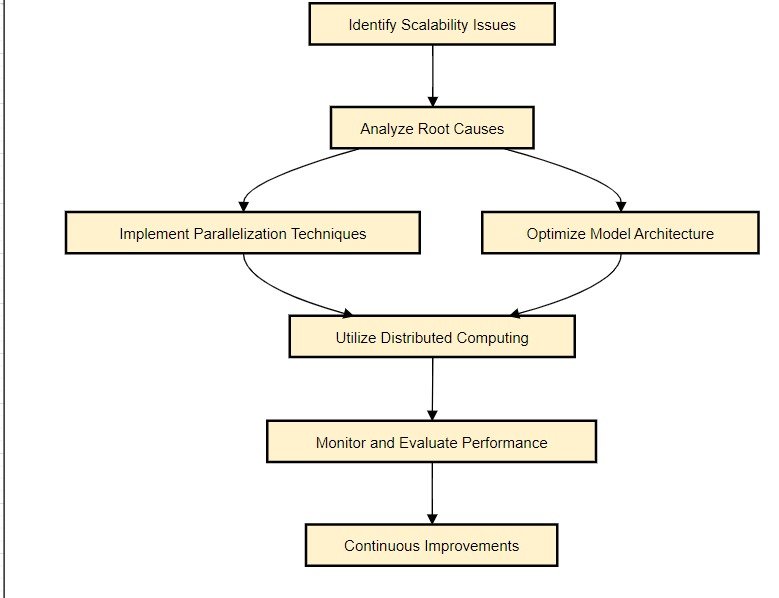

Identifying Scalability Challenges in Generative AI

As more organisations turn to generative AI (GAI) to improve their functions, questions of scale arise that are worth considering. One root cause is the interaction between technological factors on the one hand and ethical, legal, and managerial factors on the other. The use of large datasets requires privacy and security measures that contain and protect data, and these measures can sometimes limit scalability because of the barriers they introduce. Implementing generative AI solutions also carries costs, including the upfront financial outlay, the cost-benefit analysis of return on investment (ROI), and continuing operational costs, all of which restrict adoption. As the contemporary literature describes, problems such as over-dependence on GAI or the lack of a digital foundation can become organisation-wide barriers for organisations undergoing this change. Addressing these concerns is crucial to unlocking the benefits of generative AI and to fostering continued progress in organisational practice while remaining within the law.

Analysis of Computational Resource Limitations and Their Impact

Computational resource constraints pose severe obstacles to the growth of generative AI, in both functionality and practical use. As the algorithms involved become more complex, as is the case in the generative design of materials, the need for greater computing capability becomes apparent. While researchers work to understand material properties and improve mechanical performance using generative design, the complexity of the requirements rises and, in some cases, exceeds the capabilities of present-generation technology. In addition, as shown in Fig. 1, applying complex approaches such as generative design requires massive computational power to explore a large number of design alternatives in support of sustainability in the built environment. Scarce computational power may therefore slow the development of new concepts and delay efficient material solutions to the many challenges that engineering and architecture face today.

Exploration of Distributed Computing and Parallel Processing Techniques

Two approaches, distributed computing and parallel processing, are now the primary solutions to the scalability problem in generative AI. By dividing a problem into sub-problems that are solved simultaneously, these methods significantly improve computation speed and resource utilisation. Distributed computing connects a number of systems so that the load is shared among them, which allows large-scale AI models to process large volumes of data in real time. Parallel processing, on the other hand, speeds up computation by assigning the workload to several processing units, which greatly reduces the time an operation takes to execute [3]. Both techniques also help improve performance and ensure that AI applications in the cloud, on premises, and on edge devices are properly implemented. They are especially significant because they are fundamental to preparing generative AI for emerging real-time applications and services, making such systems far more relevant and elastic. Scalability concerns will remain for organisations, and these technologies will need to be adopted more widely to help chart the direction of future AI.
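
The idea of dividing a problem into sub-problems that are solved at the same time can be illustrated with a minimal Python sketch. It is not taken from any particular framework: the function names, the shard-splitting rule, and the `process_shard` stand-in (which merely upper-cases text in place of real model inference) are all assumptions made for illustration. Threads stand in here for the workers; in a real deployment each shard would more likely go to a separate process, accelerator, or machine.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_shards(items, num_shards):
    """Split items into num_shards contiguous, near-equal shards."""
    base, extra = divmod(len(items), num_shards)
    shards, start = [], 0
    for i in range(num_shards):
        # The first `extra` shards each take one additional item.
        size = base + (1 if i < extra else 0)
        shards.append(items[start:start + size])
        start += size
    return shards


def process_shard(shard):
    """Hypothetical stand-in for running model inference on one worker."""
    return [prompt.upper() for prompt in shard]


def run_parallel(prompts, num_workers=4):
    """Process shards concurrently and merge the results in input order."""
    shards = split_into_shards(prompts, num_workers)
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        # map() yields results in shard order, so outputs line up with inputs.
        results = pool.map(process_shard, shards)
        return [output for shard_result in results for output in shard_result]
```

Calling `run_parallel(prompts, num_workers=2)` returns one output per prompt in the original order. Note that Python threads only give a real speed-up when the per-shard work waits on I/O or releases the interpreter lock, for example when each shard is sent to a remote inference service; for CPU-bound work in pure Python, separate processes or machines would be needed.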

Future Directions for Research in Scalability Solutions

A review of current approaches to scalability yields several implications for adaptive architectures and resource optimisation. First, distributed computing frameworks have proven efficient, especially as requirements for computation speed and data volume increase. Second, model compression techniques such as quantization and pruning appear to preserve performance while requiring fewer computational resources. Developments in the research environment suggest that there is still considerable room to enhance these methods [4]. Future research should therefore focus on hybrid models that combine the strongest features of the scalability strategies proposed so far, with a view to optimising for real-time adaptability and energy efficiency. Furthermore, new hardware innovations such as neuromorphic computing might open up new approaches to processing, which in turn could drive more accurate generative AI across a range of sectors [5]. Overcoming scalability challenges in generative AI remains an active area of discussion with considerable potential for further exploration.
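
To make the two compression techniques named above more concrete, the following is a minimal sketch in plain Python, operating on a flat list of weights. It assumes symmetric 8-bit linear quantization and simple magnitude-based pruning, which are common textbook variants and not necessarily the ones used in the cited work; all function names are illustrative.

```python
def quantize_int8(weights):
    """Symmetric linear quantization of floats to integers in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Assumption: one scale per tensor; fall back to 1.0 for all-zero input.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(q_weights, scale):
    """Map quantized integers back to approximate float values."""
    return [q * scale for q in q_weights]


def prune_by_magnitude(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    num_to_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:num_to_prune]:
        pruned[i] = 0.0
    return pruned
```

Quantization stores each weight as a small integer plus one shared scale, so the reconstruction error per weight is at most about half the scale. Pruning removes the weights that contribute least by magnitude, and the resulting zeros can be stored or skipped cheaply. Whether model quality is actually preserved depends on the model and usually has to be checked empirically.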

Conclusion

In conclusion, addressing the scalability issues of generative AI requires a broad approach that integrates technology, policy, and human capital. Trust and ethics must be priorities, as prior work on the ethical, technological, and privacy enablers and constraints of generative AI tools has shown. Furthermore, the ability of generative AI in materials science to improve material characteristics through inventive engineering points to a potential way of addressing these scalability challenges, where creativity meets technology. As the field grows, it will be important to develop sound frameworks that foster advancement while also addressing cost, security, and over-dependence on AI systems. Such frameworks help generative AI find its opportunities in organisations and thus contribute to sustainable growth across industries.

References

- L. Manduchi et al., “On the Challenges and Opportunities in Generative AI,” Feb. 28, 2024, arXiv: arXiv:2403.00025. doi: 10.48550/arXiv.2403.00025.

- C.-Y. Lin, M. Rahaman, M. Moslehpour, S. Chattopadhyay, and V. Arya, “Web Semantic-Based MOOP Algorithm for Facilitating Allocation Problems in the Supply Chain Domain,” Int J Semant Web Inf Syst, vol. 19, no. 1, pp. 1–23, Sep. 2023, doi: 10.4018/IJSWIS.330250.

- R. Jack and A. Heng, Generative AI in the Classroom: Overcoming Challenges for Effective Teaching. 2024. doi: 10.13140/RG.2.2.24826.71367.

- K. Kavanagh, Google Machine Learning and Generative AI for Solutions Architects: Build efficient and scalable AI/ML solutions on Google Cloud. Packt Publishing Ltd, 2024.

- B. D. Alfia, A. Asroni, S. Riyadi, and M. Rahaman, “Development of Desktop-Based Employee Payroll: A Case Study on PT. Bio Pilar Utama,” Emerg. Inf. Sci. Technol., vol. 4, no. 2, Art. no. 2, Dec. 2023, doi: 10.18196/eist.v4i2.20732.

- B. B. Gupta, A. Gaurav, V. Arya, W. Alhalabi, D. Alsalman, and P. Vijayakumar, “Enhancing user prompt confidentiality in Large Language Models through advanced differential encryption,” Computers and Electrical Engineering, vol. 116, Art. no. 109215, 2024.

- B. Raj, B. B. Gupta, S. Yamaguchi, and S. S. Gill, Eds., AI for big data-based engineering applications from security perspectives. CRC Press, 2023.

- G. P. Gupta, R. Tripathi, B. B. Gupta, and K. T. Chui, Eds., Big data analytics in fog-enabled IoT networks: Towards a privacy and security perspective. CRC Press, 2023.

Cite As

Spoorthi K.S. (2024) Overcoming Scalability Issues in Generative AI, Insights2Techinfo, pp.1