By: Sahil Kondal¹, Anish Sharma²

¹,²Department of CSE, Chandigarh College of Engineering and Technology, Chandigarh

Abstract:

Neural networks drive innovations like self-driving cars and social media recommendations, yet they also pose risks such as data manipulation and cyber threats. This article emphasizes the vital need for strong neural network security to protect against evolving cyberattacks. Strategies like anomaly detection and behavioural analysis strengthen defence mechanisms, but ongoing improvements are essential to address challenges like adversarial attacks and model transparency. Across various sectors, neural network security is crucial for maintaining the safety and integrity of modern technology environments.

Keywords: Artificial Intelligence, Explainable AI, Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), Long Short-Term Memory (LSTM)

1. INTRODUCTION

Neural networks are central to today's technology. Many of the tools we use every day, such as social media recommendations and self-driving cars, are powered by them. However, any powerful technology also carries risks. Consider what could happen if someone breached the cybersecurity of an autonomous vehicle: the result could be a dangerous situation on the road. Or imagine someone altering the suggestions you see on social media in an attempt to influence your thinking or stir up conflict. Safeguarding personal data is important, but the concern goes beyond privacy: we must ensure that these innovations do not unintentionally harm or endanger us. As technology continues to evolve quickly, finding creative ways to make neural networks safe and secure is more crucial than ever. By doing so, we can minimise the risks associated with these powerful tools while maximising their potential to improve our lives.

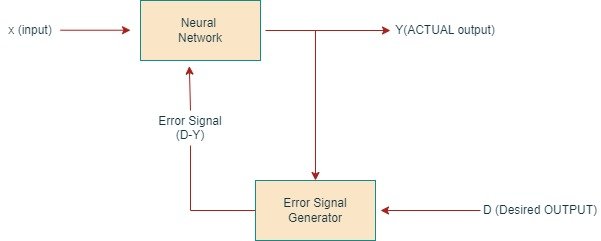

Figure 1. Block Diagram of Neural Network Security

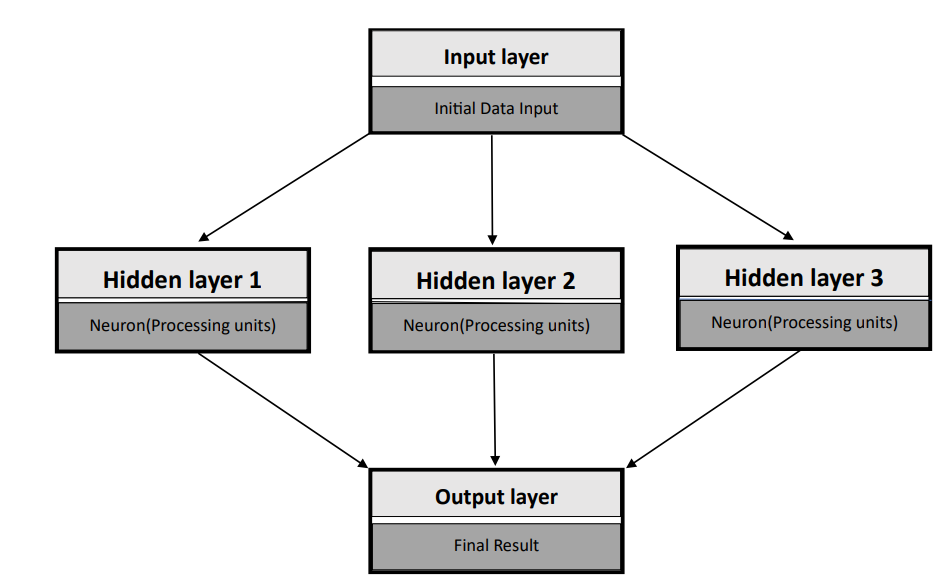

1.1 Understanding Neural Networks

Neural networks are a type of computer system inspired by the way our brains work. Think of them as layers of interconnected “neurons.” Each neuron receives some input, processes it, and passes the result on to the neurons in the next layer. As the network is exposed to data, it adapts by adjusting the strengths (weights) of the connections between neurons according to the patterns it identifies. This resembles human learning, in which our ability to identify patterns and make sense of the world improves with experience and knowledge [1]. Neural networks loosely emulate this learning process, so computers become increasingly capable at a task as they are trained on more data [2].

Figure 2. Neural network
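The layered structure and weight adjustment described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not a production model: the 2-3-1 layer sizes, sigmoid activation, squared-error loss, learning rate, and the toy OR dataset are all choices made for brevity. Training repeatedly runs the forward pass and then nudges each connection weight against the gradient of the error (backpropagation).

```python
import math
import random

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

class TinyNet:
    """A 2-input -> 3-hidden -> 1-output network (sizes chosen for illustration)."""

    def __init__(self, n_in: int = 2, n_hidden: int = 3, seed: int = 0):
        rng = random.Random(seed)
        # Connection weights start small and random; training will adjust them.
        self.w1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        # Each hidden neuron takes a weighted sum of the inputs and applies an activation...
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        # ...and the output neuron does the same with the hidden layer's outputs.
        y = sigmoid(sum(w * hj for w, hj in zip(self.w2, h)) + self.b2)
        return h, y

    def train_step(self, x, target: float, lr: float = 0.5) -> float:
        """One backpropagation step on squared error; returns the loss before the update."""
        h, y = self.forward(x)
        delta_out = (y - target) * y * (1 - y)
        # Hidden-layer error signals use the output weights *before* they are updated.
        delta_hid = [delta_out * self.w2[j] * h[j] * (1 - h[j]) for j in range(len(h))]
        for j in range(len(h)):
            self.w2[j] -= lr * delta_out * h[j]
        self.b2 -= lr * delta_out
        for j, row in enumerate(self.w1):
            for i in range(len(row)):
                row[i] -= lr * delta_hid[j] * x[i]
            self.b1[j] -= lr * delta_hid[j]
        return 0.5 * (y - target) ** 2

# Toy dataset: logical OR (a hypothetical stand-in for real training data).
OR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

def total_loss(net, data):
    return sum(0.5 * (net.forward(x)[1] - t) ** 2 for x, t in data)

if __name__ == "__main__":
    net = TinyNet(seed=0)
    print("loss before:", round(total_loss(net, OR_DATA), 4))
    for _ in range(5000):
        for x, t in OR_DATA:
            net.train_step(x, t)
    print("loss after:", round(total_loss(net, OR_DATA), 4))
    print([round(net.forward(x)[1], 2) for x, _ in OR_DATA])
```

Running it should show the loss falling as the weights adapt; real networks follow the same loop at far larger scale, usually through a deep learning library rather than hand-written gradients.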

1.2 The Need for Neural Network Security

Neural network security is essential for safeguarding digital systems from sophisticated cyber threats. As traditional security measures prove insufficient against evolving attacks, neural networks offer adaptive and intelligent defence mechanisms [3]. They excel at anomaly detection, identifying irregular patterns that indicate cyber threats. Through continuous learning, these networks improve intrusion detection and response, helping defenders stay ahead of new attack vectors [4][5]. Neural networks also strengthen authentication, reducing the risk of unauthorized access. As cyber threats grow more complex, the dynamic, self-learning protection that neural networks provide becomes essential for safeguarding sensitive data and systems in our interconnected digital landscape. In addition, Explainable AI (XAI) plays a crucial role in making the decision-making of neural network-based security systems transparent and trustworthy, which improves both their effectiveness and their adoption [6].

1.3 Bridging the Gap with Neural Network Security

Neural network security helps close the gaps that traditional defences leave open as threats evolve. These networks enhance anomaly detection, identifying malicious patterns in real time for proactive cybersecurity [7]. In intrusion detection systems, they discern subtle deviations from normal behaviour, strengthening resilience against cyberattacks. Neural networks also optimize authentication processes, mitigating the risk of unauthorized access. Their adaptive learning strengthens threat intelligence, supporting rapid response and adaptive defence strategies. By continuously learning from emerging threats, neural network security serves as a dynamic safeguard that closes vulnerabilities and maintains robust protection in the ever-changing landscape of cybersecurity. Furthermore, neural network-based security solutions can be deployed through edge computing, directly on local devices or servers, which improves efficiency and reduces dependency on centralized cloud-based systems [8].

1.4 Techniques in Neural Network Security

Neural network security employs various techniques to enhance the resilience of digital systems against cyber threats:

- Anomaly Detection: Neural networks analyze normal behavior patterns and identify anomalies that may indicate potential security breaches or attacks [9].

- Intrusion Detection Systems (IDS): Utilizing neural networks for IDS helps in real-time monitoring of network activities, enabling the detection of suspicious or malicious behavior. Deep learning techniques, such as convolutional neural networks (CNNs) or recurrent neural networks (RNNs), can be employed to effectively process large volumes of network data for intrusion detection [10].

- Adversarial Training: Training neural networks on adversarial examples, that is, inputs deliberately manipulated to cause errors, improves their ability to withstand such attacks [11].

- Encrypted Traffic Analysis: Neural networks can analyze patterns in encrypted traffic to detect anomalies or malicious activities without compromising the confidentiality of the data[12].

- Behavioural Analysis: Examining the behaviour of users or devices using neural networks helps in identifying deviations from typical patterns, signaling potential security risks. Recurrent neural networks (RNNs) and long short-term memory (LSTM) networks are particularly well-suited for sequential data analysis, making them effective for behavioral analysis tasks[13].
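The behavioural-analysis technique above rests on one property of recurrent networks: a hidden state carries a memory of earlier events in a sequence. The sketch below is a single-unit Elman RNN in plain Python. The weights (`w_x`, `w_h`, `b`), the alert threshold, and the encoding in which 1 marks a failed login are hypothetical values picked for illustration; a real system would learn its weights from data, typically using an LSTM, whose gates help it retain information over longer sequences.

```python
import math

def rnn_states(events, w_x=1.0, w_h=0.9, b=0.0):
    """Run a single-unit Elman RNN over a 0/1 event sequence.

    Hypothetical encoding: 1 = failed login, 0 = normal event.
    The weights are hand-picked for illustration; a trained model would learn them.
    """
    h = 0.0
    states = []
    for x in events:
        # New state = current event combined with a decayed memory of earlier events.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

def is_suspicious(events, threshold=0.8):
    """Flag a sequence when the final hidden state exceeds a (hypothetical) threshold."""
    states = rnn_states(events)
    return bool(states) and states[-1] > threshold

burst = [0, 0, 0, 0, 1, 1, 1, 1]   # four failures in a row
spread = [1, 0, 0, 0, 1, 0, 0, 0]  # two failures far apart

print([round(s, 2) for s in rnn_states(burst)])
print(is_suspicious(burst), is_suspicious(spread))  # prints: True False
```

The same number of failures scores very differently depending on when they occur, which is the kind of temporal pattern a static, per-event rule cannot capture.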

In neural network security, the focus is on achieving a harmonious blend of robust defense and transparency. Researchers and practitioners strive to develop systems that effectively combat cyber threats while remaining interpretable. Techniques like anomaly detection and dynamic authentication contribute to this goal, ensuring security measures are both adaptive and accountable in decision-making processes.
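The transparency goal described above is easiest to see on a model whose decisions decompose exactly. In the sketch below, a hypothetical logistic "intrusion score" model (the feature names, weights, and bias are invented for illustration) explains an alert by listing each feature's contribution, weight times value, to the logit. For deep networks the decomposition is not this direct; attribution methods such as integrated gradients or SHAP produce a comparable additive breakdown relative to a chosen baseline.

```python
import math

# Hypothetical features and weights of a toy logistic "intrusion score" model.
WEIGHTS = {"failed_logins": 1.2, "new_device": 0.8, "bytes_out_mb": 0.4}
BIAS = -3.0

def logit(features):
    return BIAS + sum(WEIGHTS[name] * value for name, value in features.items())

def score(features):
    """Probability-like alert score in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-logit(features)))

def explain(features):
    """Per-feature contributions to the logit, largest magnitude first.

    For a linear model these terms plus BIAS add up exactly to the logit,
    so the explanation is faithful to what the model computed.
    """
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)

event = {"failed_logins": 4, "new_device": 1, "bytes_out_mb": 1.5}
print(round(score(event), 3))
for name, contribution in explain(event):
    print(f"{name:>14}: {contribution:+.2f}")
```

An analyst reading this output can see that the alert is driven mainly by the failed-login count, which is the kind of accountability the paragraph above calls for.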

Figure 3. Techniques in neural network security
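The Anomaly Detection technique listed in this section, learning normal behaviour and flagging deviations, is commonly implemented as a reconstruction-based detector: train an autoencoder only on normal records, then flag inputs it reconstructs poorly. The sketch below uses the smallest possible case, a linear autoencoder with one hidden unit and tied weights, trained by gradient descent in plain Python. The synthetic two-feature data, learning rate, epoch count, starting weights, and the max-error threshold are all illustrative assumptions.

```python
import random

def make_normal_records(n=200, seed=0):
    """Synthetic 'normal' records with two features that move together (hypothetical)."""
    rng = random.Random(seed)
    records = []
    for _ in range(n):
        t = rng.uniform(-2.0, 2.0)
        records.append((t + rng.gauss(0, 0.05), t + rng.gauss(0, 0.05)))
    return records

def recon_error(w, x):
    """Squared error after encoding x to one hidden unit and decoding with tied weights."""
    a = w[0] * x[0] + w[1] * x[1]            # encoder: 2 features -> 1 code
    return (x[0] - a * w[0]) ** 2 + (x[1] - a * w[1]) ** 2

def train_autoencoder(data, lr=0.01, epochs=500):
    """Full-batch gradient descent on the mean reconstruction error."""
    w = [0.5, 0.1]                           # fixed start for reproducibility
    n = len(data)
    for _ in range(epochs):
        g = [0.0, 0.0]
        for x in data:
            a = w[0] * x[0] + w[1] * x[1]
            r = (x[0] - a * w[0], x[1] - a * w[1])   # residual x - x_hat
            rw = r[0] * w[0] + r[1] * w[1]
            # d/dw ||x - w (w.x)||^2 = -2 * ((r.w) * x + a * r)
            for i in range(2):
                g[i] += -2.0 * (rw * x[i] + a * r[i]) / n
        w = [w[i] - lr * g[i] for i in range(2)]
    return w

normal = make_normal_records()
w = train_autoencoder(normal)
# Threshold: the largest reconstruction error seen on normal training data.
threshold = max(recon_error(w, x) for x in normal)

def is_anomaly(x):
    return recon_error(w, x) > threshold

print(is_anomaly((1.5, 1.6)), is_anomaly((2.0, -2.0)))
```

The record (1.5, 1.6) follows the learned pattern and reconstructs almost perfectly, while (2.0, -2.0) breaks it and is flagged. Deep autoencoders and recurrent models apply the same idea to high-dimensional or sequential traffic, and a percentile of the normal errors is often preferred to the maximum as a threshold.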

2. Challenges in Neural Network Security

1. Adversarial attacks: Neural networks are susceptible to adversarial attacks, in which malicious inputs are deliberately crafted to trick the model into making incorrect predictions.

2. Overfitting: When neural networks overfit to training data, their capacity to generalize to new, unobserved data is compromised.

3. Explainability of the Model: Because of their intrinsic complexity, neural networks often act as black-box models, making it difficult to understand how they reach their decisions.

4. Data Privacy Concerns: Neural networks trained on sensitive data may inadvertently leak information, posing privacy risks.

5. Scalability: As neural networks grow in complexity, training and deploying large-scale models become computationally intensive.
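Challenge 1 can be demonstrated with the Fast Gradient Sign Method (FGSM), a well-known attack that shifts every input feature by a small amount ε in the direction that increases the model's loss. To keep the sketch self-contained it attacks a logistic-regression model, whose input gradient has a closed form, rather than a deep network; the weights, input, and ε = 0.25 are hypothetical values.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, eps):
    """Fast Gradient Sign Method against a logistic model with cross-entropy loss.

    For this model d(loss)/dx_i = (p - y) * w_i, so each feature is moved by
    eps in whichever direction increases the loss.
    """
    p = predict(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]

# Hypothetical "trained" weights and a correctly classified input (true label 1).
w, b = [2.0, -1.0, 0.5], -0.5
x, y = [0.6, 0.2, 0.4], 1
x_adv = fgsm(w, b, x, y, eps=0.25)

print(round(predict(w, b, x), 3), round(predict(w, b, x_adv), 3))  # prints 0.668 0.456
```

A change of at most 0.25 per feature flips the decision from class 1 to class 0. Adversarial training (Section 1.4) defends against this by adding such perturbed inputs, with their correct labels, to the training data.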

3. Applications

Neural network security is a critical area of study and application due to the growing integration of artificial intelligence (AI) and machine learning (ML) systems across various domains. Here are some applications of neural network security:

- Adversarial Attacks and Defenses: Neural network security involves countering attempts to deceive models with subtle input alterations, using techniques such as adversarial training and adversarial example detection [14].

- Privacy Preservation: To safeguard sensitive data, methods such as differential privacy and federated learning allow models to be trained while protecting the privacy of individual data [15].

- Model Watermarking and IP Protection: Unique identifiers embedded within models let owners verify ownership and deter unauthorized use or modification, protecting their intellectual property [16].

- Secure Multi-Party Computation (MPC): MPC allows several parties to jointly train or run a model on their combined data while each party's dataset remains confidential [17].

- Model Stealing and Reverse Engineering: Defense techniques such as model distillation and obfuscation mitigate attempts to reverse-engineer neural networks for extracting proprietary knowledge or sensitive information[18].

- Security in Autonomous Systems and IoT Devices: Ensuring the resilience of neural network-based systems to cyber-physical attacks and adversarial manipulation is paramount for their safe operation within autonomous systems and IoT devices [19-27].
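The MPC application above can be illustrated with additive secret sharing, a basic building block of many MPC protocols: each party splits its private value into random shares, so no single share reveals anything, yet the shares can be combined to compute a joint result. This generic sketch computes a secure sum over three hypothetical parties. It is not the protocol of [17]; the modulus is an illustrative choice, it assumes honest-but-curious parties, and real-valued model updates would first need fixed-point encoding into integers.

```python
import secrets

PRIME = 2**61 - 1  # modulus for share arithmetic (illustrative choice)

def share(secret, n_parties, rng):
    """Split an integer into n random additive shares that sum to it mod PRIME.

    Any n - 1 of the shares are uniformly random and reveal nothing about the secret.
    """
    shares = [rng.randrange(PRIME) for _ in range(n_parties - 1)]
    shares.append((secret - sum(shares)) % PRIME)
    return shares

def reconstruct(shares):
    return sum(shares) % PRIME

def secure_sum(private_values, rng):
    """Sum the parties' private integers without any party seeing another's value.

    Party i sends its j-th share to party j; each party publishes only the sum of
    the shares it received, and those partial sums are combined at the end.
    """
    n = len(private_values)
    all_shares = [share(v, n, rng) for v in private_values]
    partial_sums = [sum(s[j] for s in all_shares) % PRIME for j in range(n)]
    return reconstruct(partial_sums)

# Three hypothetical parties, each holding an integer-encoded local statistic.
# secrets.SystemRandom draws from the OS's cryptographic randomness source.
rng = secrets.SystemRandom()
print(secure_sum([5, 12, 30], rng))  # prints 47
```

The result is always 47 regardless of the random shares, because the shares cancel out only when all of them are added together.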

4. Conclusion

The importance of neural network security cannot be overstated as we navigate the complex terrain of contemporary technology. Strong defenses against cyberattacks are clearly needed, from safeguarding driverless cars to protecting user privacy on social media platforms. By using methods such as anomaly detection and encrypted traffic analysis, we can strengthen digital systems and reduce the dangers of malicious attacks and data breaches. However, challenges such as model explainability and scalability remain, calling for continued research and development. Ultimately, by prioritizing neural network security and encouraging interdisciplinary collaboration, we can make full use of these transformative technologies while preventing potential harm, helping to build a safer and more secure digital future for everyone.

References:

[1] National Research Council. (2000). How people learn: Brain, mind, experience, and school: Expanded edition (Vol. 1). National Academies Press.

[2] Prieto, A., Prieto, B., Ortigosa, E. M., Ros, E., Pelayo, F., Ortega, J., & Rojas, I. (2016). Neural networks: An overview of early research, current frameworks and new challenges. Neurocomputing, 214, 242-268.

[3] Malhotra, P., Singh, Y., Anand, P., Bangotra, D. K., Singh, P. K., & Hong, W. C. (2021). Internet of things: Evolution, concerns and security challenges. Sensors, 21(5), 1809.

[4] Setia, H., Chhabra, A., Singh, S. K., Kumar, S., Sharma, S., Arya, V., … & Wu, J. (2024). Securing the road ahead: Machine learning-driven DDoS attack detection in VANET cloud environments. Cyber Security and Applications, 2, 100037.

[5] Peñalvo, F. J. G., Maan, T., Singh, S. K., Kumar, S., Arya, V., Chui, K. T., & Singh, G. P. (2022). Sustainable stock market prediction framework using machine learning models. International Journal of Software Science and Computational Intelligence (IJSSCI), 14(1), 1-15.

[6] Kabir, M. H., Hasan, K. F., Hasan, M. K., & Ansari, K. (2022). Explainable artificial intelligence for smart city application: a secure and trusted platform. In Explainable Artificial Intelligence for Cyber Security: Next Generation Artificial Intelligence (pp. 241-263). Cham: Springer International Publishing.

[7] Bouchama, F., & Kamal, M. (2021). Enhancing Cyber Threat Detection through Machine Learning-Based Behavioral Modeling of Network Traffic Patterns. International Journal of Business Intelligence and Big Data Analytics, 4(9), 1-9.

[8] Wang, X., Han, Y., Leung, V. C., Niyato, D., Yan, X., & Chen, X. (2020). Convergence of edge computing and deep learning: A comprehensive survey. IEEE Communications Surveys & Tutorials, 22(2), 869-904.

[9] Radford, B. J., Apolonio, L. M., Trias, A. J., & Simpson, J. A. (2018). Network traffic anomaly detection using recurrent neural networks. arXiv preprint arXiv:1803.10769.

[10] Khan, M. A. (2021). HCRNNIDS: Hybrid convolutional recurrent neural network-based network intrusion detection system. Processes, 9(5), 834.

[11] Yuan, X., He, P., Zhu, Q., & Li, X. (2019). Adversarial examples: Attacks and defenses for deep learning. IEEE Transactions on Neural Networks and Learning Systems, 30(9), 2805-2824.

[12] Yu, T., Zou, F., Li, L., & Yi, P. (2019, October). An encrypted malicious traffic detection system based on neural network. In 2019 International Conference on Cyber-Enabled Distributed Computing and Knowledge Discovery (CyberC) (pp. 62-70). IEEE.

[13] Amiri, Z., Heidari, A., Navimipour, N. J., Unal, M., & Mousavi, A. (2023). Adventures in data analysis: A systematic review of Deep Learning techniques for pattern recognition in cyber-physical-social systems. Multimedia Tools and Applications, 1-65.

[14] Zhang, C., Costa-Perez, X., & Patras, P. (2022). Adversarial attacks against deep learning-based network intrusion detection systems and defense mechanisms. IEEE/ACM Transactions on Networking, 30(3), 1294-1311.

[15] Wu, X., Zhang, Y., Shi, M., Li, P., Li, R., & Xiong, N. N. (2022). An adaptive federated learning scheme with differential privacy preserving. Future Generation Computer Systems, 127, 362-372.

[16] Xue, M., Zhang, Y., Wang, J., & Liu, W. (2021). Intellectual property protection for deep learning models: Taxonomy, methods, attacks, and evaluations. IEEE Transactions on Artificial Intelligence, 3(6), 908-923.

[17] Jarin, I., & Eshete, B. (2021, April). Pricure: privacy-preserving collaborative inference in a multi-party setting. In Proceedings of the 2021 ACM Workshop on Security and Privacy Analytics (pp. 25-35).

[18] Sundaram, A., Abdel-Khalik, H. S., & Abdo, M. G. (2023). Preventing Reverse Engineering of Critical Industrial Data with DIOD. Nuclear Technology, 209(1), 37-52.

[19] Vats, T., Singh, S. K., Kumar, S., Gupta, B. B., Gill, S. S., Arya, V., & Alhalabi, W. (2023). Explainable context-aware IoT framework using human digital twin for healthcare. Multimedia Tools and Applications, 1-25.

[20] Aggarwal, K., Singh, S. K., Chopra, M., Kumar, S., & Colace, F. (2022). Deep learning in robotics for strengthening industry 4.0: opportunities, challenges and future directions. Robotics and AI for Cybersecurity and Critical Infrastructure in Smart Cities, 1-19.

[21] Singh, R., Singh, S. K., Kumar, S., & Gill, S. S. (2022). SDN-Aided edge computing-enabled AI for IoT and smart cities. In SDN-supported edge-cloud interplay for next generation internet of things (pp. 41-70). Chapman and Hall/CRC.

[22] Malik, M., Prabha, C., Soni, P., Arya, V., Alhalabi, W. A., Gupta, B. B., … & Almomani, A. (2023). Machine Learning-Based Automatic Litter Detection and Classification Using Neural Networks in Smart Cities. International Journal on Semantic Web and Information Systems (IJSWIS), 19(1), 1-20.

[23] Verma, V., Benjwal, A., Chhabra, A., Singh, S. K., Kumar, S., Gupta, B. B., … & Chui, K. T. (2023). A novel hybrid model integrating MFCC and acoustic parameters for voice disorder detection. Scientific Reports, 13(1), 22719.

[24] Chui, K. T., Gupta, B. B., Liu, J., Arya, V., Nedjah, N., Almomani, A., & Chaurasia, P. (2023). A survey of internet of things and cyber-physical systems: standards, algorithms, applications, security, challenges, and future directions. Information, 14(7), 388.

[25] Sharma, P. C., Mahmood, M. R., Raja, H., Yadav, N. S., Gupta, B. B., & Arya, V. (2023). Secure authentication and privacy-preserving blockchain for industrial internet of things. Computers and Electrical Engineering, 108, 108703.

[26] Upadhyay, U., Kumar, A., Sharma, G., Gupta, B. B., Alhalabi, W. A., Arya, V., & Chui, K. T. (2023). Cyberbullying in the metaverse: A prescriptive perception on global information systems for user protection. Journal of Global Information Management (JGIM), 31(1), 1-25.

[27] Alhalabi, W., Gaurav, A., Arya, V., Zamzami, I. F., & Aboalela, R. A. (2023). Machine learning-based distributed denial of services (DDoS) attack detection in intelligent information systems. International Journal on Semantic Web and Information Systems (IJSWIS), 19(1), 1-17.

Cite As

Kondal S, Sharma A. (2024). Role of Neural Network Security in Modern Technology. Insights2Techinfo, pp. 1