By: K. Sai Spoorthi, Student, Department of Computer Science and Engineering, Madanapalle Institute of Technology and Science, 517325, Angallu, Andhra Pradesh.

Abstract

Generative AI is advancing rapidly, opening broad opportunities while raising equally broad ethical concerns in the fields where it is applied. This essay explores the ethical questions it raises in content creation, authorship, copyright ownership, and its effects on society. AI creative tools have boosted creativity and efficiency across industries, but concerns about originality, bias, and fairness naturally follow. Because the datasets used to train a model may themselves be biased, some AI models reproduce skewed patterns that put social justice and fairness at risk. Moreover, generative AI raises questions of authorship and ownership that pose new challenges for the concept of intellectual property [1]. Mitigating these ethical issues therefore depends on ethicists, technologists, and legal scholars working together to develop sound frameworks for the responsible deployment of AI. Ultimately, incorporating ethical considerations into the development and deployment of AI is a way of ensuring that generative AI is not used for harmful purposes but serves as a tool for positive change.

Keywords: Generative Artificial Intelligence, ethical challenges, content creation, intellectual property, bias, societal impact, fairness

Introduction

AI is advancing at an accelerating pace, and the introduction of generative AI has raised an array of concerns. The ability of generative AI to produce text, images, and music, and to make forecasts, opens enormous prospects for many industries, but it also poses various ethical problems that should be discussed in detail. As generative AI increasingly affects society, a range of problems arises, including questions of originality, authorship, bias, and fairness. It is not enough to be aware of these challenges only in theory; that awareness must form the basis of practice when creating frameworks for the responsible use of the technology in society and against its misuse. A deep understanding of the ethical issues that arise from interacting with generative AI provides the tools needed to analyse the complicated relationships in the field and to make forward-looking decisions grounded in moral values. It will also help stakeholders anticipate possible outcomes and devise appropriate regulatory measures.

Generative AI and its significance in contemporary society

Generative AI has transformed numerous fields by improving creativity and productivity in content creation. These systems use machine learning algorithms to produce text, images, and even music, which boosts innovation in the entertainment, marketing, and, to some extent, education industries. By automating tasks that would otherwise demand considerable human input, generative AI frees creators to spend more time developing original concepts and finding new ways of working in art and business [2]. Its ability to process large volumes of data also allows it to deliver better-targeted content and thus more personalised experiences for audiences. At the same time, the unprecedented volume of novel generated text has sparked considerable debate about authorship, originality, and copyright, leaving a fragile state of play in which there are no settled guidelines or rules that uphold high ethical standards. It is therefore essential to analyse the role of generative AI in contemporary society and to develop approaches that enhance the positive impact of innovation and minimise its adverse effects.

Ethical Implications of Content Creation

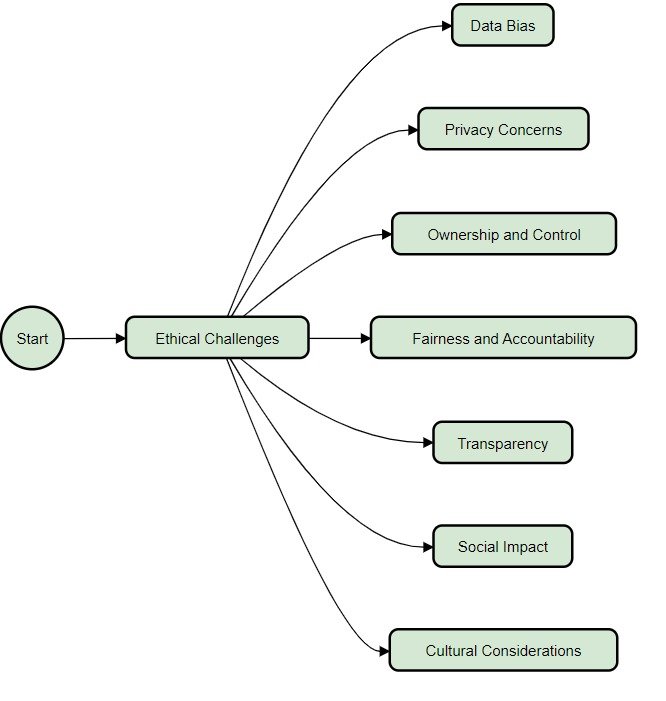

Sophisticated generative AI techniques have significantly transformed content creation, a change that calls for ethical examination. As shown in Fig. 1, when algorithms draw on big data to create text, images, and other kinds of content, new questions emerge about authorship and originality, as well as about plagiarism and software piracy. Beyond the question of a work's originality, AI-assisted content creation raises fundamental issues such as bias and the spread of fake news. The outputs of these systems can carry the prejudices present in the data they are given and may therefore reinforce existing prejudices, spread stereotyped information, or otherwise contribute to new prejudiced content. These effects extend far beyond creators and reach directly into the social fabric, making ethical dialogue and the moderation of ethical standards in the industry imperative. Given these concerns, stakeholders need to collaborate on frameworks for producing content ethically and for promoting the appropriate use of such technologies [3].

Issues of authorship and intellectual property in generative outputs

Generative AI technologies raise deep philosophical questions about authorship and the ownership of ideas. Standard theories of copyright cannot capture the specifics of machine-generated works, since these systems analyse large quantities of data from other creations without giving credit. The difficulty is compounded by a shift in authorship responsibility: the AI, its developers, and its end users are all potential authors of the generated content. Moreover, unclear ownership contributes to legal disputes that hamper the development of creative fields. As generative AI advances, stakeholders need to address these implications by promoting policy changes that recognise AI-generated outputs as collaboratively created, so that authors and artists, as well as the creators of AI systems, can still receive proper credit and remuneration [4]. Resolving these questions therefore matters not only for the legal protection and recognition of ideas, inventions, and creative work but also for the development of a healthy and progressive creative environment in the era of deep learning and artificial intelligence.

Bias and Fairness in AI Models

Bias in AI models is especially alarming because these technologies are increasingly integrated into many spheres of people's lives and are used to support decision-making. Bias can arise from the training data used to build AI systems and can result in existing prejudices being repeated or new forms of prejudice being created. For example, some facial recognition algorithms have higher error rates when recognising members of particular minority groups, which casts doubt on the fairness of such programs. The fairness of AI models is therefore not only a matter of how people perceive AI; it has real-life implications wherever such systems shape policies and attitudes. Mitigating these biases requires a detailed approach that includes collecting data from multiple, diverse sources, assessing models continuously, and setting high organisational ethical principles that incorporate minority perspectives. Such an integrated strategy is necessary to reduce bias and improve the fairness of AI solutions, making society and the digital environment as fair as possible.
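One simple form that continuous model assessment can take is comparing error rates across demographic groups. The following is a minimal sketch in Python, not a method taken from the cited works: the function names `error_rate_by_group` and `max_error_gap`, the group labels, and the toy records are all hypothetical and serve only to illustrate the idea.

```python
from collections import defaultdict


def error_rate_by_group(records):
    """Return the fraction of wrong predictions for each group.

    `records` is an iterable of (group, true_label, predicted_label)
    tuples. This record layout is an assumption made for illustration.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, y_true, y_pred in records:
        totals[group] += 1
        if y_true != y_pred:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


def max_error_gap(rates):
    """Largest difference in error rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical evaluation data: (group, true_label, predicted_label).
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 1),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 0), ("group_b", 0, 1),
]

rates = error_rate_by_group(records)
gap = max_error_gap(rates)
print(rates)
print(gap)
```

A large gap between groups is a signal to investigate the training data and the model further; on its own it does not establish the cause of the disparity, and other fairness measures may be more appropriate depending on the application.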

The impact of biased training data on generative AI outputs and societal implications

Some types of generative AI, such as those based on large language models, are deeply affected by the datasets used in their development, which often contain pre-existing, implicit bias. The effects of such bias can be seen in outputs that are not only stereotypical but can also contribute to perpetuating social injustice. For instance, generative AI (GAI) tools face critical hurdles to adoption in the service sectors, arising from both ethical issues and technical constraints (Rohit Gupta). These barriers may prevent equal access to AI technologies, particularly for socially vulnerable populations. Moreover, the use of these tools in education, including in curriculum preparation, raises serious ethical questions, for example about the fairness of performance indicators and about the tendency of tools such as ChatGPT to generate biased results that might compromise the validity of educational testing in the future. The impact of biased training data therefore goes beyond individual discriminatory outputs; it shapes the narratives of society and its institutions and calls for more comprehensive solutions for deploying AI ethically [5].

Conclusion

Given the vast opportunities that generative AI offers, its ethical considerations need to be approached comprehensively, in a way that anticipates the threats that may arise in the future. Developers, policymakers, and users are all exposed to the changes that AI-generated content brings, and all need to pay attention to its impact on society. The risks of fake news, prejudiced outputs, and violations of intellectual property rights make practical, ethical approaches to guide the use of such technologies imperative. Communication among ethicists, technologists, and legal scholars makes a better understanding of these difficulties possible. Further education and awareness raising that enable users to evaluate the credibility of the sources and processes used by AI tools are also essential. The strategy pursued should be based on ethical accountability, in order to minimise the issues and controversies surrounding generative AI as a means of advancing society. Integrating ethical considerations helps ensure that AI-based innovations improve people's quality of life rather than diminish it, laying the foundation for generative AI as a tool that enables rather than enfeebles. Stakeholders will need to sustain these dialogues to promote trust and accountability in society as the use of artificial intelligence grows.

References:

- M. Al-kfairy, D. Mustafa, N. Kshetri, M. Insiew, and O. Alfandi, “Ethical Challenges and Solutions of Generative AI: An Interdisciplinary Perspective,” Informatics, vol. 11, no. 3, Art. no. 3, Sep. 2024, doi: 10.3390/informatics11030058.

- P. Zlateva, L. Steshina, I. Petukhov, and D. Velev, “A Conceptual Framework for Solving Ethical Issues in Generative Artificial Intelligence,” in Electronics, Communications and Networks, IOS Press, 2024, pp. 110–119. doi: 10.3233/FAIA231182.

- T. Haksoro, A. S. Aisjah, Sreerakuvandana, M. Rahaman, and T. R. Biyanto, “Enhancing Techno Economic Efficiency of FTC Distillation Using Cloud-Based Stochastic Algorithm,” Int. J. Cloud Appl. Comput. IJCAC, vol. 13, no. 1, pp. 1–16, Jan. 2023, doi: 10.4018/IJCAC.332408.

- V. D. Kirova, C. S. Ku, J. R. Laracy, and T. J. Marlowe, “The Ethics of Artificial Intelligence in the Era of Generative AI,” J. Syst. Cybern. Inform., vol. 21, no. 4, pp. 42–50, Dec. 2023, doi: 10.54808/JSCI.21.04.42.

- M. Rahaman, C.-Y. Lin, and M. Moslehpour, “SAPD: Secure Authentication Protocol Development for Smart Healthcare Management Using IoT,” Oct. 2023, pp. 1014–1018. doi: 10.1109/GCCE59613.2023.10315475.

- B. B. Gupta, N. A. Arachchilage, and K. E. Psannis, “Defending against phishing attacks: taxonomy of methods, current issues and future directions,” Telecommunication Systems, vol. 67, pp. 247–267, 2018.

- B. B. Gupta, A. Tewari, A. K. Jain, and D. P. Agrawal, “Fighting against phishing attacks: state of the art and future challenges,” Neural Computing and Applications, vol. 28, pp. 3629–3654, 2017.

Cite As

Spoorthi K.S. (2024) Understanding the Ethical Challenges of Generative AI, Insights2Techinfo, pp.1