By: Praneetha Neelapareddigari, Department of Computer Science & Engineering, Madanapalle Institute of Technology and Science, Angallu (517325), Andhra Pradesh. praneetha867reddy@gmail.com

Abstract

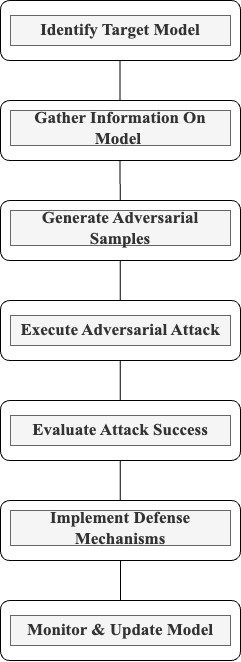

Adversarial Machine Learning (AML) is a strategic and emerging field at the intersection of artificial intelligence and cybersecurity. It is concerned with a novel challenge: adversarial attacks against AI systems. AI is now deployed in fields such as autonomous driving and healthcare, and these systems are vulnerable to adversarial examples, which are inputs deliberately crafted to make a model err. This raises concerns about the stability of AI applications. It also raises concerns about their security and the likelihood of their misuse, particularly in highly sensitive domains. Both sides remain active: attackers keep improving their methods, and defenders in turn develop more reliable models. This ongoing race confirms that the threat is evolving. This paper examines the fundamental ideas of AML from several perspectives, covering types of attacks with examples, ethical issues, and future directions for research. The contemporary examples and recent developments discussed here stress that adversarial threats to AI systems demand continuous development of protective measures.

Keywords: Machine Learning, Adversarial attacks, Artificial Intelligence

Introduction

AI is gradually transforming many fields and our day-to-day lives, so it is prudent to ensure that these systems are secure[1]. Advanced machine learning models now power everything from self-driving cars on the roads, to algorithms used in economic and medical decision-making, to devices that diagnose our diseases[2]. However, as these systems grow and are used more widely, they also become more attractive targets for attackers. In this context a new field has emerged: adversarial machine learning, or AML for short. It is devoted to studying how vulnerable AI models are to adversarial attacks.

Adversarial machine learning studies a process in which deceptive inputs, known as adversarial examples, are supplied to a trained model[3]. These range from slight changes to the pixels of an image to large-scale alterations of the data, and they can make AI systems fail in spectacular ways. For example, a change as small as a single pixel in a road-sign image may cause an autonomous car to read a stop sign as a speed-limit sign, with potentially disastrous outcomes.
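
The effect described above can be illustrated with a toy sketch. It assumes a hypothetical linear classifier over four pixel intensities. The weights, bias and input values are invented for illustration and are not taken from any real driving system. Moving each pixel by at most 0.05 in the direction that lowers the decision score is enough to flip the predicted label.

```python
# Toy illustration only: a hypothetical linear classifier over 4 pixel
# intensities in [0, 1]. Weights, bias and inputs are invented values.
WEIGHTS = [0.8, -0.6, 0.5, -0.7]
BIAS = -0.05


def score(pixels):
    """Linear decision score: > 0 means 'stop sign', otherwise 'speed limit'."""
    return sum(w * p for w, p in zip(WEIGHTS, pixels)) + BIAS


def label(pixels):
    return "stop sign" if score(pixels) > 0 else "speed limit"


clean = [0.50, 0.40, 0.40, 0.40]

# Move every pixel by eps against the sign of its weight; this lowers the
# score by eps * sum(|w|) = 0.05 * 2.6 = 0.13.
eps = 0.05
perturbed = [p - eps if w > 0 else p + eps for w, p in zip(WEIGHTS, clean)]
max_change = max(abs(a - b) for a, b in zip(clean, perturbed))

print(label(clean), "->", label(perturbed), "| largest pixel change:", round(max_change, 3))
```

With these values the clean score is 0.03 and the perturbed score is about -0.10, so the label flips even though no pixel moves by more than 0.05.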

The consequences of these vulnerabilities are manifold. In healthcare, adversarial attacks could lead a doctor or medical team to the wrong conclusion about a patient's condition. In the financial sector, they can manipulate trading programs or facilitate scams[4]. Understanding and countering adversarial threats is therefore essential if AI systems are to be adopted safely and more widely.

This introduction therefore sets the stage for a closer analysis of adversarial machine learning. The field exposes the vulnerabilities of AI, and it also pushes the scientific and practitioner communities to make algorithms more robust and reliable. Knowing what adversarial attacks are and which defences can be put in place will help integrate AI more safely into society.

1. Basic Concepts

Adversarial Attacks

Adversarial attacks are deceptive methods that mislead AI models by providing inputs that make the model produce wrong predictions[5]. These inputs, known as adversarial examples, are slight perturbations of normal data. Even so, they force the AI to make wrong decisions, such as misclassifying an image or misinterpreting a piece of text. The general goal of an adversarial attack is to manipulate the AI system into behaving in a way it was not designed to.

- Types of Adversarial Attacks

There are many types of adversarial attacks, since each targets a different weak point of machine learning models. Evasion attacks change input data at inference time so that the model misclassifies it or fails to recognize it. For instance, an attacker can overlay an image with small amounts of noise so that the AI misclassifies it while it remains visually indistinguishable to a human. Poisoning attacks, by contrast, happen during the training phase, when the adversary injects incorrect or biased data into the training set. This corrupts the learning process and yields a model that performs poorly or behaves unpredictably. Model inversion attacks analyse the model's outputs with the goal of obtaining sensitive information[6]. For example, an attacker may recover details of the data used to train the model simply by querying it. Membership inference attacks let an attacker decide whether a particular data instance was included in a model's training set. This leaks information about the original dataset and can breach privacy and confidentiality. Together, these attack methods show that machine learning models can be vulnerable in many different ways.
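
A poisoning attack can be sketched with a deliberately simple model. The example below assumes a hypothetical one-dimensional "benign vs. malicious" dataset and a nearest-centroid classifier, chosen only because its training step is a plain average. The attacker injects a few mislabeled points into the training set. This drags the "benign" centroid and degrades accuracy on test points the attacker never touched.

```python
def train_centroids(data):
    """Nearest-centroid 'training': the mean feature value of each class."""
    sums, counts = {}, {}
    for x, y in data:
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}


def predict(centroids, x):
    """Assign x to the class whose centroid is closest."""
    return min(centroids, key=lambda y: abs(x - centroids[y]))


def accuracy(centroids, data):
    return sum(predict(centroids, x) == y for x, y in data) / len(data)


# Hypothetical 1-D feature (e.g. a risk score): benign near 1.0, malicious near 5.0.
clean_train = [(0.8, "benign"), (1.0, "benign"), (1.2, "benign"),
               (4.8, "malicious"), (5.0, "malicious"), (5.2, "malicious")]
# The attacker injects mislabeled points: large values tagged "benign".
poison = [(9.0, "benign"), (9.5, "benign"), (10.0, "benign")]
test_set = [(1.1, "benign"), (4.9, "malicious"), (5.3, "malicious")]

clean_model = train_centroids(clean_train)
poisoned_model = train_centroids(clean_train + poison)

print("clean accuracy:", accuracy(clean_model, test_set))
print("poisoned accuracy:", accuracy(poisoned_model, test_set))
```

With these values the poisoned "benign" centroid moves from 1.0 to 5.25, and test accuracy drops from 1.0 to 1/3.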

2. Techniques Used in Adversarial Attacks

Gradient-based attacks are among the most common adversarial attack strategies. Methods such as the Fast Gradient Sign Method (FGSM) use gradient information to perturb an original sample into an adversarial variant that misleads the model. Attacks are also classified by how much the adversary knows. In black-box attacks the adversary has no knowledge of the model's internals and can only manipulate inputs and observe the model's responses[7]. White-box attacks, on the other hand, cover situations where the adversary has full knowledge of the model's architecture and parameters, and possibly its training data. A further issue is the transferability of adversarial inputs. Inputs that mislead one model can often fool other models as well, even if those models have different architectures and were trained on different data. Transferability makes defence difficult, because weaknesses found in one model may carry over to another.
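
FGSM perturbs an input x with label y as x_adv = x + ε · sign(∇_x L(θ, x, y)), where L is the model's loss. The sketch below applies a single step to a hypothetical logistic-regression model; its weights, bias, input and ε are invented. For this model the input gradient of the binary cross-entropy loss has the closed form (σ(w·x + b) - y) · w. Because the code reads w directly, this is a white-box attack.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """One FGSM step against logistic regression with binary cross-entropy loss.

    For this model dLoss/dx_i = (sigmoid(w.x + b) - y) * w_i, so every feature
    moves by eps in the sign of that gradient (an L-infinity step of size eps).
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]


# Hypothetical model and input (true label y = 1).
W, B = [2.0, -1.0, 1.5], -0.5
x, y = [0.6, 0.4, 0.3], 1

x_adv = fgsm(W, B, x, y, eps=0.2)
print("clean p(class 1):", round(predict_proba(W, B, x), 3))
print("adversarial p(class 1):", round(predict_proba(W, B, x_adv), 3))
```

Here the probability of the true class drops from about 0.68 to about 0.46, so the prediction flips. In a black-box setting the same step would need a gradient estimated from queries, or computed on a surrogate model and carried over through transferability.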

3. Defensive Techniques Against Adversarial Attacks

Several important strategies help make machine learning models more resilient to adversarial attacks. Adversarial training, one of the essential approaches, trains the model on adversarial instances to increase its resistance to such attacks. Defensive distillation tries to reduce the model's sensitivity to small perturbations and therefore to the attacker's manipulations. Gradient masking or obfuscation techniques aim to hide or "mask" gradient information so that the adversary cannot use it to craft new adversarial examples. Input preprocessing applies methods such as feature squeezing and input normalization to reduce the effect of adversarial manipulations. Finally, ensemble methods combine several models. This reduces the overall impact of an attack that exploits a weak point in any single model.
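
Input preprocessing can also serve as a detector. The sketch below follows the feature-squeezing idea in a simplified form. It squeezes the input by reducing its bit depth and flags the input if the model's prediction changes by more than a threshold. The linear model, the binary "clean" input, the 1-bit depth and the 0.3 threshold are all hypothetical choices for illustration. The original method compares full prediction vectors and tunes the threshold on real data.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def squeeze(x, bits):
    """Bit-depth reduction: snap each value in [0, 1] to one of 2**bits levels."""
    levels = 2 ** bits - 1
    return [round(v * levels) / levels for v in x]


def looks_adversarial(w, b, x, bits=1, threshold=0.3):
    """Flag x if squeezing moves the predicted probability by more than threshold.

    bits=1 and threshold=0.3 are assumed illustrative values.
    """
    return abs(predict_proba(w, b, x) - predict_proba(w, b, squeeze(x, bits))) > threshold


# Hypothetical model and a clean binary input (true label 1).
W, B = [3.0, -3.0, 3.0, -3.0], -2.0
clean = [1.0, 0.0, 1.0, 1.0]

# An FGSM-style perturbation of size 0.3 against label 1, clipped to [0, 1].
eps = 0.3
x_adv = [min(1.0, max(0.0, v - eps * (1 if w > 0 else -1))) for w, v in zip(W, clean)]

print("adversarial p(class 1):", round(predict_proba(W, B, x_adv), 3))
print("clean flagged:", looks_adversarial(W, B, clean))
print("adversarial flagged:", looks_adversarial(W, B, x_adv))
```

Squeezing snaps the perturbed input back to the clean binary pattern. The predicted probability therefore jumps from about 0.15 to about 0.73 and the input is flagged, while the clean input is left unchanged by squeezing. A perturbation larger than half a quantization step would not be undone this way.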

4. The Role of AI in Adversarial Attacks

AI, and machine learning in particular, contributes greatly to adversarial attacks because it allows adversarial examples to be created automatically. Adversaries can use machine learning techniques to learn patterns in a target model. They then craft inputs that look innocent to a human but are adversarial to the model. Such adversarial examples can be generated with techniques like gradient-based approaches, which help compute the minimum change needed to fool the model.
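
For a linear model f(x) = w·x + b, the "minimum change" mentioned above has a closed form. The smallest L2 perturbation that reaches the decision boundary is δ = -f(x) · w / ||w||², and its length is |f(x)| / ||w||. DeepFool applies this step iteratively to a local linearization of non-linear models. The sketch below uses a hypothetical linear model and an assumed overshoot factor of 0.02 to push the input just past the boundary.

```python
import math

# Hypothetical linear model f(x) = w.x + b; class 1 if f(x) > 0.
W, B = [2.0, -1.0, 1.5], -0.5


def f(x):
    return sum(wi * xi for wi, xi in zip(W, x)) + B


def minimal_perturbation(x, overshoot=0.02):
    """Smallest L2 step onto the boundary, scaled by (1 + overshoot) to cross it.

    overshoot=0.02 is an assumed illustrative value.
    """
    norm_sq = sum(wi * wi for wi in W)
    scale = -(1.0 + overshoot) * f(x) / norm_sq
    return [scale * wi for wi in W]


x = [0.6, 0.4, 0.3]
delta = minimal_perturbation(x)
x_adv = [xi + di for xi, di in zip(x, delta)]
l2 = math.sqrt(sum(d * d for d in delta))

print("f(x) =", round(f(x), 3), "| f(x_adv) =", round(f(x_adv), 3), "| ||delta||_2 =", round(l2, 3))
```

The score moves from 0.75 to -0.015 with a perturbation of L2 length about 0.28. No smaller L2 change can reach the boundary for this model.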

This has created a continuous contest between AI-driven cyber offence and AI-driven cyber defence. Adversarial attacks keep evolving and increasingly use AI to overcome earlier defences, and researchers respond in kind by building new AI-based defence mechanisms[8]. The result is a never-ending co-evolution of attack and defence strategies. Each new attack method prompts new defences and vice versa, continually advancing the use of AI in cyberspace.

5. Adversarial Machine Learning in Cybersecurity

Adversarial machine learning is highly influential in cybersecurity because its methods threaten the reliability and performance of machine-learning-based security measures. Such attacks can compromise this fundamental functionality, which makes adversarial ML a growing threat in the cybersecurity domain.

In fraud detection, attackers can craft synthetic transactions or activities that machine learning models cannot distinguish from those of "normal" customers. This undermines conventional fraud detection systems, so better mechanisms are needed to detect such manipulation.

Even biometric authentication, such as face recognition or fingerprint scanning, is not safe from adversarial examples. Depending on the attacker's strategy, biometric inputs can be modified in various ways to deceive the authentication model, resulting in unauthorized access to secure systems.

In malware classification, adversaries alter minor details of malware samples in ways that do not attract attention and do not stop the software from carrying out its malicious function, yet cause the detector to miss it. This makes it challenging for malware detection models to flag and block new threats, as attackers continually look for new ways to evade detection systems.
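
A common way to model the constraint that a modified sample must keep its malicious behaviour is to let the attacker only add features, such as extra imports or benign-looking strings, and never remove them. The sketch below applies a greedy version of that idea to a hypothetical linear detector over binary features. The feature names, weights and bias are invented for illustration.

```python
# Hypothetical binary malware features; positive weight = evidence of malware.
FEATURES = ["writes_autorun_key", "encrypts_user_files", "imports_common_gui_lib",
            "has_version_info", "contains_help_text", "uses_packer"]
WEIGHTS = [2.0, 1.5, -2.0, -1.5, -0.5, 1.0]
BIAS = -1.5


def score(x, weights=WEIGHTS, bias=BIAS):
    """Detector score: > 0 means 'malicious'."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def evade_by_adding(x, max_additions=3):
    """Greedily switch on absent benign-looking features (0 -> 1 only).

    Existing features are never removed, as a stand-in for keeping the
    malicious functionality intact.
    """
    x = list(x)
    # Most benign-looking (most negative weight) absent features first.
    candidates = sorted((w, i) for i, w in enumerate(WEIGHTS) if w < 0 and x[i] == 0)
    added = []
    for w, i in candidates:
        if score(x) < 0 or len(added) >= max_additions:
            break
        x[i] = 1
        added.append(FEATURES[i])
    return x, added


malware = [1, 1, 0, 0, 0, 1]
evaded, added = evade_by_adding(malware)
print("score before:", score(malware), "| after:", score(evaded), "| added:", added)
```

Here two added features take the score from 3.0 to -0.5, so the sample is classified as benign even though none of its original features were removed.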

In response, cybersecurity frameworks are evolving to include more sophisticated defence mechanisms, such as adversarial training, in which the model is trained on adversarial examples. There is also growing interest in models that are accurate, more interpretable, and less susceptible to adversarial manipulation. AI solutions with continuous monitoring and real-time adaptation have become standard in current protection paradigms, because they prepare systems for the constantly changing nature of threats.
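
Adversarial training can be sketched as an ordinary training loop that, at every step, also trains on adversarial versions of the data crafted against the current weights. The example below assumes logistic regression, full-batch gradient descent, FGSM as the inner attack, and a tiny hypothetical 2-D dataset. The learning rate, epoch count and ε are arbitrary illustrative values. On data this small, the sketch shows the mechanics rather than a measurable robustness gain.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """FGSM step for logistic regression: dLoss/dx_i = (p - y) * w_i."""
    p = predict_proba(w, b, x)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]


def train(data, epochs=200, lr=0.1, adv_eps=0.0):
    """Full-batch gradient descent on binary cross-entropy.

    With adv_eps > 0, every epoch adds an FGSM copy of each training point,
    crafted against the current weights (adversarial training).
    Hyperparameter defaults are assumed illustrative values.
    """
    dim = len(data[0][0])
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        batch = list(data)
        if adv_eps > 0:
            batch += [(fgsm(w, b, x, y, adv_eps), y) for x, y in data]
        grad_w, grad_b = [0.0] * dim, 0.0
        for x, y in batch:
            err = predict_proba(w, b, x) - y
            grad_w = [g + err * xi for g, xi in zip(grad_w, x)]
            grad_b += err
        w = [wi - lr * g / len(batch) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(batch)
    return w, b


def accuracy(w, b, data):
    return sum((predict_proba(w, b, x) > 0.5) == (y == 1) for x, y in data) / len(data)


# Hypothetical toy dataset: (features, label).
DATA = [([2.0, 1.0], 1), ([1.0, 2.0], 1), ([-2.0, -1.0], 0), ([-1.0, -2.0], 0)]

std_w, std_b = train(DATA)
adv_w, adv_b = train(DATA, adv_eps=0.5)

attacked = [(fgsm(adv_w, adv_b, x, y, 0.5), y) for x, y in DATA]
print("clean accuracy (standard, adversarial):",
      accuracy(std_w, std_b, DATA), accuracy(adv_w, adv_b, DATA))
print("adversarially trained model on its own FGSM inputs:", accuracy(adv_w, adv_b, attacked))
```

In practice the same loop is used with a stronger inner attack and a larger model. The key design choice is that the adversarial examples are regenerated against the current weights at each step, not computed once in advance.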

6. Future Trends

New attack methods and a strong emphasis on improving defence strategies make the future of adversarial machine learning research very promising. One research objective is new training approaches that generate a wider range of adversarial examples, giving a more comprehensive understanding of adversarial threats. Another is new robust, explainable models that are less vulnerable to malicious changes. New developments in adversarial detection are also expected, since systems that counter detected adversarial threats in real time should reduce the effectiveness of attacks. Hostile threats continue to mutate and become better concealed, so constant research on the subject is clearly needed. Future work will probably adopt layered security, combining several approaches into systems strong enough to repel contemporary threats. The dynamic relationship between attackers and defenders will keep driving innovation in adversarial machine learning, making it an important path towards secure AI systems.

Conclusion

In conclusion, adversarial machine learning is a new and expanding issue at the intersection of AI and cybersecurity. As AI systems are incorporated into the central functions of society, the potential for adversarial attacks cannot be ignored. Attacks that target the weaknesses of machine learning models show how necessary it is to develop and deploy stronger, better AI systems. Overcoming these issues requires more research, new and more sophisticated security measures, and adherence to proper standards for AI applications. Failure is always a risk, particularly as adversarial machine learning tries to manipulate AI-driven systems. Understanding the available countermeasures will nonetheless help make AI safe and beneficial in the future.

References

- Y. Vorobeychik and M. Kantarcioglu, Adversarial Machine Learning. Springer Nature, 2022.

- B. Mahesh, “Machine Learning Algorithms - A Review,” vol. 9, 2019, doi: 10.21275/ART20203995.

- I. Rosenberg, A. Shabtai, Y. Elovici, and L. Rokach, “Adversarial Machine Learning Attacks and Defense Methods in the Cyber Security Domain,” ACM Comput. Surv., vol. 54, no. 5, pp. 108:1–108:36, May 2021, doi: 10.1145/3453158.

- L. Triyono, R. Gernowo, P. Prayitno, M. Rahaman, and T. R. Yudantoro, “Fake News Detection in Indonesian Popular News Portal Using Machine Learning For Visual Impairment,” JOIV Int. J. Inform. Vis., vol. 7, no. 3, pp. 726–732, Sep. 2023, doi: 10.30630/joiv.7.3.1243.

- A. Chakraborty, M. Alam, V. Dey, A. Chattopadhyay, and D. Mukhopadhyay, “A survey on adversarial attacks and defences,” CAAI Trans. Intell. Technol., vol. 6, no. 1, pp. 25–45, 2021, doi: 10.1049/cit2.12028.

- H. Xu et al., “Adversarial Attacks and Defenses in Images, Graphs and Text: A Review,” Int. J. Autom. Comput., vol. 17, no. 2, pp. 151–178, Apr. 2020, doi: 10.1007/s11633-019-1211-x.

- K. Ren, T. Zheng, Z. Qin, and X. Liu, “Adversarial Attacks and Defenses in Deep Learning,” Engineering, vol. 6, no. 3, pp. 346–360, Mar. 2020, doi: 10.1016/j.eng.2019.12.012.

- P. Pappachan, Sreerakuvandana, and M. Rahaman, “Conceptualising the Role of Intellectual Property and Ethical Behaviour in Artificial Intelligence,” in Handbook of Research on AI and ML for Intelligent Machines and Systems, IGI Global, 2024, pp. 1–26. doi: 10.4018/978-1-6684-9999-3.ch001.

- A. Aldweesh, M. Alauthman, M. Al Khaldy, A. Ishtaiwi, A. Al-Qerem, A. Almoman, and B. B. Gupta, “The meta-fusion: A cloud-integrated study on blockchain technology enabling secure and efficient virtual worlds,” Int. J. Cloud Appl. Comput. (IJCAC), vol. 13, no. 1, pp. 1–24, 2023.

- M. Casillo, F. Colace, B. B. Gupta, A. Lorusso, F. Marongiu, and D. Santaniello, “Blockchain and NFT: a novel approach to support BIM and Architectural Design,” in 2022 International Conference on Innovation and Intelligence for Informatics, Computing, and Technologies (3ICT), Sakheer, Bahrain, 2022, pp. 616–620, doi: 10.1109/3ICT56508.2022.9990815.

- P. Chaudhary, B. B. Gupta, K. T. Chui, and S. Yamaguchi, “Shielding Smart Home IoT Devices against Adverse Effects of XSS using AI model,” in 2021 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 2021, pp. 1–5, doi: 10.1109/ICCE50685.2021.9427591.

Cite As

Neelapareddigari P. (2024) Adversarial Machine Learning, Insights2Techinfo, pp.1