By: K. Yadav, B. B. Gupta, D. Peraković

Recent advances in deep learning algorithms such as autoencoders and Generative Adversarial Networks (GANs) have greatly improved automated content generation. The generated content ranges from text and images to video. Content generation has benefited industry, but the same content can be harmful when misused. One popular technology widely used for artificial content generation is the deepfake.

What is DeepFake?

The term “deepfake” combines two words, deep and fake. “Deep” comes from deep learning, a field concerned with learning useful information from large amounts of data. When deep learning algorithms are used to create fake content, the resulting technology is called a deepfake.

Types of DeepFake

Deepfake creation can be divided into five categories: (a) face swap, (b) lip-syncing, (c) puppet-master, (d) face synthesis and attribute manipulation, and (e) audio deepfakes. In a face swap [1], the face of the person in the original video is replaced by the face of the person from the target video. Figure 1 illustrates the face-swapping process.

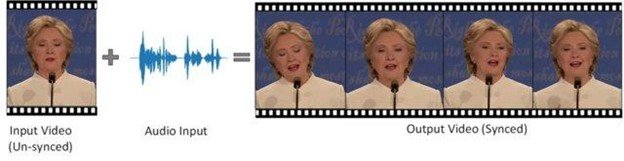
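As a rough illustration of the last step of a face swap, the sketch below composites a new face over a frame using a soft mask. The tiny grayscale grids, the `blend_face` name, and the mask values are assumptions made for this example; a real pipeline would also have to detect, segment, and align the face before blending.

```python
def blend_face(frame, new_face, mask):
    """Composite new_face over frame.

    mask holds 1.0 inside the face region, 0.0 outside, and
    intermediate values on the boundary for a soft edge.
    """
    return [
        [m * f_new + (1.0 - m) * f_old
         for f_old, f_new, m in zip(row_old, row_new, row_mask)]
        for row_old, row_new, row_mask in zip(frame, new_face, mask)
    ]

# Hypothetical 2x3 grayscale "images".
frame = [[10.0, 10.0, 10.0], [10.0, 10.0, 10.0]]
new_face = [[200.0, 200.0, 200.0], [200.0, 200.0, 200.0]]
mask = [[0.0, 0.5, 1.0], [0.0, 0.5, 1.0]]

swapped = blend_face(frame, new_face, mask)
```

Pixels where the mask is 0 keep the original frame, pixels where it is 1 take the new face, and the middle column is an even mix of the two.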

In lip-syncing [2], arbitrary audio is used to generate a new video in which the speaker's lip movements and facial expressions are synchronized with that audio. Figure 2 shows an input video that, together with targeted audio, is used to generate different fake clips. In puppet-master [3], the facial expression in the targeted video or image is controlled by the person in the source video.

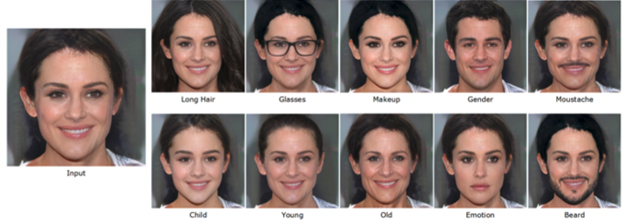

Face synthesis [4] deals with creating fake faces and manipulating attributes of original faces. In Figure 3, the original image on the left is manipulated in several ways: by editing different facial regions of the photo, we can add long hair or glasses, or change the apparent gender. Audio deepfakes [5] deal with the creation of targeted speech. Suppose a politician who speaks only one language wants to give a speech across several states where different languages are spoken. A video of the politician recorded in one language can be converted into the desired language, with the facial expressions synced to the new audio.

Generation of DeepFake

There are several methods for creating deepfakes, but the most widely used are autoencoders and GANs. An autoencoder is a deep learning model that learns from input data captured from different angles and under different environmental conditions, and can reconstruct that input with high accuracy. To create a deepfake with an autoencoder [6], we need two encoder-decoder pairs. One pair is trained on images of the source face and the other on images of the target face. In common implementations the two pairs share a single encoder, so that both faces are described by the same features. Once training is complete, the decoders of the two pairs are swapped, so that the encoder extracts features from the original image and the swapped-in decoder generates the targeted face from them.
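The decoder swap can be sketched with a deliberately tiny model. Everything here is an assumption made for illustration: the layers are linear, the "faces" are four-number vectors, and the encoder is shared between the two identities (a common variant of the two-pair setup). The toy data is far too simple for the swapped output to look like anything; the sketch only shows the wiring.

```python
import random

def matvec(w, v):
    """Multiply matrix w (list of rows) by vector v."""
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]

def init_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def reconstruction_loss(enc, dec, faces):
    """Average squared reconstruction error per face."""
    total = 0.0
    for x in faces:
        y = matvec(dec, matvec(enc, x))
        total += sum((yi - xi) ** 2 for yi, xi in zip(y, x))
    return total / len(faces)

def train_step(enc, dec, x, lr):
    """One gradient step on ||dec(enc(x)) - x||^2 for linear layers."""
    z = matvec(enc, x)
    y = matvec(dec, z)
    err = [2.0 * (yi - xi) for yi, xi in zip(y, x)]
    # Backpropagate through the decoder before changing its weights.
    grad_z = [sum(dec[i][k] * err[i] for i in range(len(err)))
              for k in range(len(z))]
    for i in range(len(dec)):
        for k in range(len(z)):
            dec[i][k] -= lr * err[i] * z[k]
    for k in range(len(enc)):
        for j in range(len(x)):
            enc[k][j] -= lr * grad_z[k] * x[j]

rng = random.Random(0)
# Hypothetical stand-ins for face images: 4 "pixels" per face.
faces_a = [[s, 0.0, s, 0.0] for s in (0.5, 0.75, 1.0)]
faces_b = [[0.0, s, 0.0, s] for s in (0.5, 0.75, 1.0)]

encoder = init_matrix(2, 4, rng)      # shared by both identities
decoder_a = init_matrix(4, 2, rng)    # learns to reconstruct person A
decoder_b = init_matrix(4, 2, rng)    # learns to reconstruct person B

before_a = reconstruction_loss(encoder, decoder_a, faces_a)
before_b = reconstruction_loss(encoder, decoder_b, faces_b)
for _ in range(300):
    for xa, xb in zip(faces_a, faces_b):
        train_step(encoder, decoder_a, xa, lr=0.02)
        train_step(encoder, decoder_b, xb, lr=0.02)
after_a = reconstruction_loss(encoder, decoder_a, faces_a)
after_b = reconstruction_loss(encoder, decoder_b, faces_b)

# The swap: encode a face of A, then render it with B's decoder.
swapped = matvec(decoder_b, matvec(encoder, faces_a[0]))
```

During training each decoder only ever sees its own identity, while the encoder sees both. At generation time nothing is retrained: the only change is which decoder receives the encoder's output.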

Approaches such as FSGAN [7] can manipulate different facial regions along with pose and expression. The resulting images are of very high quality and have even outperformed images generated with autoencoder methods []. Several apps that generate deepfakes have been developed, such as the Chinese app Zao, FaceApp, and Face Swap. Many other deepfake tools can be found on GitHub.
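GAN-based methods rest on an adversarial objective (Goodfellow et al., in the references): a discriminator D tries to maximize V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))], while a generator G tries to minimize it. The sketch below only evaluates this quantity from example discriminator outputs; the function name and the sample probabilities are assumptions, and no network is trained.

```python
import math

def gan_value(d_real, d_fake):
    """Estimate V(D, G) from discriminator outputs in (0, 1).

    d_real: D(x) on real samples; d_fake: D(G(z)) on generated samples.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

# Discriminator easily tells real from fake: value close to 0.
confident = gan_value([0.9, 0.95], [0.1, 0.05])

# Discriminator is fully fooled and outputs 0.5 everywhere: value is -log(4).
fooled = gan_value([0.5, 0.5], [0.5, 0.5])
```

The value drops as the generator improves, which is why high-quality GAN output is hard to separate from real images.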

Detecting DeepFake

Because deepfakes can generate convincing fake content, their misuse can have serious consequences. For example, in 2018 a Belgian political party published a video showing Donald Trump giving a speech in which he called for Belgium to withdraw from the Paris climate accord; Trump never made this statement. Used effectively, deepfakes can incite political conflict, bloodshed, and even warfare between regions. It is therefore very important to develop methods that can detect them.

For the time being, the best way to spot deepfakes is to look for artifacts introduced during their creation, or to use data-driven classification. Approaches based on spatial artifacts [8] look for anomalies and irregularities within a scene. Approaches based on temporal artifacts [9] look for inconsistencies in a person's behavior, physiological signals, video frame synchronization, and coherence. Data-driven approaches [10] rely on detecting anomalies in the given deepfake. Well-crafted features play a very important role in detection; they can be handcrafted or extracted with deep learning methods.
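A temporal check of this kind can be sketched as follows, assuming a single handcrafted feature has already been measured per frame (here a hypothetical "mouth opening" value). The function name, the threshold, and the numbers are illustrative assumptions; this is not the method of any cited paper.

```python
import statistics

def flag_temporal_outliers(signal, threshold=3.0):
    """Return indices of frames whose change from the previous frame is
    unusually large compared with the typical frame-to-frame change."""
    deltas = [abs(b - a) for a, b in zip(signal, signal[1:])]
    median = statistics.median(deltas)
    # Median absolute deviation: a spread estimate that outliers barely affect.
    mad = statistics.median([abs(d - median) for d in deltas]) or 1e-9
    return [i + 1 for i, d in enumerate(deltas)
            if (d - median) / mad > threshold]

# Hypothetical per-frame feature with one abrupt jump at frame 5.
mouth_opening = [0.20, 0.22, 0.21, 0.23, 0.22, 0.60, 0.22, 0.21, 0.23, 0.22]
flagged = flag_temporal_outliers(mouth_opening)
```

Here `flagged` is `[5, 6]`: the jump into the odd frame and the jump back out of it. A practical detector would combine many such signals and learn the threshold from data.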

Open challenges in DeepFake detection

- Limited datasets: To detect whether given content is a deepfake, we need a large amount of both source and targeted data. Comparing the two datasets can reveal the discrepancies that yield the features needed for deepfake detection. However, having both datasets available at the same time is a big challenge.

- Performance evaluation: Most deepfake detection approaches can only classify given content as fake or not fake, but deepfakes exist in many forms. A video may contain 10 people with the facial expression of only one of them manipulated. If a detection approach classifies such a video as not fake, the detection is inaccurate.

- Alteration of the input data: Images and videos are compressed before being uploaded to the internet. Such compression removes features that are important for deepfake detection and increases false-positive rates.
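The performance evaluation problem above can be made concrete with a small sketch. It assumes some detector has already produced a manipulation score between 0 and 1 for each face in the video; the scores, the threshold, and the `video_verdict` name are hypothetical.

```python
def video_verdict(face_scores, threshold=0.5):
    """Flag the video if any single face looks manipulated, and say which."""
    suspicious = [i for i, s in enumerate(face_scores) if s >= threshold]
    return {"is_fake": bool(suspicious), "suspicious_faces": suspicious}

# Ten people in the video; only the last face has been manipulated.
scores = [0.05] * 9 + [0.92]

mean_score = sum(scores) / len(scores)  # averaging hides the one fake face
verdict = video_verdict(scores)
```

Averaging the scores gives about 0.14, which a single video-level threshold of 0.5 would label as not fake, while the per-face verdict flags the video and points to face 9.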

References

- Y. Nirkin, I. Masi, A. T. Tuan, T. Hassner, and G. Medioni, “On face segmentation, face swapping, and face perception,” in 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), 2018, pp. 98-105: IEEE.

- Y. Choi, M. Choi, M. Kim, J.-W. Ha, S. Kim, and J. Choo, “Stargan: Unified generative adversarial networks for multi-domain image-to-image translation,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 8789-8797.

- J. Thies, M. Zollhöfer, C. Theobalt, M. Stamminger, and M. Nießner, “HeadOn: Real-time reenactment of human portrait videos,” ACM Trans. Graph., vol. 37, no. 4, pp. 164:1-164:13, 2018.

- I. Goodfellow et al., “Generative adversarial nets,” in Advances in neural information processing systems, 2014, pp. 2672-2680.

- (18.09.2020). FakeApp 2.2.0. Available: https://www.malavida.com/en/soft/fakeapp/

- (18.09.2020). Faceswap: Deepfakes software for all. Available: https://github.com/deepfakes/faceswap

- Y. Nirkin, Y. Keller, and T. Hassner, “FSGAN: Subject Agnostic Face Swapping and Reenactment,” in Proceedings of the IEEE International Conference on Computer Vision, 2019, pp. 7184-7193.

- P. Korshunov and S. Marcel, “Speaker inconsistency detection in tampered video,” in 2018 26th European Signal Processing Conference (EUSIPCO), 2018, pp. 2375-2379: IEEE.

- S. Agarwal, H. Farid, Y. Gu, M. He, K. Nagano, and H. Li, “Protecting World Leaders Against Deep Fakes,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, 2019, pp. 38-45.

- Y. Zhang, L. Zheng, and V. L. Thing, “Automated face swapping and its detection,” in 2017 IEEE 2nd International Conference on Signal and Image Processing (ICSIP), 2017, pp. 15-19: IEEE.

Cite this article as:

K. Yadav, B. B. Gupta, D. Peraković (2022), DeepFake: A Deep Learning Approach in Artificial Content Generation, Insights2Techinfo, pp. 1