By: Simran Jaggi1, Anish Sharma1, Stephen Jonathan2, and Ranggi Cahyana Mariandani2

1CSE Department, Chandigarh College of Engineering and Technology, Chandigarh, India.

2Department of Computer Science, Esa Unggul University, Indonesia.

Abstract:

XAI has emerged as a critical solution to address the opacity of AI algorithms, fostering transparency and trust in decision-making processes. As AI increasingly shapes diverse domains, concerns about the inscrutability of these systems necessitate comprehensible explanations. XAI, a burgeoning research focus, aims to unravel the intricacies of AI models, particularly their black-box nature, ensuring users can grasp and trust the rationale behind decisions. This article targets XAI researchers seeking to enhance the trustworthiness of their models, offering insights for effectively communicating meaningful information from data across various disciplines.

Keywords: Artificial Intelligence, AI Regulation, XAI, Explainable AI

1. Introduction

The rapid adoption of AI technologies in critical decision-making processes has transformed multiple industries, and it has highlighted the importance of understanding the rationale behind AI decisions. Explainability in AI is crucial for ensuring accountability and fostering trust among users and stakeholders. As our reliance on intelligent machines grows, the need for transparent and interpretable models becomes increasingly evident. To meet this requirement, explainable artificial intelligence (XAI) offers machine learning techniques that help human users comprehend and trust AI systems and that produce more explainable models [1]. However, concerns arise from the lack of transparency, especially in scenarios that affect human life. Complex AI models, such as deep neural networks, are criticized for their opaque “black-box” nature, which causes skepticism among stakeholders. In healthcare, finance, autonomous vehicles, and criminal justice, this opacity impedes widespread adoption. XAI emerged to make AI systems more transparent and interpretable. This research explores XAI’s application across domains, addressing the interpretability challenge and its implications for sectors including healthcare, finance, and autonomous systems, with the aim of contributing to accountable and ethical AI technologies [2].

1.1 Explainable AI

Explainable Artificial Intelligence (XAI) is a discipline that tackles the inherent lack of transparency in intricate machine learning models, offering a means to comprehend and interpret their decision-making processes. In AI, where opaque algorithms often produce results without clear justifications, XAI aims to bridge the divide between the complexity of models and human understanding. Fundamentally, XAI strives to increase transparency, accountability, and trust in AI systems by making their outputs more interpretable for users, stakeholders, and regulatory bodies. Its significance has grown in parallel with the integration of AI technologies in critical domains such as healthcare, finance, autonomous vehicles, and legal systems. The field encompasses a range of techniques and methodologies designed to uncover the internal workings of algorithms, which in turn offers insight into the decision-making processes those algorithms employ. XAI also plays a pivotal role in the ethical use of AI: by enabling users to comprehend the reasoning behind AI-generated outputs, it lets them identify and address biases or errors that may be present. This ultimately leads to more transparent and accountable AI systems.

As the demand for trustworthy and understandable AI systems intensifies, the development and application of XAI methodologies become essential in shaping a future where artificial intelligence provides clear, interpretable explanations for its decisions.

1.2 Need of XAI

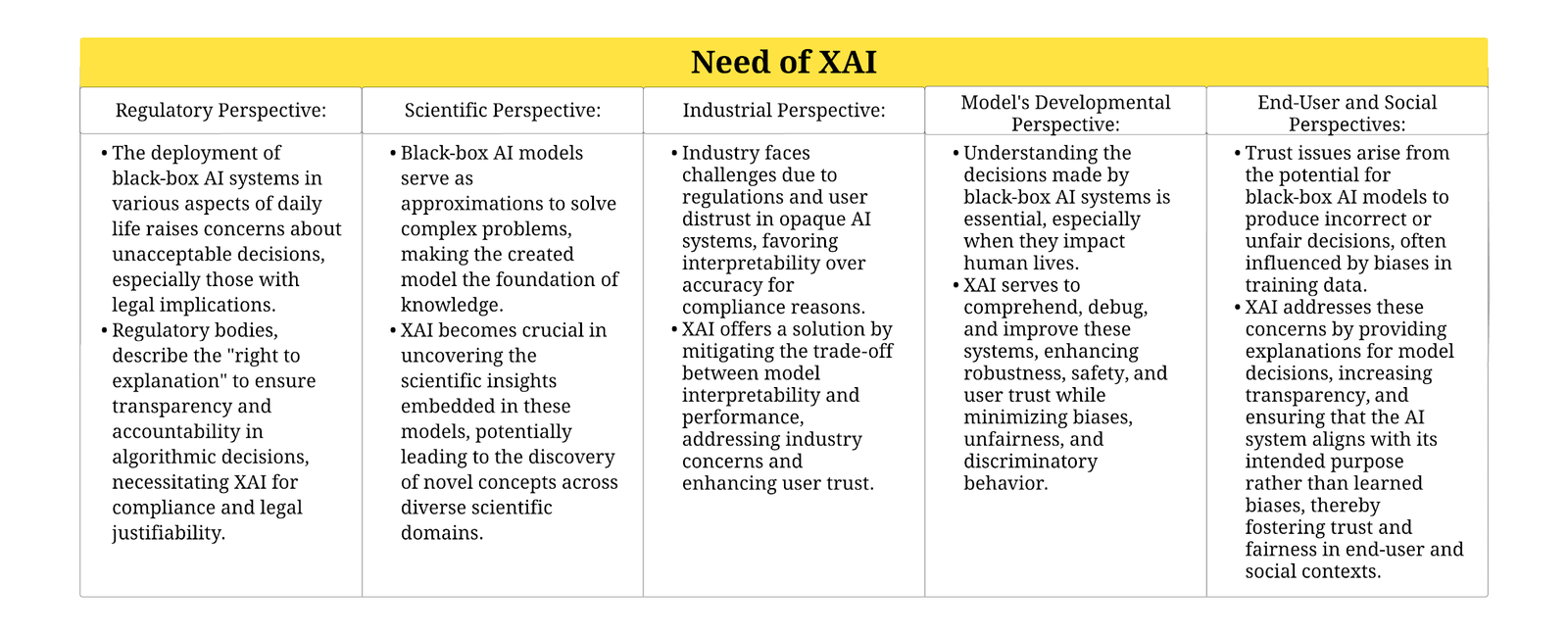

Explainable Artificial Intelligence addresses a critical need in the rapidly evolving landscape of AI applications. As we increasingly rely on complex, black-box AI systems in autonomous vehicles, social networks, and medical technologies, understanding the reasons behind their decisions becomes imperative. Not every AI system requires an explanation for each decision, but decisions with severe consequences or legal implications demand transparency [4]. The various aspects are explained in Table 1.

Table 1: Need of XAI

1.3 Bridging the Gap with XAI

Explainable AI [5] serves as a bridge between the complexity of AI models and the human need for understanding. Integrating methods that generate explanations for AI decisions makes these systems more interpretable and trustworthy.

A. Enhancing Accountability: The significance of XAI lies in its ability to establish the responsibility of AI systems for their decisions. When individuals such as users, stakeholders, or regulatory bodies can grasp the reasoning behind AI outputs, it becomes simpler to recognize and tackle any potential biases, errors, or unintended consequences.

B. Building Trust in Critical Domains: In sectors like healthcare, where AI aids in diagnostics and treatment recommendations, trust is paramount. XAI ensures that healthcare professionals and patients can understand why a specific diagnosis or treatment plan is suggested, fostering confidence in AI-driven decisions.

C. Navigating Ethical Considerations: Ethical concerns surrounding AI, such as bias in decision-making, are gaining prominence. XAI facilitates a transparent exploration of how AI algorithms weigh different factors, helping identify and mitigate biases that may impact certain groups unfairly [6-9].

1.4 Techniques in Explainable AI

XAI is an evolving field that employs various techniques to enhance the interpretability of AI models, making them more transparent and understandable [10] [11]. Several key strategies contribute to this goal, as shown in Figure 2:

- Feature Importance: Determining the key input variables that hold the most significance in a model’s decision-making process is crucial.

- Local Explanations: Providing explanations for individual predictions, allowing users to understand the reasoning behind specific outcomes.

- Model-Agnostic Techniques: Methods that treat the model as a black box and rely only on its inputs and outputs, so they can be applied to different types of models, promoting flexibility and usability.

- Rule-Based Systems: Models such as decision trees and rule sets offer explicit, human-readable decision rules. They inherently provide transparency by representing decision logic in a clear, rule-based format.

- Attention Mechanisms: Particularly effective in deep learning models, attention mechanisms highlight specific parts of input data that significantly contribute to the model’s decision, offering insights into the decision-making process [12][13][14].

Figure 2. Techniques in XAI
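The feature-importance and model-agnostic strategies listed above can be illustrated together with permutation importance, one common way to estimate how much a model relies on each input. The following is a minimal sketch under stated assumptions, not a method taken from the cited works: `black_box` is a hypothetical stand-in for an opaque model, the data are synthetic, and the helper names are illustrative.

```python
import random


def black_box(row):
    """Hypothetical opaque model (an assumption for illustration).

    It relies heavily on feature 0, weakly on feature 1, and ignores feature 2.
    """
    return 3.0 * row[0] + 1.0 * row[1]


def mean_squared_error(model, rows, targets):
    """Average squared difference between model outputs and targets."""
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(model, rows, targets, n_repeats=20, seed=0):
    """Average increase in error when one feature column is shuffled.

    Only model inputs and outputs are used, so any model can be plugged in.
    """
    rng = random.Random(seed)
    baseline = mean_squared_error(model, rows, targets)
    importances = []
    for j in range(len(rows[0])):
        increases = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)  # break the link between feature j and the target
            shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            increases.append(mean_squared_error(model, shuffled, targets) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


# Synthetic data: three features drawn uniformly from [-1, 1].
data_rng = random.Random(42)
rows = [[data_rng.uniform(-1, 1) for _ in range(3)] for _ in range(200)]
targets = [black_box(r) for r in rows]

importances = permutation_importance(black_box, rows, targets)
for index, value in enumerate(importances):
    print(f"feature {index}: importance {value:.3f}")
```

In this toy setup the ignored feature receives an importance of exactly zero, and the heavily weighted feature ranks first. On a real model the scores are noisier and can be misleading when features are strongly correlated.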

The aim of researchers and practitioners in the realm of XAI is to find a middle ground between the complexity and interpretability of models. By utilizing a range of techniques, they strive to ensure that AI systems are not only precise but also transparent and accountable in their decision-making processes.
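A local explanation, as described in the list of techniques, concerns a single prediction rather than the model as a whole. One simple model-agnostic way to sketch this is occlusion: replace one feature at a time with a baseline value and record how the output changes. The scorer, the feature names, and the all-zero baseline below are hypothetical choices made only for illustration.

```python
def black_box(features):
    """Hypothetical opaque scorer with an interaction term (illustrative only)."""
    return (
        2.0 * features["income"]
        - 1.5 * features["debt"]
        + 0.5 * features["income"] * features["tenure"]
    )


def occlusion_explanation(model, instance, baseline):
    """Effect of each feature on one prediction.

    Each effect is the model output for the instance minus the output
    obtained when that single feature is replaced by its baseline value.
    """
    full_output = model(instance)
    effects = {}
    for name in instance:
        occluded = dict(instance)
        occluded[name] = baseline[name]
        effects[name] = full_output - model(occluded)
    return effects


# One instance to explain, and an assumed "neutral" baseline of zeros.
instance = {"income": 1.0, "debt": 2.0, "tenure": 4.0}
baseline = {"income": 0.0, "debt": 0.0, "tenure": 0.0}

effects = occlusion_explanation(black_box, instance, baseline)
for name, effect in sorted(effects.items(), key=lambda item: -abs(item[1])):
    print(f"{name}: {effect:+.1f}")
```

For this instance the effects are +4.0 for income, -3.0 for debt, and +2.0 for tenure. They do not add up to the difference between the instance's score and the baseline's score, because the interaction term is counted under both income and tenure. This is one reason more elaborate attribution methods exist, and the choice of baseline is itself an assumption that changes the explanation.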

2. Challenges

Explainable AI (XAI) [15] has emerged as a critical aspect of AI development. As Artificial Intelligence (AI) continues to penetrate various sectors, the demand for transparency and interpretability in decision-making systems increases. However, the path to attaining transparency is not devoid of obstacles. Some challenges that developers, researchers, and industries face when integrating XAI principles into decision-making systems are shown in Figure 3.

1. Complexity of AI Models: The challenges in creating interpretable explanations arise from the inherent complexity of advanced AI models, including deep neural networks. When model-based methods fail to provide accurate predictions, practitioners seek more accurate models. Therefore, the development of new modeling methods that preserve interpretability while improving prediction accuracy can enhance the utilization of model-based approaches [16].

2. Balancing Accuracy and Simplicity: There is a trade-off between building models that accurately capture intricate patterns and keeping them simple enough to provide understandable explanations.

3. Context Sensitivity: Contextual variations in data make it difficult to create universally interpretable explanations, especially in dynamic environments.

4. Scalability and Performance Impact: The integration of XAI into decision-making systems has a profound influence on their scalability and performance, specifically in terms of the computational resources needed to generate explanations. When confronted with a large volume of cases that demand prediction and explanation, scalability emerges as a critical challenge [17].

5. User Understanding: End-users may struggle to comprehend complex AI explanations, which can lead to misinterpretation or distrust.

Figure 3. Challenges of XAI
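The trade-off between accuracy and simplicity can be made concrete with a global surrogate: a deliberately simple model fitted to imitate the decisions of a complex one, scored by how often the two agree (its fidelity). The sketch below rests on assumptions chosen for illustration: the `black_box` rule, the integer feature grid, and the single-threshold surrogate are all hypothetical.

```python
def black_box(x, y):
    """Hypothetical opaque classifier that combines two features (illustrative)."""
    return 1 if 2 * x + y > 20 else 0


def fit_stump(points, labels):
    """Find the best rule of the form 'predict 1 if feature > threshold'.

    Returns (feature_index, threshold, fidelity), where fidelity is the
    fraction of points on which the rule agrees with the given labels.
    """
    best = (None, None, -1.0)
    for feature in range(2):
        for threshold in range(0, 21):
            agreements = sum(
                1
                for point, label in zip(points, labels)
                if (1 if point[feature] > threshold else 0) == label
            )
            fidelity = agreements / len(points)
            if fidelity > best[2]:
                best = (feature, threshold, fidelity)
    return best


# Evaluate the black box on every point of a 21 x 21 integer grid.
points = [(x, y) for x in range(21) for y in range(21)]
labels = [black_box(x, y) for x, y in points]

feature, threshold, fidelity = fit_stump(points, labels)
print(f"surrogate: predict 1 if feature {feature} > {threshold}")
print(f"fidelity to the black box: {fidelity:.3f}")
```

Here the one-line surrogate is easy to read but reproduces only about 88% of the black box's decisions. Closing that gap requires a more complex surrogate, which in turn is harder to explain.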

3. Applications

Explainable AI (XAI) plays an essential role in decision-making systems, fostering transparency, accountability, and user trust. Its importance reaches beyond any single domain and encompasses industries where automated systems are highly prevalent, particularly lending and financial services. Because XAI can be applied across sectors, its core principles remain constant even though specific applications differ, and decision-making processes are enhanced in diverse organizational settings [18]. As shown in Figure 4, the integration of XAI has emerged as a transformative force in enhancing transparency, trust, and accountability within decision-making systems in various industries. Across these domains, XAI serves as a cornerstone that prevents decision-making systems from being perceived as opaque “black boxes,” thereby promoting ethical and accountable use of AI technologies. A few industries are discussed below:

- Healthcare diagnostics: XAI plays a pivotal role by elucidating the rationale behind specific diagnoses. This helps healthcare professionals validate AI-generated recommendations and establishes trust between clinicians and AI systems.

- Finance and risk assessment: XAI offers clarity by providing understandable explanations for credit-related decisions, contributing to compliance, customer satisfaction, and dispute resolution.

- Autonomous vehicles: XAI elucidates critical decision-making processes during emergencies, thereby enhancing passenger safety and facilitating regulatory compliance.

- Legal systems: XAI applied to case-outcome prediction and contract analysis fosters interpretability, supporting fairness and accountability in legal proceedings.

- Insurance: XAI proves instrumental in building trust in, understanding, and auditing AI systems, resulting in improved customer acquisition, conversion rates, and efficiency, and in fewer fraudulent claims.

- Defense: XAI is used to elucidate AI system choices in military training applications, mitigating ethical concerns and enhancing decision justification.

Figure 4. Applications of Explainable AI (XAI)
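For the credit-decision use case mentioned above, one way an understandable explanation can be produced is by using a transparent rule-based model that returns the rule that fired along with the outcome. The sketch below is illustrative only: the field names, thresholds, and outcomes are hypothetical and do not reflect any real lending policy or regulation.

```python
# Ordered decision rules: (human-readable reason, condition, outcome).
# All thresholds are made-up values for illustration.
RULES = [
    (
        "debt-to-income ratio above 0.45",
        lambda a: a["debt_to_income"] > 0.45,
        "decline",
    ),
    (
        "two or more missed payments in the last 12 months",
        lambda a: a["missed_payments"] >= 2,
        "decline",
    ),
    (
        "credit history shorter than 1 year",
        lambda a: a["history_years"] < 1,
        "refer to manual review",
    ),
]
DEFAULT_OUTCOME = "approve"
DEFAULT_REASON = "no decline or referral rule matched"


def decide(applicant):
    """Return (outcome, reason) using the first rule whose condition holds."""
    for reason, condition, outcome in RULES:
        if condition(applicant):
            return outcome, reason
    return DEFAULT_OUTCOME, DEFAULT_REASON


applicant_a = {"debt_to_income": 0.52, "missed_payments": 0, "history_years": 6}
applicant_b = {"debt_to_income": 0.20, "missed_payments": 0, "history_years": 3}

decision_a = decide(applicant_a)
decision_b = decide(applicant_b)
print(decision_a)
print(decision_b)
```

Because the explanation is the rule itself, the same text can be shown to a customer, logged for an audit, or cited in a dispute. The cost is that a short rule list may be less accurate than a more complex model.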

4. Conclusion

The incorporation of Explainable AI (XAI) emerges as a transformative solution for addressing the interpretability challenge prevalent in decision-making systems across diverse industries. The rapid integration of artificial intelligence (AI) in critical domains necessitates a nuanced understanding of AI decision rationale to ensure transparency, trust, and accountability [19]. This research underscores the importance of XAI in demystifying the intricate mechanisms of complex AI algorithms by providing clear and comprehensible explanations for the decisions they make. Despite challenges such as model complexity and the trade-off between accuracy and simplicity, XAI serves as a crucial bridge between the complexities of AI models and the human need for understanding. By facilitating the comprehension of AI outputs, XAI enhances accountability and transparency. This article has provided an overview of XAI, including its applications in healthcare, finance, autonomous vehicles, and other sectors, underscoring its versatility and impact. In essence, XAI acts as a cornerstone in preventing decision-making systems from being perceived as opaque, fostering ethical, accountable, and responsible use of AI technologies in shaping our future [20]. The article contributes to the ongoing discourse on the importance of Explainable AI in ensuring transparency and trust in decision-making systems. As AI continues to shape various aspects of our lives, understanding and addressing the interpretability challenge is essential for fostering responsible and ethical AI practices.

5. References

1. Dwivedi, R., Dave, D., Naik, H., Singhal, S., Omer, R., Patel, P., … & Ranjan, R. (2023). Explainable AI (XAI): Core ideas, techniques, and solutions. ACM Computing Surveys, 55(9), 1-33.

2. Kurniawan, D., & Pujiastuti, L. (2023). Explainable artificial intelligence (XAI) for trustworthy decision-making. Jurnal Teknik Informatika CIT Medicom, 15(5), 240-246.

3. Chamola, V., Hassija, V., Sulthana, A. R., Ghosh, D., Dhingra, D., & Sikdar, B. (2023). A Review of Trustworthy and Explainable Artificial Intelligence (XAI). IEEE Access, 11, 78994-79015. doi: 10.1109/ACCESS.2023.3294569.

4. Saeed, W., & Omlin, C. (2023). Explainable AI (XAI): A systematic meta-survey of current challenges and future opportunities. Knowledge-Based Systems, 263, 110273.

5. Hassija, V., Chamola, V., Mahapatra, A., Singal, A., Goel, D., Huang, K., … & Hussain, A. (2023). Interpreting black-box models: a review on explainable artificial intelligence. Cognitive Computation, 1-30.

6. Kaur, P., Singh, S. K., Singh, I., & Kumar, S. (2021, December). Exploring Convolutional Neural Network in Computer Vision-based Image Classification. In International Conference on Smart Systems and Advanced Computing (Syscom-2021).

7. Aggarwal, K., Singh, S. K., Chopra, M., Kumar, S., & Colace, F. (2022). Deep learning in robotics for strengthening industry 4.0.: opportunities, challenges and future directions. Robotics and AI for Cybersecurity and Critical Infrastructure in Smart Cities, 1-19.

8. Singh, I., Singh, S. K., Singh, R., & Kumar, S. (2022, May). Efficient loop unrolling factor prediction algorithm using machine learning models. In 2022 3rd International Conference for Emerging Technology (INCET) (pp. 1-8). IEEE.

9. Kaur, P., Singh, S. K., Singh, I., & Kumar, S. (2021, December). Exploring Convolutional Neural Network in Computer Vision-based Image Classification. In International Conference on Smart Systems and Advanced Computing (Syscom-2021).

10. Setia, H., Chhabra, A., Singh, S. K., Kumar, S., Sharma, S., Arya, V., … & Wu, J. (2024). Securing the Road Ahead: Machine Learning-Driven DDoS Attack Detection in VANET Cloud Environments. Cyber Security and Applications, 100037.

11. Mengi, G., Singh, S. K., Kumar, S., Mahto, D., & Sharma, A. (2021, September). Automated Machine Learning (AutoML): The Future of Computational Intelligence. In International Conference on Cyber Security, Privacy and Networking (pp. 309-317). Cham: Springer International Publishing.

12. Peñalvo, F. J. G., Maan, T., Singh, S. K., Kumar, S., Arya, V., Chui, K. T., & Singh, G. P. (2022). Sustainable Stock Market Prediction Framework Using Machine Learning Models. International Journal of Software Science and Computational Intelligence (IJSSCI), 14(1), 1-15.

13. Gupta, A., Singh, S. K., & Chopra, M. (2023). Impact of Artificial Intelligence and the Internet of Things in Modern Times and Hereafter: An Investigative Analysis. In Advanced Computer Science Applications (pp. 157-173). Apple Academic Press.

14. Peñalvo, F. J. G., Maan, T., Singh, S. K., Kumar, S., Arya, V., Chui, K. T., & Singh, G. P. (2022). Sustainable Stock Market Prediction Framework Using Machine Learning Models. International Journal of Software Science and Computational Intelligence (IJSSCI), 14(1), 1-15.

15. Singh, I., Singh, S. K., Singh, R., & Kumar, S. (2022, May). Efficient loop unrolling factor prediction algorithm using machine learning models. In 2022 3rd International Conference for Emerging Technology (INCET) (pp. 1-8). IEEE.

16. Chopra, M., Singh, S. K., Aggarwal, K., & Gupta, A. (2022). Predicting catastrophic events using machine learning models for natural language processing. In Data mining approaches for big data and sentiment analysis in social media (pp. 223-243). IGI Global.

17. Dwivedi, R. K. (2022). Density-Based Machine Learning Scheme for Outlier Detection in Smart Forest Fire Monitoring Sensor Cloud. International Journal of Cloud Applications and Computing (IJCAC), 12(1), 1-16.

18. Dwivedi, R. K., Kumar, R., & Buyya, R. (2021). Gaussian Distribution-Based Machine Learning Scheme for Anomaly Detection in Healthcare Sensor Cloud. International Journal of Cloud Applications and Computing (IJCAC), 11(1), 52-72.

19. Kumar, J., Gupta, D. L., & Umrao, L. S. (2022). Fault-Tolerant Algorithm for Software Prediction Using Machine Learning Techniques. International Journal of Software Science and Computational Intelligence (IJSSCI), 14(1), 1-18.

20. Costa-Climent, R. (2022). The Role of Machine Learning in Creating and Capturing Value. International Journal of Software Science and Computational Intelligence (IJSSCI), 14(1), 1-19.

Cite As:

Jaggi S, Sharma A, Jonathan S, and Mariandani RC (2024) Exploring XAI for Trustworthy Decision Making, Insights2Techinfo, pp.1