By: Himanshu Tiwari, International Center for AI and Cyber Security Research and Innovations (CCRI), Asia University, Taiwan, nomails1337@gmail.com

Federated learning has emerged as a promising paradigm for training machine learning models while preserving data privacy. It lets multiple parties collaborate on model training without sharing their raw data. However, like any emerging technology, federated learning is not immune to security risks. This article explores the security challenges associated with federated learning and presents potential solutions to mitigate those risks. We examine the threat landscape, privacy preservation techniques, and best practices for enhancing the security of federated learning systems.

1. Introduction

Federated learning is a decentralized machine learning approach that enables model training across distributed devices or servers while keeping the underlying data localized. It offers benefits in data privacy, reduced data transfer, and the ability to collaborate on machine learning tasks without exposing sensitive data. However, federated learning is not without security concerns. This article offers an in-depth analysis of the security challenges associated with federated learning and gives insights into potential solutions[1].

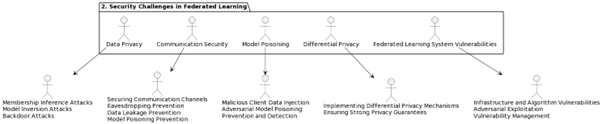
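To make the idea of training without sharing raw data more concrete, the following is a minimal sketch of weighted model averaging, the aggregation step commonly associated with federated averaging. The function name `federated_average` and the toy client values are assumptions made for illustration; the article does not prescribe a specific algorithm.

```python
from typing import List, Sequence


def federated_average(
    client_weights: Sequence[Sequence[float]],
    client_sizes: Sequence[int],
) -> List[float]:
    """Average client weight vectors, weighted by local dataset size."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one dataset size per client update")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total number of samples must be positive")
    dim = len(client_weights[0])
    averaged = [0.0] * dim
    for weights, size in zip(client_weights, client_sizes):
        if len(weights) != dim:
            raise ValueError("all client vectors must have the same length")
        for i, w in enumerate(weights):
            # Each client contributes in proportion to its share of the data.
            averaged[i] += w * size / total
    return averaged


# Two hypothetical clients: only weights and sample counts reach the server,
# never the local training data.
global_weights = federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3])
```

In this sketch the server sees model parameters only, which is exactly why the attacks discussed in the next section target the updates themselves.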

2. Security Challenges in Federated Learning

2.1. Data Privacy

One of the primary motivations for adopting federated learning is preserving data privacy. However, several threats remain, including membership inference attacks, model inversion attacks, and backdoor attacks. These threats could compromise the privacy of participants' data.

2.2. Communication Security

In federated learning, data is exchanged between clients and a central server, or among the clients themselves. Securing these communication channels is essential to prevent eavesdropping, data leakage, and model poisoning attacks.

2.3. Model Poisoning

Malicious clients can inject incorrect or biased data into the training process, leading to adversarial model poisoning. Preventing and detecting such attacks is critical to preserving model integrity.

2.4. Differential Privacy

Differential privacy mechanisms, commonly used to protect individual data points, are not always straightforward to implement in federated learning settings. Ensuring strong differential privacy guarantees is a challenge.
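One common building block for differential privacy in this setting is to clip each client update to a bounded L2 norm and then add Gaussian noise scaled to that bound. The sketch below illustrates only that step. The names `clip_update` and `privatize_update` and the parameters `clip_norm` and `noise_multiplier` are assumptions for illustration, and the code does not compute the resulting privacy budget, which is where much of the practical difficulty lies.

```python
import math
import random
from typing import List, Optional, Sequence


def clip_update(update: Sequence[float], clip_norm: float) -> List[float]:
    """Scale the update down so its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(x * x for x in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [x * scale for x in update]


def privatize_update(
    update: Sequence[float],
    clip_norm: float,
    noise_multiplier: float,
    rng: Optional[random.Random] = None,
) -> List[float]:
    """Clip the update, then add Gaussian noise proportional to the clip bound."""
    if rng is None:
        rng = random.Random()
    clipped = clip_update(update, clip_norm)
    sigma = noise_multiplier * clip_norm  # noise standard deviation
    return [x + rng.gauss(0.0, sigma) for x in clipped]


# Hypothetical client update with L2 norm 5.0, clipped to norm 1.0 before noising.
noisy = privatize_update([3.0, 4.0], clip_norm=1.0, noise_multiplier=1.1)
```

Choosing `clip_norm` and `noise_multiplier` is a trade-off: more noise strengthens the privacy guarantee but degrades model accuracy.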

2.5. Federated Learning System Vulnerabilities

The infrastructure and algorithms used in federated learning systems can have vulnerabilities that adversaries may exploit. Vulnerability management is a crucial aspect of securing federated learning.

3. Security Solutions

3.1. Secure Aggregation

Secure aggregation protocols, such as secure multi-party computation (MPC) and homomorphic encryption, can be applied for privacy-preserving model aggregation[3].
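As an illustration of the MPC-style idea, the sketch below uses pairwise additive masking: each pair of clients shares a random mask that one adds and the other subtracts, so the masks cancel in the sum and the server learns only the aggregate. This is a simplified toy, not a full protocol. The helper names, the modulus, and the central generation of masks are assumptions for illustration; a real deployment would derive masks through pairwise key agreement and handle client dropouts.

```python
import secrets
from typing import Dict, List, Tuple

MODULUS = 2**32  # assumed modulus; updates are integer (fixed-point) vectors

MaskTable = Dict[Tuple[int, int], List[int]]


def pairwise_masks(num_clients: int, dim: int) -> MaskTable:
    """One random mask vector per client pair (i, j) with i < j."""
    return {
        (i, j): [secrets.randbelow(MODULUS) for _ in range(dim)]
        for i in range(num_clients)
        for j in range(i + 1, num_clients)
    }


def mask_update(client: int, update: List[int], masks: MaskTable) -> List[int]:
    """Add the masks where this client is the lower index, subtract where it is the higher."""
    masked = list(update)
    for (i, j), mask in masks.items():
        if client == i:
            masked = [(m + r) % MODULUS for m, r in zip(masked, mask)]
        elif client == j:
            masked = [(m - r) % MODULUS for m, r in zip(masked, mask)]
    return masked


def aggregate(masked_updates: List[List[int]]) -> List[int]:
    """Sum masked updates; every pairwise mask cancels modulo MODULUS."""
    dim = len(masked_updates[0])
    return [sum(u[k] for u in masked_updates) % MODULUS for k in range(dim)]


# Three hypothetical clients with small integer updates.
updates = [[1, 2, 3], [10, 20, 30], [100, 200, 300]]
masks = pairwise_masks(len(updates), dim=3)
masked = [mask_update(c, u, masks) for c, u in enumerate(updates)]
total = aggregate(masked)  # element-wise sum of the raw updates
```

Each individual masked vector looks random to the server, while `total` matches the sum of the unmasked updates.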

3.2. Federated Learning Frameworks

Using stable, well-maintained federated learning frameworks with built-in security features is a sensible way to address many security challenges. TensorFlow Federated and PySyft are examples of frameworks that offer security-enhanced options.

3.3. Secure Communication

Implementing secure communication protocols such as Transport Layer Security (TLS), or using Virtual Private Networks (VPNs), can protect data in transit during federated learning.
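As a small example of the TLS option, the sketch below builds a client-side TLS context with Python's standard `ssl` module that verifies the server certificate and refuses protocol versions older than TLS 1.2. The function name `make_client_tls_context` and the optional `ca_file` parameter are assumptions for illustration; how the context is attached to a transport depends on the framework in use.

```python
import ssl
from typing import Optional


def make_client_tls_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Build a TLS context for a client connecting to the aggregation server."""
    # The default context enables certificate verification and hostname checks.
    context = ssl.create_default_context(
        purpose=ssl.Purpose.SERVER_AUTH, cafile=ca_file
    )
    # Refuse legacy protocol versions.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = make_client_tls_context()
```

A client would then wrap its socket with `context.wrap_socket(sock, server_hostname=...)` before sending any model update.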

3.4. Anomaly Detection

Deploying anomaly detection techniques to identify malicious clients or model poisoning can assist in maintaining model integrity.
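One simple form of such detection is to flag clients whose update magnitude is far from what the other clients send. The sketch below uses a modified z-score based on the median and the median absolute deviation (MAD) of the update norms. The function name, the threshold of 3.5, and the toy updates are assumptions for illustration; a norm-based check will not catch carefully crafted attacks that keep the update norm inconspicuous.

```python
import math
import statistics
from typing import List, Sequence


def l2_norm(update: Sequence[float]) -> float:
    return math.sqrt(sum(x * x for x in update))


def flag_suspicious_clients(
    updates: Sequence[Sequence[float]], threshold: float = 3.5
) -> List[int]:
    """Return indices of clients whose update norm is an outlier."""
    norms = [l2_norm(u) for u in updates]
    median = statistics.median(norms)
    mad = statistics.median([abs(n - median) for n in norms])
    if mad == 0:
        return []  # no spread to compare against in this simple sketch
    flagged = []
    for index, norm in enumerate(norms):
        # 0.6745 rescales the MAD so the score is comparable to a z-score.
        score = 0.6745 * abs(norm - median) / mad
        if score > threshold:
            flagged.append(index)
    return flagged


# Four similar hypothetical updates and one with a much larger magnitude.
updates = [
    [0.10, 0.20],
    [0.12, 0.18],
    [0.09, 0.21],
    [0.11, 0.19],
    [5.00, -4.00],
]
suspicious = flag_suspicious_clients(updates)
```

Flagged clients can be excluded from the current round or inspected further before their updates are aggregated.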

3.5. Regular Security Audits

Regular security audits of federated learning systems can uncover vulnerabilities and improve overall system security.

4. Conclusion

Federated learning offers a promising approach to collaborative machine learning with strong data privacy preservation. Nevertheless, security remains a critical concern. Understanding the security challenges and implementing appropriate solutions is essential to making federated learning a secure and trustworthy technology. By addressing issues related to data privacy, communication security, model poisoning, and more, organizations can harness the power of federated learning while safeguarding sensitive data and model integrity. As federated learning evolves, the research community and industry stakeholders must work together to build a secure foundation for this groundbreaking technology.

References

- Zhang C, Xie Y, Bai H, Yu B, Li W, Gao Y. A survey on federated learning. Knowledge-Based Systems. 2021 Mar 15;216:106775.

- Mammen PM. Federated learning: Opportunities and challenges. arXiv preprint arXiv:2101.05428. 2021 Jan 14.

- Li T, Sahu AK, Talwalkar A, Smith V. Federated learning: Challenges, methods, and future directions. IEEE signal processing magazine. 2020 May 1;37(3):50-60.

- Bhatti MH, Khan J, Khan MUG, Iqbal R, Aloqaily M, Jararweh Y, Gupta B. Soft computing-based EEG classification by optimal feature selection and neural networks. IEEE Transactions on Industrial Informatics. 2019;15(10):5747-5754.

- Sahoo SR, Gupta BB. Hybrid approach for detection of malicious profiles in twitter. Computers & Electrical Engineering. 2019;76:65-81.

- Gupta BB, Yadav K, Razzak I, Psannis K, Castiglione A, Chang X. A novel approach for phishing URLs detection using lexical based machine learning in a real-time environment. Computer Communications. 2021;175:47-57.

Cite As:

Tiwari H. (2023) Federated Learning Security: Challenges and Solutions, Insights2Techinfo, pp.1