By: Vanna Karthik; Vel Tech University, Chennai, India

Abstract

Generative AI has changed how users interact with technology: chatbots can now generate realistic text, images, and code. These advances bring many benefits, but they also open new avenues for crime. Cybercriminals can use AI chatbots to run sophisticated phishing campaigns, producing convincing, personalized messages that slip past security controls and exploit human weaknesses. This document examines the intersection of generative AI and phishing: how chatbots are used as instruments of cybercrime, the risks they pose, and the measures available to counter them.

Introduction

Cyber attackers have long used phishing, a form of social engineering, to obtain sensitive information such as passwords, credit card numbers, and personal data. In the past, phishing attempts could often be spotted through grammatical mistakes, generic wording, and suspicious links. The release of OpenAI's GPT and other large language models (LLMs) has transformed phishing [1]. These AI tools produce polished, context-aware text that makes it much harder for individuals and organizations to tell genuine communications from fake ones.

Cybercriminals have begun turning chatbots, originally built to improve customer service by streamlining interactions, toward malicious ends. Automation lets attackers generate phishing emails, messages, and fake websites, and run fraud attempts against victims at large scale while still targeting them individually. This report explores how this emerging threat operates and how it directly affects cybersecurity.

The Rise of Generative AI in Phishing

Generative AI models are trained on large datasets, which lets them learn and imitate the patterns of human language. This adaptability has made them valuable for legitimate tasks such as content creation, customer support, and language translation. The same capabilities that make these models useful, however, make them hazardous in the hands of unscrupulous actors.

1. Personalization at Scale: One of generative AI's most powerful features is its ability to produce personalized content. Criminals use chatbots to craft individualized phishing messages that include details about the target, such as the victim's name, job role, and recent activity. Tailored messages are more likely to succeed because recipients tend to trust information that seems personal to them [2].

2. Overcoming Language Barriers: Traditional phishing often failed to reach non-English speakers because of language barriers [3]. Generative AI removes this obstacle by producing fluent, grammatically correct text in many languages, which gives attackers access to targets worldwide.

3. Dynamic Content Generation: Unlike fixed phishing templates, generative AI produces content that adapts as the interaction unfolds. A chatbot can answer a victim's questions naturally and keep the conversation going until trust develops, preparing the victim for malware delivery.

How Chatbots Are Weaponized [4]

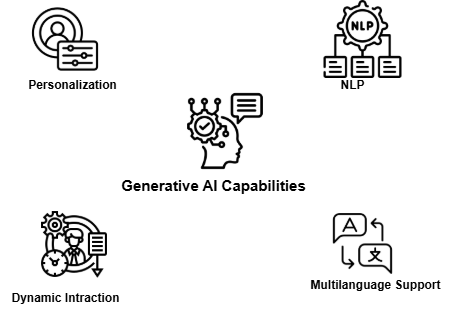

Phishing chatbots rely on several techniques that exploit the capabilities of generative AI. Notable methods include the following:

1. Phishing Email Generation: Chatbots can automatically produce phishing emails that pose as legitimate messages from banks, government alerts, or notifications from popular online platforms. These emails pressure victims to act quickly, for example by demanding that they verify account data or update authorization details, because the technique depends on immediate compliance.

2. Impersonation Attacks: Attackers use generative AI platforms to impersonate trusted individuals such as colleagues, friends, and family members. By analyzing public information, they can create fake messages that imitate communications from trusted contacts, which makes the deception more likely to succeed.

3. Fake Customer Support: Cybercriminals deploy AI-powered chatbots on fake websites and messaging applications, where they pose as customer service agents. Once engaged, these artificial agents extract private data from victims or trick them into installing malicious software.

4. Deepfake Audio and Video: Although text-based phishing remains the most common type, generative AI is also used to produce deepfake audio and video. For instance, AI can create a recording that sounds like a CEO instructing an employee to transfer money or reveal private information.
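From the defender's side, the impersonation attacks in items 2 and 4 often arrive as email that borrows a trusted person's name. One common filtering heuristic (an illustration added here, not a method from the cited sources) flags a message whose display name matches a protected internal person while the sending address is outside the organization's domain. The following is a minimal Python sketch; the domain `example.com` and the names in `PROTECTED_NAMES` are hypothetical placeholders.

```python
from email.utils import parseaddr

# Hypothetical values for illustration: the organization's own mail domain and
# the display names of people attackers are likely to impersonate.
INTERNAL_DOMAIN = "example.com"
PROTECTED_NAMES = {"priya raman", "daniel ortiz"}


def normalize_name(name: str) -> str:
    """Lower-case a display name and collapse repeated whitespace."""
    return " ".join(name.lower().split())


def is_display_name_spoof(from_header: str,
                          internal_domain: str = INTERNAL_DOMAIN,
                          protected_names: set = PROTECTED_NAMES) -> bool:
    """Flag a From header that uses a protected person's name but an outside domain."""
    name, address = parseaddr(from_header)          # split "Name <addr>" into parts
    domain = address.rpartition("@")[2].lower()     # text after the last "@"
    return normalize_name(name) in protected_names and domain != internal_domain.lower()


if __name__ == "__main__":
    for header in ["Priya Raman <priya.raman@example.com>",
                   '"Priya  Raman" <ceo.office@freemail.test>',
                   "Newsletter <news@shop.test>"]:
        print(f"{header!r}: spoof={is_display_name_spoof(header)}")
```

This check is deliberately narrow: it will not catch a compromised internal account or a lookalike domain, so in practice it would be one signal among several.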

Mitigating the Threat [5]

Reducing the weaponization of chatbots and generative AI requires a combination of technological solutions, user education about these threats, and policy and regulation. Potential countermeasures include:

1. AI-Powered Detection Systems:

The same generative AI technology that attackers use for phishing can be deployed defensively to scan messages and block malicious content before it reaches its targets. AI-based security systems examine communication patterns to find anomalies that indicate phishing activity.
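The article does not specify which model such systems use. As a hedged illustration of learning communication patterns from labeled examples, the sketch below implements a classical multinomial naive Bayes text classifier in plain Python, swapped in here as a simple stand-in for the unspecified AI-based security systems. The six training messages and the `phish`/`ham` labels are invented toy data, not a real corpus.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list:
    """Lower-case the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesFilter:
    """Multinomial naive Bayes with Laplace (add-alpha) smoothing over word counts."""

    def __init__(self, alpha: float = 1.0):
        self.alpha = alpha            # smoothing for words unseen in a class
        self.word_counts = {}         # label -> Counter of token frequencies
        self.doc_counts = Counter()   # label -> number of training messages
        self.vocab = set()

    def fit(self, texts, labels):
        for text, label in zip(texts, labels):
            tokens = tokenize(text)
            self.word_counts.setdefault(label, Counter()).update(tokens)
            self.doc_counts[label] += 1
            self.vocab.update(tokens)
        return self

    def log_score(self, text: str, label: str) -> float:
        """log P(label) plus log P(token | label) for each token seen in training."""
        score = math.log(self.doc_counts[label] / sum(self.doc_counts.values()))
        counts = self.word_counts[label]
        denominator = sum(counts.values()) + self.alpha * len(self.vocab)
        for token in tokenize(text):
            if token in self.vocab:   # ignore words never seen in training
                score += math.log((counts[token] + self.alpha) / denominator)
        return score

    def predict(self, text: str) -> str:
        return max(self.word_counts, key=lambda label: self.log_score(text, label))


# Invented toy examples for illustration only; not a real dataset.
TRAIN = [
    ("Urgent: verify your account password now or it will be suspended", "phish"),
    ("Your bank account is locked, click the link to confirm your details immediately", "phish"),
    ("Security alert: update your payment information today", "phish"),
    ("Meeting moved to 3pm, agenda attached", "ham"),
    ("Lunch on Friday with the team?", "ham"),
    ("Here are the slides from yesterday's project review", "ham"),
]

clf = NaiveBayesFilter().fit([text for text, _ in TRAIN], [label for _, label in TRAIN])

if __name__ == "__main__":
    print(clf.predict("Please verify your password immediately to avoid account suspension"))
    print(clf.predict("Can we move the project review to Friday?"))
```

On this toy data the first message scores as `phish` and the second as `ham`. A production filter would train on a large labeled corpus and combine text features with other signals such as sender reputation, headers, and URLs.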

2. User Training and Awareness:

Educating users about the dangers of AI-powered phishing is central to protecting them. Training programs should teach employees to verify that a message is authentic before acting on it, and warn them against following unknown links or opening suspicious attachments in their email.
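One concrete habit such training often teaches is to hover over a link and compare the address it displays with where it actually leads. The same check can be automated. The sketch below uses only Python's standard library (`html.parser` and `urllib.parse`) to flag links whose visible text looks like a web address but whose real target is on a different host; the function names and the `.example`/`.test` domains are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class LinkCollector(HTMLParser):
    """Collect (href, visible text) pairs for every <a> tag in an HTML email body."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href") or ""
            self._text = []

    def handle_data(self, data):
        if self._href is not None:    # only record text inside an open <a> tag
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def host_of(url: str) -> str:
    """Return the lower-cased host; bare text like 'www.bank.example' gets a scheme first."""
    if "://" not in url:
        url = "http://" + url
    return (urlparse(url).hostname or "").lower()


def mismatched_links(html_body: str) -> list:
    """Return (shown text, real href) pairs whose visible address differs from the target."""
    collector = LinkCollector()
    collector.feed(html_body)
    collector.close()
    flagged = []
    for href, text in collector.links:
        # Only compare when the visible text itself looks like a web address.
        if "." in text and " " not in text:
            shown, actual = host_of(text), host_of(href)
            if shown and actual and shown != actual:
                flagged.append((text, href))
    return flagged


if __name__ == "__main__":
    body = ('<p>Log in at <a href="http://login.bank.example.attacker.test/verify">'
            'www.bank.example</a> or read <a href="https://www.bank.example/help">'
            'our help page</a>.</p>')
    print(mismatched_links(body))
```

Exact host comparison is a simplification: a legitimate message may show `bank.example` while linking to `www.bank.example`, so a real tool would need rules for subdomains and redirect services.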

3. Multi-Factor Authentication (MFA):

Enforcing an extra verification step through MFA reduces the effectiveness of phishing attacks. Even if attackers obtain a victim's login credentials, they still cannot access the account without the second factor.
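The article does not say which second factor to use. One widely deployed option is the time-based one-time password (TOTP) defined in RFC 6238, which authenticator apps compute from a shared secret and the current time. The sketch below implements it with Python's standard library; `RFC_TEST_SECRET` is the ASCII test key from the RFC appendices rather than a value to use in production, and `verify_totp` with its one-step drift window is an illustrative helper, not a standard API.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional

RFC_TEST_SECRET = b"12345678901234567890"  # test key from RFC 4226 / RFC 6238


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F                             # dynamic truncation offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, for_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP with counter = floor(time / step)."""
    if for_time is None:
        for_time = time.time()
    return hotp(secret, int(for_time // step), digits)


def verify_totp(secret: bytes, submitted: str, for_time: Optional[float] = None,
                step: int = 30, digits: int = 6, window: int = 1) -> bool:
    """Accept the code for the current step or up to `window` steps either side."""
    if for_time is None:
        for_time = time.time()
    counter = int(for_time // step)
    for drift in range(-window, window + 1):
        if counter + drift < 0:
            continue
        expected = hotp(secret, counter + drift, digits)
        # Constant-time comparison on bytes avoids leaking timing information.
        if hmac.compare_digest(expected.encode(), submitted.encode()):
            return True
    return False


if __name__ == "__main__":
    code = totp(RFC_TEST_SECRET)
    print(code, verify_totp(RFC_TEST_SECRET, code))
```

Because a real-time phishing page can relay a one-time code to the genuine site, TOTP reduces rather than eliminates the risk; phishing-resistant factors such as FIDO2/WebAuthn security keys, whose credentials are bound to the site's origin, close that gap.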

4. Regulatory Measures:

Governments and industry groups must establish clear regulations aimed at stopping the misuse of generative AI. Governments should prohibit both the development of AI tools suitable for weaponization and their distribution to end users.

Conclusion

Generative AI has given cybercriminals a foundation for a new level of phishing, one that enables complex, targeted attacks. Weaponized chatbots pose a critical danger to individuals and organizations, and measures to reduce the damage they cause are needed immediately. Combining advanced detection systems, user education programs, and government guidelines can reduce AI-powered phishing threats while allowing generative AI to keep its beneficial functions. Because the technology continues to advance, cybersecurity will require continuous innovation and constant vigilance to stay ahead of cybercriminals.

References

[1] I. Hasanov, S. Virtanen, A. Hakkala, and J. Isoaho, “Application of Large Language Models in Cybersecurity: A Systematic Literature Review,” IEEE Access, vol. 12, pp. 176751–176778, 2024, doi: 10.1109/ACCESS.2024.3505983.

[2] M. Schmitt and I. Flechais, “Digital deception: generative artificial intelligence in social engineering and phishing,” Artif. Intell. Rev., vol. 57, no. 12, p. 324, Oct. 2024, doi: 10.1007/s10462-024-10973-2.

[3] A. A. Hasegawa, N. Yamashita, M. Akiyama, and T. Mori, “Why They Ignore English Emails: The Challenges of Non-Native Speakers in Identifying Phishing Emails.”

[4] S. S. Roy, P. Thota, K. V. Naragam, and S. Nilizadeh, “From Chatbots to PhishBots? -- Preventing Phishing scams created using ChatGPT, Google Bard and Claude,” arXiv:2310.19181, Mar. 10, 2024, doi: 10.48550/arXiv.2310.19181.

[5] S. Neupane, I. A. Fernandez, S. Mittal, and S. Rahimi, “Impacts and Risk of Generative AI Technology on Cyber Defense,” arXiv:2306.13033, Jun. 22, 2023, doi: 10.48550/arXiv.2306.13033.

[6] M. Rahaman, P. Pappachan, S. M. Orozco, S. Bansal, …, “AI Safety and Security,” in Challenges in Large Language Model Development …, 2024.

[7] A. Sedik, M. Hammad, F. E. Abd El-Samie, B. B. Gupta, and A. A. Abd El-Latif, “Efficient deep learning approach for augmented detection of Coronavirus disease,” Neural Comput. Appl., pp. 1–18, 2022.

[8] M. Deveci, D. Pamucar, I. Gokasar, M. Köppen, and B. B. Gupta, “Personal mobility in metaverse with autonomous vehicles using Q-rung orthopair fuzzy sets based OPA-RAFSI model,” IEEE Trans. Intell. Transp. Syst., vol. 24, no. 12, pp. 15642–15651, 2022.

[9] L. Lv, Z. Wu, L. Zhang, B. B. Gupta, and Z. Tian, “An edge-AI based forecasting approach for improving smart microgrid efficiency,” IEEE Trans. Ind. Informat., vol. 18, no. 11, pp. 7946–7954, 2022.

[10] A. S. Kasa, “AI Strategies for Phishing Email Detection,” Insights2Techinfo, p. 1, 2024.

Cite As

Karthik V. (2025) Generative AI and Phishing: How Chatbots are Being Weaponized, Insights2Techinfo, pp.1