By: Chitwan Gupta¹ & Aditi Sharma²

¹,²Department of CSE, Chandigarh College of Engineering and Technology, Chandigarh, India

e-mail: ¹co22320@ccet.ac.in, ²lco22393@ccet.ac.in

Abstract

In an era dominated by data, Federated Learning has emerged as a powerful pillar for preserving individual privacy. In Federated Learning, each client trains a model on its own data locally and sends only the resulting model to the server. The server then combines these locally trained models, typically by averaging them, into a global (master) model. The raw data never leaves the clients' devices; only model parameters or updates are transmitted. For additional security, these updates can be protected, for example by encrypting them or adding random noise, so that others cannot reconstruct the underlying data. This article examines two popular tools for building privacy-preserving federated systems, PyTorch and TensorFlow Federated (TFF), and offers guidance on choosing the right tool for a given project. It also examines how federated learning can be combined with blockchain technology to support decentralized learning. Finally, it highlights the benefits of this combination and the challenges it faces.

Keywords: Federated Learning, Privacy-Preserving Machine Learning, Blockchain, PyTorch, TensorFlow Federated (TFF), Decentralized Learning, Security, Governance.

Introduction

In the digital epoch, where innovation is inexorably tied to the surging tide of data, Federated Learning [1] emerges not merely as a solution but as a vanguard defending individual privacy. It reflects a deeper shift that goes beyond algorithmic refinement and reaches the very ethos of how we handle the colossal reservoirs of data that shape our interconnected existence. Far beyond a mere technological leap, Federated Learning helps rebuild the ethical, trust-laden foundation on which our digital society rests. It introduces a novel modus operandi that enables collaborative improvement of machine learning [2] models across a network of decentralized data sources, so that insights and advances can be gained without exposing personal information. At its essence, Federated Learning reverses the traditional paradigm of data handling: instead of collecting data in one place, it dispatches the model to where the data resides, so raw data stays on the device that produced it. This approach not only strengthens the confidentiality of personal information but also gives individuals greater agency and control over their contributions to collective intelligence. Federated Learning is making its influence felt across many sectors, notably healthcare and banking, where improving predictive models must be carefully balanced against data privacy. Its reach also extends to any industry in which data privacy is equally paramount.
Federated Learning is not just a marvel of technical intricacy; it embodies a commitment to reshaping the delicate equilibrium between innovation and privacy. It charts a course toward a more responsible, sustainable, and human-centric digital future. In a world where the march of technology collides with the right to privacy, Federated Learning shows that progress and protection can coexist in harmony.

Federated Learning (FL) is a machine learning approach that enables model training across decentralized devices or servers while keeping the data localized. Cloud computing [3-5] can play a crucial role in federated learning by providing resources, storage, and infrastructure support. Cloud computing enhances the scalability, efficiency, and security of federated learning by providing a centralized infrastructure for model aggregation, parameter management, data storage, and overall system coordination. The combination of federated learning and cloud computing is particularly beneficial in scenarios where privacy, edge computing, and collaborative model training are essential.
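The aggregation step in this setup is commonly performed with Federated Averaging (FedAvg), in which the server averages the clients' models, weighting each by the number of examples it trained on. The article does not prescribe a particular aggregation rule, so the following plain-Python sketch assumes FedAvg and represents each model as a flat list of floats; `federated_average` is a hypothetical helper name, not part of any library.

```python
from typing import List, Sequence


def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """Combine client models into a global model (FedAvg-style weighted mean).

    Each client's parameters are weighted by its share of the total number
    of training examples, so larger clients influence the result more.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need at least one client and one size per client")
    total = sum(client_sizes)
    dim = len(client_weights[0])
    global_weights = [0.0] * dim
    for weights, n in zip(client_weights, client_sizes):
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            global_weights[i] += (n / total) * w
    return global_weights
```

For two clients holding 1 and 3 examples, with weights `[1.0, 2.0]` and `[3.0, 4.0]`, `federated_average` returns `[2.5, 3.5]`: the larger client pulls the global model toward its own parameters.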

Working of Federated Learning

In the intricate dance of Federated Learning on the blockchain [6], the process unfolds through a meticulously orchestrated series of steps, each playing a vital role in safeguarding privacy and fostering collaborative intelligence:

Smart Contracts as Architects: At the outset, the stage is set with the establishment of smart contracts on the blockchain. These digital architects codify the terms and conditions that govern the participation of entities within the Federated Learning network. Smart contracts serve as the bedrock upon which the collaborative journey is structured, ensuring transparency and adherence to predefined rules.
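Smart contracts on a real blockchain are written in contract languages such as Solidity and executed by the network. As a rough illustration only, the following Python class imitates how a contract might codify participation terms; the stake-based admission rule and the `min_stake` and `max_participants` parameters are assumptions, and `ParticipationContract` is a hypothetical name.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class ParticipationContract:
    """Plain-Python stand-in for on-chain participation rules (hypothetical).

    A real smart contract would run on the blockchain; this class only
    mimics how codified terms admit or reject participants.
    """
    min_stake: int
    max_participants: int
    stakes: Dict[str, int] = field(default_factory=dict)

    def register(self, participant_id: str, stake: int) -> bool:
        """Admit a participant only if every codified term is satisfied."""
        if participant_id in self.stakes:
            return False  # each participant may register only once
        if stake < self.min_stake:
            return False  # stake below the agreed minimum
        if len(self.stakes) >= self.max_participants:
            return False  # no free slots left in the network
        self.stakes[participant_id] = stake
        return True

    def is_member(self, participant_id: str) -> bool:
        return participant_id in self.stakes
```

Because the rules live in one place and apply identically to every caller, each participant can check in advance exactly what it takes to join, which is the transparency the paragraph above attributes to smart contracts.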

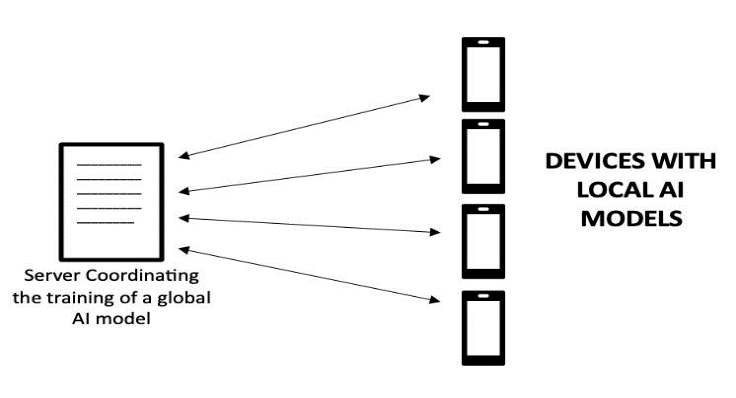

Local Data, Global Impact: Participants within the network, whether nodes or devices, retain custody of their data locally. Instead of engaging in the perilous exchange of raw data, they train machine learning models locally, within their own environments, as shown in Fig 1. This localized approach upholds data privacy and lays the foundation for collective intelligence to emerge without compromising individual data integrity.
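To make the local-training step concrete, here is a minimal sketch of what one participant might run. It assumes a linear model trained with full-batch gradient descent on mean squared error, far simpler than a real deployment, and `local_train` is a hypothetical helper. The point is the data flow: the client's `(features, target)` pairs stay inside the function, and only the trained weights are returned.

```python
from typing import List, Sequence, Tuple


def local_train(global_weights: Sequence[float],
                data: Sequence[Tuple[Sequence[float], float]],
                lr: float = 0.1, epochs: int = 50) -> List[float]:
    """Train a linear model on this client's data only and return new weights.

    Minimizes mean squared error with full-batch gradient descent, starting
    from the current global weights. The raw data is never returned.
    """
    w = list(global_weights)
    n = len(data)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            error = sum(wi * xi for wi, xi in zip(w, x)) - y
            for i, xi in enumerate(x):
                grad[i] += 2.0 * error * xi / n  # d(MSE)/d(w_i)
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w
```

With points drawn from y = 2x + 1 (using a constant 1.0 feature as the bias), enough epochs drive the weights toward `[2.0, 1.0]`; only that list would be sent to the aggregator.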

Proposing Evolution Through Model Updates: In the crucible of machine learning training, participants craft model update proposals, encapsulating the refinements and alterations to the global model. These proposals ripple through the network, a symphony of potential enhancements traversing the decentralized landscape. Each proposal bears the fingerprints of its creator’s local data, contributing to the richness and diversity of the collective intelligence.
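A model update proposal can be represented as the difference between a participant's locally trained weights and the current global weights, together with a cryptographic fingerprint of its contents. The sketch below assumes that representation and uses a SHA-256 digest as the fingerprint; `UpdateProposal` and `make_proposal` are hypothetical names, and sending weight deltas rather than full weights is a design choice assumed here, not specified by the article.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class UpdateProposal:
    client_id: str
    round_number: int
    delta: List[float]  # local weights minus global weights
    digest: str         # SHA-256 fingerprint of the proposal contents


def make_proposal(client_id: str, round_number: int,
                  global_weights: Sequence[float],
                  local_weights: Sequence[float]) -> UpdateProposal:
    """Package a client's change to the global model with a tamper-evident digest."""
    delta = [lw - gw for lw, gw in zip(local_weights, global_weights)]
    # Canonical JSON (sorted keys) so identical proposals always hash identically.
    payload = json.dumps(
        {"client": client_id, "round": round_number, "delta": delta},
        sort_keys=True,
    ).encode("utf-8")
    return UpdateProposal(client_id, round_number, delta,
                          hashlib.sha256(payload).hexdigest())
```

Any change to the client, round, or delta produces a different digest, so a proposal cannot be altered in transit without the mismatch being detectable.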

Consensus Forging Through Blockchain: The blockchain's consensus mechanism, such as Proof of Stake (PoS) or the more energy-intensive Proof of Work (PoW), then steps onto center stage. Protocols like these determine which transactions, here the model update proposals, are accepted into the shared ledger, while validation rules agreed on by the network decide whether each proposed update is acceptable. Through this consensus forging, participants reach agreement, ensuring that only validated and collectively accepted model updates find their way into the global ledger.
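Block-level consensus protocols such as PoS and PoW decide which transactions enter the ledger; whether a model update is acceptable is usually judged by application-level validation rules. As an assumption for illustration, the sketch below has each validator reject updates whose L2 norm exceeds a bound (a simple guard against outsized or malicious updates) and accepts a proposal only when a strict majority of validators approve. `norm_check` and `reaches_consensus` are hypothetical names.

```python
import math
from typing import Callable, List, Sequence

# A validator inspects a proposed update and votes to accept (True) or reject it.
Validator = Callable[[Sequence[float]], bool]


def norm_check(max_norm: float) -> Validator:
    """Build a validator that rejects updates whose L2 norm exceeds max_norm."""
    def validate(delta: Sequence[float]) -> bool:
        return math.sqrt(sum(d * d for d in delta)) <= max_norm
    return validate


def reaches_consensus(delta: Sequence[float], validators: List[Validator]) -> bool:
    """Accept the update only if a strict majority of validators approve it."""
    approvals = sum(1 for v in validators if v(delta))
    return 2 * approvals > len(validators)  # integer test avoids float rounding
```

With validators bounded at 1.0, 1.0 and 0.1, an update of norm 0.5 gets two of three votes and is accepted, while an update of norm 5.0 gets none and is rejected.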

Fig 1. Interaction between various clients and the server

Empowering Global Model Evolution: With consensus firmly established, the approved model updates seamlessly integrate into the global model, etching their presence onto the immutable ledger of the blockchain. This global model, a repository of collective intelligence, becomes a beacon for participants, offering them access to the distilled insights and refinements contributed by the entire network. It stands as a testament to the power of collaboration, where the whole is truly greater than the sum of its decentralized parts.
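The ledger's role in this step can be illustrated with a minimal hash-linked chain. Each block records the fingerprints of the approved updates and of the resulting global model, and links to the previous block by hash, so altering an earlier entry breaks verification. This is a single-process sketch: it omits networking and consensus, and it stores digests rather than full model weights, a design choice assumed here rather than described in the article. `Block` and `ModelLedger` are hypothetical names.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class Block:
    index: int
    prev_hash: str             # hash of the previous block, linking the chain
    update_digests: List[str]  # fingerprints of the approved updates this round
    model_digest: str          # fingerprint of the resulting global model

    def compute_hash(self) -> str:
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()


class ModelLedger:
    """Append-only, hash-linked record of global-model updates (no consensus)."""

    def __init__(self) -> None:
        self.chain: List[Block] = [Block(0, "0" * 64, [], "genesis")]

    def append(self, update_digests: List[str], model_digest: str) -> Block:
        block = Block(len(self.chain), self.chain[-1].compute_hash(),
                      list(update_digests), model_digest)
        self.chain.append(block)
        return block

    def verify(self) -> bool:
        """Return False if any block was altered after a later block linked to it."""
        return all(self.chain[i].prev_hash == self.chain[i - 1].compute_hash()
                   for i in range(1, len(self.chain)))
```

After two rounds are appended, `verify()` returns `True`; editing the first round's `model_digest` afterwards makes it return `False`, because the second block still points at the old hash.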

In the symphony of Federated Learning on the blockchain, each note plays a crucial role, weaving a narrative of privacy, collaboration, and technological innovation. The smart contracts orchestrate the rules, local data fuels the training grounds, model updates become the artistic expression, consensus forging is the meticulous choreography, and the global model stands as a testament to the collective brilliance that emerges when privacy and collaboration coalesce.

Tools for Federated Learning: PyTorch vs. TensorFlow Federated

Federated Learning is more than a notion; it is a dynamic interaction between technology and privacy that shapes the very essence of collaborative intelligence, and adopting it well depends on choosing the right tools. PyTorch and TensorFlow Federated (TFF) are two popular options, each with its own strengths. Choosing between them is like selecting the ideal instrument for an orchestra: the choice must suit the particular symphony of privacy-preserving Federated Learning being performed.

PyTorch: The Master of Dynamic Computational Graphs

PyTorch builds its computation graph dynamically, as the code runs, which brings flexibility and adaptability to Federated Learning. Because the graph is created on the fly, the model and its training loop can be modified and inspected with ordinary Python control flow during training. This flexibility is useful when working with heterogeneous, evolving datasets sourced from many decentralized contributors, since client-side training logic can be adapted to each contributor's data. In the orchestra of Federated Learning, PyTorch can be envisioned as a conductor who can improvise and alter the musical score while the performance is underway, letting the model evolve in response to the nuances and variations in diverse, decentralized data sources and better capture the changing patterns in data contributed by different nodes or devices. However, PyTorch is a general-purpose deep learning library with no built-in federated runtime, so the federated parts of a system, such as client coordination, communication, and aggregation, must be written by hand or supplied by a separate library. This can make the learning curve somewhat steeper for federated projects, and developers may need additional experience to fully harness its potential. Once developers are comfortable with it, PyTorch can prove an exceptionally effective tool for Federated Learning: its ability to adapt to the dynamic nature of decentralized datasets makes it a valuable asset in the pursuit of collaborative intelligence while preserving privacy across diverse sources.

Tailoring TensorFlow Federated (TFF) for Federated Learning

TensorFlow Federated (TFF) stands in contrast to PyTorch with its specialized focus on federated learning workflows. Unlike PyTorch's general-purpose approach, TFF is purpose-built to handle the intricacies of decentralized data, positioning it as a strong choice for privacy-preserving machine learning. At the heart of TFF's design is its commitment to federated settings. Its Federated Core provides dedicated abstractions, notably federated types, which describe where values are placed (for example, on the clients or on the server), and federated computations, which express how values move between and are aggregated across those placements. These serve as the foundational building blocks for orchestrating collaborative intelligence across distributed [7] data sources. In this analogy, TFF is a conductor with profound expertise in orchestrating complex symphonies, Federated Learning being a prime example. While TFF's specialization is a notable strength, it comes with a caveat: the framework is less suited to tasks beyond the federated domain, because its design and functionality are tailored to decentralized data and the unique challenges of federated workflows. For those seeking a tool with a broader scope across machine learning tasks, this specialization can be a limitation.

In the grand theatre of privacy-preserving machine learning, PyTorch and TFF emerge as distinctive performers. PyTorch, the dynamic conductor, thrives on adaptability and real-time adjustments, making it well suited to the evolving landscape of federated datasets. TFF, the specialized maestro, excels at orchestrating the intricate symphony of Federated Learning but may not take center stage in more general machine learning tasks. The choice between them depends on the specific requirements of the performance: whether the nuances of federated workflows or the versatility of general-purpose machine learning matter more will determine the ideal conductor for the symphony of collaborative intelligence.
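TFF's Federated Core attaches a placement to every federated value: in TFF's type notation, `{float32}@CLIENTS` denotes a group of floats, one held by each client, and `float32@SERVER` a single float held by the server, while operators such as `tff.federated_broadcast` and `tff.federated_mean` move values between those placements. Real TFF code defines such logic inside functions decorated with `tff.federated_computation`. So that the idea can be run without installing TFF, the sketch below imitates the two placements and operators in plain Python; `AtServer`, `AtClients`, and the two functions are hypothetical stand-ins named after TFF's concepts, not TFF's API, and they ignore details such as TFF typing a broadcast result as all-equal.

```python
from dataclasses import dataclass
from typing import Generic, List, TypeVar

T = TypeVar("T")


@dataclass(frozen=True)
class AtServer(Generic[T]):
    value: T         # a single value held by the server, like float32@SERVER


@dataclass(frozen=True)
class AtClients(Generic[T]):
    values: List[T]  # one value per client, like {float32}@CLIENTS


def federated_broadcast(x: AtServer[T], num_clients: int) -> AtClients[T]:
    """Send the server's value to every client (analogue of a broadcast)."""
    return AtClients([x.value] * num_clients)


def federated_mean(x: AtClients[float]) -> AtServer[float]:
    """Average the clients' values into one server value (analogue of a mean)."""
    return AtServer(sum(x.values) / len(x.values))
```

The types make the data flow explicit: `federated_mean` is the only way a group of per-client values becomes a single server value, mirroring how TFF computations state where each value lives.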

How to Choose the Right Tool?

The choice between PyTorch and TensorFlow Federated (TFF) mirrors the nuanced decision of selecting the right instrument for a particular musical composition. PyTorch, akin to a versatile instrument, can play a diverse array of tunes and transition seamlessly between different pieces, while TFF is an expert instrument, meticulously tuned for one genre: privacy-preserving Federated Learning. In navigating the Federated Learning orchestra, it is paramount to consider the nature of your project and your team's proficiency with each tool.

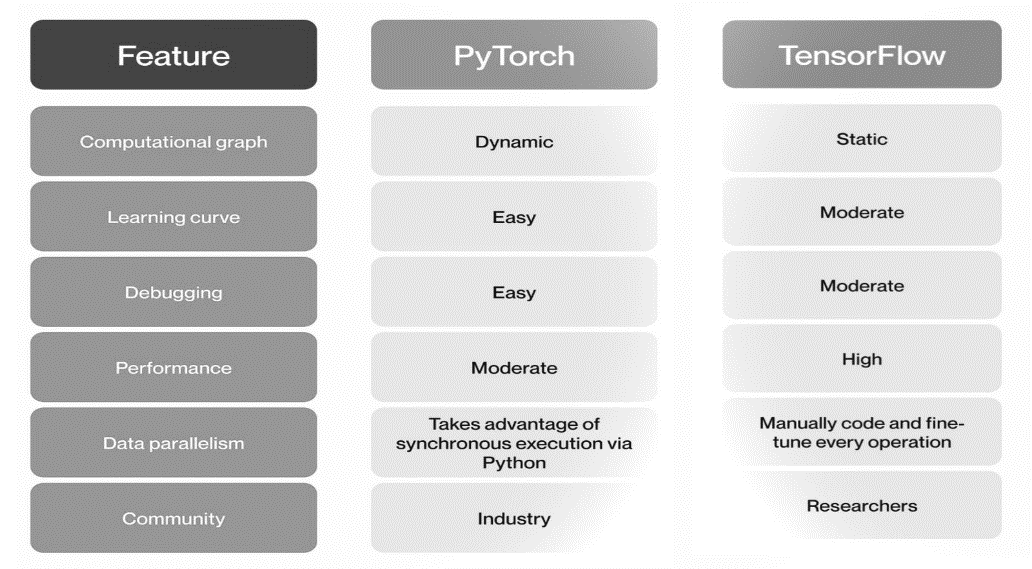

Fig 2. PyTorch VS TensorFlow

If your project requires versatility and your team is well-versed in PyTorch, it may be the fitting choice. On the other hand, if your symphony is exclusively focused on privacy-preserving Federated Learning, TFF is the instrument finely crafted for this specialized genre, offering an experience tailored to the unique demands of federated workflows, as shown in Fig 2. The decision ultimately hinges on your project's objectives and your team's expertise. Whether you opt for the dynamic adaptability of PyTorch or the specialized finesse of TFF, both tools play essential roles in privacy-preserving machine learning. It is not a question of one tool being superior to the other; it is about aligning the right tool with the precise needs of your project, so that the selected instrument contributes to collaborative intelligence while preserving the privacy of decentralized data sources.

Digging into the Benefits

In the landscape of privacy-preserving machine learning, the fusion of decentralized learning, exemplified by Federated Learning, and blockchain technology creates a robust framework that addresses various challenges while fostering trust, transparency, and security [8-10]. Here are key aspects of the synergy between decentralized learning and blockchain:

- Privacy Protection – Localized Raw Data: Decentralized learning, particularly Federated Learning, champions data privacy by keeping raw data localized. The model updates shared across the network contain no raw records, which reduces the risk of exposing individual-level data; however, updates can still leak some information about the training data, so they are often further protected with techniques such as secure aggregation or added noise.

- Trust and Transparency – Immutable Ledger: The blockchain's immutable ledger ensures transparency and instills confidence in the model update process. Each model update recorded on the blockchain can be independently verified by every participant, promoting a trustworthy and transparent collaborative environment.

- Security – Data Integrity: Blockchain's security measures, including cryptographic hashing and consensus mechanisms, make recorded model updates tamper-evident and protect the network against unauthorized modification. This safeguards the integrity of critical information throughout the decentralized learning process.

- Decentralization – Reduced Single Point of Failure: Standard Federated Learning relies on a central aggregation server; distributing coordination across a blockchain network removes this single point of failure. This decentralization enhances the resilience of the network, making it more robust against potential attacks or disruptions.

- Incentive Mechanisms – Token Rewards and Beyond: Blockchain makes it possible to create incentive mechanisms, such as cryptocurrency or token rewards, for individuals who contribute valuable model updates or computational resources. This encourages active participation and collaboration within the decentralized learning ecosystem, aligning the interests of participants with the overall goals of the network.

The convergence of decentralized learning and blockchain not only addresses privacy concerns but also establishes a foundation for a trustworthy, secure, and incentivized collaborative environment. This synergy empowers participants in the network, enhances the overall integrity of the learning process, and fosters a community-driven approach to privacy-preserving machine learning.
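Shared model updates contain no raw records, but they can still reveal information about the data they were trained on. One common mitigation, used in differentially private federated training, is to clip each update to a maximum L2 norm and add Gaussian noise before it leaves the device. The sketch below assumes that technique; `privatize_update`, `clip_norm`, and `noise_std` are hypothetical names, and calibrating the noise to a formal privacy guarantee is not shown.

```python
import math
import random
from typing import List, Optional, Sequence


def privatize_update(delta: Sequence[float], clip_norm: float, noise_std: float,
                     rng: Optional[random.Random] = None) -> List[float]:
    """Clip an update to at most clip_norm (L2) and add Gaussian noise to each entry."""
    if rng is None:
        rng = random.Random()
    norm = math.sqrt(sum(d * d for d in delta))
    # Scale down only when the update is too large; small updates pass unchanged.
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [d * scale + rng.gauss(0.0, noise_std) for d in delta]
```

Clipping bounds how much any single client can move the global model, and the noise masks the exact contribution; with `noise_std=0.0`, the update `[3.0, 4.0]` is simply scaled to unit length, `[0.6, 0.8]`.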

Challenges

The convergence of blockchain and machine learning introduces several challenges that need careful consideration for the successful development and deployment of scalable, interoperable, and well-governed systems:

- Scalability – Performance Maintenance: Scaling a network while maintaining performance is a formidable challenge. As the network grows, ensuring that computational and processing resources can handle the increasing volume of transactions, model updates, and data becomes imperative. Solutions may involve optimizing consensus mechanisms, improving network infrastructure, or exploring off-chain scaling solutions to alleviate the scalability concerns.

- Interoperability – Integration Complexity: Integrating various blockchain systems with diverse machine learning frameworks poses a significant challenge. Ensuring seamless communication and data exchange between different platforms requires standardized interfaces and protocols. Developing common standards for interoperability is crucial to foster collaboration between disparate systems and frameworks within the blockchain and machine learning ecosystem.

- Governance – Decision-Making Structures: Establishing effective governance models is paramount for making decisions regarding network rules, updates, and other critical aspects. Decentralized networks often require consensus on governance to avoid conflicts and ensure the smooth evolution of the system. Striking a balance between decentralization and the need for coordinated decision-making is a challenge, as it involves defining clear governance structures and mechanisms for all participants in the network.

Addressing these challenges involves a collaborative effort across the blockchain and machine learning communities. Some potential strategies include:

- Research and Development: Ongoing research and development efforts are essential to address scalability concerns. Innovations in consensus algorithms, sharding, and off-chain solutions can contribute to improved scalability.

- Standardization Efforts: Collaboration between the blockchain and machine learning communities to establish standards for interoperability can simplify integration challenges. Standardizing interfaces and communication protocols ensures smoother interactions between different systems.

- Community Engagement: Establishing inclusive governance models that actively involve participants in decision-making processes is crucial. Community-driven approaches foster transparency and build trust, ensuring that governance decisions align with the diverse interests of network participants.

In navigating these challenges, a holistic and collaborative approach is necessary. As the intersection of blockchain and machine learning continues to evolve, addressing scalability, interoperability, and governance concerns will be pivotal for creating robust and sustainable ecosystems that harness the synergies of these transformative technologies.

Conclusion

Federated Learning serves as a beacon of innovation and privacy preservation in the digital sphere. Combined with blockchain technology and implemented with tools such as PyTorch and TensorFlow Federated (TFF), it illustrates a collaborative and responsible approach to machine learning and lays the groundwork for a reliable, robust ecosystem for privacy-preserving machine learning. However, scalability, interoperability, and governance remain significant challenges. Continued research, standardization efforts, and community engagement can help resolve these issues, paving the way for a future in which privacy and technological growth coexist. By adopting Federated Learning and harnessing the transformative potential of blockchain technology, we can build a more ethical, fair, and privacy-centric digital future.

References

- Sharma, A., Singh, S. K., Chhabra, A., Kumar, S., Arya, V., & Moslehpour, M. (2023). A Novel Deep Federated Learning-Based Model to Enhance Privacy in Critical Infrastructure Systems. International Journal of Software Science and Computational Intelligence (IJSSCI), 15(1), 1-23.

- Mengi, G., Singh, S. K., Kumar, S., Mahto, D., & Sharma, A. (2021, September). Automated Machine Learning (AutoML): The Future of Computational Intelligence. In International Conference on Cyber Security, Privacy and Networking (pp. 309-317). Cham: Springer International Publishing.

- Peñalvo, F. J. G., Sharma, A., Chhabra, A., Singh, S. K., Kumar, S., Arya, V., & Gaurav, A. (2022). Mobile cloud computing and sustainable development: Opportunities, challenges, and future directions. International Journal of Cloud Applications and Computing (IJCAC), 12(1), 1-20.

- Saini, T., Kumar, S., Vats, T., & Singh, M. (2020). Edge Computing in Cloud Computing Environment: Opportunities and Challenges. In International Conference on Smart Systems and Advanced Computing (Syscom-2021).

- Gupta, A., Sharma, A., Singh, S. K., & Kumar, S. Cloud Computing & Fog Computing: A solution for High Performance Computing. Proceedings of the 11th INDIACom. IEEE.

- Signla, D., & Kr, S. (2021). Blockchain for Data Science.

- Singh, M., & Kumar, S. (2020). Distributed ledger technology.

- Dubey, H., Kumar, S., & Chhabra, A. (2022). Cyber Security Model to Secure Data Transmission using Cloud Cryptography. Cyber Secur. Insights Mag, 2, 9-12.

- Sharma, A., Singh, S. K., Kumar, S., Chhabra, A., & Gupta, S. (2021, September). Security of Android Banking Mobile Apps: Challenges and Opportunities. In International Conference on Cyber Security, Privacy and Networking (pp. 406-416). Cham: Springer International Publishing.

- Singh, M., Singh, S. K., Kumar, S., Madan, U., & Maan, T. (2021, September). Sustainable Framework for Metaverse Security and Privacy: Opportunities and Challenges. In International Conference on Cyber Security, Privacy and Networking (pp. 329-340). Cham: Springer International Publishing.

- Malik, M., Prabha, C., Soni, P., Arya, V., Alhalabi, W. A., Gupta, B. B., … & Almomani, A. (2023). Machine Learning-Based Automatic Litter Detection and Classification Using Neural Networks in Smart Cities. International Journal on Semantic Web and Information Systems (IJSWIS), 19(1), 1-20.

- Verma, V., Benjwal, A., Chhabra, A., Singh, S. K., Kumar, S., Gupta, B. B., … & Chui, K. T. (2023). A novel hybrid model integrating MFCC and acoustic parameters for voice disorder detection. Scientific Reports, 13(1), 22719.

- Chui, K. T., Gupta, B. B., Liu, J., Arya, V., Nedjah, N., Almomani, A., & Chaurasia, P. (2023). A survey of internet of things and cyber-physical systems: standards, algorithms, applications, security, challenges, and future directions. Information, 14(7), 388.

- Sharma, P. C., Mahmood, M. R., Raja, H., Yadav, N. S., Gupta, B. B., & Arya, V. (2023). Secure authentication and privacy-preserving blockchain for industrial internet of things. Computers and Electrical Engineering, 108, 108703.

Cite As

Gupta C., Sharma A. (2024) PRESERVING PRIVACY THROUGH THE ERA OF FEDERATED LEARNING, Insights2Techinfo, pp.1