By: Mavneet Kaur

Self-driving cars, also known as autonomous cars, can sense their surroundings and navigate safely even without a human driver. This technology is a potential game-changer in many situations. Leading AI firms have already produced several self-driving vehicles. However, because a completely driverless vehicle has yet to be made, the degree of autonomy these cars actually achieve is still a point of debate. Let’s look at the levels of self-driving capability at which these cars can be configured.

Figure 1: Levels of self-driving classification

The autonomy of a vehicle can be characterized on six levels, ranging from 0 to 5, as shown in Figure 1.

No Automation (Level 0): At this level, the vehicle is operated manually by a human driver, as most cars are today.

Driver Assistance (Level 1): This is the most common kind of automation. The vehicle has a single automated system that assists the driver with a task such as steering or acceleration; Adaptive Cruise Control, which keeps the car at a safe distance from the vehicle ahead, is a typical example. It is considered Level 1 because the human driver still handles or supervises every other aspect of driving.

Partial Automation (Level 2): At this level, the vehicle controls both acceleration and steering, although a human driver must still monitor the road. Tesla Autopilot and Cadillac Super Cruise are Level 2 technologies.

Conditional Automation (Level 3): Level 3 cars have environmental sensing and decision-making capabilities, such as the ability to pass a slow-moving vehicle. However, they still need a human driver who is ready to take over. Audi introduced the A8 as the world’s first mass-produced Level 3 car.

High Automation (Level 4): Level 4 cars can intervene on their own if something goes wrong or the system fails, so in most scenarios they do not require any human interaction. A human may, however, still override the system manually. Level 4 vehicles typically operate only within a restricted area (a geofence). Companies such as NAVYA, Waymo, and Magna are already deploying all-electric Level 4 vehicles.

Full Automation (Level 5): Human assistance is not necessary at this level, and these cars may not even have a steering wheel. Free of geofencing, they can go anywhere and do anything an experienced human driver can. Fully autonomous vehicles are being tested in many places around the world, but none are yet available to the general public.
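The six levels above can be sketched as a small data type. This is only a rough, hypothetical encoding of this article’s descriptions (the names `AutomationLevel`, `human_supervises_continuously`, and `human_fallback_required` are illustrative); standards such as SAE J3016 define the boundaries in much more detail.

```python
from enum import IntEnum


class AutomationLevel(IntEnum):
    """Levels 0-5 as described in this article (names are illustrative)."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def human_supervises_continuously(level: AutomationLevel) -> bool:
    # Levels 0-2: a person drives or must watch the road at all times.
    return level <= AutomationLevel.PARTIAL_AUTOMATION


def human_fallback_required(level: AutomationLevel) -> bool:
    # Level 3 still needs a person ready to take over;
    # Levels 4-5 handle failures themselves (a human may still override at Level 4).
    return level <= AutomationLevel.CONDITIONAL_AUTOMATION


for level in AutomationLevel:
    print(level.value, level.name,
          human_supervises_continuously(level), human_fallback_required(level))
```

Using `IntEnum` keeps the levels ordered, so "Level 3 or below" is a simple comparison.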

So, what is causing the delay in making a fully automated car?

Anything beyond Level 2 is still a few years away from mass production because of security, not because of a lack of technical capability. Vehicles have many physical safety features, such as seatbelts, airbags, and antilock brakes, but they have far fewer digital security safeguards. Connected vehicles are not yet ready to meet the requirements for operating safely in an online environment. The main issue with self-driving cars is their safety. How can we verify that these computers understand the nuances of driving and that they will be able to deal with the endless variety of previously unseen events they will undoubtedly encounter? To grasp the security issue, consider the technology used to build autonomous cars and how it contributes to security concerns.

In a self-driving car, a deep learning system interprets data from the vehicle’s array of sensors and contributes to its high-level control. Deep learning enables a computer to find patterns in enormous amounts of data and then use those patterns to make decisions. One component of this system classifies the objects around the car, distinguishing pedestrians, bicycles, traffic signs, and other objects, which allows the car to travel safely and obey the rules of the road.
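To illustrate only the final classification step, here is a toy linear softmax classifier. It is a hypothetical sketch: real perception stacks run deep convolutional networks on raw sensor data, whereas this assumes a made-up three-number feature vector and hand-picked `WEIGHTS`.

```python
import math

CLASSES = ["pedestrian", "bicycle", "stop_sign"]

# Hypothetical weights: one row per class, one column per input feature.
WEIGHTS = [
    [2.0, -1.0, 0.0],   # pedestrian
    [-1.0, 2.0, 0.0],   # bicycle
    [0.0, -1.0, 2.0],   # stop_sign
]


def softmax(scores):
    """Turn raw class scores into probabilities (shifted by the max for numerical stability)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(features):
    """Return (label, probability) for a hypothetical 3-value feature vector."""
    scores = [sum(w * f for w, f in zip(row, features)) for row in WEIGHTS]
    probs = softmax(scores)
    best = max(range(len(CLASSES)), key=lambda i: probs[i])
    return CLASSES[best], probs[best]


print(classify([0.1, 0.0, 0.9]))  # a feature vector that "looks like" a stop sign
```

The classifier’s decision depends entirely on weighted sums of its inputs, which is exactly what adversarial noise exploits.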

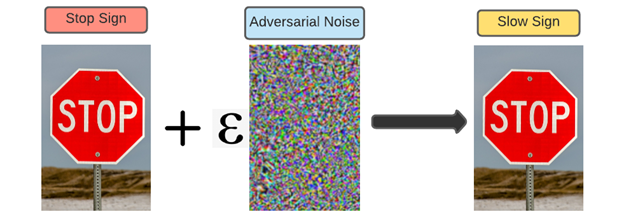

Figure 2: Adversarial noise added to the input to generate an adversarial input

Unfortunately, research has revealed that deep learning image classification systems are highly vulnerable to well-crafted adversarial attacks [8]. By adding carefully computed noise to a picture, an attacker can force a deep learning system to severely misinterpret it. According to the “Safety First” industry consortium report [1], adversarial attacks [2] on autonomous vehicles (AVs) can pose significant security risks. In this form of attack, shown in Figure 2, the attacker introduces tiny but consistent changes to crucial parts of the model’s processing, such as its input or filters. For example, adding such noise can cause a stop sign to be mistaken for a slow-down sign, creating a safety hazard. Although this layer of noise is barely visible to the human eye, it can cause significant misinterpretation of crucial scene components such as road signs and traffic lights, which could lead the car to collide with other vehicles or pedestrians. The most common physical adversarial attacks on a vehicle’s perception system are stickers or paint applied to traffic signs. Adversarial attacks can be categorized into two types.

White box: In a white-box attack, the attacker has access to the model’s internals, such as its training data, architecture, and parameter values, and tailors the perturbation to that particular deep neural network.

Black box: In a black-box attack, the adversary knows next to nothing about the network’s internals. While white-box attacks have been widely investigated, they may be impractical against real systems owing to the presence of numerous active variables, the most notable of which is sensor data. According to our review of the state of the art, there is relatively little research on black-box adversarial attacks.
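The difference between the two settings can be sketched on a toy model. The white-box function below implements the Fast Gradient Sign Method (FGSM), a standard attack that is not described in this article, using the model’s weights directly. The black-box function assumes the attacker can only query output probabilities and estimates the same gradient by finite differences, in the spirit of zeroth-order attacks such as ZOO. The 64-pixel input, the weights `W`, and the budget `eps` are all hypothetical.

```python
import math

N = 64  # flattened toy "image" of 64 pixels in [0, 1] (assumption)
# Hypothetical logistic-regression sign classifier: P(stop sign) = sigmoid(W . x).
W = [0.05 if i % 2 == 0 else -0.05 for i in range(N)]


def sign(v):
    return (v > 0) - (v < 0)


def p_stop(x):
    z = sum(w * xi for w, xi in zip(W, x))
    return 1.0 / (1.0 + math.exp(-z))


def clip01(x):
    return [min(1.0, max(0.0, xi)) for xi in x]


def fgsm_white_box(x, eps, y_true=1):
    """White box: uses the weights directly.
    For binary cross-entropy, d(loss)/dx = (p - y) * w."""
    p = p_stop(x)
    grad = [(p - y_true) * w for w in W]
    # Move each pixel by eps in the direction that increases the loss.
    return clip01([xi + eps * sign(g) for xi, g in zip(x, grad)])


def estimate_gradient(query, x, h=1e-3):
    """Black box: symmetric finite differences, two queries per pixel."""
    grad = []
    for i in range(len(x)):
        up, down = list(x), list(x)
        up[i] += h
        down[i] -= h
        grad.append((query(up) - query(down)) / (2 * h))
    return grad


def signed_step_black_box(query, x, eps):
    """Lower P(stop sign) using only the probabilities returned by `query`."""
    grad = estimate_gradient(query, x)
    return clip01([xi - eps * sign(g) for xi, g in zip(x, grad)])


x_clean = [0.53 if i % 2 == 0 else 0.47 for i in range(N)]
x_white = fgsm_white_box(x_clean, eps=0.05)
x_black = signed_step_black_box(p_stop, x_clean, eps=0.05)  # p_stop used as an opaque API

print(round(p_stop(x_clean), 3), round(p_stop(x_white), 3), round(p_stop(x_black), 3))
```

With these made-up numbers, both attacks change each pixel by at most 0.05, yet P(stop sign) drops from about 0.524 to about 0.484, flipping the decision. The black-box version needs 128 queries for a 64-pixel input, which hints at why black-box attacks on full-resolution camera images are more expensive.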

Is it, however, always possible to tamper with the model itself? In most situations, safety is jeopardized by external forces. Changing the physical environment, rather than the vehicle’s perception of it, is a far more viable method, as in Figure 3. A deep learning system can be fooled into misclassifying road signs that have been physically altered, even though the signs remain fully legible to people. Physical attacks, however, face new obstacles of their own: because an object can be seen from so many different perspectives, any physical change must still work across various viewing angles and distances.
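One published way to handle the viewing-angle and distance problem is Expectation over Transformation (EOT), proposed by Athalye et al.; it is not described in this article. Instead of attacking a single view, the attacker averages the loss gradient over a set of transformations and keeps stepping until the perturbation fools every one. The sketch below assumes a hypothetical linear model (`W`, `B`) and uses simple brightness scaling factors (`SCALES`) as a crude stand-in for changes in distance and lighting.

```python
import math

N = 64
W = [0.05 if i % 2 == 0 else -0.05 for i in range(N)]  # hypothetical toy model
B = 0.05
SCALES = [0.5, 1.0, 1.5]  # hypothetical stand-ins for viewing distance / lighting


def p_stop(x):
    z = sum(w * xi for w, xi in zip(W, x)) + B
    return 1.0 / (1.0 + math.exp(-z))


def transform(x, a):
    return [a * xi for xi in x]


def eot_gradient(x, y_true=1):
    """Average the loss gradient over all transformations."""
    avg = [0.0] * len(x)
    for a in SCALES:
        p = p_stop(transform(x, a))
        # d/dx of BCE(p_stop(a * x), y) = (p - y) * a * w
        for i, w in enumerate(W):
            avg[i] += (p - y_true) * a * w / len(SCALES)
    return avg


def fooled_everywhere(x):
    return all(p_stop(transform(x, a)) < 0.5 for a in SCALES)


def eot_attack(x, step=0.01, eps=0.1, max_iter=50):
    x_adv = list(x)
    for _ in range(max_iter):
        if fooled_everywhere(x_adv):
            break
        g = eot_gradient(x_adv)
        x_adv = [xi + step * ((gi > 0) - (gi < 0)) for xi, gi in zip(x_adv, g)]
        # Project back into the eps-ball around x and the valid pixel range [0, 1].
        x_adv = [min(1.0, max(0.0, min(x0 + eps, max(x0 - eps, xi))))
                 for x0, xi in zip(x, x_adv)]
    return x_adv


x_clean = [0.53 if i % 2 == 0 else 0.47 for i in range(N)]
x_eot = eot_attack(x_clean)
# For comparison: a one-view perturbation tuned only for scale 1.0.
x_single = [xi - 0.05 if i % 2 == 0 else xi + 0.05 for i, xi in enumerate(x_clean)]
```

Here the single-view perturbation of size 0.05 fools the model at scale 1.0 but not at 0.5, while the EOT loop keeps going until all three scales are fooled, using about 0.07 per pixel of its 0.1 budget.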

Removing perturbations is one way to avoid adversarial attacks, but it comes at a high cost. A more logical strategy is to build machine learning systems that resist these attacks, primarily by teaching them to detect adversarial samples accurately. One limitation of adversarial attacks is that they frequently require white-box access, meaning access to the model’s internal workings, such as its parameters and architecture. Fortunately, autonomous car makers heavily safeguard these elements, making it extremely difficult for attackers to obtain them in order to design their attacks. In addition, several techniques can make attacks less severe or models more robust, such as adversarial training [3], gradient masking [4], input gradient regularization [5], defensive distillation [6], and feature squeezing [7], although some of these, such as defensive distillation, have later been shown to be circumventable [6].
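Feature squeezing [7] detects adversarial inputs by comparing the model’s prediction on an input with its prediction on a “squeezed” copy, for example one with reduced color bit depth; a large disagreement suggests the input was perturbed. The sketch below is a toy version under stated assumptions: a hypothetical linear model, a single 1-bit squeezer, and a hand-picked detection threshold of 0.25, whereas the paper combines several squeezers and selects the threshold on legitimate examples.

```python
import math

N = 64
W = [0.05 if i % 2 == 0 else -0.05 for i in range(N)]  # hypothetical toy model
B = -1.08


def predict_proba(x):
    """Return [P(stop sign), P(slow-down sign)]."""
    z = sum(w * xi for w, xi in zip(W, x)) + B
    p = 1.0 / (1.0 + math.exp(-z))
    return [p, 1.0 - p]


def reduce_bit_depth(x, bits=1):
    """Squeezer: quantize each pixel in [0, 1] to 2**bits levels."""
    levels = 2 ** bits - 1
    return [round(xi * levels) / levels for xi in x]


def squeeze_score(x, bits=1):
    """L1 distance between predictions on the original and the squeezed input."""
    p_orig = predict_proba(x)
    p_sq = predict_proba(reduce_bit_depth(x, bits))
    return sum(abs(a - b) for a, b in zip(p_orig, p_sq))


def is_adversarial(x, threshold=0.25):  # threshold is hypothetical
    return squeeze_score(x) > threshold


x_clean = [0.9 if i % 2 == 0 else 0.1 for i in range(N)]
# FGSM-style perturbation of size 0.1 against the "stop sign" class.
x_adv = [xi - 0.1 if i % 2 == 0 else xi + 0.1 for i, xi in enumerate(x_clean)]

print(is_adversarial(x_clean), is_adversarial(x_adv))
```

For these numbers, the clean image scores about 0.15 and the perturbed one about 0.31, so only the perturbed image is flagged. A 1-bit squeezer suits this toy because its pixels sit near 0 or 1; on real images, an overly aggressive squeezer would also flag many legitimate inputs.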

Future of self-driving automobiles and other challenges

Fully self-driving cars still appear to be a long way from reality because of security issues. While roads are typically orderly and well-mapped places, what happens on them is anything but predictable. Humans are capable drivers, but they are also clumsy and erratic at times. So, until all vehicles on the road are fully autonomous, every autonomous vehicle will have to respond to edge cases as well as the myriad idiosyncrasies and tics that human drivers display every day. Human drivers deal with these quirks without missing a beat, but getting computers to handle them is a significant challenge. Even after completely autonomous cars that need no human supervision are developed, the transition from a few such vehicles on the road to 100 percent autonomous traffic will likely take a decade.

References:

- [1] M. Wood, P. Robbel, D. Wittmann et al., “Safety first for automated driving,” 2019.

- [2] C. Szegedy, W. Zaremba, I. Sutskever, J. Bruna, D. Erhan, I. Goodfellow, and R. Fergus, “Intriguing properties of neural networks,” in International Conference on Learning Representations (ICLR), 2014.

- [3] F. Tramer and D. Boneh, “Adversarial training and robustness for multiple perturbations,” arXiv preprint arXiv:1904.13000, 2019.

- [4] I. Goodfellow, “Gradient masking causes CLEVER to overestimate adversarial perturbation size,” arXiv preprint arXiv:1804.07870, 2018.

- [5] C. Finlay and A. M. Oberman, “Scaleable input gradient regularization for adversarial robustness,” arXiv preprint arXiv:1905.11468, 2019.

- [6] N. Carlini and D. Wagner, “Defensive distillation is not robust to adversarial examples,” arXiv preprint arXiv:1607.04311, 2016.

- [7] W. Xu, D. Evans, and Y. Qi, “Feature squeezing: Detecting adversarial examples in deep neural networks,” arXiv preprint arXiv:1704.01155, 2017.

- [8] T. Holstein, G. Dodig-Crnkovic, and P. Pelliccione, “Ethical and social aspects of self-driving cars,” arXiv preprint arXiv:1802.04103, 2018.

Cite this article

Mavneet Kaur (2021), Self-Driving Cars and Adversarial Attacks, Insights2Techinfo, pp.1